Metrics-Server

Starting with Kubernetes 1.8, resource metrics such as CPU and memory usage are exposed through the Metrics API, and users can query them directly.

Environment

192.168.48.101 master01

192.168.48.201 node01

192.168.48.202 node02

Metrics API

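- The Metrics API returns the current resource usage of a given node or pod. The API itself stores nothing, so historical usage cannot be retrieved through it.

- The Metrics API is served under the path /apis/metrics.k8s.io/

- The Metrics API is only available once metrics-server has been deployed successfully in the Kubernetes cluster.

- For more information on metrics, see: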

<https://github.com/kubernetes/metrics>
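The request paths under /apis/metrics.k8s.io/ can be sketched as follows. This is a minimal illustration, assuming the v1beta1 version of the API (the same version that appears in the APIService name v1beta1.metrics.k8s.io in the deployment output). The helper names `node_metrics_path` and `pod_metrics_path` are hypothetical; they only build path strings and do not contact a cluster.

```python
"""Build request paths for the Metrics API (illustrative helpers only)."""
from typing import Optional

# Assumption: the v1beta1 API version, matching the APIService
# v1beta1.metrics.k8s.io that metrics-server registers.
METRICS_API_ROOT = "/apis/metrics.k8s.io/v1beta1"


def node_metrics_path(node: Optional[str] = None) -> str:
    """Return the path for all nodes, or for one node if a name is given."""
    base = f"{METRICS_API_ROOT}/nodes"
    return f"{base}/{node}" if node else base


def pod_metrics_path(namespace: str, pod: Optional[str] = None) -> str:
    """Return the path for all pods in a namespace, or for a single pod."""
    base = f"{METRICS_API_ROOT}/namespaces/{namespace}/pods"
    return f"{base}/{pod}" if pod else base


print(node_metrics_path())
print(node_metrics_path("node01"))
print(pod_metrics_path("kube-system"))
```

Once metrics-server is running, such a path can be passed to the API server, for example with `kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes`, which returns the current node metrics as JSON.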

Metrics Install

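Heapster has been deprecated upstream, and metrics-server is its replacement. At the time of writing, the latest version is v0.3.3.

Official project repository

https://github.com/kubernetes-incubator/metrics-server

Clone the project

git clone https://github.com/kubernetes-incubator/metrics-server

The YAML manifests are in /root/metrics-server/deploy/1.8+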

[root@master01 ~]# cd metrics-server/deploy/1.8+/

[root@master01 1.8+]# ls

aggregated-metrics-reader.yaml auth-delegator.yaml auth-reader.yaml metrics-apiservice.yaml metrics-server-deployment.yaml metrics-server-service.yaml resource-reader.yaml

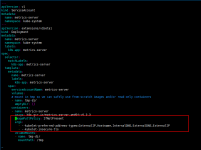

Edit the deployment manifest and add the args shown below

vim metrics-server-deployment.yaml

- name: metrics-server
  image: k8s.gcr.io/metrics-server-amd64:v0.3.3
  imagePullPolicy: IfNotPresent
  args:
  - --kubelet-preferred-address-types=InternalIP,Hostname,InternalDNS,ExternalDNS,ExternalIP
  - --kubelet-insecure-tls
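The --kubelet-preferred-address-types flag lists node address types in priority order. The sketch below illustrates that kind of selection, assuming that the first listed type present on the node wins. It is not metrics-server's actual code; `pick_kubelet_address` and the sample addresses are hypothetical. The second flag, --kubelet-insecure-tls, skips verification of the kubelet serving certificate, which is convenient in a test cluster but should be used with care elsewhere.

```python
"""Illustrate priority-ordered selection of a node address (a sketch only)."""
from typing import Dict, List, Optional, Sequence

# Same order as the flag value used in the manifest above.
PREFERRED_TYPES = ("InternalIP", "Hostname", "InternalDNS", "ExternalDNS", "ExternalIP")


def pick_kubelet_address(
    addresses: List[Dict[str, str]],
    preferred: Sequence[str] = PREFERRED_TYPES,
) -> Optional[str]:
    """Return the address of the first preferred type the node reports."""
    for wanted in preferred:
        for entry in addresses:
            if entry.get("type") == wanted:
                return entry.get("address")
    return None  # the node reports none of the preferred types


# Hypothetical data shaped like the .status.addresses list of a Node object.
sample_addresses = [
    {"type": "Hostname", "address": "node01"},
    {"type": "InternalIP", "address": "192.168.48.201"},
]

print(pick_kubelet_address(sample_addresses))
```

With InternalIP listed first, the IP address is chosen even though the node also reports a hostname, which helps when node hostnames are not resolvable from inside the cluster.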

Metrics image

k8s.gcr.io/metrics-server-amd64:v0.3.3

Image download

Link: https://pan.baidu.com/s/12d-2XTzw1gwLR52erGQ9hg Extraction code: yxan

Load the Metrics image

docker load -i metrics-0.3.3.tar.gz

Deploy Metrics

[root@master01 ~]# cd metrics-server/deploy/1.8+/

[root@master01 1.8+]# kubectl apply -f .

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

serviceaccount/metrics-server created

deployment.extensions/metrics-server created

service/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

[root@master01 1.8+]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-fb8b8dccf-jfm2m 1/1 Running 3 8d

coredns-fb8b8dccf-r8tqp 1/1 Running 3 8d

etcd-master 1/1 Running 2 8d

kube-apiserver-master 1/1 Running 2 8d

kube-controller-manager-master 1/1 Running 2 8d

kube-flannel-ds-amd64-26xjf 1/1 Running 3 8d

kube-flannel-ds-amd64-82s7n 1/1 Running 3 8d

kube-flannel-ds-amd64-sd2j9 1/1 Running 3 8d

kube-proxy-792hk 1/1 Running 2 8d

kube-proxy-88cgt 1/1 Running 3 8d

kube-proxy-frgtq 1/1 Running 2 8d

kube-scheduler-master 1/1 Running 2 8d

kubernetes-dashboard-5f7b999d65-9v5f9 1/1 Running 0 46m

metrics-server-c9f69c698-c7zl9 1/1 Running 0 5s

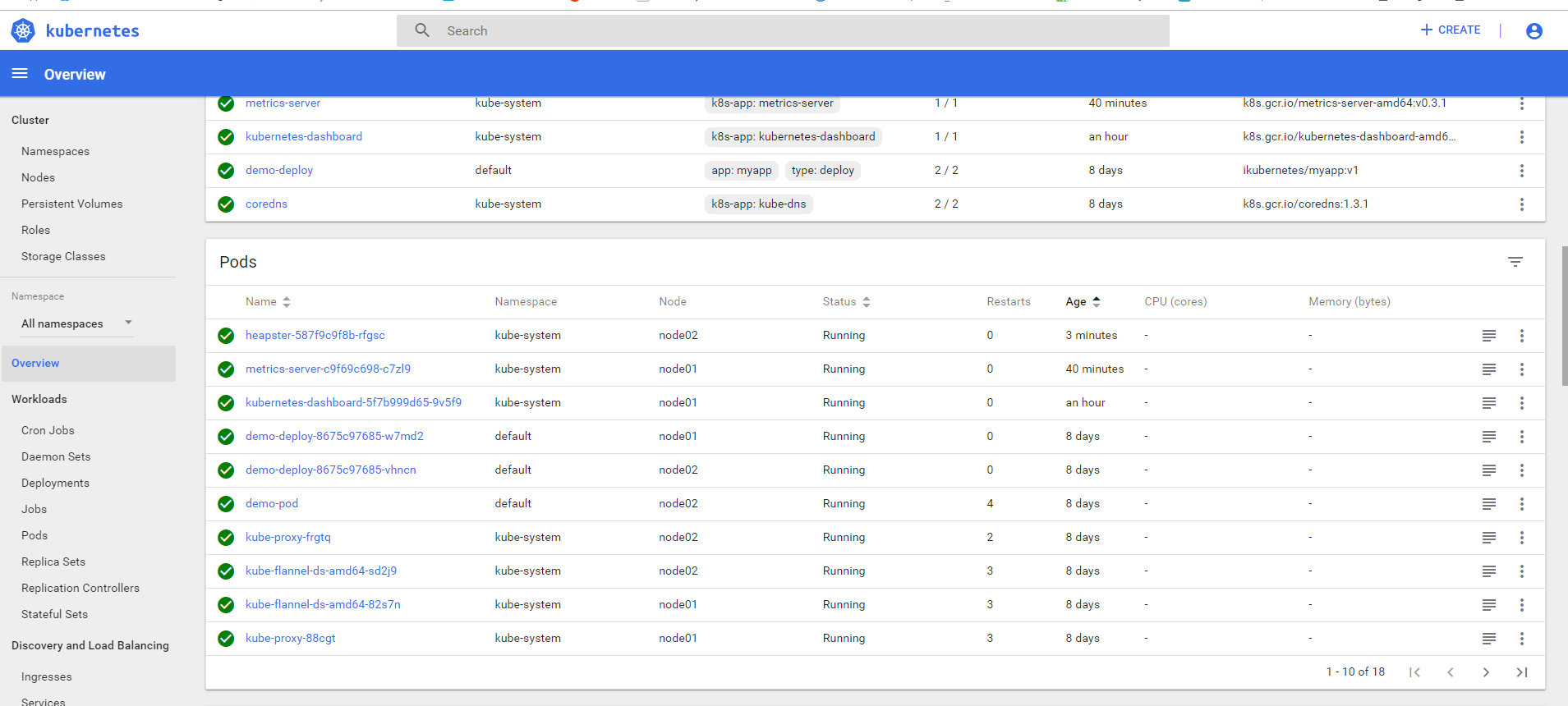

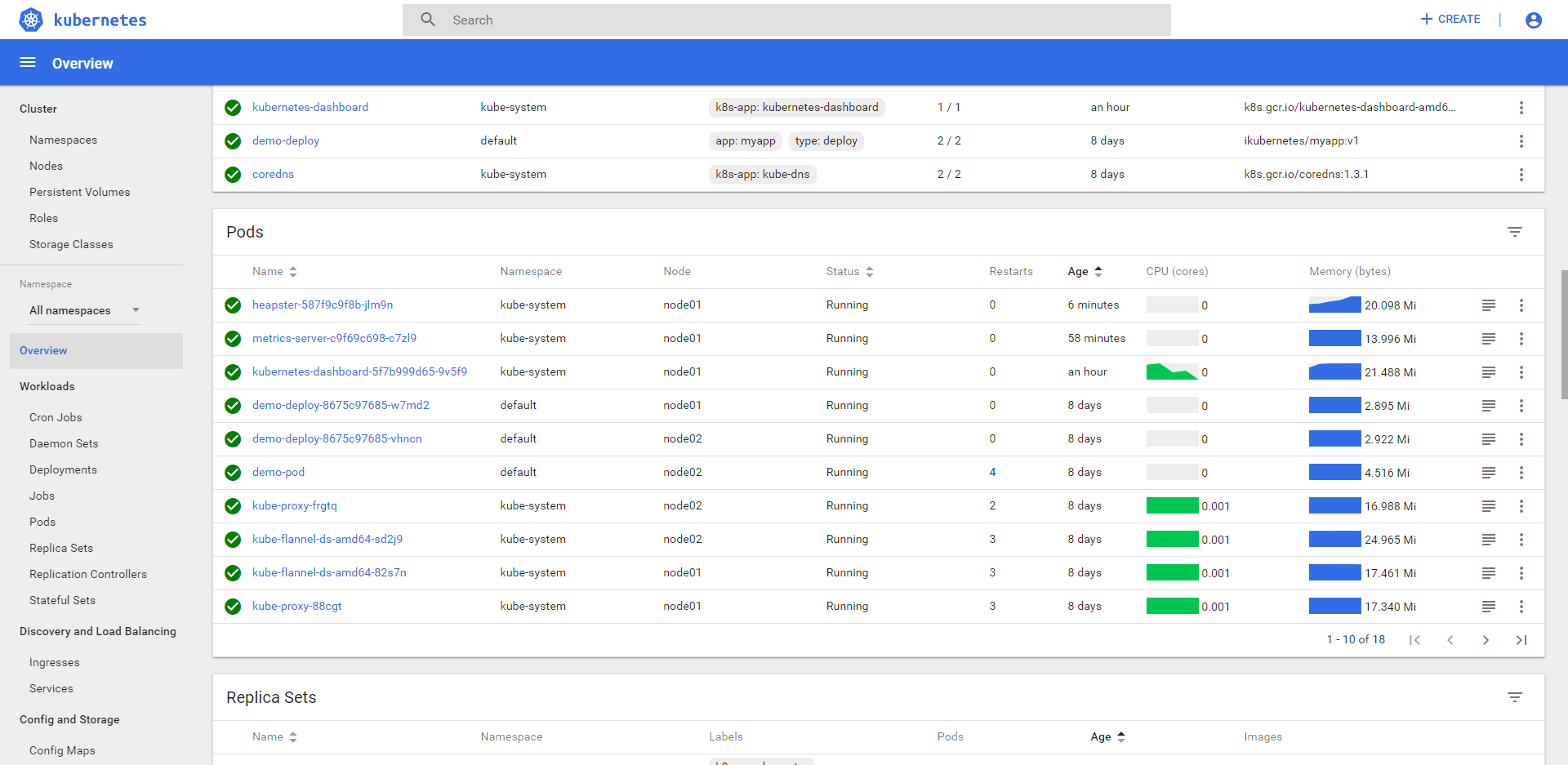

Verification

After deployment, output like the following indicates that metrics-server is working:

[root@master01 ~]# kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

master 86m 4% 963Mi 25%

node01 26m 1% 522Mi 13%

node02 21m 1% 503Mi 13%

[root@master01 ~]# kubectl top pods --all-namespaces

NAMESPACE NAME CPU(cores) MEMORY(bytes)

default demo-deploy-8675c97685-vhncn 0m 2Mi

default demo-deploy-8675c97685-w7md2 0m 2Mi

default demo-pod 0m 4Mi

kube-system coredns-fb8b8dccf-jfm2m 2m 13Mi

kube-system coredns-fb8b8dccf-r8tqp 2m 13Mi

kube-system etcd-master 13m 71Mi

kube-system kube-apiserver-master 18m 297Mi

kube-system kube-controller-manager-master 9m 50Mi

kube-system kube-flannel-ds-amd64-26xjf 2m 12Mi

kube-system kube-flannel-ds-amd64-82s7n 2m 17Mi

kube-system kube-flannel-ds-amd64-sd2j9 2m 18Mi

kube-system kube-proxy-792hk 1m 17Mi

kube-system kube-proxy-88cgt 1m 19Mi

kube-system kube-proxy-frgtq 1m 16Mi

kube-system kube-scheduler-master 1m 15Mi

kube-system kubernetes-dashboard-5f7b999d65-9v5f9 1m 11Mi

kube-system metrics-server-c9f69c698-c7zl9 1m 13Mi
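The `kubectl top nodes` output above can also be post-processed by a script. The following is a minimal sketch that parses the text into dictionaries, assuming CPU is reported in millicores (suffix `m`) or whole cores and memory in `Mi`, as in the sample. The function name `parse_top_nodes` is hypothetical.

```python
"""Parse the text output of `kubectl top nodes` (a sketch only)."""
from typing import Dict, List

SAMPLE = """\
NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%
master 86m 4% 963Mi 25%
node01 26m 1% 522Mi 13%
node02 21m 1% 503Mi 13%
"""


def parse_top_nodes(text: str) -> List[Dict[str, object]]:
    """Turn each data row into a dict with numeric fields."""
    rows: List[Dict[str, object]] = []
    lines = [ln for ln in text.splitlines() if ln.strip()]
    for line in lines[1:]:  # skip the header row
        name, cpu, cpu_pct, mem, mem_pct = line.split()
        # "86m" means 86 millicores; a bare number means whole cores.
        millicores = int(cpu[:-1]) if cpu.endswith("m") else int(cpu) * 1000
        if not mem.endswith("Mi"):
            raise ValueError(f"unexpected memory unit: {mem}")
        rows.append({
            "name": name,
            "cpu_millicores": millicores,
            "cpu_percent": int(cpu_pct.rstrip("%")),
            "memory_mib": int(mem[:-2]),
            "memory_percent": int(mem_pct.rstrip("%")),
        })
    return rows


nodes = parse_top_nodes(SAMPLE)
busiest = max(nodes, key=lambda row: row["memory_percent"])
print(busiest["name"], busiest["memory_mib"])
```

In practice the text would come from running `kubectl top nodes` rather than from a hard-coded sample.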

Heapster

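The dashboard add-on still depends on Heapster to display resource usage in its UI. Testing also showed that kubectl top in Kubernetes 1.8 depends on Heapster. It is therefore recommended to install Heapster as well; InfluxDB and Grafana are not required.

Prepare heapster.yaml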

vim heapster.yaml

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: heapster
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: heapster
subjects:
  - kind: ServiceAccount
    name: heapster
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: system:heapster
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: apps/v1beta1
kind: Deployment
metadata:
  name: heapster
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: heapster
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: heapster
    spec:
      serviceAccountName: heapster
      containers:
        - name: heapster
          image: k8s.gcr.io/heapster-amd64:v1.5.4
          imagePullPolicy: IfNotPresent
          command:
            - /heapster
            #- --source=kubernetes:https://kubernetes.default
            - --source=kubernetes.summary_api:''
            #- --sink=influxdb:http://monitoring-influxdb.kube-system.svc:8086
          livenessProbe:
            httpGet:
              path: /healthz
              port: 8082
              scheme: HTTP
            initialDelaySeconds: 180
            timeoutSeconds: 5
---
apiVersion: v1
kind: Service
metadata:
  labels:
    task: monitoring
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    #kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: Heapster
  name: heapster
  namespace: kube-system
spec:
  ports:
    - port: 80
      targetPort: 8082
  selector:
    k8s-app: heapster

Heapster image

k8s.gcr.io/heapster-amd64:v1.5.4

Image download

Link: https://pan.baidu.com/s/1an-hA1hhsbbIGaLGgPYd-Q Extraction code: n0dw

Load the Heapster image

docker load -i heapster-1.5.4.tar.gz

Deploy Heapster

[root@master01 ~]# kubectl apply -f heapster.yaml

serviceaccount/heapster created

clusterrolebinding.rbac.authorization.k8s.io/heapster created

deployment.apps/heapster created

service/heapster created

[root@master01 ~]# kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-fb8b8dccf-jfm2m 1/1 Running 5 8d 10.244.0.9 master01 <none> <none>

coredns-fb8b8dccf-r8tqp 1/1 Running 4 8d 10.244.0.10 master01 <none> <none>

etcd-master01 1/1 Running 3 8d 192.168.48.200 master01 <none> <none>

heapster-587f9c9f8b-rfgsc 1/1 Running 0 76s 10.244.2.7 node02 <none> <none>

kube-apiserver-master01 1/1 Running 3 8d 192.168.48.200 master01 <none> <none>

kube-controller-manager-master01 1/1 Running 3 8d 192.168.48.200 master01 <none> <none>

kube-flannel-ds-amd64-26xjf 1/1 Running 4 8d 192.168.48.200 master01 <none> <none>

kube-flannel-ds-amd64-82s7n 1/1 Running 3 8d 192.168.48.201 node01 <none> <none>

kube-flannel-ds-amd64-sd2j9 1/1 Running 3 8d 192.168.48.202 node02 <none> <none>

kube-proxy-792hk 1/1 Running 3 8d 192.168.48.200 master01 <none> <none>

kube-proxy-88cgt 1/1 Running 3 8d 192.168.48.201 node01 <none> <none>

kube-proxy-frgtq 1/1 Running 2 8d 192.168.48.202 node02 <none> <none>

kube-scheduler-master 1/1 Running 3 8d 192.168.48.200 master01 <none> <none>

kubernetes-dashboard-5f7b999d65-9v5f9 1/1 Running 0 84m 10.244.1.6 node01 <none> <none>

metrics-server-c9f69c698-c7zl9 1/1 Running 0 38m 10.244.1.7 node01 <none> <none>

However, the dashboard still shows no graphs.

Check the logs

[root@master01 ~]# kubectl logs -n kube-system heapster-587f9c9f8b-rfgsc

I0408 13:00:46.262981 1 heapster.go:78] /heapster --source=kubernetes.summary_api:''

I0408 13:00:46.263011 1 heapster.go:79] Heapster version v1.5.4

I0408 13:00:46.263200 1 configs.go:61] Using Kubernetes client with master "https://10.96.0.1:443" and version v1

I0408 13:00:46.263210 1 configs.go:62] Using kubelet port 10255

I0408 13:00:46.263834 1 heapster.go:202] Starting with Metric Sink

I0408 13:00:46.270800 1 heapster.go:112] Starting heapster on port 8082

E0408 13:01:05.003469 1 manager.go:101] Error in scraping containers from kubelet_summary:192.168.48.200:10255: Get http://192.168.48.200:10255/stats/summary/: dial tcp 192.168.48.200:10255: getsockopt: connection refused

E0408 13:01:05.007752 1 manager.go:101] Error in scraping containers from kubelet_summary:192.168.48.202:10255: Get http://192.168.48.202:10255/stats/summary/: dial tcp 192.168.48.202:10255: getsockopt: connection refused

E0408 13:01:05.022308 1 manager.go:101] Error in scraping containers from kubelet_summary:192.168.48.201:10255: Get http://192.168.48.201:10255/stats/summary/: dial tcp 192.168.48.201:10255: getsockopt: connection refused

W0408 13:01:25.000727 1 manager.go:152] Failed to get all responses in time (got 0/3)

E0408 13:02:05.013965 1 manager.go:101] Error in scraping containers from kubelet_summary:192.168.48.202:10255: Get http://192.168.48.202:10255/stats/summary/: dial tcp 192.168.48.202:10255: getsockopt: connection refused

E0408 13:02:05.016730 1 manager.go:101] Error in scraping containers from kubelet_summary:192.168.48.200:10255: Get http://192.168.48.200:10255/stats/summary/: dial tcp 192.168.48.200:10255: getsockopt: connection refused

E0408 13:02:05.017933 1 manager.go:101] Error in scraping containers from kubelet_summary:192.168.48.201:10255: Get http://192.168.48.201:10255/stats/summary/: dial tcp 192.168.48.201:10255: getsockopt: connection refused

W0408 13:02:25.001764 1 manager.go:152] Failed to get all responses in time (got 0/3)

E0408 13:03:05.008722 1 manager.go:101] Error in scraping containers from kubelet_summary:192.168.48.202:10255: Get http://192.168.48.202:10255/stats/summary/: dial tcp 192.168.48.202:10255: getsockopt: connection refused

E0408 13:03:05.019129 1 manager.go:101] Error in scraping containers from kubelet_summary:192.168.48.201:10255: Get http://192.168.48.201:10255/stats/summary/: dial tcp 192.168.48.201:10255: getsockopt: connection refused

E0408 13:03:05.019318 1 manager.go:101] Error in scraping containers from kubelet_summary:192.168.48.200:10255: Get http://192.168.48.200:10255/stats/summary/: dial tcp 192.168.48.200:10255: getsockopt: connection refused

W0408 13:03:25.001723 1 manager.go:152] Failed to get all responses in time (got 0/3)

E0408 13:04:05.004438 1 manager.go:101] Error in scraping containers from kubelet_summary:192.168.48.200:10255: Get http://192.168.48.200:10255/stats/summary/: dial tcp 192.168.48.200:10255: getsockopt: connection refused

E0408 13:04:05.004837 1 manager.go:101] Error in scraping containers from kubelet_summary:192.168.48.201:10255: Get http://192.168.48.201:10255/stats/summary/: dial tcp 192.168.48.201:10255: getsockopt: connection refused

E0408 13:04:05.017424 1 manager.go:101] Error in scraping containers from kubelet_summary:192.168.48.202:10255: Get http://192.168.48.202:10255/stats/summary/: dial tcp 192.168.48.202:10255: getsockopt: connection refused

W0408 13:04:25.001505 1 manager.go:152] Failed to get all responses in time (got 0/3)

E0408 13:05:05.004518 1 manager.go:101] Error in scraping containers from kubelet_summary:192.168.48.201:10255: Get http://192.168.48.201:10255/stats/summary/: dial tcp 192.168.48.201:10255: getsockopt: connection refused

E0408 13:05:05.006935 1 manager.go:101] Error in scraping containers from kubelet_summary:192.168.48.202:10255: Get http://192.168.48.202:10255/stats/summary/: dial tcp 192.168.48.202:10255: getsockopt: connection refused

E0408 13:05:05.007187 1 manager.go:101] Error in scraping containers from kubelet_summary:192.168.48.200:10255: Get http://192.168.48.200:10255/stats/summary/: dial tcp 192.168.48.200:10255: getsockopt: connection refused

W0408 13:05:25.001816 1 manager.go:152] Failed to get all responses in time (got 0/3)

E0408 13:06:05.009242 1 manager.go:101] Error in scraping containers from kubelet_summary:192.168.48.202:10255: Get http://192.168.48.202:10255/stats/summary/: dial tcp 192.168.48.202:10255: getsockopt: connection refused

E0408 13:06:05.019315 1 manager.go:101] Error in scraping containers from kubelet_summary:192.168.48.201:10255: Get http://192.168.48.201:10255/stats/summary/: dial tcp 192.168.48.201:10255: getsockopt: connection refused

E0408 13:06:05.024390 1 manager.go:101] Error in scraping containers from kubelet_summary:192.168.48.200:10255: Get http://192.168.48.200:10255/stats/summary/: dial tcp 192.168.48.200:10255: getsockopt: connection refused
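Long logs like the one above are easier to read once the failing endpoints are summarized. The following is a minimal sketch that extracts the kubelet host and port from each "connection refused" scrape error, assuming the line format shown in this log. The function name `failing_endpoints` is hypothetical, and the sample contains only a few of the lines above.

```python
"""Summarize kubelet scrape failures in a Heapster log (a sketch only)."""
import re
from collections import Counter

SAMPLE_LOG = """\
E0408 13:01:05.003469 1 manager.go:101] Error in scraping containers from kubelet_summary:192.168.48.200:10255: Get http://192.168.48.200:10255/stats/summary/: dial tcp 192.168.48.200:10255: getsockopt: connection refused
E0408 13:01:05.007752 1 manager.go:101] Error in scraping containers from kubelet_summary:192.168.48.202:10255: Get http://192.168.48.202:10255/stats/summary/: dial tcp 192.168.48.202:10255: getsockopt: connection refused
W0408 13:01:25.000727 1 manager.go:152] Failed to get all responses in time (got 0/3)
E0408 13:02:05.016730 1 manager.go:101] Error in scraping containers from kubelet_summary:192.168.48.200:10255: Get http://192.168.48.200:10255/stats/summary/: dial tcp 192.168.48.200:10255: getsockopt: connection refused
"""

# Matches the "kubelet_summary:<host>:<port>:" part of a scrape error line.
SCRAPE_ERROR = re.compile(
    r"Error in scraping containers from kubelet_summary:"
    r"(?P<host>[\d.]+):(?P<port>\d+):"
)


def failing_endpoints(log_text: str) -> Counter:
    """Count refused connections per (host, port) pair."""
    counts: Counter = Counter()
    for line in log_text.splitlines():
        match = SCRAPE_ERROR.search(line)
        if match and "connection refused" in line:
            counts[(match.group("host"), int(match.group("port")))] += 1
    return counts


for (host, port), count in sorted(failing_endpoints(SAMPLE_LOG).items()):
    print(f"{host}:{port} refused {count} time(s)")
```

Every failure here points at port 10255 on a node, which narrows the problem down to the kubelet rather than to Heapster itself.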

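The cause is that port 10255 is not open. The kubelet read-only port must be enabled on every node by adding readOnlyPort: 10255 to the kubelet configuration file.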

vim /var/lib/kubelet/config.yaml

.....

port: 10250

readOnlyPort: 10255

registryBurst: 10

registryPullQPS: 5

resolvConf: /etc/resolv.conf
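Because the change has to be made on every node, it can help to check each configuration file with a script. The following is a minimal sketch that reads the top-level readOnlyPort key from the file's text without a YAML library. The function name `read_only_port` is hypothetical, and treating a missing key as 0 (disabled) is an assumption. Note that the read-only port serves data without authentication, so it is best limited to trusted networks.

```python
"""Check the readOnlyPort setting in a kubelet config file (a sketch only)."""

SAMPLE_CONFIG = """\
port: 10250
readOnlyPort: 10255
registryBurst: 10
registryPullQPS: 5
resolvConf: /etc/resolv.conf
"""


def read_only_port(config_text: str) -> int:
    """Return the top-level readOnlyPort value, or 0 if the key is absent."""
    for line in config_text.splitlines():
        # Only unindented lines are top-level keys in this flat file.
        if line.startswith("readOnlyPort:"):
            value = line.split(":", 1)[1]
            value = value.split("#", 1)[0].strip()  # drop a trailing comment
            return int(value)
    return 0  # assumption: a missing key means the port is disabled


print(read_only_port(SAMPLE_CONFIG))
```

On a node, the text would be read from /var/lib/kubelet/config.yaml, and a result other than 10255 would mean Heapster still cannot scrape that node.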

Restart kubelet

[root@master01 ~]# systemctl daemon-reload

[root@master01 ~]# systemctl restart kubelet.service

[root@master01 ~]# netstat -ntlp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 127.0.0.1:45028 0.0.0.0:* LISTEN 58103/kubelet

tcp 0 0 127.0.0.1:10248 0.0.0.0:* LISTEN 58103/kubelet

tcp 0 0 127.0.0.1:10249 0.0.0.0:* LISTEN 43472/kube-proxy

tcp 0 0 192.168.48.200:2379 0.0.0.0:* LISTEN 43426/etcd

tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 43426/etcd

tcp 0 0 192.168.48.200:2380 0.0.0.0:* LISTEN 43426/etcd

tcp 0 0 127.0.0.1:10257 0.0.0.0:* LISTEN 43368/kube-controll

tcp 0 0 127.0.0.1:10259 0.0.0.0:* LISTEN 43343/kube-schedule

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 951/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1034/master

tcp6 0 0 :::30080 :::* LISTEN 43472/kube-proxy

tcp6 0 0 :::10250 :::* LISTEN 58103/kubelet

tcp6 0 0 :::10251 :::* LISTEN 43343/kube-schedule

tcp6 0 0 :::6443 :::* LISTEN 43325/kube-apiserve

tcp6 0 0 :::10252 :::* LISTEN 43368/kube-controll

tcp6 0 0 :::10255 :::* LISTEN 58103/kubelet

tcp6 0 0 :::10256 :::* LISTEN 43472/kube-proxy

tcp6 0 0 :::30001 :::* LISTEN 43472/kube-proxy

tcp6 0 0 :::22 :::* LISTEN 951/sshd

tcp6 0 0 ::1:25 :::* LISTEN 1034/master

[root@master ~]#
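The netstat output above shows kubelet listening on 10255 after the restart. The same check can be scripted. The following is a minimal sketch that groups listening ports by program name, assuming the `netstat -ntlp` column layout shown above. The function name `listening_ports` is hypothetical, and the sample contains only a few of the lines above.

```python
"""Group listening TCP ports by program from `netstat -ntlp` text (a sketch only)."""
from typing import Dict, Set

SAMPLE = """\
Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
tcp 0 0 127.0.0.1:10248 0.0.0.0:* LISTEN 58103/kubelet
tcp6 0 0 :::10250 :::* LISTEN 58103/kubelet
tcp6 0 0 :::10255 :::* LISTEN 58103/kubelet
tcp6 0 0 :::10256 :::* LISTEN 43472/kube-proxy
"""


def listening_ports(netstat_text: str) -> Dict[str, Set[int]]:
    """Map each program name to the set of ports it listens on."""
    ports: Dict[str, Set[int]] = {}
    for line in netstat_text.splitlines():
        fields = line.split()
        # Data rows have 7 fields with LISTEN in the state column.
        if len(fields) < 7 or fields[5] != "LISTEN":
            continue
        local_address, owner = fields[3], fields[6]
        port = int(local_address.rsplit(":", 1)[1])  # also handles ":::10255"
        program = owner.split("/", 1)[1] if "/" in owner else owner
        ports.setdefault(program, set()).add(port)
    return ports


print(10255 in listening_ports(SAMPLE).get("kubelet", set()))
```

Running this against the real output on each node confirms whether the read-only port is open before Heapster is redeployed.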

Restart Heapster

[root@master ~]# kubectl delete -f heapster.yaml

serviceaccount "heapster" deleted

clusterrolebinding.rbac.authorization.k8s.io "heapster" deleted

deployment.apps "heapster" deleted

service "heapster" deleted

[root@master ~]# kubectl apply -f heapster.yaml

serviceaccount/heapster created

clusterrolebinding.rbac.authorization.k8s.io/heapster created

deployment.apps/heapster created

service/heapster created

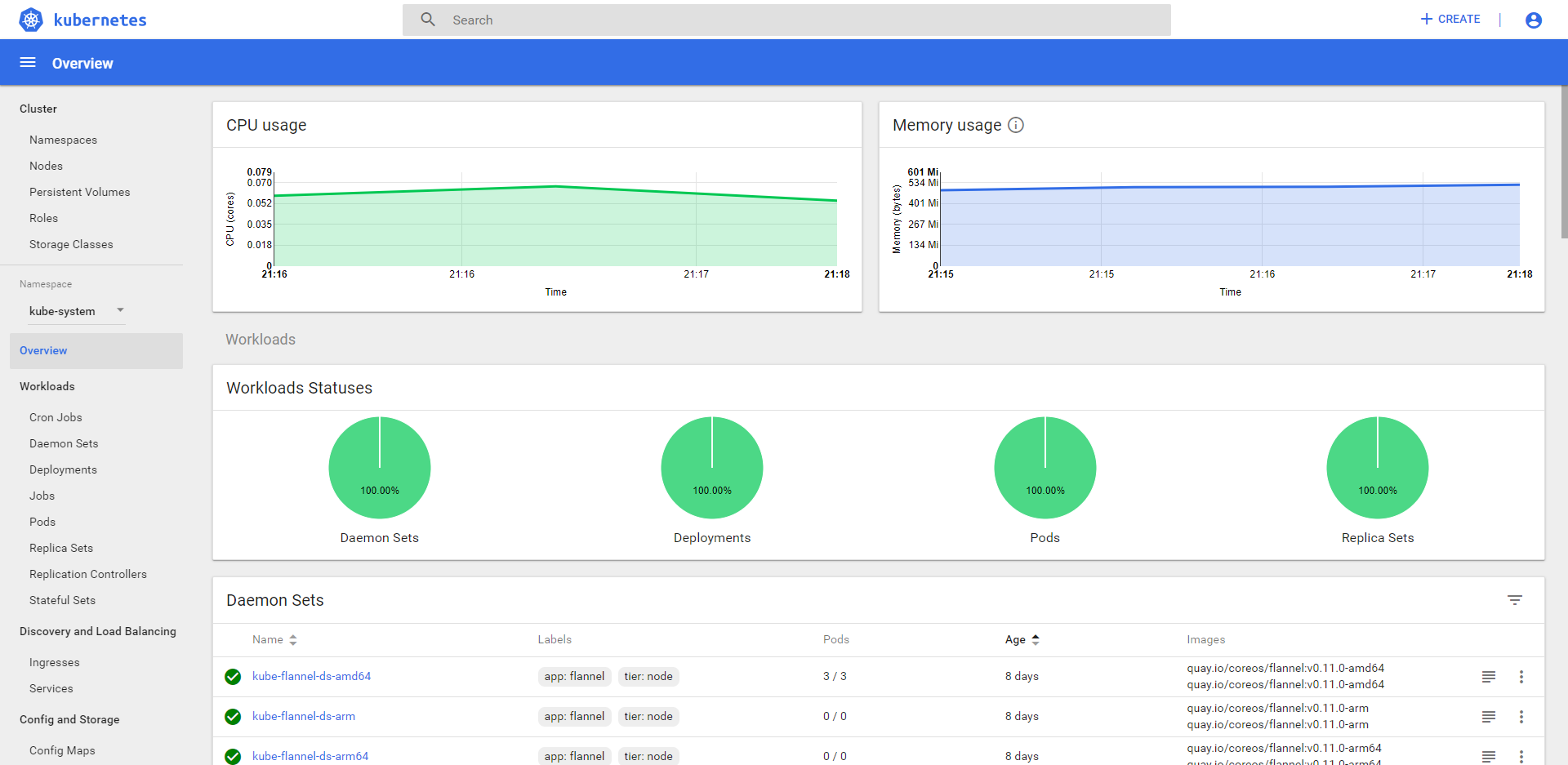

Verification

After Heapster has collected data for a while, the graphs appear in the dashboard.

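This article describes how to deploy Metrics Server and Heapster in a Kubernetes cluster to collect and monitor the resource usage of nodes and pods, including detailed steps and fixes for common problems.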
