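Prerequisites:

Kubernetes documentation (Chinese)

Kubernetes cluster deployment

Deploying the web UI (Dashboard)

- Download the Dashboard manifests locally and create the resources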

[root@Fone7 dashboard]# kubectl create -f dashboard-configmap.yaml

configmap/kubernetes-dashboard-settings created

[root@Fone7 dashboard]# kubectl create -f dashboard-rbac.yaml

role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

[root@Fone7 dashboard]# kubectl create -f dashboard-secret.yaml

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-key-holder created

- Change the image address

# vim dashboard-controller.yaml

image: registry.cn-beijing.aliyuncs.com/kubernetes2s/kubernetes-dashboard-amd64

# kubectl create -f dashboard-controller.yaml

serviceaccount/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

[root@Fone7 dashboard]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

kubernetes-dashboard-77fd5947f-gqgft 1/1 Running 0 5m3s

- Edit the Service manifest so the Dashboard can be reached from other nodes

# vim dashboard-service.yaml

    spec:
      # add the following line
      type: NodePort
      ...

# kubectl create -f dashboard-service.yaml

service/kubernetes-dashboard created

- Generate a token

# vim k8s-admin.yaml

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: dashboard-admin
      namespace: kube-system
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: dashboard-admin
    subjects:
    - kind: ServiceAccount
      name: dashboard-admin
      namespace: kube-system
    roleRef:
      kind: ClusterRole
      name: cluster-admin
      apiGroup: rbac.authorization.k8s.io

# kubectl create -f k8s-admin.yaml

serviceaccount/dashboard-admin created

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created

[root@Fone7 k8s]# kubectl get secret -n kube-system

NAME TYPE DATA AGE

dashboard-admin-token-vzmfz kubernetes.io/service-account-token 3 49s

default-token-9slj4 kubernetes.io/service-account-token 3 23h

kubernetes-dashboard-certs Opaque 0 43m

kubernetes-dashboard-key-holder Opaque 2 43m

kubernetes-dashboard-token-jmk6l kubernetes.io/service-account-token 3 20m

[root@Fone7 k8s]# kubectl describe secret dashboard-admin-token-vzmfz -n kube-system

Name: dashboard-admin-token-vzmfz

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: 103c7142-9973-11ea-b60d-080027b6e76f

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1359 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tdnptZnoiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiMTAzYzcxNDItOTk3My0xMWVhLWI2MGQtMDgwMDI3YjZlNzZmIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.twUnFS7avAu4B8IuozgYDbic8GxkrIyc7P205-pG0h5giiQeU-sIJNWc-fKR0DLDzb98QZqAILH6CNCUNwSJwynUxIBoKIkJqaA-ljfGeHh4xSCCoNb7vG66UPjP1mC5woxyIRMg5TTeAWpkMKUm21sp6HVsZHLxyMUk99EtpXa13vWsv2HSN_LWG5zN2zndKFQQ-57K_p5DoJxqHGDLoSJOQ1_DSuFs1wydH15ot0PORaU0nLGNHlPrtWYlCyARhC4tiUmwMsx0c6LqTh3ZbFmXiswFwGAhSVNMgfAS0YIBGwTAndEi_lPsmA_1cV0k2Gn7GoHIxNvKZtYtWe735g

- Log in from a browser

[root@Fone7 dashboard]# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard NodePort 10.0.0.19 <none> 443:49208/TCP 80s

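Open https://192.168.33.8:49208 in Firefox. (Chrome could not open the page in this setup, so Firefox was used.)

Paste the token generated in step 4 into the Token field and sign in.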

- Summary

- Deployment:

    kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kubeflannel.yml

- Change the Service type to NodePort:

    kubectl patch svc kubernetes-dashboard -p '{"spec":{"type":"NodePort"}}' -n kube-system

- Authentication:

The account used to log in must be a ServiceAccount; the Dashboard pod hands its credentials to Kubernetes for authentication.

- token:

(1) Create a ServiceAccount and, depending on what it should manage, bind it to a suitable Role or ClusterRole with a RoleBinding or ClusterRoleBinding;

(2) Find the Secret that belongs to this ServiceAccount and inspect it; the token is part of the Secret's details.

- kubeconfig: wrap the ServiceAccount token in a kubeconfig file

(1) Create a ServiceAccount and bind it to a suitable Role or ClusterRole, as above;

(2) Look up the Secret name and decode the token:

    kubectl get secret | awk '/^SERVICEACCOUNT_NAME/{print $1}'
    KUBE_TOKEN=$(kubectl get secret SERVICEACCOUNT_SECRET_NAME -o jsonpath='{.data.token}' | base64 -d)

(3) Generate the kubeconfig file. The general form is:

    kubectl config set-cluster --kubeconfig=/PATH/TO/SOMEFILE
    kubectl config set-credentials NAME --token=$KUBE_TOKEN --kubeconfig=/PATH/TO/SOMEFILE
    kubectl config set-context
    kubectl config use-context

For example:

    kubectl config set-cluster kubernetes --kubeconfig=/root/def-ns-admin.conf --server="https://192.168.33.6:6443" --certificate-authority=/etc/kubernetes/pki/ca.crt --embed-certs=true
    kubectl config set-credentials def-ns-admin --token=$DEF_NS_ADMIN_TOKEN --kubeconfig=/root/def-ns-admin.conf
    kubectl config set-context def-ns-admin@kubernetes --cluster=kubernetes --user=def-ns-admin --kubeconfig=/root/def-ns-admin.conf
    kubectl config view --kubeconfig=/root/def-ns-admin.conf
    kubectl config use-context def-ns-admin@kubernetes --kubeconfig=/root/def-ns-admin.conf

Note:

- With Dashboard image 1.8.3 the login page has a Skip button; version 1.10.1 removed it for security reasons.
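The Secret stores the token base64-encoded under `.data.token`, which is why the token has to go through `base64 -d` before it is written into a kubeconfig. A minimal sketch of that round trip that runs without a cluster, assuming GNU coreutils `base64`; the sample value and the variable name `encoded` are made up for illustration:

```shell
# Hypothetical stand-in for the output of:
#   kubectl get secret SERVICEACCOUNT_SECRET_NAME -o jsonpath='{.data.token}'
encoded=$(printf '%s' 'sample-token-value' | base64)

# Decode it the same way the kubeconfig steps do.
KUBE_TOKEN=$(printf '%s' "$encoded" | base64 -d)
echo "$KUBE_TOKEN"
```

Note that `kubectl describe secret` already prints the decoded token, while `kubectl get secret -o jsonpath` returns the encoded form.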

Deploying a multi-master cluster

- Copy the master node's files to master2

# scp -r /opt/kubernetes/ master2:/opt/

# scp /usr/lib/systemd/system/{kube-apiserver,kube-scheduler,kube-controller-manager}.service master2:/usr/lib/systemd/system

- On master2, change the IP addresses in the configuration files and start the services

# cd /opt/kubernetes/cfg/

# vim kube-apiserver

# systemctl start kube-apiserver

# systemctl start kube-scheduler

# systemctl start kube-controller-manager

# ps -fe | grep kube

# /opt/kubernetes/bin/kubectl get cs

# /opt/kubernetes/bin/kubectl get nodes

nginx + keepalived (LB)

To be verified

Install nginx on the primary and backup load-balancer nodes (see the nginx installation reference)

- Edit the configuration file

# vim /etc/nginx/nginx.conf

    # increase the number of worker processes
    worker_processes 4;

    # add the following block above the http block
    stream {
        log_format main "$remote_addr $upstream_addr - $time_local $status";
        access_log /var/log/nginx/k8s-access.log main;

        upstream k8s-apiserver {
            server 192.168.33.7:6443;
        }

        server {
            listen 0.0.0.0:88;
            proxy_pass k8s-apiserver;
        }
    }

# systemctl restart nginx

# systemctl status nginx

# ps -ef | grep nginx

# yum install -y keepalived

# vim /etc/keepalived/keepalived.conf

    global_defs {
        # notification recipients
        notification_email {
            acassen@firewall.loc
            failover@firewall.loc
            sysadmin@firewall.loc
        }
        # sender address
        notification_email_from Alexandre.Cassen@firewall.loc
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id NGINX_MASTER
    }

    vrrp_script check_nginx {
        script "/usr/local/nginx/sbin/check_nginx.sh"
    }

    vrrp_instance VI_1 {
        state MASTER            # use BACKUP on the backup node
        interface enp0s3        # the NIC that carries the VIP
        virtual_router_id 51    # VRRP router ID, unique per instance
        priority 100            # priority; use 90 on the backup server
        advert_int 1            # VRRP advertisement (heartbeat) interval, 1 second by default
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.33.10/24
        }
        track_script {
            check_nginx
        }
    }

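# vim /usr/local/nginx/sbin/check_nginx.sh

    #!/bin/bash
    # count nginx processes, excluding the grep itself and this script's own PID
    count=$(ps -ef | grep nginx | egrep -cv "grep|$$")

    # if nginx is gone, stop keepalived so the VIP moves to the backup node
    if [ "$count" -eq 0 ]; then
        systemctl stop keepalived
    fi

# chmod +x /usr/local/nginx/sbin/check_nginx.sh

# systemctl start keepalived

# ip a # check whether the VIP is active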

- On both worker nodes, change the configuration so that server points to 192.168.33.10:88

# cd /opt/kubernetes/cfg/

# grep 7 *

# vim bootstrap.kubeconfig

# vim kubelet.kubeconfig

# vim kube-proxy.kubeconfig

# systemctl restart kubelet

# systemctl restart kube-proxy

# ps -ef | grep kube

Back on the master, check the cluster state: kubectl get node

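The kubectl command-line tool

- Command overview:

- Managing the application lifecycle with kubectl

- Create

    kubectl run nginx --replicas=3 --image=nginx:1.14 --port=80
    kubectl get deploy,pods

Notes:

--replicas: the number of replicas, usually two or more; it is the number of instances of the service that run.

- Publish

    kubectl expose deployment nginx --port=80 --type=NodePort --target-port=80 --name=nginx-service

Check:

    kubectl get service
    kubectl get pods
    kubectl logs [pod_name]
    kubectl describe pod [pod_name]

- Update

    kubectl set image deployment/nginx nginx=nginx:1.15

This triggers a rolling update, so the new version is released without interrupting the service.

Watch the rolling update with kubectl get pods.

- Roll back

View the history:

    kubectl rollout history deployment/nginx

Roll back to the previous version (this is also a rolling update):

    kubectl rollout undo deployment/nginx

- Delete

    kubectl delete deploy/nginx
    kubectl delete svc/nginx-service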

- Connecting to the K8s cluster remotely with kubectl

- Set the API server address

    kubectl config set-cluster kubernetes \
      --server=https://192.168.33.7:6443 \
      --embed-certs=true \
      --certificate-authority=ca.pem \
      --kubeconfig=config

- Set the client certificate

    kubectl config set-credentials cluster-admin \
      --embed-certs=true \
      --client-key=admin-key.pem \
      --client-certificate=admin.pem \
      --kubeconfig=config

- Set the context

    kubectl config set-context default --cluster=kubernetes --user=cluster-admin --kubeconfig=config
    kubectl config use-context default --kubeconfig=config

- These commands produce a file named config. Copy it to the ~/.kube/ directory on the remote machine and kubectl there can manage the k8s cluster.

If config is not placed in ~/.kube/, pass its location explicitly with --kubeconfig config.

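Managing resources with YAML configuration files

- Syntax:

- Indentation expresses hierarchy

- Tabs are not allowed for indentation; use spaces only

- Nested levels are usually indented by 2 spaces

- Put one space after separators such as colons and commas

- "---" marks the start of a YAML document and separates multiple documents in one file

- "#" starts a comment

- Guidelines for configuration files:

- Specify the latest stable API version when defining a configuration (currently v1);

- Store configuration files in version control outside the cluster, so that a configuration can be rolled back, recreated and restored quickly when needed;

- Write configuration files in YAML rather than JSON. Both formats work, but YAML is friendlier to people;

- Group related objects into a single file; this is usually easier to manage;

- Do not specify default values unnecessarily; a simple, minimal configuration leaves less room for error;

- Describe each object in a comment; this makes maintenance easier.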
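Two of the syntax rules above, no tabs and "---" as the document separator, are easy to check mechanically before handing a file to kubectl. The following is a rough sketch only: the helper names `yaml_has_tabs` and `yaml_doc_count` and the sample manifest are invented for illustration, and the document count assumes the file does not begin with "---".

```shell
# Write a small two-document YAML file (hypothetical content).
demo_file=$(mktemp)
cat > "$demo_file" <<'EOF'
# first document
apiVersion: v1
kind: Namespace
metadata:
  name: demo
---
# second document
apiVersion: v1
kind: ConfigMap
metadata:
  name: demo-config
  namespace: demo
EOF

# Succeed when the file contains a tab character, which YAML
# does not allow for indentation.
yaml_has_tabs() {
  grep -q "$(printf '\t')" "$1"
}

# Count the documents: one plus the number of '---' separator lines.
yaml_doc_count() {
  echo $(( $(grep -c '^---$' "$1") + 1 ))
}

if yaml_has_tabs "$demo_file"; then
  echo "tabs found: replace them with spaces"
else
  echo "no tabs, $(yaml_doc_count "$demo_file") documents"
fi
```

This is only a pre-check; kubectl itself remains the authority on whether a manifest is valid.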

- Example: create and start an nginx instance

# vim nginx-deployment.yaml

    apiVersion: apps/v1 # for versions before 1.9.0 use apps/v1beta2
    kind: Deployment
    metadata:
      name: nginx-deployment
    spec:
      selector:
        matchLabels:
          app: nginx
      replicas: 2 # tells deployment to run 2 pods matching the template
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.14.2
            ports:
            - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-service
      labels:
        app: nginx
    spec:
      type: NodePort
      ports:
      - port: 80
        targetPort: 80
      selector:
        app: nginx

# kubectl create -f nginx-deployment.yaml

Watch the pods being created in real time

# kubectl get pod -w

# kubectl get svc

List all API versions

# kubectl api-versions

Open [node IP]:[port] in a browser

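Notes on configuration files:

- Letting kubectl generate the YAML configuration file

- Example 1: export with the run command

    kubectl run nginx --image=nginx --replicas=3 --dry-run -o yaml > my-deployment.yaml

Notes:

- With --dry-run the command is not actually executed; it only validates the request.

- -o sets the output format; yaml and json are both accepted.

- The output is redirected to a file.

- kubectl prints every field of the resource object that would be submitted; edit the file and delete the ones you do not need.

List the available resource types: kubectl api-resources

- Example 2: export with the get command

    kubectl get deploy/nginx --export -o yaml > me-deploy.yaml

See the notes for Example 1.

- Looking up a field name you have forgotten

    kubectl explain --help

For example, to list the fields available for a container: kubectl explain pods.spec.containers

The command prints the top-level attributes. It is enough to know that <string> means a string, <Object> means an object and [] means an array. In a YAML file an object is written by indenting its fields, and each array item is introduced with a dash. If you do not know what an object or an array of objects contains, append its name to the path and run explain again. Pass --recursive to print the attributes of every level at once.

kubectl api-resources prints all registered API resources.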

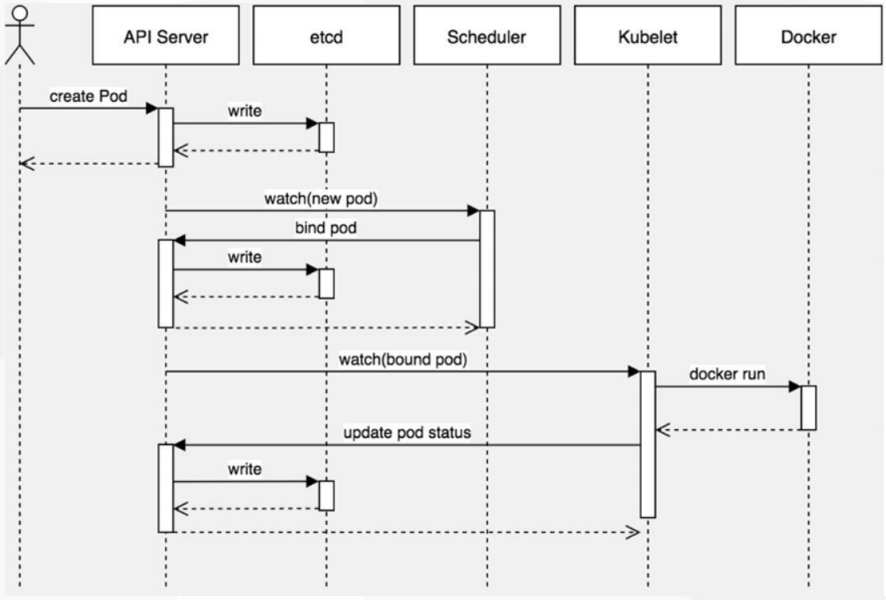

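Understanding Pods

- Pod

  - The smallest deployable unit

  - A group of containers

  - Containers in one Pod share a network namespace

  - Pods are ephemeral

- Container types

  - Infrastructure Container: the base container

    - Maintains the network namespace of the whole Pod

  - InitContainers: init containers (official docs)

    - Run before the application containers

  - Containers: application containers

    - Started in parallel

- Image pull policy (imagePullPolicy)

  - IfNotPresent: the default (unless the image tag is :latest or omitted, in which case the default is Always); pull the image only if it is not already on the host

  - Always: pull the image again every time a Pod is created

  - Never: the Pod never pulls this image itself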

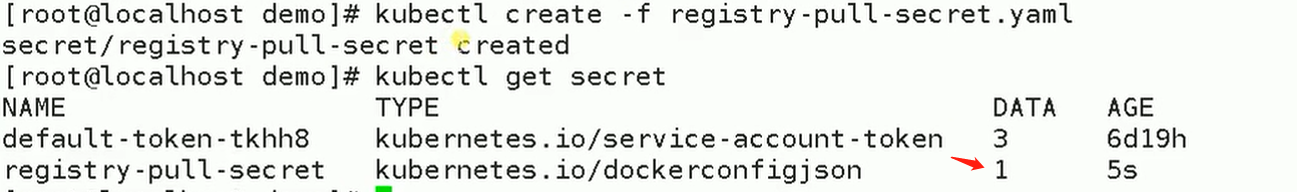

- 拉取需要认证的仓库镜像(私有镜像)

- 登陆

docker login -p [password] -u [username] - 获取认证信息

cat .docker/config.json

cat .docker/config.json | base64 -w 0

# vim registry-pull-secret.yaml

apiVersion: v1

kind: Secret

metadata:

name: registry-pull-secret

namespace: blog

data:

.dockerconfigjson: [上面生成的base64编码]

type: kubernetes.io/dockerconfigjson

# kubectl create -f registry-pull-secret.yaml

# kubectl get secret

输出的Data大于0才算配置成功

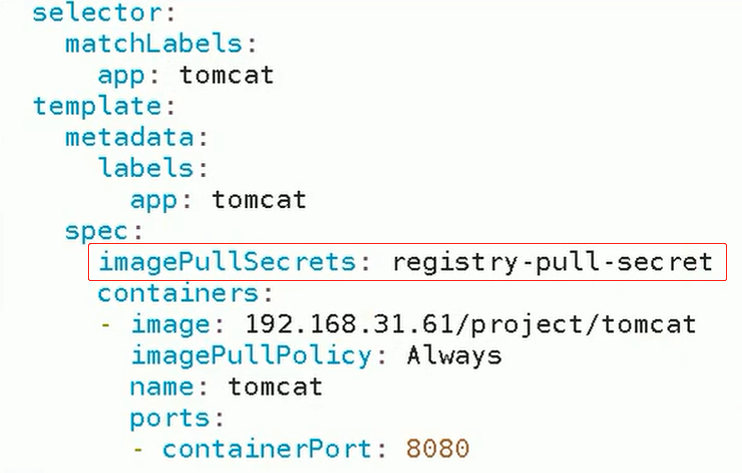

将其写入YAML文件

...

imagePullSecrets:

- name: registry-pull-secret

...
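The value placed in `.dockerconfigjson` is simply the base64 encoding of a Docker config file, whose `auth` field is in turn the base64 encoding of `username:password`. The sketch below builds both by hand to make the layering visible. The registry name and credentials are placeholders, and it assumes GNU `base64` (for `-w 0`), as used above.

```shell
# Placeholder registry and credentials, for illustration only.
registry="registry.example.com"
user="demo"
pass="s3cret"

# Inner layer: "user:password", base64-encoded, as docker login stores it.
auth=$(printf '%s:%s' "$user" "$pass" | base64 -w 0)

# The Docker config document that `cat .docker/config.json` would show.
config=$(printf '{"auths":{"%s":{"auth":"%s"}}}' "$registry" "$auth")

# Outer layer: the whole document, base64-encoded on one line,
# which is the value for .dockerconfigjson in the Secret.
encoded=$(printf '%s' "$config" | base64 -w 0)

echo "$config"
echo "$encoded"
```

Because both layers are only encoded, not encrypted, anyone who can read the Secret can recover the password.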

-

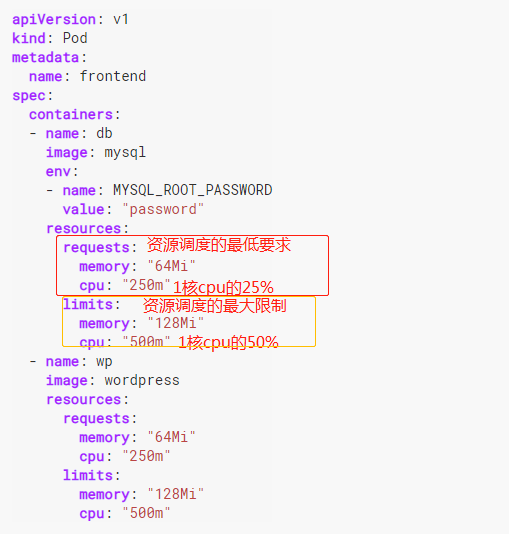

pod资源限制 官文

- spec.containers[].resources.limits.cpu

- spec.containers[].resources.limits.memory

- spec.containers[].resources.requests.cpu

- spec.containers[].resources.requests.memory

- 饭粒

# vim wordpress.yaml apiVersion: v1 kind: Pod metadata: name: frontend spec: containers: - name: db image: mysql env: - name: MYSQL_ROOT_PASSWORD value: "333333" resources: requests: memory: "64Mi" cpu: "250m" limits: memory: "128Mi" cpu: "500m" # 0.5核cpu - name: wp image: wordpress resources: requests: memory: "64Mi" cpu: "250m" limits: memory: "128Mi" cpu: "500m" # kubectl apply -f wordpress.yaml # 启动实例 # kubectl describe pod frontend # 查看pod调度情况 # kubectl describe nodes 192.168.33.8 # 查看节点资源使用情况 # kubectl get ns # 查看所有的namespace -
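CPU quantities can be written either in millicores ("500m") or as a number of cores ("0.5"); both forms mean the same thing. As a small illustration, the hypothetical helper `cpu_to_millicores` below normalises either form to millicores. It is a sketch that relies on awk for the arithmetic and does not validate its input.

```shell
# Convert a Kubernetes CPU quantity to millicores.
# "500m" -> 500, "0.5" -> 500, "1" -> 1000
cpu_to_millicores() {
  case "$1" in
    *m) echo "${1%m}" ;;
    *)  awk -v v="$1" 'BEGIN { printf "%d\n", v * 1000 + 0.5 }' ;;
  esac
}

for q in 250m 500m 0.5 1; do
  echo "$q = $(cpu_to_millicores "$q") millicores"
done
```

With the limits from the example, the two containers together may use at most 1000 millicores, that is, one CPU core.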

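- Restart policy (restartPolicy, set at the same level as containers)

- Always: always restart the container after it terminates (the default)

- OnFailure: restart the container only when it exits abnormally (non-zero exit code)

- Never: never restart the container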
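The three policies boil down to a small decision on the container's exit code. The function below, whose name `should_restart` is made up for this sketch, models that decision in shell; it illustrates the rule and is not how the kubelet is implemented.

```shell
# Decide whether a terminated container would be restarted,
# given the Pod's restartPolicy and the container's exit code.
should_restart() {
  local policy=$1 exit_code=$2
  case "$policy" in
    Always)    return 0 ;;
    OnFailure) [ "$exit_code" -ne 0 ] ;;
    Never)     return 1 ;;
    *)         echo "unknown restartPolicy: $policy" >&2; return 2 ;;
  esac
}

for p in Always OnFailure Never; do
  for code in 0 1; do
    if should_restart "$p" "$code"; then
      echo "$p, exit $code: restart"
    else
      echo "$p, exit $code: no restart"
    fi
  done
done
```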

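- Health checks (Probe) (official docs)

- There are two kinds of probe:

  - livenessProbe: if the check fails, the container is killed and then handled according to the Pod's restartPolicy.

  - readinessProbe: if the check fails, Kubernetes removes the Pod from the Service endpoints.

- A probe supports three check methods:

  - httpGet: sends an HTTP request; a status code of at least 200 and below 400 counts as success.

  - exec: runs a shell command; exit code 0 counts as success.

  - tcpSocket: opens a TCP socket; a successful connection counts as success.

- Example

    apiVersion: v1
    kind: Pod
    metadata:
      labels:
        test: liveness
      name: liveness-exec
    spec:
      containers:
      - name: liveness
        image: busybox
        args:
        - /bin/sh
        - -c
        - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600
        livenessProbe:
          exec:
            command:
            - cat
            - /tmp/healthy
          initialDelaySeconds: 5
          periodSeconds: 5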
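For httpGet probes the success rule is purely a range check on the HTTP status code. A tiny sketch of that rule follows; the function name `probe_http_ok` is hypothetical and the status codes are sample inputs, not output from a real probe.

```shell
# An httpGet probe succeeds for any status code >= 200 and < 400.
probe_http_ok() {
  [ "$1" -ge 200 ] && [ "$1" -lt 400 ]
}

for status in 200 302 400 503; do
  if probe_http_ok "$status"; then
    echo "$status: probe succeeds"
  else
    echo "$status: probe fails"
  fi
done
```

So a redirect (3xx) still counts as healthy, while a 400 or any 5xx response counts as a failure.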

调度约束(与containers同级)

- nodeName:用于将Pod调度到指定的Node名称上(绕过调度器调度)

- nodeSelector:用于将Pod调度到匹配Label的Node上(label匹配,通过调度器调度)

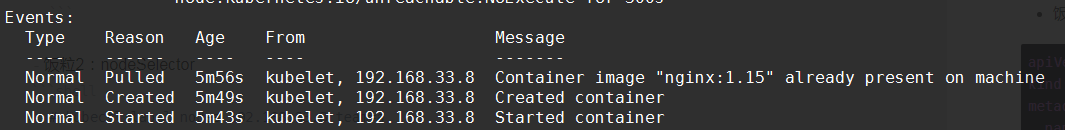

- 饭粒1:nodeName

apiVersion: v1 kind: Pod metadata: name: pod-example labels: app: nginx spec: nodeName: 192.168.33.8 containers: - name: nginx image: nginx:1.15kubectl describe pod [pod_name]查看调度信息。

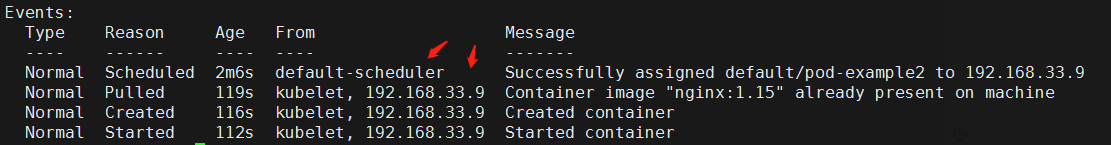

- 饭粒2:nodeSelector

# kubectl label nodes 192.168.33.8 team=a # kubectl label nodes 192.168.33.9 team=b # kubectl get nodes --show-labelsapiVersion: v1 kind: Pod metadata: name: pod-example spec: nodeSelector: team: b containers: - name: nginx image: nginx:1.15kubectl describe pod [pod_name]查看调度信息。

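- Pod troubleshooting

Pod status (official docs):

| Value | Description |
| --- | --- |
| Pending | The Pod has been submitted to Kubernetes but could not be created yet for some reason, for example a slow image download or unsuccessful scheduling. Check the first entries under Events in the output of kubectl describe pod [pod_name]. |
| Running | The Pod has been bound to a node and all of its containers have been created. At least one container is running, starting or restarting. |
| Succeeded | All containers in the Pod terminated successfully and will not be restarted. |
| Failed | All containers in the Pod have terminated and at least one of them terminated in failure, that is, it exited with a non-zero status or was terminated by the system. Check with kubectl logs [POD_NAME]. |
| Unknown | The apiserver cannot obtain the Pod's state for some reason, usually because the master cannot communicate with the kubelet on the Pod's host. |

- Troubleshooting summary:

kubectl describe [TYPE] [NAME_PREFIX]: for errors at creation time; look at the Events

kubectl logs [POD_NAME]: view the container logs

kubectl exec -it [POD_NAME] bash: for problems at run time; enter the container and inspect the application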

Service

- Service作用

- 防止Pod失联

- 定义一组Pod的访问策略

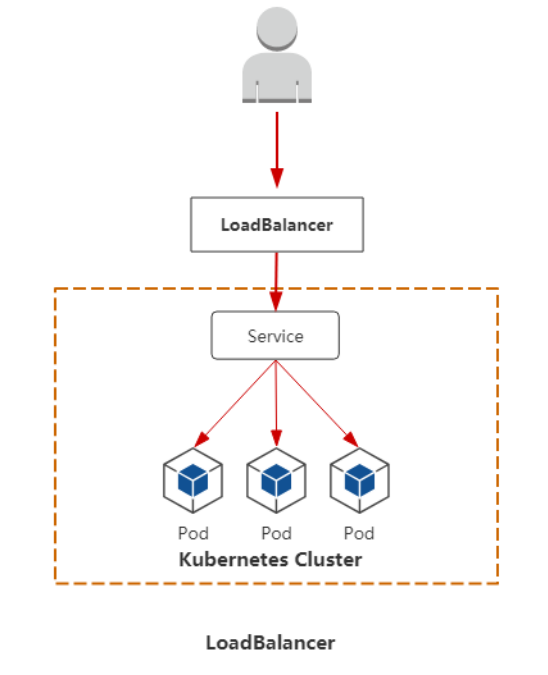

- 支持ClusterIP,NodePort以及LoadBalancer三种类型

- Service的底层实现主要有iptables和ipvs二种网络模式

- 饭粒1:创建Service

# vim my-service.yaml apiVersion: v1 kind: Service metadata: name: my-service namespace: default spec: clusterIP: 10.0.0.123 selector: app: nginx ports: - protocol: TCP name: http port: 80 # service端口 targetPort: 8080 # 容器端口 # kubectl apply -f my-service.yaml # kubectl get svc # 查看所有service # kubectl get ep # 查看后端ENDPOINTS # kubectl describe svc my-service # 查看service详细信息 - Pod和service的关系

- 通过label-selector相关联

- 通过Service实现Pod的负载均衡,轮询分发( TCP/UDP 4层)

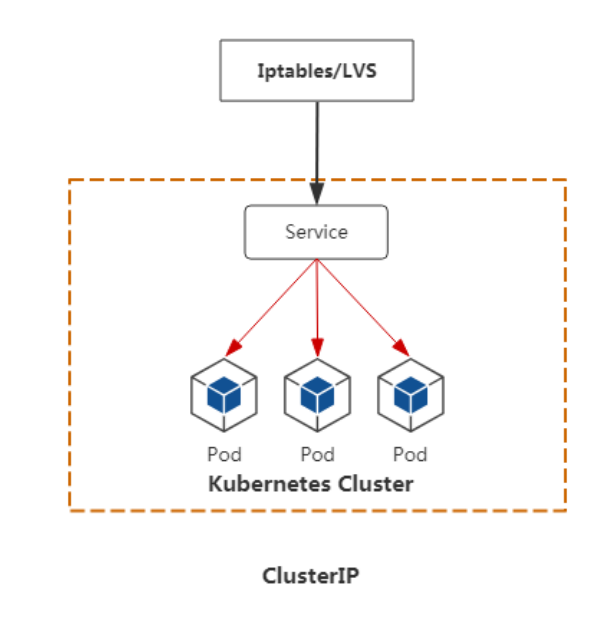

- Service类型

- ClusterIP:

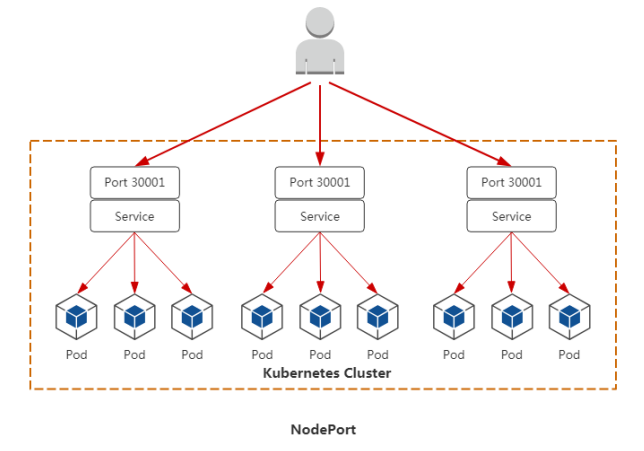

默认,分配一个集群内部可以访问的虚拟IP(VIP)。上述饭粒1 - NodePort:在每个Node上分配一个

端口作为外部访问入口 - LoadBalancer:工作在特定的Cloud Provider上,例如Google Cloud,AWS,OpenStack

NodePort访问流程:

用户 -> 域名 -> 负载均衡器 -> NodeIP:Port -> PodIP:Port

- ClusterIP:

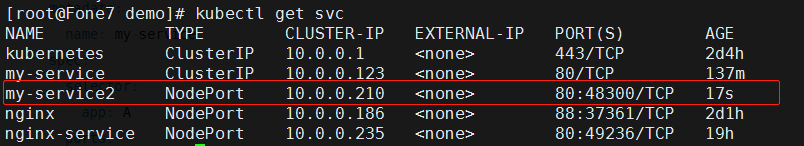

- Example 2: create a NodePort Service

    apiVersion: v1
    kind: Service
    metadata:
      name: my-service2
    spec:
      selector:
        app: nginx
      ports:
      - protocol: TCP
        port: 80
        targetPort: 8080
        nodePort: 48300
      type: NodePort

Check on each node that the port is being listened on: ss -antpu | grep 48300

Check which backend containers the load balancer is bound to (requires ipvsadm): ipvsadm -ln
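A nodePort value must fall inside the range the kube-apiserver allows, which defaults to 30000-32767 and is changed with `--service-node-port-range`. The port 48300 used in Example 2 is above the default range, so this cluster presumably runs with a wider one. The sketch below checks a port against a range; the function name `in_nodeport_range` and the widened range 30000-50000 are assumptions for illustration, not values taken from this cluster.

```shell
# Check whether a port falls inside a NodePort range.
# Defaults to 30000-32767, the kube-apiserver default.
in_nodeport_range() {
  local port=$1 min=${2:-30000} max=${3:-32767}
  [ "$port" -ge "$min" ] && [ "$port" -le "$max" ]
}

if in_nodeport_range 48300; then
  echo "48300 fits the default range"
else
  echo "48300 is outside the default range; the apiserver needs a wider one"
fi

# Hypothetical widened range, e.g. --service-node-port-range=30000-50000
in_nodeport_range 48300 30000 50000 && echo "48300 fits 30000-50000"
```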

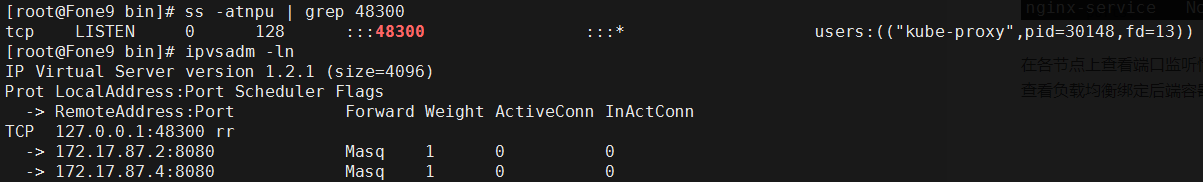

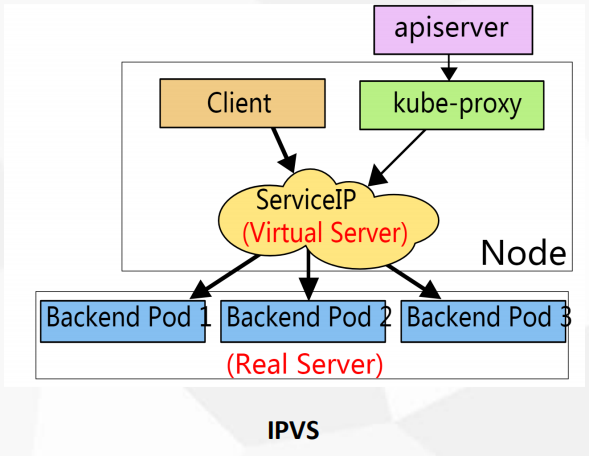

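- Service proxy modes

- How traffic forwarding and load balancing are implemented underneath:

- iptables (the default)

View the rules: iptables-save | grep 10.0.0.123

Advantage: flexible and powerful (packets can be manipulated at different stages of processing)

Disadvantages:

  - kube-proxy creates a large number of iptables rules, and updates are not incremental

  - iptables rules are matched one by one from top to bottom (high latency)

- IPVS

LVS is a load balancer built on the IPVS kernel scheduling module. (Alibaba Cloud SLB implements layer-4 load balancing on top of LVS.)

Advantages:

  - Works in kernel space and performs better

  - A rich set of scheduling algorithms: rr, wrr, lc, wlc, ip hash…

Add --ipvs-scheduler=wrr to the configuration file /opt/kubernetes/cfg/kube-proxy to change the scheduling algorithm.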

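Deploying DNS inside the cluster

- Deploy the YAML file

Things to change:

  - image: remove the registry domain in front of the image name

  - $DNS_DOMAIN: the domain, e.g. cluster.local

  - $DNS_MEMORY_LIMIT: the memory limit, e.g. 170Mi

  - $DNS_SERVER_IP: the clusterDNS value set in /opt/kubernetes/cfg/kubelet.config on the nodes

  - Delete this line from the Corefile (it resolves names outside the cluster):

    proxy . /etc/resolv.conf

- Run kubectl apply -f coredns.yaml to deploy

Run kubectl get pods -n kube-system to check that the pod is running normally

- Test that names resolve

# kubectl run -it --image=busybox:1.28.4 --rm --restart=Never sh

/ # nslookup kubernetes

- The DNS service watches the Kubernetes API and creates a DNS record for every Service.

- ClusterIP A record format: [service-name].[namespace-name].svc.cluster.local

Example: my-svc.my-namespace.svc.cluster.local

.svc.cluster.local can be omitted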
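The record format can be written down as a one-line helper. This is a sketch only: the function name `svc_fqdn` is invented here, and the defaults assume the `default` namespace and the `cluster.local` domain mentioned above.

```shell
# Build the DNS name of a Service.
# Arguments: service name, namespace (default: default),
# cluster domain (default: cluster.local)
svc_fqdn() {
  local name=$1 namespace=${2:-default} domain=${3:-cluster.local}
  printf '%s.%s.svc.%s\n' "$name" "$namespace" "$domain"
}

svc_fqdn my-svc my-namespace
svc_fqdn kubernetes
```

The second call yields the full name behind the short `nslookup kubernetes` test: a pod in the default namespace can use the short name because its resolver search path appends the rest.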

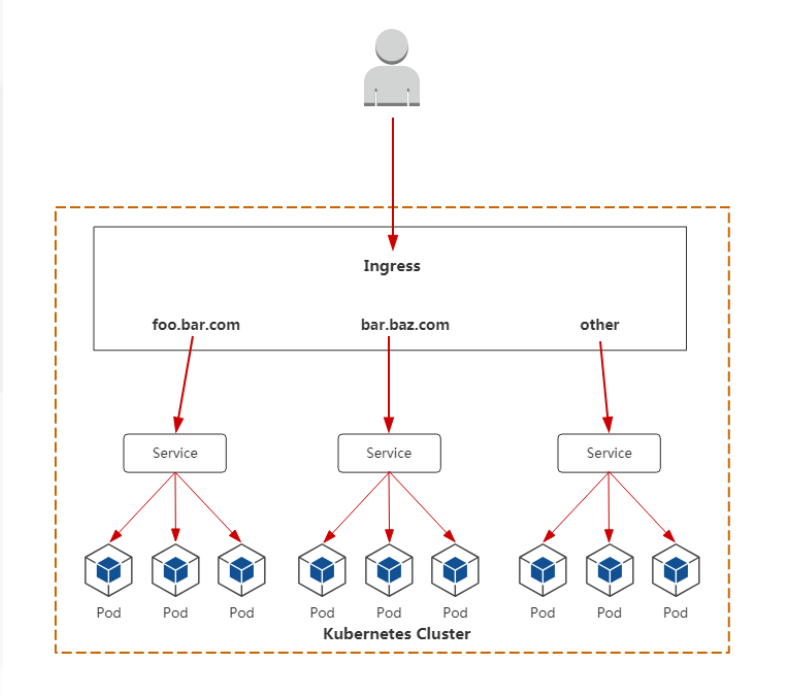

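Ingress

- This is the recommended way to expose services externally:

User -> domain name -> load balancer -> Ingress Controller (Node) -> Pod

- Supports custom access policies per Service;

- Only supports access policies based on domain names;

- Supports TLS;

- An Ingress is associated with Pods through a Service

- The Ingress Controller load-balances across the Pods

  - Supports layer 4 (TCP/UDP) and layer 7 (HTTP)

The Ingress Controller runs on the worker nodes.

Ingress deployment documentation

Official docs

- Notes:

• Change the image to a mirror reachable from mainland China: lizhenliang/nginx-ingress-controller:0.20.0

• Use the host network: hostNetwork: true

• Make sure ports 80 and 443 on the nodes are not in use

• kube-proxy on every node must be configured for ipvs, with the same scheduling algorithm everywhere; check with ipvsadm -ln.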

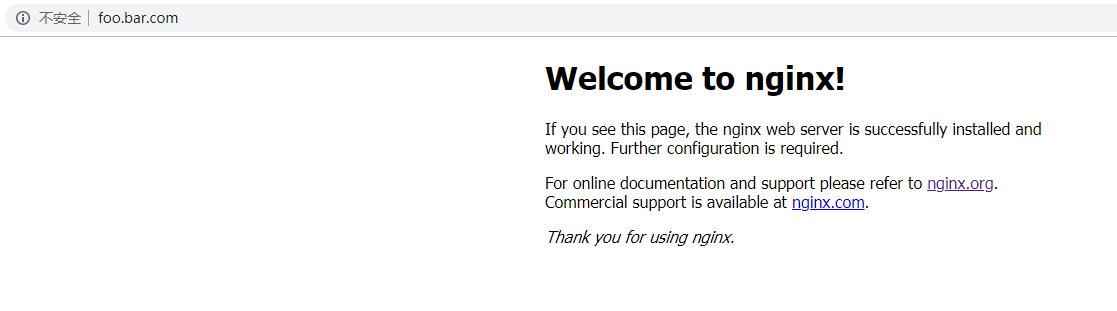

- Example 1: HTTP forwarding with Ingress

ingress_test.yaml (only the last two fields need changing)

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: simple-fanout-example
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /
    spec:
      rules:
      - host: foo.bar.com
        http:
          paths:
          - path: /
            backend:
              serviceName: nginx-service
              servicePort: 80

# kubectl apply -f ingress_test.yaml

ingress.extensions/simple-fanout-example created

# kubectl get ingress

    NAME                    HOSTS         ADDRESS   PORTS   AGE
    simple-fanout-example   foo.bar.com             80      35s

Add a hosts entry on the host machine:

192.168.33.8 foo.bar.com

Open http://foo.bar.com/ in a browser to reach the Service.

- How it works

Enter the Ingress controller instance

# kubectl get pods -n ingress-nginx

    NAME                                        READY   STATUS    RESTARTS   AGE
    nginx-ingress-controller-7dcb4bbb8d-jtfvr   1/1     Running   0          15h

# kubectl exec -it nginx-ingress-controller-7dcb4bbb8d-jtfvr bash -n ingress-nginx

www-data@Fone8:/etc/nginx$ ps -ef | grep nginx

    ...
    www-data 7 6 1 May21 ? 00:10:19 /nginx-ingress-controller --configmap=ingress-nginx/nginx-configuration --publish-service=ingress-nginx/ingress-nginx --annotations-prefix=nginx.ingress.kubernetes.io
    ...

This process watches the api-server for changes to any Service and rewrites the nginx configuration file /etc/nginx/nginx.conf as soon as something changes.

-

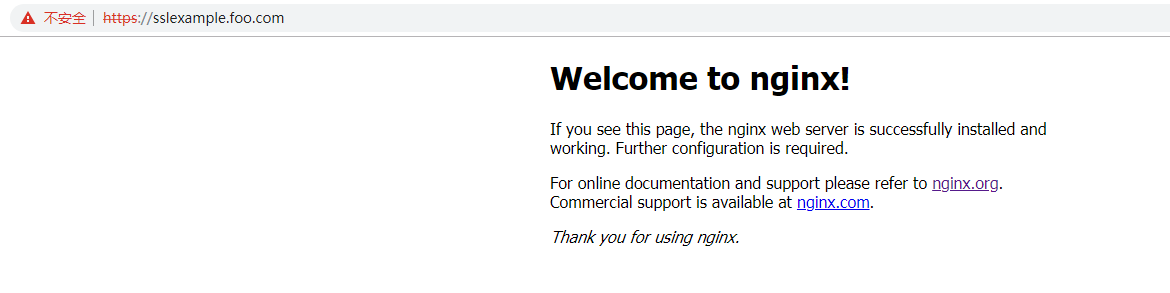

饭粒2:Ingress实现

https转发

- 自签证书

# vim certs.sh cat > ca-config.json <<EOF { "signing": { "default": { "expiry": "87600h" }, "profiles": { "kubernetes": { "expiry": "87600h", "usages": [ "signing", "key encipherment", "server auth", "client auth" ] } } } } EOF cat > ca-csr.json <<EOF { "CN": "kubernetes", "key": { "algo": "rsa", "size": 2048 }, "names": [ { "C": "CN", "L": "Beijing", "ST": "Beijing", } ] } EOF cfssl gencert -initca ca-csr.json | cfssljson -bare ca - cat > sslexample.foo.com-csr.json <<EOF { "CN": "sslexample.foo.com", "hosts": [], "key": { "algo": "rsa", "size": 2048 }, "names": [ { "C": "CN", "L": "BeiJing", "ST": "BeiJing" } ] } EOF cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes sslexample.foo.com-csr.json | cfssljson -bare sslexample.foo.com kubectl create secret tls sslexample.foo.com --cert=sslexample.foo.com.pem --key=sslexample.foo.com-key.pem # sh certs.sh # ls sslexample.*.pem sslexample.foo.com-key.pem sslexample.foo.com.pem # 生成认证 # kubectl create secret tls sslexample-foo-com --cert=sslexample.foo.com.pem --key=sslexample.foo.com-key.pem # kubectl get secret NAME TYPE DATA AGE ... sslexample-foo-com kubernetes.io/tls 2 19s ... - ingress_https.yaml(修改secretName和最下面两行即可)

apiVersion: extensions/v1beta1 kind: Ingress metadata: name: tls-example-ingress spec: tls: - hosts: - sslexample.foo.com secretName: sslexample-foo-com rules: - host: sslexample.foo.com http: paths: - path: / backend: serviceName: nginx servicePort: 88# kubectl apply -f ingress_https.yaml ingress.extensions/tls-example-ingress created # kubectl get ingress NAME HOSTS ADDRESS PORTS AGE simple-fanout-example foo.bar.com 80 48m tls-example-ingress sslexample.foo.com 80, 443 21s - 在宿主机添加hosts解析:192.168.33.8 sslexample.foo.com

在浏览器访问https://sslexample.foo.com

Volumes

- Volume (official docs)

- A Kubernetes Volume lets containers mount external storage.

- A Pod can use a Volume only after both the volume source (spec.volumes) and the mount point (spec.containers.volumeMounts) have been set.

- emptyDir

  - Creates an empty volume and mounts it into the Pod's containers. The volume is deleted together with the Pod.

  - Use case: sharing data between containers in a Pod.

  - Example: emptyDir.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: my-pod
    spec:
      containers:
      - name: write
        image: centos
        command: ["bash","-c","for i in {1..100};do echo $i >> /data/hello;sleep 1;done"]
        volumeMounts:
        - name: data
          mountPath: /data
      - name: read
        image: centos
        command: ["bash","-c","tail -f /data/hello"]
        volumeMounts:
        - name: data
          mountPath: /data
      volumes:
      - name: data
        emptyDir: {}

Create it with kubectl apply -f emptyDir.yaml and check its status with kubectl get pods

View the logs of each container:

kubectl logs my-pod -c write

kubectl logs my-pod -c read -f

- hostPath

  - Mounts a file or directory from the Node's file system into the Pod's containers.

  - Use case: containers in a Pod need access to files on the host

  - Example

    apiVersion: v1
    kind: Pod
    metadata:
      name: my-pod2
    spec:
      containers:
      - name: busybox
        image: busybox
        args:
        - /bin/sh
        - -c
        - sleep 36000
        volumeMounts:
        - name: data
          mountPath: /data
      volumes:
      - name: data
        hostPath:
          path: /tmp
          type: Directory

Create it with kubectl apply -f hostPath.yaml, then check its status and the Node it runs on with kubectl get pods -o wide

Enter the instance and compare the contents of /data with /tmp on that Node:

kubectl exec -it my-pod2 sh

- Persistent storage with NFS

- Configure NFS

  - Install the NFS client on every Node:

    yum install -y nfs-utils

  - Export the directory (on one Node only; the same goes for the next step)

    # vim /etc/exports
    /data/nfs *(rw,no_root_squash)

  - Start the daemon

    systemctl start nfs

- Example

NFS_test.yaml

    apiVersion: apps/v1beta1
    kind: Deployment
    metadata:
      name: nfs-deployment
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx
            volumeMounts:
            - name: wwwroot
              mountPath: /usr/share/nginx/html
            ports:
            - containerPort: 80
          volumes:
          - name: wwwroot
            nfs:
              server: 192.168.33.9
              path: /data/nfs

Start the instance

# kubectl apply -f NFS_test.yaml

# kubectl get pods

# kubectl get svc -o wide # find the port of the Service whose SELECTOR matches

    NAME            TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE     SELECTOR
    kubernetes      ClusterIP   10.0.0.1     <none>        443/TCP        4d1h    <none>
    my-service      ClusterIP   10.0.0.123   <none>        80/TCP         47h     app=nginx
    my-service2     NodePort    10.0.0.210   <none>        80:30008/TCP   45h     app=nginx
    nginx           NodePort    10.0.0.186   <none>        88:37361/TCP   3d22h   run=nginx
    nginx-service   NodePort    10.0.0.235   <none>        80:49236/TCP   2d16h   app=nginx

On the NFS server, write the following to /data/nfs/index.html

<h1>Hello World!!!</h1>

Enter an instance and check that the file shows up:

# kubectl exec -it nfs-deployment-6b86fcf776-7kzmv bash

root@nfs-deployment-6b86fcf776-7kzmv:/# ls /usr/share/nginx/html/

root@nfs-deployment-6b86fcf776-7kzmv:/# ls /usr/share/nginx/html/

index.html

Open http://192.168.33.8:49236/ in a browser

After the instances are deleted and recreated, the browser can still reach index.html.

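Deploying a company project on Kubernetes

- Deployment steps

- Understand the project to be deployed

  - Business architecture and services (dubbo, spring cloud)

  - Third-party services, e.g. mysql, redis, zookeeper, eureka, mq

  - How the services communicate with each other

  - Resource consumption: hardware resources, bandwidth

- K8s resources used when deploying the project

  - Use namespaces to isolate projects or environments (test, prod, dev)

  - Stateless applications (deployment)

  - Stateful applications (statefulset, pv, pvc)

  - External access (Service, Ingress)

  - secret, configmap

- Project base image

- Orchestration (YAML)

The image is the deliverable.

  - Project build (Java): in a CI/CD environment this stage runs automatically (pull code -> compile and build -> package the image -> push to the image registry)

  - Write YAML files that use this image

- Workflow

kubectl -> YAML -> pull the image from the registry -> Service (access inside the cluster) / Ingress (exposed to external users)

- Installing harbor

  - Download the installer (official site; mirror download of version 1.9)

  - Unpack it, enter the unpacked directory and edit the configuration file: set hostname in harbor.yml to the machine's IP

  - Run ./prepare to generate the configuration, then run the install script ./install.sh

  - Start the containers: docker-compose up -d

    Check the container status: docker-compose ps

  - Open the harbor registry from the host machine (port 80): 192.168.33.9

    Default username and password: admin:Harbor12345

  - Add the registry as a trusted (insecure) registry in /etc/docker/daemon.json on every Node and restart docker

    # vim /etc/docker/daemon.json
    {"registry-mirrors": ["http://bc437cce.m.daocloud.io"],
     "insecure-registries": ["192.168.33.9"]
    }
    # systemctl restart docker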

- Deploying the Java project

  - Install Java and maven

  - Download the Java project

  - Enter the project directory and build it with maven

    /usr/local/src/apache-maven-3.6.3/bin/mvn clean package

  - Build the image:

    docker build -t 192.168.33.9/project/java-demo:lastest .

    Output when the build finishes:

    Successfully built 2de0871198e3
    Successfully tagged 192.168.33.9/project/java-demo:lastest

  - Push the image to the harbor registry:

    # docker login 192.168.33.9 # enter the harbor username and password admin:Harbor12345
    # docker push 192.168.33.9/project/java-demo:lastest

  - Orchestrate with YAML (apply the YAML files below one at a time with kubectl create -f xxx.yaml; make sure each one has been created successfully with kubectl get pod -n test before applying the next)

namespace.yaml

    apiVersion: v1
    kind: Namespace
    metadata:
      name: test

Create the registry secret:

    # kubectl create secret docker-registry registry-pull-secret --docker-username=admin --docker-password=Harbor12345 --docker-email=zouff@yonyou.com --docker-server=192.168.33.9 -n test
    # kubectl get secret -n test # check that it was created

deployment.yaml

    apiVersion: apps/v1beta1
    kind: Deployment
    metadata:
      name: tomcat-java-demo
      namespace: test
    spec:
      replicas: 3
      selector:
        matchLabels:
          project: www
          app: java-demo
      template:
        metadata:
          labels:
            project: www
            app: java-demo
        spec:
          imagePullSecrets:
          - name: registry-pull-secret
          containers:
          - name: tomcat
            image: 192.168.33.9/project/java-demo:lastest
            imagePullPolicy: Always
            ports:
            - containerPort: 8080
              name: web
              protocol: TCP
            resources:
              requests:
                cpu: 0.5
                memory: 1Gi
              limits:
                cpu: 1
                memory: 2Gi
            livenessProbe:
              httpGet:
                path: /
                port: 8080
              initialDelaySeconds: 60
              timeoutSeconds: 20
            readinessProbe:
              httpGet:
                path: /
                port: 8080
              initialDelaySeconds: 60
              timeoutSeconds: 20

service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: tomcat-java-demo
      namespace: test
    spec:
      selector:
        project: www
        app: java-demo
      ports:
      - name: web
        port: 80
        targetPort: 8080

ingress.yaml

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: tomcat-java-demo
      namespace: test
    spec:
      rules:
      - host: java.ctnrs.com
        http:
          paths:
          - path: /
            backend:
              serviceName: tomcat-java-demo
              servicePort: 80

mysql.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: mysql
    spec:
      ports:
      - port: 3306
        name: mysql
      clusterIP: None
      selector:
        app: mysql-public
    ---
    apiVersion: apps/v1beta1
    kind: StatefulSet
    metadata:
      name: db
    spec:
      serviceName: "mysql"
      template:
        metadata:
          labels:
            app: mysql-public
        spec:
          containers:
          - name: mysql
            image: mysql:5.7
            env:
            - name: MYSQL_ROOT_PASSWORD
              value: "123456"
            - name: MYSQL_DATABASE
              value: test
            ports:
            - containerPort: 3306
            volumeMounts:
            - mountPath: "/var/lib/mysql"
              name: mysql-data
      volumeClaimTemplates:
      - metadata:
          name: mysql-data
        spec:
          accessModes: ["ReadWriteMany"]
          storageClassName: "managed-nfs-storage"
          resources:
            requests:
              storage: 2Gi

Import the data into the database:

    [root@Fone8 tomcat-java-demo]# scp db/tables_ly_tomcat.sql master:/root
    [root@Fone7 java-demo]# kubectl cp /root/tables_ly_tomcat.sql db-0:/
    root@db-0:/# mysql -uroot -p123456
    mysql> source /tables_ly_tomcat.sql;
    [root@Fone7 java-demo]# kubectl describe pod db-0 # look up the pod IP
    [root@Fone8 tomcat-java-demo]# vim src/main/resources/application.yml # change the IP of the backend database
    ...
    url: jdbc:mysql://172.17.87.10:3306/test?characterEncoding=utf-8
    ...
    [root@Fone8 tomcat-java-demo]# /usr/local/src/apache-maven-3.6.3/bin/mvn clean package # rebuild
    [root@Fone8 tomcat-java-demo]# docker build -t 192.168.33.9/project/java-demo:lastest . # rebuild the image

Open the domain java.ctnrs.com in a browser

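Monitoring Kubernetes cluster resources

- Monitoring metrics

- Cluster monitoring

• Node resource utilisation

• Number of nodes

• Running Pods

- Pod monitoring

• Kubernetes metrics

• Container metrics

• Application metrics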

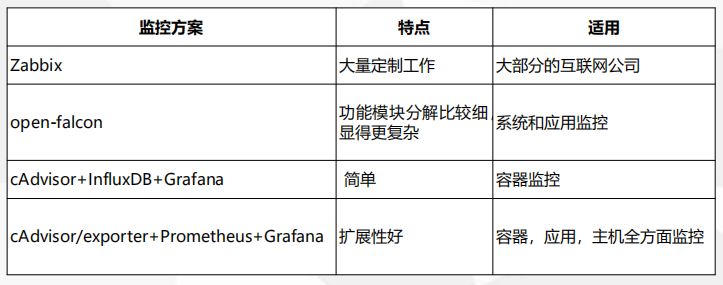

- Monitoring solutions

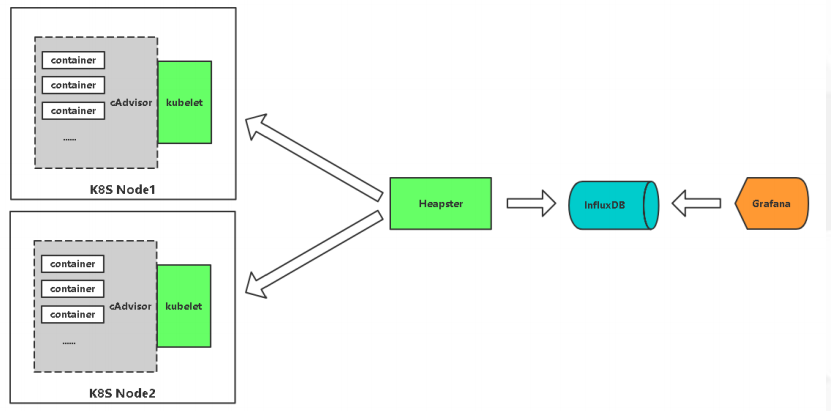

- Deploying the Heapster + InfluxDB + Grafana monitoring stack

- Architecture diagram

- Open the metrics port on every node

    # vim /opt/kubernetes/cfg/kubelet.config
    ...
    readOnlyPort: 10255
    ...
    # systemctl restart kubelet
    # curl 192.168.33.8:10255/metrics

- Deploy influxdb, heapster and grafana

influxdb.yaml

    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: monitoring-influxdb
      namespace: kube-system
    spec:
      replicas: 1
      template:
        metadata:
          labels:
            task: monitoring
            k8s-app: influxdb
        spec:
          containers:
          - name: influxdb
            image: registry.cn-hangzhou.aliyuncs.com/google-containers/heapster-influxdb-amd64:v1.1.1
            volumeMounts:
            - mountPath: /data
              name: influxdb-storage
          volumes:
          - name: influxdb-storage
            emptyDir: {}
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        task: monitoring
        kubernetes.io/cluster-service: 'true'
        kubernetes.io/name: monitoring-influxdb
      name: monitoring-influxdb
      namespace: kube-system
    spec:
      ports:
      - port: 8086
        targetPort: 8086
      selector:
        k8s-app: influxdb

heapster.yaml

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: heapster
      namespace: kube-system
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: heapster
    roleRef:
      kind: ClusterRole
      name: cluster-admin
      apiGroup: rbac.authorization.k8s.io
    subjects:
    - kind: ServiceAccount
      name: heapster
      namespace: kube-system
    ---
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: heapster
      namespace: kube-system
    spec:
      replicas: 1
      template:
        metadata:
          labels:
            task: monitoring
            k8s-app: heapster
        spec:
          serviceAccountName: heapster
          containers:
          - name: heapster
            image: registry.cn-hangzhou.aliyuncs.com/google-containers/heapster-amd64:v1.4.2
            imagePullPolicy: IfNotPresent
            command:
            - /heapster
            - --source=kubernetes:https://10.0.0.1
            - --sink=influxdb:http://10.0.0.188:8086
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        task: monitoring
        kubernetes.io/cluster-service: 'true'
        kubernetes.io/name: Heapster
      name: heapster
      namespace: kube-system
    spec:
      ports:
      - port: 80
        targetPort: 8082
      selector:
        k8s-app: heapster

grafana.yaml

    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: monitoring-grafana
      namespace: kube-system
    spec:
      replicas: 1
      template:
        metadata:
          labels:
            task: monitoring
            k8s-app: grafana
        spec:
          containers:
          - name: grafana
            image: registry.cn-hangzhou.aliyuncs.com/google-containers/heapster-grafana-amd64:v4.4.1
            ports:
            - containerPort: 3000
              protocol: TCP
            volumeMounts:
            - mountPath: /var
              name: grafana-storage
            env:
            - name: INFLUXDB_HOST
              value: monitoring-influxdb
            - name: GF_AUTH_BASIC_ENABLED
              value: "false"
            - name: GF_AUTH_ANONYMOUS_ENABLED
              value: "true"
            - name: GF_AUTH_ANONYMOUS_ORG_ROLE
              value: Admin
            - name: GF_SERVER_ROOT_URL
              value: /
          volumes:
          - name: grafana-storage
            emptyDir: {}
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        kubernetes.io/cluster-service: 'true'
        kubernetes.io/name: monitoring-grafana
      name: monitoring-grafana
      namespace: kube-system
    spec:
      type: NodePort
      ports:
      - port: 80
        targetPort: 3000
      selector:
        k8s-app: grafana

- After startup, look up the port of the grafana Service

Check that the pods are running with kubectl get pods -n kube-system, find the NodePort with kubectl get svc -n kube-system, then open masterIP:port in a browser

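Log collection on Kubernetes

- Kinds of logs to collect

• Logs of the K8S system components

• Logs of the applications deployed in the K8S cluster

- How to collect logs from containers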

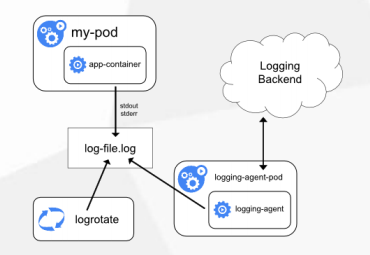

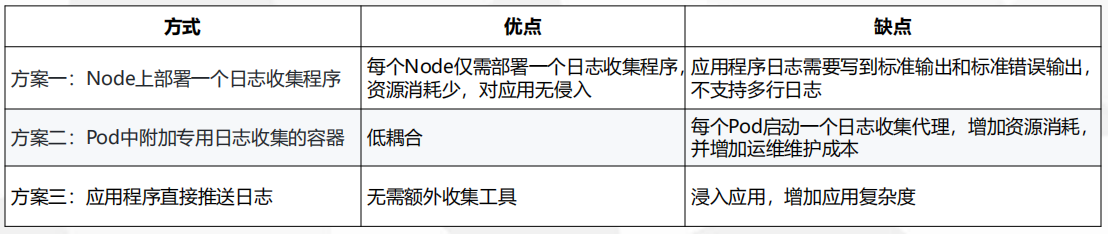

- Run a log collector on each Node

• Deploy the log collector as a DaemonSet

• Collect the logs under /var/log and /var/lib/docker/containers/ on the local node

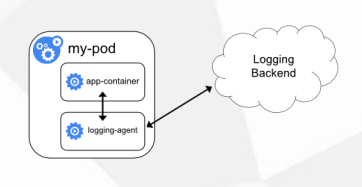

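- Attach a dedicated log-collection container to the Pod

• Add a log-collection container to every Pod that runs an application, and share the log directory through an emptyDir volume so the collector can read it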

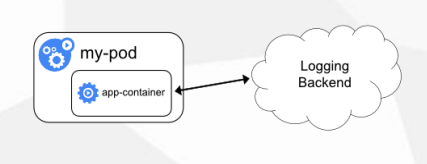

- The application pushes its logs directly

• Outside the scope of Kubernetes

- Comparison

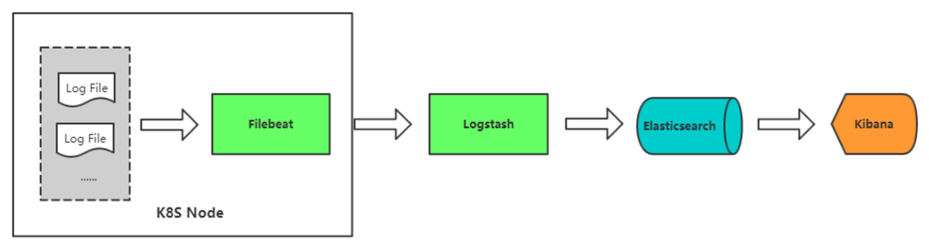

- Logging solution: Filebeat + ELK

- Install logstash, elasticsearch and kibana

- Configuration files

/etc/kibana/kibana.yml

Uncomment the following lines:

    server.port: 5601
    server.host: "0.0.0.0"
    elasticsearch.hosts: ["http://localhost:9200"]

Create /etc/logstash/conf.d/logstash-to-es.conf

    input {
      beats {
        port => 5044
      }
    }
    filter {
    }
    output {
      elasticsearch {
        hosts => ["http://127.0.0.1:9200"]
        index => "k8s-log-%{+YYYY.MM.dd}"
      }
      stdout { codec => rubydebug }
    }

- Deploy filebeat (collects logs on every node). Port 5044 is the Logstash beats input configured above, so filebeat sends to Logstash rather than directly to Elasticsearch.

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: k8s-logs-filebeat-config
      namespace: kube-system
    data:
      filebeat.yml: |
        filebeat.inputs:
          - type: log
            paths:
              - /var/log/messages
            fields:
              app: k8s
              type: module
            fields_under_root: true

        setup.ilm.enabled: false
        setup.template.name: "k8s-module"
        setup.template.pattern: "k8s-module-*"

        output.logstash:
          hosts: ['192.168.33.8:5044']
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: k8s-logs
      namespace: kube-system
    spec:
      selector:
        matchLabels:
          project: k8s
          app: filebeat
      template:
        metadata:
          labels:
            project: k8s
            app: filebeat
        spec:
          containers:
          - name: filebeat
            image: elastic/filebeat:7.7.0
            args: ["-c", "/etc/filebeat.yml", "-e"]
            resources:
              requests:
                cpu: 100m
                memory: 100Mi
              limits:
                cpu: 500m
                memory: 500Mi
            securityContext:
              runAsUser: 0
            volumeMounts:
            - name: filebeat-config
              mountPath: /etc/filebeat.yml
              subPath: filebeat.yml
            - name: k8s-logs
              mountPath: /var/log/messages
          volumes:
          - name: k8s-logs
            hostPath:
              path: /var/log/messages
          - name: filebeat-config
            configMap:
              name: k8s-logs-filebeat-config
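The Logstash output pattern `k8s-log-%{+YYYY.MM.dd}` creates one Elasticsearch index per day, named after the event's UTC date. To know which index to look for, the name can be reproduced in shell. This is a sketch under assumptions: the helper name `index_for_date` is invented, it needs GNU `date` for the `-d` option, and the `curl` line is only printed, since it presumes Elasticsearch is listening on 127.0.0.1:9200 as in the Logstash config.

```shell
# Build the daily index name matching k8s-log-%{+YYYY.MM.dd}
# for a given date (YYYY-MM-DD). Requires GNU date.
index_for_date() {
  date -u -d "$1" +'k8s-log-%Y.%m.%d'
}

index_for_date 2020-05-20

# Today's index, and a query that would list it on the Elasticsearch host.
today_index=$(date -u +'k8s-log-%Y.%m.%d')
echo "curl -s 'http://127.0.0.1:9200/_cat/indices/${today_index}?v'"
```

If the index for today is missing, the usual suspects are the filebeat pods and the Logstash beats input on port 5044.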

- Deploying consul on k8s

- iptables SNAT optimisation

- Delete the existing SNAT rule:

    yum install -y iptables-services
    iptables-save | grep -i postrouting
    iptables -t nat -D POSTROUTING -s 172.17.6.0/24 ! -o docker0 -j MASQUERADE

- Add the updated SNAT rule:

    iptables -t nat -I POSTROUTING -s 172.17.6.0/24 ! -d 172.17.0.0/16 ! -o docker0 -j MASQUERADE
    iptables-save | grep -i POSTROUTING
    iptables-save > /etc/sysconfig/iptables

Note:

If the network stops working after the update, check for reject rules and delete them

    iptables-save | grep -i reject
    iptables -D …

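- Rolling upgrade of k8s

- Notes:

  - Upgrade during a low-traffic period

  - Upgrade the nodes that run the fewest pods first

- Steps:

  - Take the nodes offline one at a time

    Remove the node from the load balancer (e.g. nginx)

    kubectl delete node node02

    kubectl get node

  - Unpack the kubernetes binary package and create a symlink in the installation directory that points to the unpacked directory

  - In the unpacked directory and its bin directory, delete the archives and the _tag files

    Copy all .sh scripts from the old version's bin directory to the new version's bin directory

  - Create cert and conf directories in the unpacked directory and copy the certificates and configuration files into them

  - Restart kube-apiserver, etcd, controller-manager, kubelet, kube-proxy and flanneld in turn

    The node rejoins the cluster automatically when this is done

    Repeat the steps above on the remaining nodes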

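This article covers Kubernetes deployment in detail, including the Dashboard, a multi-master cluster, load balancing and the kubectl command-line tool. It looks closely at Pods, Services, volumes, Ingress and log collection, and also covers the steps for deploying a company project on Kubernetes and strategies for monitoring cluster resources.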
