1. Configuring Hadoop

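Use a symbolic link so that the three configuration modes (standalone, pseudo-distributed, and fully distributed) can coexist.

(1) Create three configuration directories, each starting as a copy of the hadoop directory:

${hadoop_home}/etc/local

${hadoop_home}/etc/pseudo

${hadoop_home}/etc/full

Hadoop on CentOS may fail to read the JAVA_HOME environment variable, so set the JDK path by hand before copying. Edit ${hadoop_home}/etc/hadoop/hadoop-env.sh and add:

export JAVA_HOME=<path to your JDK>

(2) Go to ${hadoop_home}/etc and copy the hadoop directory once per mode:

$>cd ${hadoop_home}/etc

$>cp -r hadoop local

$>cp -r hadoop pseudo

$>cp -r hadoop full

(3) Remove the original hadoop directory (its contents now live in the three copies), then create a symbolic link named hadoop that points to the mode you want: local, pseudo, or full. If the directory were left in place, ln would create the link inside it instead of replacing it. For example, for pseudo-distributed mode:

$>rm -rf hadoop

$>ln -s pseudo hadoop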
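Switching modes later only requires re-pointing the hadoop link. The following is a minimal sketch of that step, assuming the configuration directories sit side by side under one etc directory as described above. The function name `switch_mode` is hypothetical and not part of Hadoop.

```bash
#!/bin/bash
# Re-point <etc_dir>/hadoop at <etc_dir>/<mode>.
# switch_mode is a hypothetical helper name, not a Hadoop command.
switch_mode() {
    local etc_dir=$1 mode=$2
    if [ ! -d "$etc_dir/$mode" ]; then
        echo "no such config directory: $etc_dir/$mode" >&2
        return 1
    fi
    # Refuse to touch a real directory; only an existing symlink may be replaced.
    if [ -e "$etc_dir/hadoop" ] && [ ! -L "$etc_dir/hadoop" ]; then
        echo "$etc_dir/hadoop is not a symlink; move it aside first" >&2
        return 1
    fi
    # -s: symbolic link, -f: replace an existing link,
    # -n: treat the old link as a file instead of following it into its target
    ln -sfn "$mode" "$etc_dir/hadoop"
}
```

For example, `switch_mode "${hadoop_home}/etc" full` selects the fully distributed configuration. The link target is relative, so the etc directory can be copied to another host without breaking the link.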

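(4) Format HDFS

$>hdfs namenode -format

(The older form, hadoop namenode -format, is deprecated in Hadoop 3 in favor of the hdfs command.)

(5) Start all Hadoop daemons

$>start-all.sh

(6) Check the running processes

$>jps

If NameNode, DataNode, SecondaryNameNode, ResourceManager, and NodeManager are all listed, the startup succeeded.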
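Checking the jps listing by eye is easy to get wrong, so the check can be scripted. This is a sketch only: `missing_daemons` is a hypothetical helper, and the list of five daemon names assumes a single-node setup where every HDFS and YARN daemon runs on the same machine.

```bash
#!/bin/bash
# Read jps-style output ("<pid> <ClassName>" per line) on stdin and print
# every expected daemon that is absent. Returns 0 only when none is missing.
# missing_daemons is a hypothetical helper name.
missing_daemons() {
    local output missing=0 d
    output=$(cat)
    for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # -w matches whole words, so NameNode does not match SecondaryNameNode
        if ! printf '%s\n' "$output" | grep -qw -- "$d"; then
            echo "missing: $d"
            missing=1
        fi
    done
    return "$missing"
}
```

Typical use is `jps | missing_daemons && echo "all daemons up"`.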

(7) Create a directory

$>hdfs dfs -mkdir -p /user/centos/hadoop

(8) List the directory

$>hdfs dfs -ls /

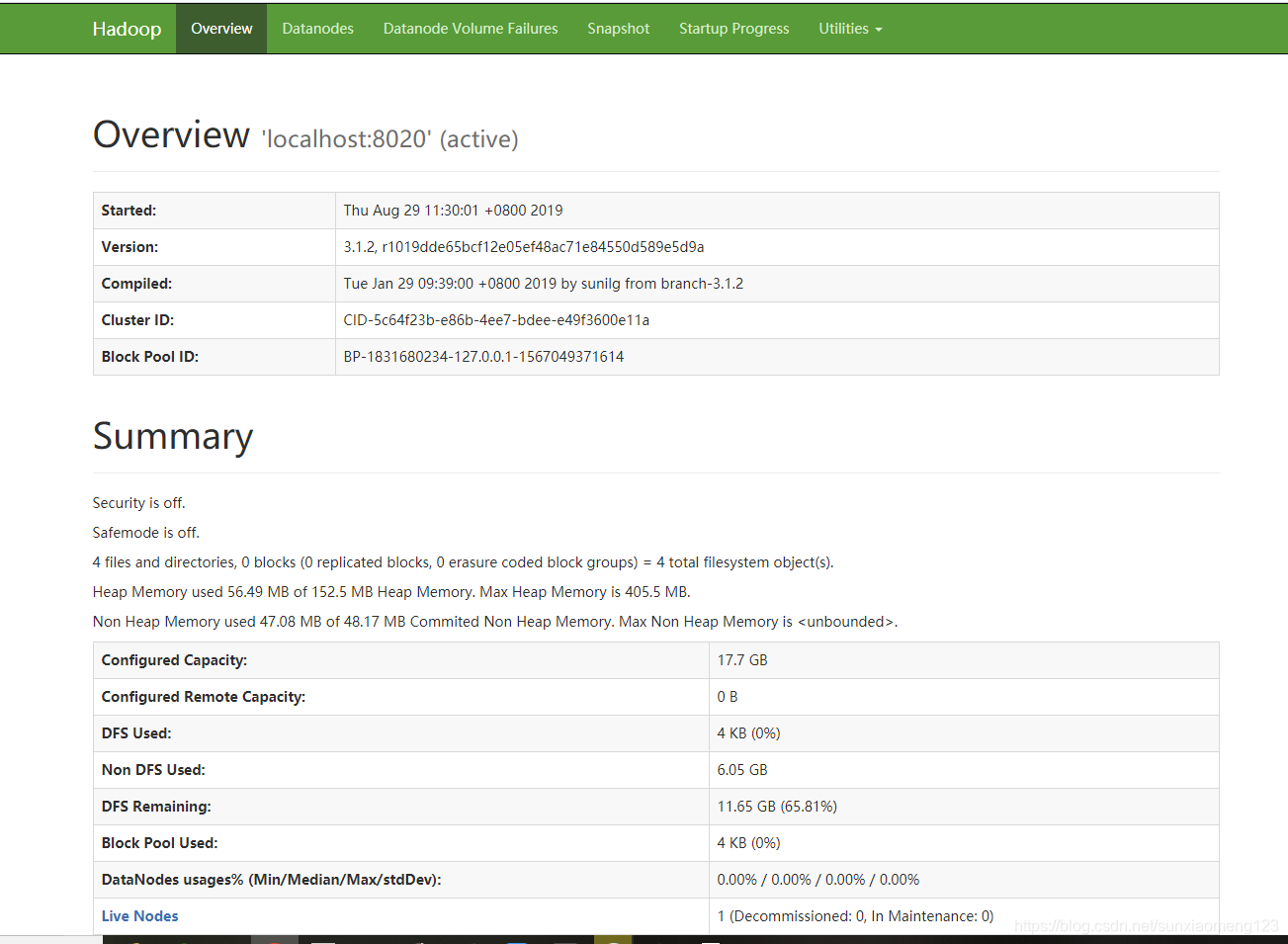

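(9) Open the NameNode web UI to browse the Hadoop file system

http://localhost:9870/ (replace localhost with the virtual machine's IP when browsing from another machine)

If the page loads, the setup works.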

2. The four main Hadoop scripts

1. start-all.sh // start all daemons

2. stop-all.sh // stop all daemons

3. start-dfs.sh stop-dfs.sh // start/stop the HDFS daemons: NN, DN, 2NN

4. start-yarn.sh stop-yarn.sh // start/stop the YARN daemons: RM, NM

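3. Preparing the hosts for fully distributed Hadoop

The fully distributed setup uses four virtual machines: the one with Hadoop already installed plus three clones of it. To clone:

1. Clone three clients (CentOS 7)

Right-click centos-7 --> Manage -> Clone -> ... -> Full clone

2. Start the clients

3. Enable shared folders for the guests.

Set /etc/hostname on the four virtual machines to s128, s129, s130, and s131 respectively.

Give all four virtual machines the same /etc/hosts file, with the following content: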

127.0.0.1 localhost

192.168.90.128 s128

192.168.90.129 s129

192.168.90.130 s130

192.168.90.131 s131
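The host names follow one convention: each name is s plus the last octet of the machine's address. A small sketch that generates the entries from that convention keeps names and addresses from drifting apart. The function name `hosts_entries` is hypothetical.

```bash
#!/bin/bash
# Print /etc/hosts entries for hosts s<first>..s<last> on the given network prefix.
# hosts_entries is a hypothetical helper name.
hosts_entries() {
    local prefix=$1 first=$2 last=$3 i
    echo "127.0.0.1 localhost"
    i=$first
    while [ "$i" -le "$last" ]; do
        # Host name = "s" + last octet of the address
        echo "$prefix.$i s$i"
        i=$((i + 1))
    done
}

hosts_entries 192.168.90 128 131
```

The output can be reviewed and then written to /etc/hosts on each machine as root.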

Set the static IP address in each machine's interface file [/etc/sysconfig/network-scripts/ifcfg-ethxxxx], one address per machine:

IPADDR=192.168.90.128

IPADDR=192.168.90.129

IPADDR=192.168.90.130

IPADDR=192.168.90.131

Finally, set /etc/resolv.conf on all four virtual machines to:

nameserver 192.168.90.2

Reboot all four machines, then check that each one can ping the others.
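The ping test can be run as one loop on each machine. This is a rough sketch: `check_hosts` is a hypothetical helper, and the `-c` and `-W` options are those of the Linux ping shipped with CentOS.

```bash
#!/bin/bash
# Ping each named host once and report the result.
# Returns non-zero if any host did not answer.
# check_hosts is a hypothetical helper name.
check_hosts() {
    local h failed=0
    for h in "$@"; do
        # -c 1: send one echo request; -W 2: wait at most 2 seconds for the reply
        if ping -c 1 -W 2 "$h" >/dev/null 2>&1; then
            echo "$h ok"
        else
            echo "$h UNREACHABLE"
            failed=1
        fi
    done
    return "$failed"
}
```

Run `check_hosts s128 s129 s130 s131` on every machine. An UNREACHABLE line usually points to a wrong /etc/hosts entry or IPADDR value on one side.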

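4. Fully distributed configuration (run every command on host s128)

(1) Delete /home/centos/.ssh/* on all hosts

(2) Generate a key pair on s128

$>ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

(3) Copy s128's public key file id_rsa.pub to s128 itself and to hosts s129 through s131, saving it as /home/centos/.ssh/authorized_keys. Run the commands from ~/.ssh.

$>scp id_rsa.pub centos@s128:/home/centos/.ssh/authorized_keys

This command may not work on s128 itself. In that case, append the key with cat instead:

$>cd ~/.ssh ; cat id_rsa.pub >> authorized_keys

Then copy the key to the other three hosts:

$>scp id_rsa.pub centos@s129:/home/centos/.ssh/authorized_keys

$>scp id_rsa.pub centos@s130:/home/centos/.ssh/authorized_keys

$>scp id_rsa.pub centos@s131:/home/centos/.ssh/authorized_keys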
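Passwordless login can still fail after the key is in place if the permissions are too open: with its default StrictModes setting, sshd ignores an authorized_keys file that others can write to. The sketch below appends a key only if it is not already present and then tightens the permissions. `install_pubkey` is a hypothetical helper name, and the sketch assumes the public key file holds a single line.

```bash
#!/bin/bash
# Append a public key to <ssh_dir>/authorized_keys unless it is already there,
# then apply permissions that sshd accepts under StrictModes.
# install_pubkey is a hypothetical helper name.
install_pubkey() {
    local pubkey_file=$1 ssh_dir=$2
    mkdir -p "$ssh_dir"
    touch "$ssh_dir/authorized_keys"
    # -x: whole-line match, -F: fixed string; avoids adding the same key twice
    if ! grep -qxF -- "$(cat "$pubkey_file")" "$ssh_dir/authorized_keys"; then
        cat "$pubkey_file" >> "$ssh_dir/authorized_keys"
    fi
    chmod 700 "$ssh_dir"
    chmod 600 "$ssh_dir/authorized_keys"
}
```

On s128 this would be called as `install_pubkey ~/.ssh/id_rsa.pub ~/.ssh`. For the remote hosts, OpenSSH's `ssh-copy-id centos@s129` performs a comparable append over the network.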

(4) Edit the fully distributed configuration (${hadoop_home}/etc/hadoop/)

[core-site.xml] is configured as follows

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://s128/</value>

</property>

</configuration>

[hdfs-site.xml] is configured as follows

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

</configuration>

[mapred-site.xml]

Unchanged.

[yarn-site.xml]

<?xml version="1.0"?>

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>s128</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>
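After editing several site files it helps to read a value back and confirm it is what was intended. The following is a rough sketch, not a real XML parser: `get_property` is a hypothetical helper, and it assumes every <name> and <value> element sits on a single line, as in the listings above.

```bash
#!/bin/bash
# Print the <value> that follows a given <name> in a Hadoop *-site.xml file.
# Prints nothing if the property is not present.
# get_property is a hypothetical helper name.
get_property() {
    local file=$1 name=$2
    awk -v want="$name" '
        # Remember the most recent property name
        /<name>/  { n = $0; sub(/.*<name>/, "", n);  sub(/<\/name>.*/, "", n) }
        # On a value line, print it if it belongs to the wanted name
        /<value>/ { v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
                    if (n == want) { print v; exit } }
    ' "$file"
}
```

For example, `get_property yarn-site.xml yarn.resourcemanager.hostname` should print s128 for the file above. On a node with Hadoop on the PATH, `hdfs getconf -confKey fs.defaultFS` reports the value that Hadoop itself resolves.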

[workers] is configured as follows (Hadoop 3 uses a workers file; Hadoop 2 uses a slaves file)

s129

s130

s131

(5) Distribute the configuration

$>cd /soft/hadoop/etc/

$>scp -r full centos@s129:/soft/hadoop-3.1.2/etc/

$>scp -r full centos@s130:/soft/hadoop-3.1.2/etc/

$>scp -r full centos@s131:/soft/hadoop-3.1.2/etc/

(6) Delete the symbolic links

$>cd /soft/hadoop-3.1.2/etc

$>rm hadoop

$>ssh s129 rm /soft/hadoop-3.1.2/etc/hadoop

$>ssh s130 rm /soft/hadoop-3.1.2/etc/hadoop

$>ssh s131 rm /soft/hadoop-3.1.2/etc/hadoop

(7) Create the symbolic links

$>cd /soft/hadoop-3.1.2/etc/

$>ln -s full hadoop

$>ssh s129 ln -s /soft/hadoop-3.1.2/etc/full /soft/hadoop-3.1.2/etc/hadoop

$>ssh s130 ln -s /soft/hadoop-3.1.2/etc/full /soft/hadoop-3.1.2/etc/hadoop

$>ssh s131 ln -s /soft/hadoop-3.1.2/etc/full /soft/hadoop-3.1.2/etc/hadoop

(8) Delete the temporary directories

$>cd /tmp

$>rm -rf hadoop-centos

$>ssh s129 rm -rf /tmp/hadoop-centos

$>ssh s130 rm -rf /tmp/hadoop-centos

$>ssh s131 rm -rf /tmp/hadoop-centos

(9) Delete the Hadoop logs

$>cd /soft/hadoop/logs

$>rm -rf *

$>ssh s129 rm -rf /soft/hadoop/logs/*

$>ssh s130 rm -rf /soft/hadoop/logs/*

$>ssh s131 rm -rf /soft/hadoop/logs/*

(10) Format the file system

$>hdfs namenode -format

(11) Start the Hadoop daemons

$>start-all.sh

Open http://192.168.90.128:9870 and click Datanodes. If three nodes are listed, the cluster is up.
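The same check can be made from the command line with `hdfs dfsadmin -report`. The sketch below pulls the node count out of the report text. `live_datanodes` is a hypothetical helper, and it assumes the report contains a line of the form "Live datanodes (N):", so verify that against the output of your Hadoop version.

```bash
#!/bin/bash
# Read `hdfs dfsadmin -report` output on stdin and print N from the
# "Live datanodes (N):" line. Prints nothing if no such line exists.
# live_datanodes is a hypothetical helper name.
live_datanodes() {
    sed -n 's/^Live datanodes (\([0-9][0-9]*\)):.*/\1/p' | head -n 1
}
```

Usage: `hdfs dfsadmin -report | live_datanodes`, which should print 3 for the cluster configured above.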

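5. Helper scripts (optional)

(1) xcall.sh: run a command on every host (passwordless login for the root user is recommended as well)

#!/bin/bash

# The command to run remotely is taken from all script arguments

params=$@

# Loop over hosts s128 through s131

for (( i = 128 ; i <= 131 ; i = $i + 1 )) ; do

echo ============= s$i $params =============

ssh s$i "$params"

done

Usage: xcall.sh rm -rf /tmp/hadoop-centos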

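(2) xsync.sh: sync a file or directory to the other hosts (requires rsync)

#!/bin/bash

# Require the path to sync as the first argument

if [[ $# -lt 1 ]] ; then echo no params ; exit 1 ; fi

p=$1

# Split the path into its directory and its file name

dir=$(dirname "$p")

filename=$(basename "$p")

# Resolve the directory to an absolute physical path (symlinks resolved)

cd "$dir" || exit 1

fullpath=$(pwd -P)

user=$(whoami)

# Copy to s129 through s131; s128 is the source host

for (( i = 129 ; i <= 131 ; i = $i + 1 )) ; do

echo ======= s$i =======

# After the cd above the file is addressed by name; -l keeps symlinks, -r recurses

rsync -lr "$filename" "${user}@s$i:$fullpath"

done

For example, syncing /home/etc/a.txt to s129 as user centos amounts to:

rsync -lr /home/etc/a.txt centos@s129:/home/etc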
