KNN是什么

KNN的原理就是当预测一个新的值x的时候,根据它距离最近的K个点是什么类别来判断x属于哪个类别

KNN实现步骤

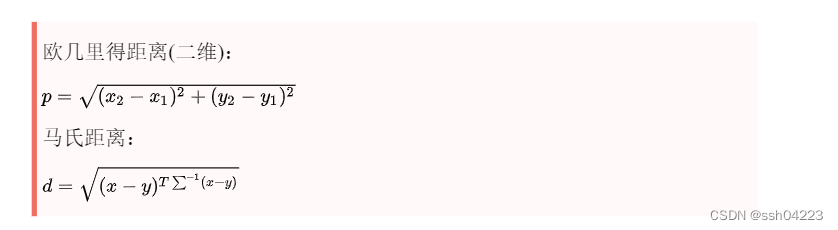

1.计算距离

欧几里得适用于二维

马氏适用于多维

2.升序排列

3.取前k个

4.加权平均

实践

1.打乱数据并分组

with open(".\Prostate_Cancer.csv","r") as f:

render = csv.DictReader(f)

datas = [row for row in render]

# 分组,打乱数据

random.shuffle(datas)#打乱数据

n = len(datas)//3#n为数据数的十分之三

test_data = datas[0:n]#零到n的数据为检测数据

train_data = datas[n:]#n到最后一位为训练数据

# print (train_data[0])

# print (train_data[0]["id"])

2.计算距离

def distance(x, y):#设置方法有xy参数

res = 0#res为0

for k in ("radius","texture","perimeter","area","smoothness","compactness","symmetry","fractal_dimension"):#

res += (float(x[k]) - float(y[k]))**2

return res ** 0.5

def knn(data,K):

# 1. 计算距离

res = [

{"result":train["diagnosis_result"],"distance":distance(data,train)}

for train in train_data

]

3.加权平均

# 2. 排序

sorted(res,key=lambda x:x["distance"])

# print(res)

# 3. 取前K个

res2 = res[0:K]

# 4. 加权平均

result = {"B":0,"M":0}

# 4.1 总距离

sum = 0

for r in res2:

sum += r["distance"]

# 4.2 计算权重

for r in res2 :

result[r['result']] += 1-r["distance"]/sum

# 4.3 得出结果

if result['B'] > result['M']:

return "B"

else:

return "M"

4.

# 预测结果和真实结果对比,计算准确率

for k in range(1,11):

correct = 0

for test in test_data:

result = test["diagnosis_result"]

result2 = knn(test,k)

if result == result2:

correct += 1

print("k="+str(k)+"时,准确率{:.2f}%".format(100*correct/len(test_data)))

本文详细介绍了KNN(K-Nearest Neighbors)算法的原理和步骤,并通过一个实际的癌症诊断案例展示了如何使用Python进行实现。首先,算法通过计算样本间的距离找到K个最近邻居,然后对这些邻居进行加权平均来预测新样本的类别。文章还提供了预测准确率的计算方法,以评估模型性能。

本文详细介绍了KNN(K-Nearest Neighbors)算法的原理和步骤,并通过一个实际的癌症诊断案例展示了如何使用Python进行实现。首先,算法通过计算样本间的距离找到K个最近邻居,然后对这些邻居进行加权平均来预测新样本的类别。文章还提供了预测准确率的计算方法,以评估模型性能。

1902

1902

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?