背景

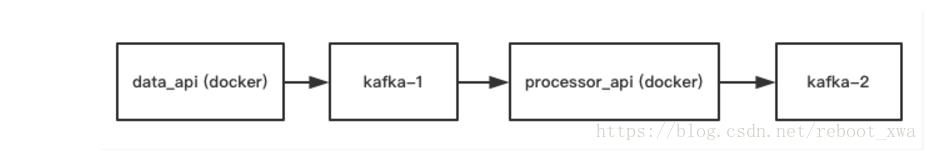

我们有个数据处理平台,有两个用 docker 运行的数据处理模块,分别是:data_api, 和 processor_api,故名思义:

data_api: 接受数据;

processor_api: 处理数据;

数据处理简单架构

1024程序员专属大礼(只限今天),82小节 Python 零基础视频,想要的加小助手wechat:17812796384

踩坑经过

一直以来,这两个模块都是相安无事,稳定得很,然而在九月份因为更新 kafka 连接地址重启了容器,就出了问题。

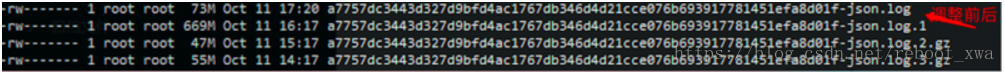

只要用过 docker 的童鞋,都会对 docker logs 很熟悉,这次问题就是,因为 docker 的日志狂刷,按照默认的配置,日志会全部写入 json.log,大约一小时就能刷出 2G 的日志;

于是感觉特别的神奇,跑了快两年都没这问题,改下链接地址就有这么多日志输出,但是明明容器是正常在工作的。

排查半天一直找不出原因,就先配置了日志转储才免得磁盘告警。

今天看到那一堆日志时,发现很多 kafka 链接失败日志:

...

[W 181011 14:18:24 conn:625] <BrokerConnection node_id=18 host=xxxx/xxxx(马赛克) port=9093>: close() called on disconnected connection with error: ConnectionError: Unable to connect to any of the names for xxxx/xxxx(马赛克):9093

[E 181011 14:18:24 conn:289] Unable to connect to any of the names for xxxx/xxxx(马赛克):9093

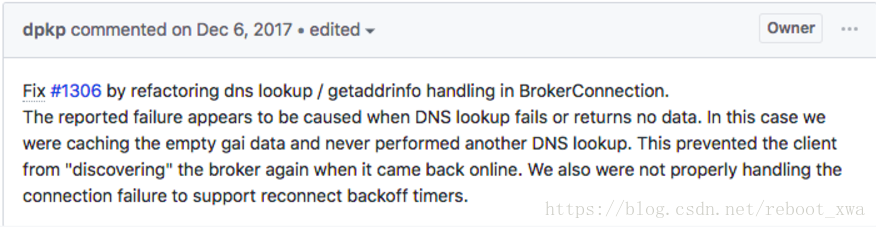

....之前以为是kafka架构的问题没去管,现在还是去谷歌一下,比较幸运地似乎找到一些原因和解决方案,

相关的链接:

大约的意思是因为查找域名失败导致这个bug触发了。

于是事不延迟,找台机器升级下 kafka-python 版本到 1.4.0 看看,升级完之后发现日志大幅度减少了。

升级后的日志大约是升级前的九分之一了,这样来看很明显就是 1.3.5 的问题了。本想着这样就愉快的解决了,然而调整完就有 kafka 消费延迟的告警了,因为一直时不时有少量的消费延迟,所以也没在意。

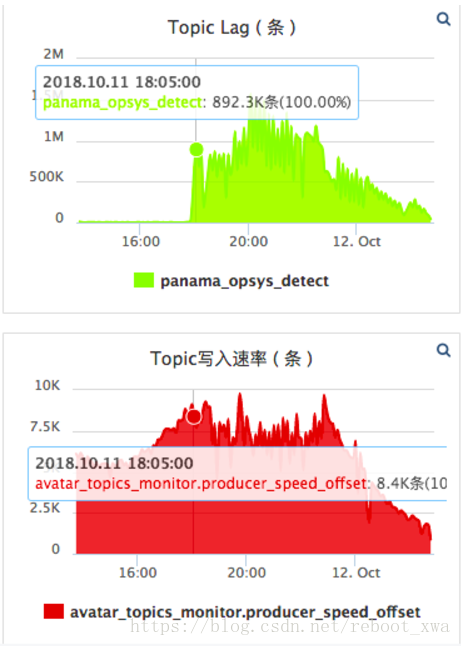

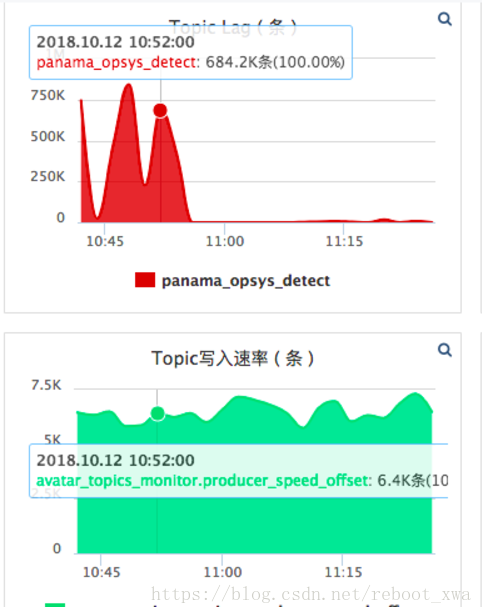

直到第二天,累积的延迟量已经触发了第二级别的阈值了,消费延迟超过 30 万条了,立马上监控看看

lag 图就是延迟条数了,大约 11 号 18点的时候,也就是我们更新版本重启容器之后,在数据写入并没多大改变情况下,lag 数拼命增长,直接去到 80 万了,而且后面还在持续上涨;

首先排除因素就是 processor_api 消费速度,因为在更新前,一直是不会有延迟这么多的。

先回滚到旧版本看看,看到延迟立马消失了。

基本就能定位这个消费延迟的问题是版本导致的。

既然是消费延迟,那就得看消费速度监控了。刚才已经说了,消费速度是绝对够的,只是不知道为什么还是有延迟而已。

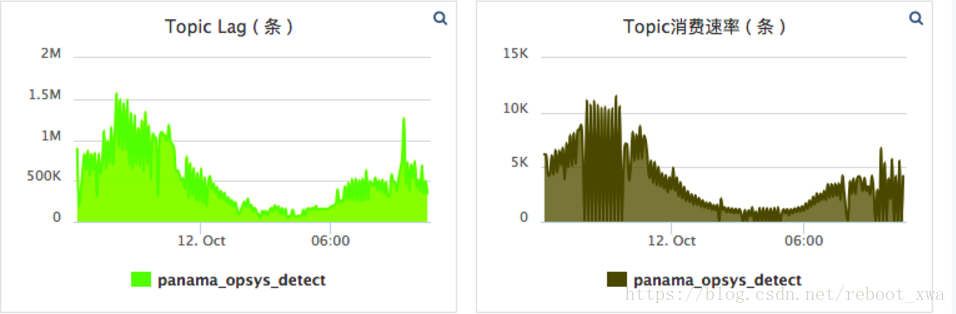

昨天到今天高延迟时的监控图图:

时间太长看不出什么问题,选小区间再看看:

这次看到消费图表,是断断续续的,而看消费者的日志,也看到时不时没有东西打印,仿佛消费完了那样。但是从延迟来看,数据应该是一直有的,不应该出现没有日志打印的情况。

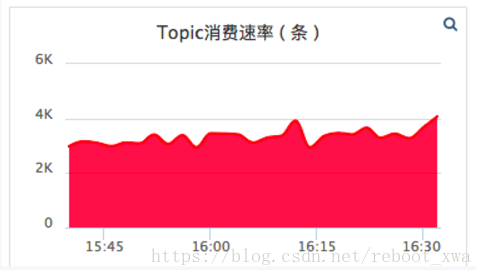

对比下正常时候的消费速率图:

正常消费是连续的平稳的,不应该是断断续续有尖峰的,怀疑是 kafka 消费权重没有均匀等问题,找了 kafka 的童鞋,看能不能看到当前 kafka 消费者分配情况。

kafka 童鞋给了一个神奇的回复,说 kafka 正在 rebalance ...

Consumer group `panama_opsys_detect` is rebalancing当 kafka 在 rebalancing 状态,是不能够消费的。这样看起来的话,应该是 kafka 在频繁的 rebalance 了。。

既然消费者进程和链接都没有变化,其实不应该短时间内频繁 rebalance 的。

因为前面的经验,所以现在都很大可能是版本问题了。

直接去 kafka-python 官网,找了较新的版本 1.4.2,更新之后,消费和日志都正常了。

转载请注明来源:https://segmentfault.com/a/1190000016667454

1076

1076

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?