0. Spark package download

mirror.bit.edu.cn/apache/spark/

1. Cluster environment

Master 172.16.71.10

Slave1 172.16.71.11

Slave2 172.16.71.12
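The rest of this guide refers to the nodes as master, slave1 and slave2, so those names must resolve on every node. Here is a minimal sketch that assumes /etc/hosts is used rather than DNS. The helper name `hosts_entries` is hypothetical, and the addresses are the ones listed above.

```shell
# Print /etc/hosts entries for the three nodes in this guide.
# Hypothetical helper; the IPs come from the cluster list above.
hosts_entries() {
  printf '%s %s\n' \
    172.16.71.10 master \
    172.16.71.11 slave1 \
    172.16.71.12 slave2
}
```

Running `hosts_entries >> /etc/hosts` as root on each node appends the entries. start-all.sh also reaches the workers over SSH, so this guide assumes passwordless SSH from master to each slave.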

2. Download the packages

#On master, in /usr/local/src

cd /usr/local/src

wget http://mirror.bit.edu.cn/apache/spark/spark-2.4.4/spark-2.4.4-bin-hadoop2.6.tgz

tar zxvf spark-2.4.4-bin-hadoop2.6.tgz

wget https://downloads.lightbend.com/scala/2.11.12/scala-2.11.12.tgz

tar zxvf scala-2.11.12.tgz
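The Spark 2.4.4 binary ships its own scala-library jar under jars/. Any Scala you install separately should match that version, and the configuration below points SCALA_HOME at scala-2.11.12. The following is a small sketch that reads the version from the jar's file name. `bundled_scala_version` is a hypothetical helper, not part of Spark.

```shell
# Print the Scala version bundled with a Spark distribution, taken from the
# name of the scala-library jar under $1/jars (e.g. scala-library-2.11.12.jar).
bundled_scala_version() {
  local jar
  for jar in "$1"/jars/scala-library-*.jar; do
    [ -e "$jar" ] || return 1     # the glob matched nothing
    jar=${jar##*/scala-library-}  # drop the directory and file-name prefix
    echo "${jar%.jar}"            # drop the .jar suffix
    return 0
  done
  return 1
}
```

For example, `bundled_scala_version /usr/local/src/spark-2.4.4-bin-hadoop2.6` should report a 2.11.x version for this build. If the output does not match your Scala download, get the matching Scala release instead.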

3. Edit the Spark configuration files

cd /usr/local/src/spark-2.4.4-bin-hadoop2.6/conf

mv spark-env.sh.template spark-env.sh

vim spark-env.sh

export SCALA_HOME=/usr/local/src/scala-2.11.12

export JAVA_HOME=/usr/local/src/jdk1.8.0_272

export HADOOP_HOME=/usr/local/src/hadoop-2.6.5

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

SPARK_MASTER_HOST=master

SPARK_LOCAL_DIRS=/usr/local/src/spark-2.4.4-bin-hadoop2.6

SPARK_DRIVER_MEMORY=1G

mv slaves.template slaves

vim slaves

slave1

slave2
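The two files can also be written without an editor, which helps when re-creating the setup. The sketch below assumes the paths and values used in this guide, and `write_spark_conf` is a hypothetical helper. It uses SPARK_MASTER_HOST, which replaced the deprecated SPARK_MASTER_IP in Spark 2.x.

```shell
# Write spark-env.sh and slaves into a Spark conf directory non-interactively.
# Hypothetical helper; values mirror this guide, adjust them for your machines.
write_spark_conf() {
  local conf_dir="$1"
  # Quoted 'EOF' keeps $HADOOP_HOME literal so spark-env.sh expands it later.
  cat > "$conf_dir/spark-env.sh" <<'EOF'
export SCALA_HOME=/usr/local/src/scala-2.11.12
export JAVA_HOME=/usr/local/src/jdk1.8.0_272
export HADOOP_HOME=/usr/local/src/hadoop-2.6.5
export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop
SPARK_MASTER_HOST=master
SPARK_LOCAL_DIRS=/usr/local/src/spark-2.4.4-bin-hadoop2.6
SPARK_DRIVER_MEMORY=1G
EOF
  # Overwrite (not append) so the template's default "localhost" entry is gone.
  printf '%s\n' slave1 slave2 > "$conf_dir/slaves"
}
```

Usage: `write_spark_conf /usr/local/src/spark-2.4.4-bin-hadoop2.6/conf`.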

4. Configure environment variables

vim ~/.bashrc

export SPARK_HOME=/usr/local/src/spark-2.4.4-bin-hadoop2.6

export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin

source ~/.bashrc
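If you edit ~/.bashrc by hand again during a re-install, the export lines often end up duplicated. Below is a sketch of an idempotent alternative. `append_once` is a hypothetical helper, not part of Spark.

```shell
# Append a line to a file only if that exact line is not already present.
# -x matches whole lines, -F treats the text literally ($PATH is not a regex).
append_once() {
  local file="$1" line="$2"
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

For example, run `append_once ~/.bashrc 'export SPARK_HOME=/usr/local/src/spark-2.4.4-bin-hadoop2.6'` and `append_once ~/.bashrc 'export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin'`, then `source ~/.bashrc`. The single quotes keep `$PATH` unexpanded, so the shell evaluates it at startup.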

5. Copy the installation and environment variables to the slaves

scp -r /usr/local/src/spark-2.4.4-bin-hadoop2.6 root@slave1:/usr/local/src/spark-2.4.4-bin-hadoop2.6

scp -r /usr/local/src/spark-2.4.4-bin-hadoop2.6 root@slave2:/usr/local/src/spark-2.4.4-bin-hadoop2.6

scp -r ~/.bashrc root@slave1:~/

scp -r ~/.bashrc root@slave2:~/

source ~/.bashrc

Run the command above on slave1 and slave2 as well:

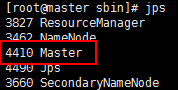

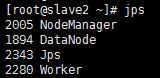

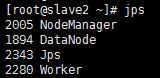
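The per-host scp lines can be replaced by a loop over conf/slaves, so that adding a worker only requires editing that file. The sketch below assumes root SSH access and the paths used above. `list_workers` and `distribute` are hypothetical helpers.

```shell
# Print worker hostnames from a Spark "slaves" file,
# skipping blank lines and '#' comments (the template ships with comments).
list_workers() {
  awk 'NF && $1 !~ /^#/ { print $1 }' "$1"
}

# Copy the Spark directory and ~/.bashrc to every worker in the slaves file.
# Copying into /usr/local/src/ (not the full path) avoids a nested directory
# if the target already exists on a re-run.
distribute() {
  local spark_dir=/usr/local/src/spark-2.4.4-bin-hadoop2.6
  local host
  for host in $(list_workers "$1"); do
    scp -r "$spark_dir" "root@${host}:/usr/local/src/"
    scp ~/.bashrc "root@${host}:"   # an empty remote path means the home directory
  done
}
```

Usage: `distribute /usr/local/src/spark-2.4.4-bin-hadoop2.6/conf/slaves`, then run `source ~/.bashrc` on each worker.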

6. Start the cluster

On master:

cd /usr/local/src/spark-2.4.4-bin-hadoop2.6/sbin

./start-all.sh
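After start-all.sh finishes, master should be running a JVM named Master and each slave should be running one named Worker. The JDK tool jps lists these JVMs. The sketch below checks jps output, assuming jps is on PATH on every node. `has_daemon` is a hypothetical helper.

```shell
# Succeed if jps output on stdin lists a JVM whose main class is exactly $1.
# jps prints lines like "12345 Master"; the second field is the class name.
has_daemon() {
  awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}
```

Usage: `jps | has_daemon Master && echo "master OK"` on master, and `ssh slave1 jps | has_daemon Worker && echo "slave1 OK"` for a worker. To shut the cluster down, run `./stop-all.sh` from the same sbin directory.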

7. Web monitoring UI

http://master:8080

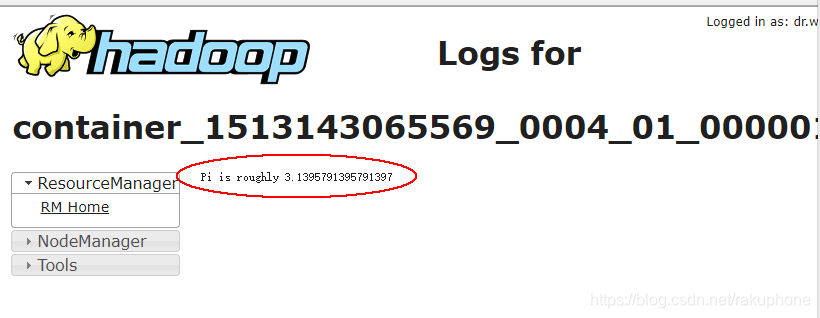
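You can also probe the master's web page from a shell, for example in a setup script that waits for the master to come up. This minimal sketch assumes curl is installed. `ui_up` is a hypothetical helper, and it only checks that the page returns a non-error HTTP status.

```shell
# Succeed if the URL answers with a non-error HTTP status within 5 seconds.
# -s silences progress output, -f makes HTTP errors (4xx/5xx) a failure.
ui_up() {
  curl -sf -o /dev/null --max-time 5 "$1"
}
```

Usage: `ui_up http://master:8080 && echo "Spark master UI reachable"`.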

8. Verify the installation

#Run the following from the Spark directory

cd $SPARK_HOME

#Local mode

./bin/run-example --master local[2] SparkPi 10

#Cluster (Standalone)

./bin/spark-submit --class org.apache.spark.examples.SparkPi --master spark://master:7077 examples/jars/spark-examples_2.11-2.4.4.jar 10

#Cluster (Spark on YARN)

./bin/spark-submit --class org.apache.spark.examples.SparkPi --master yarn --deploy-mode cluster examples/jars/spark-examples_2.11-2.4.4.jar 10
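The examples jar name includes both the Scala and Spark versions (spark-examples_2.11-2.4.4.jar for this build, under examples/jars/), so a hard-coded name goes stale after an upgrade. The sketch below looks the jar up instead. `examples_jar` is a hypothetical helper.

```shell
# Print the path of the Spark examples jar under $1/examples/jars/,
# whatever Scala/Spark version its file name carries.
examples_jar() {
  local jar
  for jar in "$1"/examples/jars/spark-examples_*.jar; do
    [ -e "$jar" ] || return 1   # the glob matched nothing
    echo "$jar"
    return 0
  done
  return 1
}
```

Usage: `./bin/spark-submit --class org.apache.spark.examples.SparkPi --master spark://master:7077 "$(examples_jar "$SPARK_HOME")" 10`. In local and client mode, SparkPi prints a line starting with `Pi is roughly` to the terminal. With `--deploy-mode cluster` on YARN, that line goes to the driver's container log instead, and you can retrieve it with `yarn logs -applicationId <appId>`.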

阿布的进击

Blog: www.topabu.com


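This document walks through installing and configuring Spark 2.4.4 on a cluster, from downloading the package to starting the cluster, and then verifying that the installation works. Following it, you can install Spark on the master and slave nodes and monitor the cluster's status from the web UI.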
