Project links:

GitHub: https://github.com/hwua-hi168/quantanexus

Gitee: https://gitee.com/hwua_1/quantanexus-docs

Public cloud edition: https://www.hi168.com

Overall plan:

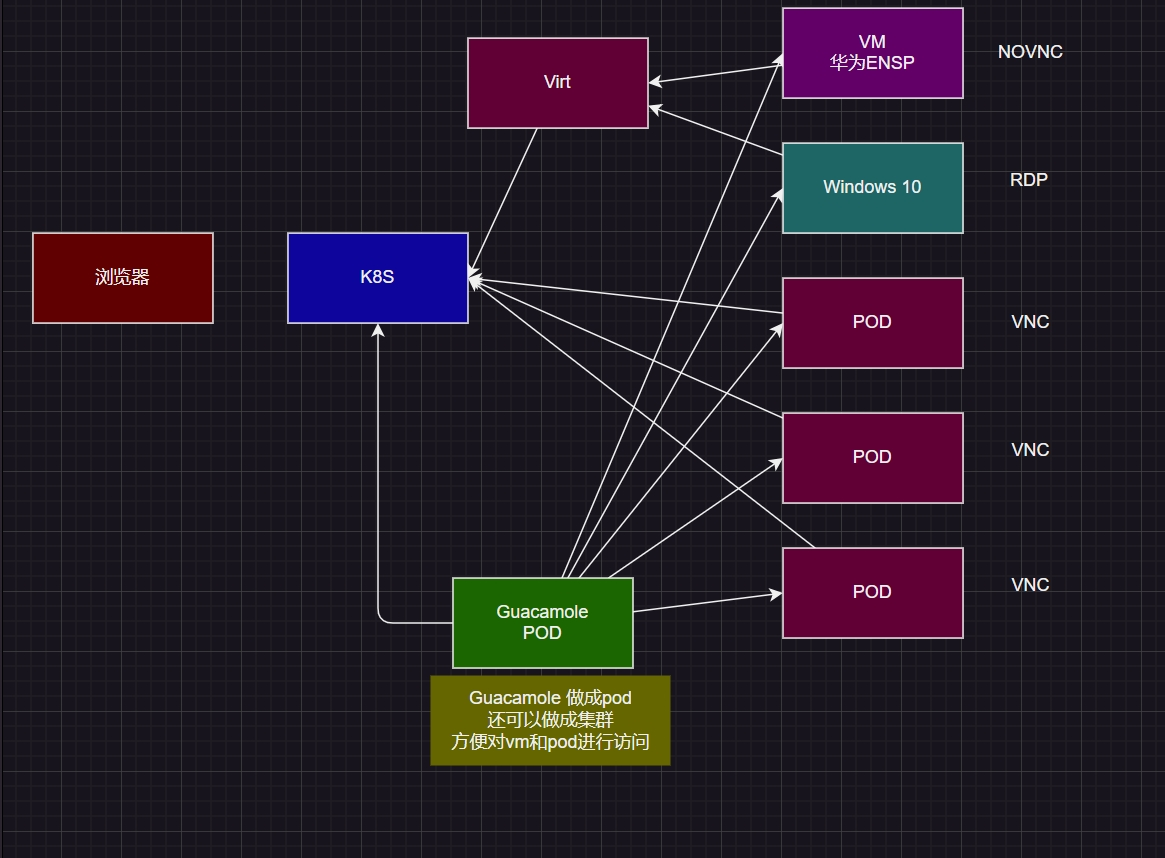

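Goal: build a VM training platform that uses a Guacamole-based desktop gateway.

Requirements:

- Set the IP address
- Set the hostname
- Set the time zone
- Set up passwordless SSH login between nodes
- Configure GRUB (in /etc/default/grub):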

GRUB_DEFAULT=0

GRUB_TIMEOUT_STYLE=hidden

GRUB_TIMEOUT=0

GRUB_DISTRIBUTOR=`lsb_release -i -s 2> /dev/null || echo Debian`

#GRUB_CMDLINE_LINUX_DEFAULT=""

GRUB_CMDLINE_LINUX_DEFAULT="intel_iommu=on iommu=pt "

GRUB_CMDLINE_LINUX=""

# update-grub

# reboot
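After the reboot it is worth confirming that the kernel really booted with the IOMMU flags set in GRUB_CMDLINE_LINUX_DEFAULT. The following is a minimal sketch, assuming a POSIX shell; the function name `has_iommu_args` is made up for illustration, and it only inspects the kernel command line (it does not prove that VT-d is enabled in the firmware).

```shell
# Return 0 when both IOMMU flags from the GRUB config are present.
# With no argument the running kernel's command line is checked.
has_iommu_args() {
    cmdline="${1:-$(cat /proc/cmdline)}"
    # Pad with spaces so each flag is matched as a whole word.
    case " $cmdline " in
        *" intel_iommu=on "*) ;;
        *) return 1 ;;
    esac
    case " $cmdline " in
        *" iommu=pt "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Example (on the rebooted host):
#   has_iommu_args && echo "IOMMU flags active" || echo "IOMMU flags missing"
```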

Install Kubernetes + containerd

See the official Kubernetes installation guide, "Installing kubeadm | Kubernetes".

systemctl stop firewalld

Disable swap

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab
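The sed command above prefixes `#` to every line that contains the string "swap", including lines that are already commented out. A more conservative variant is sketched below, assuming the standard fstab layout in which the third field is the filesystem type; `comment_out_swap` is a hypothetical helper name and the path argument exists only so the function can be tried on a copy first.

```shell
# Comment out active swap entries in an fstab-style file.
# Lines that are already comments are left untouched, so the
# function can be run repeatedly without stacking '#' characters.
comment_out_swap() {
    fstab="${1:-/etc/fstab}"
    tmp="$(mktemp)" || return 1
    awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
        "$fstab" > "$tmp" &&
        cat "$tmp" > "$fstab"   # overwrite in place, keeping owner and mode
    rm -f "$tmp"
}

# Example: try it on a copy before touching the real file
#   cp /etc/fstab /tmp/fstab.test && comment_out_swap /tmp/fstab.test
```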

free -h # verify that swap is off

Configure routing and bridging

# hostnamectl set-hostname k8s-master

# cat <<EOF | tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

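# modprobe br_netfilter # loads the module for the current boot only; the net.bridge.* keys exist only once it is loaded

# echo br_netfilter >> /etc/modules-load.d/modules.conf # load it on every boot

# sysctl --system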
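To confirm that the three kernel settings took effect, the values can be read back from /proc/sys. This is a sketch only: `check_k8s_sysctls` is a hypothetical helper, and the optional root-directory argument is there purely so the function can be exercised against a scratch directory.

```shell
# Report every required setting that is not "1"; return non-zero if any is off.
check_k8s_sysctls() {
    root="${1:-/proc/sys}"
    rc=0
    for key in net/bridge/bridge-nf-call-iptables \
               net/bridge/bridge-nf-call-ip6tables \
               net/ipv4/ip_forward; do
        # A missing file usually means br_netfilter is not loaded.
        value="$(cat "${root}/${key}" 2>/dev/null)"
        if [ "$value" != "1" ]; then
            echo "NOT OK: ${key} = ${value:-<missing>}"
            rc=1
        fi
    done
    return "$rc"
}

# Example: check_k8s_sysctls && echo "bridge/forwarding settings OK"
```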

#cp /etc/apt/sources.list /etc/apt/sources.list.bak

#sed -i 's/archive.ubuntu.com/mirrors.aliyun.com/g' /etc/apt/sources.list && apt update

apt-get remove docker docker-engine docker.io containerd runc

apt-get install -y apt-transport-https ca-certificates curl gnupg-agent software-properties-common

For Kubernetes, install containerd directly; cri-dockerd is no longer used.

# Install containerd from the Aliyun mirror

# apt install -y containerd

#

#mkdir -p /etc/containerd && containerd config default | tee /etc/containerd/config.toml

#vi /etc/containerd/config.toml

1> Point the pause image at a mirror inside China: find sandbox_image and change its registry prefix.

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"

2> [Important] Set SystemdCgroup to true.

SystemdCgroup = true
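The two edits above can also be applied without opening an editor. The sketch below assumes the file was generated by `containerd config default`, so that both keys already exist exactly once; `patch_containerd_config` is a hypothetical helper name, and a `.bak` copy is kept next to the file.

```shell
# Rewrite sandbox_image and SystemdCgroup in a containerd config file.
#   $1: path to config.toml (defaults to the path used in this post)
#   $2: pause image reference (defaults to the mirror used in this post)
patch_containerd_config() {
    cfg="${1:-/etc/containerd/config.toml}"
    pause_image="${2:-registry.aliyuncs.com/google_containers/pause:3.9}"
    # Keep the original indentation and key, replace only the value.
    sed -i.bak \
        -e "s|^\([[:space:]]*sandbox_image[[:space:]]*=\).*|\1 \"${pause_image}\"|" \
        -e 's|^\([[:space:]]*SystemdCgroup[[:space:]]*=\).*|\1 true|' \
        "$cfg"
}

# Example: patch_containerd_config && systemctl restart containerd
```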

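The following step configures the crictl command [crictl must be downloaded first; skip this if you do not do day-to-day maintenance on the node].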

#crictl config runtime-endpoint unix:///var/run/containerd/containerd.sock

#systemctl restart containerd

#ps -ef|grep containerd

3> Registry mirrors (accelerators)

[plugins."io.containerd.grpc.v1.cri".registry]

config_path = "/etc/containerd/certs.d"

mkdir -p /etc/containerd/certs.d/docker.io

cat > /etc/containerd/certs.d/docker.io/hosts.toml << EOF

server = "https://docker.io"

[host."https://docker.oracleoaec.com"]

capabilities = ["pull", "resolve"]

EOF

# Mirror for registry.k8s.io

mkdir -p /etc/containerd/certs.d/registry.k8s.io

tee /etc/containerd/certs.d/registry.k8s.io/hosts.toml << 'EOF'

server = "https://registry.k8s.io"

[host."https://k8s.oracleoaec.com"]

capabilities = ["pull", "resolve", "push"]

EOF

# Mirror for docker.elastic.co

mkdir -p /etc/containerd/certs.d/docker.elastic.co

tee /etc/containerd/certs.d/docker.elastic.co/hosts.toml << 'EOF'

server = "https://docker.elastic.co"

[host."https://elastic.oracleoaec.com"]

capabilities = ["pull", "resolve", "push"]

EOF

# Mirror for gcr.io

mkdir -p /etc/containerd/certs.d/gcr.io

tee /etc/containerd/certs.d/gcr.io/hosts.toml << 'EOF'

server = "https://gcr.io"

[host."https://gcr.oracleoaec.com"]

capabilities = ["pull", "resolve", "push"]

EOF

# Mirror for ghcr.io

mkdir -p /etc/containerd/certs.d/ghcr.io

tee /etc/containerd/certs.d/ghcr.io/hosts.toml << 'EOF'

server = "https://ghcr.io"

[host."https://ghcr.oracleoaec.com"]

capabilities = ["pull", "resolve", "push"]

EOF

# Mirror for k8s.gcr.io

mkdir -p /etc/containerd/certs.d/k8s.gcr.io

tee /etc/containerd/certs.d/k8s.gcr.io/hosts.toml << 'EOF'

server = "https://k8s.gcr.io"

[host."https://k8s-gcr.oracleoaec.io"]

capabilities = ["pull", "resolve", "push"]

EOF

# Mirror for mcr.microsoft.com

mkdir -p /etc/containerd/certs.d/mcr.microsoft.com

tee /etc/containerd/certs.d/mcr.microsoft.com/hosts.toml << 'EOF'

server = "https://mcr.microsoft.com"

[host."https://mcr.oracleoaec.com"]

capabilities = ["pull", "resolve", "push"]

EOF

# Mirror for nvcr.io

mkdir -p /etc/containerd/certs.d/nvcr.io

tee /etc/containerd/certs.d/nvcr.io/hosts.toml << 'EOF'

server = "https://nvcr.io"

[host."https://nvcr.oracleoaec.com"]

capabilities = ["pull", "resolve", "push"]

EOF

# Mirror for quay.io

mkdir -p /etc/containerd/certs.d/quay.io

tee /etc/containerd/certs.d/quay.io/hosts.toml << 'EOF'

server = "https://quay.io"

[host."https://quay.oracleoaec.com"]

capabilities = ["pull", "resolve", "push"]

EOF

# Mirror for registry.jujucharms.com

mkdir -p /etc/containerd/certs.d/registry.jujucharms.com

tee /etc/containerd/certs.d/registry.jujucharms.com/hosts.toml << 'EOF'

server = "https://registry.jujucharms.com"

[host."https://jujucharms.oracleoaec.com"]

capabilities = ["pull", "resolve", "push"]

EOF
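Since every hosts.toml above follows the same pattern, the files can also be generated from a short list. This is only a sketch: `write_mirror` and `write_all_mirrors` are hypothetical helper names, the list shows four of the registry/mirror pairs from this post (extend it as needed), and the capabilities are limited to pull and resolve on the assumption that the mirrors are read-only.

```shell
# Write <certs_dir>/<registry>/hosts.toml pointing at one mirror host.
write_mirror() {
    certs_dir="$1"; registry="$2"; mirror="$3"
    mkdir -p "${certs_dir}/${registry}" || return 1
    cat > "${certs_dir}/${registry}/hosts.toml" <<EOF
server = "https://${registry}"

[host."https://${mirror}"]
  capabilities = ["pull", "resolve"]
EOF
}

# Generate one hosts.toml per "registry mirror" line in the list below.
write_all_mirrors() {
    certs_dir="${1:-/etc/containerd/certs.d}"
    while read -r registry mirror; do
        [ -n "$registry" ] || continue
        write_mirror "$certs_dir" "$registry" "$mirror" || return 1
    done <<'LIST'
docker.io docker.oracleoaec.com
registry.k8s.io k8s.oracleoaec.com
quay.io quay.oracleoaec.com
ghcr.io ghcr.oracleoaec.com
LIST
}

# Example: write_all_mirrors /etc/containerd/certs.d
```

containerd reads these files only when config_path in the registry section points at the same directory, as configured in step 3.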

#systemctl restart containerd.service

Install nerdctl:

wget https://github.com/containerd/nerdctl/releases/download/v2.0.0-rc.0/nerdctl-2.0.0-rc.0-linux-amd64.tar.gz

Install Kubernetes and CRIU [criu: checkpoint/restore in userspace]

curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | gpg --dearmor -o /usr/share/keyrings/kubernets.gpg

echo "deb [arch=amd64 signed-by=/usr/share/keyrings/kubernets.gpg] https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main" | tee /etc/apt/sources.list.d/kubernetes.list

apt-get update

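# Install kubelet; it automatically detects containerd as the CRI

apt install -y kubelet kubeadm kubectl criu

Edit kubeadm.yaml [be sure to add the pod subnet, otherwise initialization fails]:

# Check the kubeadm version; here it is GitVersion:"v1.28.2"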

[root@k8s-master ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"25", GitVersion:"v1.28.2", GitCommit:"434bfd82814af038ad94d62ebe59b133fcb50506", GitTreeState:"clean", BuildDate:"2022-10-12T10:55:36Z", GoVersion:"go1.19.2", Compiler:"gc", Platform:"linux/amd64"}

# Generate the default configuration file

# kubeadm config print init-defaults > kubeadm.yaml

# vi kubeadm.yaml

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.247.100 # 1) change to this host's IP
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock # 2) containerd, not Docker
  imagePullPolicy: IfNotPresent
  name: hwua # 3) change to this host's hostname
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers # 4) change to the Aliyun mirror
kind: ClusterConfiguration
kubernetesVersion: 1.28.2
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.16.0.0/16 # 5) set the pod subnet
scheduler: {}
# 6) added: set the kubelet cgroup driver to systemd
---
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
cgroupDriver: systemd

Pre-pull the images
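Before pulling images and running init, a quick check that the edited file contains the fields annotated in the configuration above can catch a forgotten pod subnet early. The sketch below is a plain text search, not a YAML parser; `check_kubeadm_config` is a hypothetical helper name, and the list of fields simply mirrors the numbered comments in this post.

```shell
# Print each annotated field that is missing or has no value.
# Returns non-zero if any field is missing.
check_kubeadm_config() {
    cfg="${1:-kubeadm.yaml}"
    rc=0
    for field in advertiseAddress criSocket imageRepository podSubnet cgroupDriver; do
        # The key must be followed by a non-empty, non-comment value.
        if ! grep -Eq "^[[:space:]]*${field}:[[:space:]]*[^[:space:]#]" "$cfg"; then
            echo "missing or empty: ${field}"
            rc=1
        fi
    done
    return "$rc"
}

# Example: check_kubeadm_config kubeadm.yaml && kubeadm init --config kubeadm.yaml
```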

# kubeadm config images pull --config kubeadm.yaml

#kubeadm init --config kubeadm.yaml

# Follow the instructions printed by kubeadm init to finish

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Install the network plugin: Kube-OVN

# wget https://get.helm.sh/helm-v3.14.3-linux-amd64.tar.gz

# tar xvf helm-v3.14.3-linux-amd64.tar.gz

# cp -rp ./linux-amd64/helm /usr/bin

# helm
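The Helm tarball above is downloaded and unpacked without an integrity check. A small sketch of how a SHA-256 comparison could be added follows; `verify_sha256` is a hypothetical helper, and the `.sha256` URL in the usage comment is an assumption about where the published checksum can be fetched, so verify it before relying on it.

```shell
# Return 0 only if the file's SHA-256 digest equals the expected hex string.
verify_sha256() {
    file="$1"; expected="$2"
    actual="$(sha256sum "$file" | awk '{ print $1 }')" || return 1
    [ "$actual" = "$expected" ]
}

# Example (assumed checksum URL, see the note above):
#   expected="$(curl -fsSL https://get.helm.sh/helm-v3.14.3-linux-amd64.tar.gz.sha256)"
#   verify_sha256 helm-v3.14.3-linux-amd64.tar.gz "$expected" || echo "checksum mismatch"
```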

root@k8s-master:~/linux-amd64# kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master Ready control-plane 2d4h v1.28.2 192.168.145.142 <none> Ubuntu 22.04.3 LTS 5.15.0-101-generic containerd://1.7.2

k8s-worker1 Ready <none> 2d4h v1.28.2 192.168.145.143 <none> Ubuntu 22.04.3 LTS 5.15.0-101-generic containerd://1.7.2

root@k8s-master:~/linux-amd64# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-5fc7d6cf67-mtwxm 1/1 Running 1 (3m36s ago) 2d4h

kube-system calico-node-c6fz6 1/1 Running 0 2d4h

kube-system calico-node-t9md8 1/1 Running 1 (3m36s ago) 2d4h

kube-system coredns-66f779496c-fmvbf 1/1 Running 1 (3m36s ago) 2d4h

kube-system coredns-66f779496c-lvb4f 1/1 Running 1 (3m36s ago) 2d4h

kube-system etcd-k8s-master 1/1 Running 1 (3m36s ago) 2d4h

kube-system kube-apiserver-k8s-master 1/1 Running 1 (3m36s ago) 2d4h

kube-system kube-controller-manager-k8s-master 1/1 Running 1 (3m36s ago) 2d4h

kube-system kube-proxy-dkwdc 1/1 Running 0 2d4h

kube-system kube-proxy-pqk2j 1/1 Running 1 (3m36s ago) 2d4h

kube-system kube-scheduler-k8s-master 1/1 Running 1 (3m36s ago) 2d4h

root@k8s-master:~/linux-amd64# kubectl taint node kube-ovn-control-plane node-role.kubernetes.io/control-plane:NoSchedule- # allow pods to be scheduled on the control-plane node as well

Error from server (NotFound): nodes "kube-ovn-control-plane" not found

root@k8s-master:~/linux-amd64# kubectl label node -lbeta.kubernetes.io/os=linux kubernetes.io/os=linux --overwrite

node/k8s-master not labeled

node/k8s-worker1 not labeled

root@k8s-master:~/linux-amd64# kubectl label node -lnode-role.kubernetes.io/control-plane kube-ovn/role=master --overwrite

node/k8s-master labeled

root@k8s-master:~/linux-amd64# helm repo add kubeovn https://kubeovn.github.io/kube-ovn/

"kubeovn" has been added to your repositories

root@k8s-master:~/linux-amd64# helm repo list

NAME URL

kubeovn https://kubeovn.github.io/kube-ovn/

root@k8s-master:~/linux-amd64# helm search repo kubeovn

NAME CHART VERSION APP VERSION DESCRIPTION

kubeovn/kube-ovn v1.12.11 1.12.11 Helm chart for Kube-OVN

root@k8s-master:~/linux-amd64# helm install kube-ovn kubeovn/kube-ovn --set MASTER_NODES=192.168.145.142

NAME: kube-ovn

LAST DEPLOYED: Sun Apr 7 11:57:15 2024

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

root@k8s-master:~/linux-amd64# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-5fc7d6cf67-mtwxm 1/1 Running 1 (5m40s ago) 2d4h

kube-system calico-node-c6fz6 1/1 Running 0 2d4h

kube-system calico-node-t9md8 1/1 Running 1 (5m40s ago) 2d4h

kube-system coredns-66f779496c-fmvbf 1/1 Running 1 (5m40s ago) 2d4h

kube-system coredns-66f779496c-lvb4f 1/1 Running 1 (5m40s ago) 2d4h

kube-system etcd-k8s-master 1/1 Running 1 (5m40s ago) 2d4h

kube-system kube-apiserver-k8s-master 1/1 Running 1 (5m40s ago) 2d4h

kube-system kube-controller-manager-k8s-master 1/1 Running 1 (5m40s ago) 2d4h

kube-system kube-ovn-cni-qx8dp 0/1 Init:0/1 0 15s

kube-system kube-ovn-cni-vxql5 0/1 Init:0/1 0 15s

kube-system kube-ovn-controller-78f4d64759-qpf4l 0/1 ContainerCreating 0 15s

kube-system kube-ovn-monitor-6c65669bb7-56rhx 0/1 ContainerCreating 0 15s

kube-system kube-ovn-pinger-6spd9 0/1 ContainerCreating 0 15s

kube-system kube-ovn-pinger-mjwkw 0/1 ContainerCreating 0 15s

kube-system kube-proxy-dkwdc 1/1 Running 0 2d4h

kube-system kube-proxy-pqk2j 1/1 Running 1 (5m40s ago) 2d4h

kube-system kube-scheduler-k8s-master 1/1 Running 1 (5m40s ago) 2d4h

kube-system ovn-central-6dbfd4c9f8-8f9qg 0/1 ContainerCreating 0 15s

kube-system ovs-ovn-kp5kp 0/1 ContainerCreating 0 15s

kube-system ovs-ovn-kpxxj 0/1 ContainerCreating 0 15s

root@k8s-master:/etc/cni/net.d# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-66f779496c-fmvbf 1/1 Running 1 (58m ago) 2d5h

kube-system coredns-66f779496c-lvb4f 1/1 Running 1 (58m ago) 2d5h

kube-system etcd-k8s-master 1/1 Running 1 (58m ago) 2d5h

kube-system kube-apiserver-k8s-master 1/1 Running 1 (58m ago) 2d5h

kube-system kube-controller-manager-k8s-master 1/1 Running 1 (58m ago) 2d5h

kube-system kube-ovn-cni-qx8dp 1/1 Running 0 52m

kube-system kube-ovn-cni-vxql5 1/1 Running 0 52m

kube-system kube-ovn-controller-78f4d64759-qpf4l 1/1 Running 0 52m

kube-system kube-ovn-monitor-6c65669bb7-56rhx 1/1 Running 0 52m

kube-system kube-ovn-pinger-6spd9 1/1 Running 0 52m

kube-system kube-ovn-pinger-mjwkw 1/1 Running 0 52m

kube-system kube-proxy-dkwdc 1/1 Running 0 2d5h

kube-system kube-proxy-pqk2j 1/1 Running 1 (58m ago) 2d5h

kube-system kube-scheduler-k8s-master 1/1 Running 1 (58m ago) 2d5h

kube-system ovn-central-6dbfd4c9f8-8f9qg 1/1 Running 0 52m

kube-system ovs-ovn-kp5kp 1/1 Running 0 52m

kube-system ovs-ovn-kpxxj 1/1 Running 0 52m
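Rather than rereading the table until every Kube-OVN pod shows Running, the output can be summarized. The sketch below assumes the column layout of `kubectl get pods -A --no-headers` (READY in the third column, STATUS in the fourth); `count_not_ready` is a hypothetical helper name.

```shell
# Read `kubectl get pods -A --no-headers` on stdin and print how many pods
# are neither Completed nor Running with all of their containers ready.
count_not_ready() {
    awk '{
        split($3, r, "/")
        if (($4 != "Running" && $4 != "Completed") ||
            ($4 == "Running" && r[1] != r[2])) n++
    } END { print n + 0 }'
}

# Example: poll until everything is up
#   until [ "$(kubectl get pods -A --no-headers | count_not_ready)" = "0" ]; do sleep 5; done
```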

root@k8s-master:/etc/cni/net.d# kubectl get daemonset -A

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-system kube-ovn-cni 2 2 2 2 2 kubernetes.io/os=linux 61m

kube-system kube-ovn-pinger 2 2 2 2 2 kubernetes.io/os=linux 61m

kube-system kube-proxy 2 2 2 2 2 kubernetes.io/os=linux 2d5h

kube-system ovs-ovn 2 2 2 2 2 kubernetes.io/os=linux 61m

root@k8s-master:/etc/cni/net.d# kubectl describe daemonset kube-ovn-cni -n kube-system # parameters can be adjusted here

Name: kube-ovn-cni

Selector: app=kube-ovn-cni

Node-Selector: kubernetes.io/os=linux

Labels: app.kubernetes.io/managed-by=Helm

Annotations: deprecated.daemonset.template.generation: 1

kubernetes.io/description: This daemon set launches the kube-ovn cni daemon.

meta.helm.sh/release-name: kube-ovn

meta.helm.sh/release-namespace: default

Desired Number of Nodes Scheduled: 2

Current Number of Nodes Scheduled: 2

Number of Nodes Scheduled with Up-to-date Pods: 2

Number of Nodes Scheduled with Available Pods: 2

Number of Nodes Misscheduled: 0

Pods Status: 2 Running / 0 Waiting / 0 Succeeded / 0 Failed

Pod Template:

Labels: app=kube-ovn-cni

component=network

type=infra

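Service Account: kube-ovn-cni

Network plugin installation (optional): Calico + IPVS, for small networks

On the master node, install the Calico network plugin, or try flannel for testing.

# Install directly from calico.yaml

kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.28.0/manifests/calico.yaml

watch kubectl get pods -n kube-system

Switch kube-proxy from iptables to IPVS mode (no longer needed):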

1. Check which ip_vs modules are currently loaded

lsmod|grep ip_vs

2. If IPVS support is missing, load the modules

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

3. Edit the default kube-proxy configuration

kubectl edit configmap kube-proxy -n kube-system

ipvs:
  excludeCIDRs: null
  minSyncPeriod: 0s
  scheduler: ""
  strictARP: false
  syncPeriod: 0s
  tcpFinTimeout: 0s
  tcpTimeout: 0s
  udpTimeout: 0s
kind: KubeProxyConfiguration
metricsBindAddress: "127.0.0.1:10249"
mode: "ipvs"

4. Delete the existing kube-proxy pods; replacements are created automatically

kubectl get pods -n kube-system

kubectl -n kube-system delete pod kube-proxy-qfmqg

kubectl -n kube-system delete pod kube-proxy-sdc9d

5. Check the logs of a new kube-proxy pod; "Using ipvs Proxier" means IPVS mode is active

kubectl -n kube-system logs kube-proxy-jw2ct

I0512 20:46:39.128357 1 node.go:172] Successfully retrieved node IP: 192.168.10.136

I0512 20:46:39.128553 1 server_others.go:142] kube-proxy node IP is an IPv4 address (192.168.10.136), assume IPv4 operation

I0512 20:46:39.153956 1 server_others.go:258] Using ipvs Proxier.

I0512 20:46:39.166860 1 proxier.go:372] missing br-netfilter module or unset sysctl br-nf-call-iptables; proxy may not work as intended

E0512 20:46:39.167001 1 proxier.go:389] can't set sysctl net/ipv4/vs/conn_reuse_mode, kernel version must be at least 4.1

W0512 20:46:39.167105 1 proxier.go:445] IPVS scheduler not specified, use rr by default

I0512 20:46:39.167274 1 server.go:650] Version: v1.20.6

Allow pods to be scheduled on the master

kubectl taint nodes --all node-role.kubernetes.io/control-plane-

kubectl taint nodes --all node-role.kubernetes.io/master- # this taint is not present on this cluster

Add a node to the Kubernetes cluster

systemctl stop firewalld

Disable swap

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab

free -h # verify that swap is off

Configure routing and bridging

# hostnamectl set-hostname k8s-node1

# cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

# modprobe br_netfilter

# echo br_netfilter >> /etc/modules-load.d/modules.conf

# sysctl --system

#cp /etc/apt/sources.list /etc/apt/sources.list.bak

#vim /etc/apt/sources.list

deb https://mirrors.aliyun.com/ubuntu/ jammy main restricted universe multiverse

deb-src https://mirrors.aliyun.com/ubuntu/ jammy main restricted universe multiverse

deb https://mirrors.aliyun.com/ubuntu/ jammy-security main restricted universe multiverse

deb-src https://mirrors.aliyun.com/ubuntu/ jammy-security main restricted universe multiverse

deb https://mirrors.aliyun.com/ubuntu/ jammy-updates main restricted universe multiverse

deb-src https://mirrors.aliyun.com/ubuntu/ jammy-updates main restricted universe multiverse

# deb https://mirrors.aliyun.com/ubuntu/ jammy-proposed main restricted universe multiverse

# deb-src https://mirrors.aliyun.com/ubuntu/ jammy-proposed main restricted universe multiverse

deb https://mirrors.aliyun.com/ubuntu/ jammy-backports main restricted universe multiverse

deb-src https://mirrors.aliyun.com/ubuntu/ jammy-backports main restricted universe multiverse

apt update

apt-get remove docker docker-engine docker.io containerd runc

apt-get install -y apt-transport-https ca-certificates curl gnupg-agent software-properties-common

For Kubernetes, install containerd directly; cri-dockerd is no longer used.

#apt install -y apt-transport-https ca-certificates curl software-properties-common

#curl -fsSL https://download.docker.com/linux/ubuntu/gpg | gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

#echo "deb [arch=amd64 signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null

# apt update

# apt install -y containerd

#

#mkdir -p /etc/containerd && containerd config default | tee /etc/containerd/config.toml

#vi /etc/containerd/config.toml

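# Point the pause image at a mirror inside China: find sandbox_image and change its registry prefix

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"

SystemdCgroup = true # [Important] set SystemdCgroup to true

# Configure the registry mirror directory

[plugins."io.containerd.grpc.v1.cri".registry]

config_path = "/etc/containerd/certs.d"

Paste the following commands straight into a shell to set up all of the registry mirrors: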

mkdir -p /etc/containerd/certs.d/docker.io

cat > /etc/containerd/certs.d/docker.io/hosts.toml << EOF

server = "https://docker.io"

[host."https://docker.oracleoaec.com"]

capabilities = ["pull", "resolve"]

EOF

# Mirror for registry.k8s.io

mkdir -p /etc/containerd/certs.d/registry.k8s.io

tee /etc/containerd/certs.d/registry.k8s.io/hosts.toml << 'EOF'

server = "https://registry.k8s.io"

[host."https://k8s.oracleoaec.com"]

capabilities = ["pull", "resolve", "push"]

EOF

# Mirror for docker.elastic.co

mkdir -p /etc/containerd/certs.d/docker.elastic.co

tee /etc/containerd/certs.d/docker.elastic.co/hosts.toml << 'EOF'

server = "https://docker.elastic.co"

[host."https://elastic.oracleoaec.com"]

capabilities = ["pull", "resolve", "push"]

EOF

# Mirror for gcr.io

mkdir -p /etc/containerd/certs.d/gcr.io

tee /etc/containerd/certs.d/gcr.io/hosts.toml << 'EOF'

server = "https://gcr.io"

[host."https://gcr.oracleoaec.com"]

capabilities = ["pull", "resolve", "push"]

EOF

# Mirror for ghcr.io

mkdir -p /etc/containerd/certs.d/ghcr.io

tee /etc/containerd/certs.d/ghcr.io/hosts.toml << 'EOF'

server = "https://ghcr.io"

[host."https://ghcr.oracleoaec.com"]

capabilities = ["pull", "resolve", "push"]

EOF

# Mirror for k8s.gcr.io

mkdir -p /etc/containerd/certs.d/k8s.gcr.io

tee /etc/containerd/certs.d/k8s.gcr.io/hosts.toml << 'EOF'

server = "https://k8s.gcr.io"

[host."https://k8s-gcr.oracleoaec.io"]

capabilities = ["pull", "resolve", "push"]

EOF

# Mirror for mcr.microsoft.com

mkdir -p /etc/containerd/certs.d/mcr.microsoft.com

tee /etc/containerd/certs.d/mcr.microsoft.com/hosts.toml << 'EOF'

server = "https://mcr.microsoft.com"

[host."https://mcr.oracleoaec.com"]

capabilities = ["pull", "resolve", "push"]

EOF

# Mirror for nvcr.io

mkdir -p /etc/containerd/certs.d/nvcr.io

tee /etc/containerd/certs.d/nvcr.io/hosts.toml << 'EOF'

server = "https://nvcr.io"

[host."https://nvcr.oracleoaec.com"]

capabilities = ["pull", "resolve", "push"]

EOF

# Mirror for quay.io

mkdir -p /etc/containerd/certs.d/quay.io

tee /etc/containerd/certs.d/quay.io/hosts.toml << 'EOF'

server = "https://quay.io"

[host."https://quay.oracleoaec.com"]

capabilities = ["pull", "resolve", "push"]

EOF

# Mirror for registry.jujucharms.com

mkdir -p /etc/containerd/certs.d/registry.jujucharms.com

tee /etc/containerd/certs.d/registry.jujucharms.com/hosts.toml << 'EOF'

server = "https://registry.jujucharms.com"

[host."https://jujucharms.oracleoaec.com"]

capabilities = ["pull", "resolve", "push"]

EOF

Restart containerd

systemctl restart containerd.service

Install Kubernetes and CRIU [criu: checkpoint/restore in userspace]

curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | gpg --dearmor -o /usr/share/keyrings/kubernets.gpg

echo "deb [arch=amd64 signed-by=/usr/share/keyrings/kubernets.gpg] https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main" | tee /etc/apt/sources.list.d/kubernetes.list

apt-get update

# Install kubelet; it automatically detects containerd as the CRI

apt install -y kubelet kubeadm kubectl criu

1. Run on the master

root@k8s:~# kubeadm token create --print-join-command

kubeadm join 192.168.145.138:6443 --token y49fom.ukdwsad9tsund2ta --discovery-token-ca-cert-hash sha256:83d63e7fd8a6f48ee840a8ce5f5ec4cb579b29c76d57928423ada99fee06de75

2. Run the join on the node
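A bootstrap token has a fixed shape: six characters, a dot, then sixteen characters, all lowercase letters or digits. A truncated or mistyped token is an easy mistake when copying the join command between machines, so a quick format check before joining can save a confusing failure. The sketch below checks the shape only (not whether the token is still valid on the master); `is_valid_bootstrap_token` is a hypothetical helper name.

```shell
# Return 0 if the argument looks like a kubeadm bootstrap token.
is_valid_bootstrap_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Example:
#   is_valid_bootstrap_token "y49fom.ukdwsad9tsund2ta" && echo "token format OK"
```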

# systemctl status kubelet

# journalctl -xe

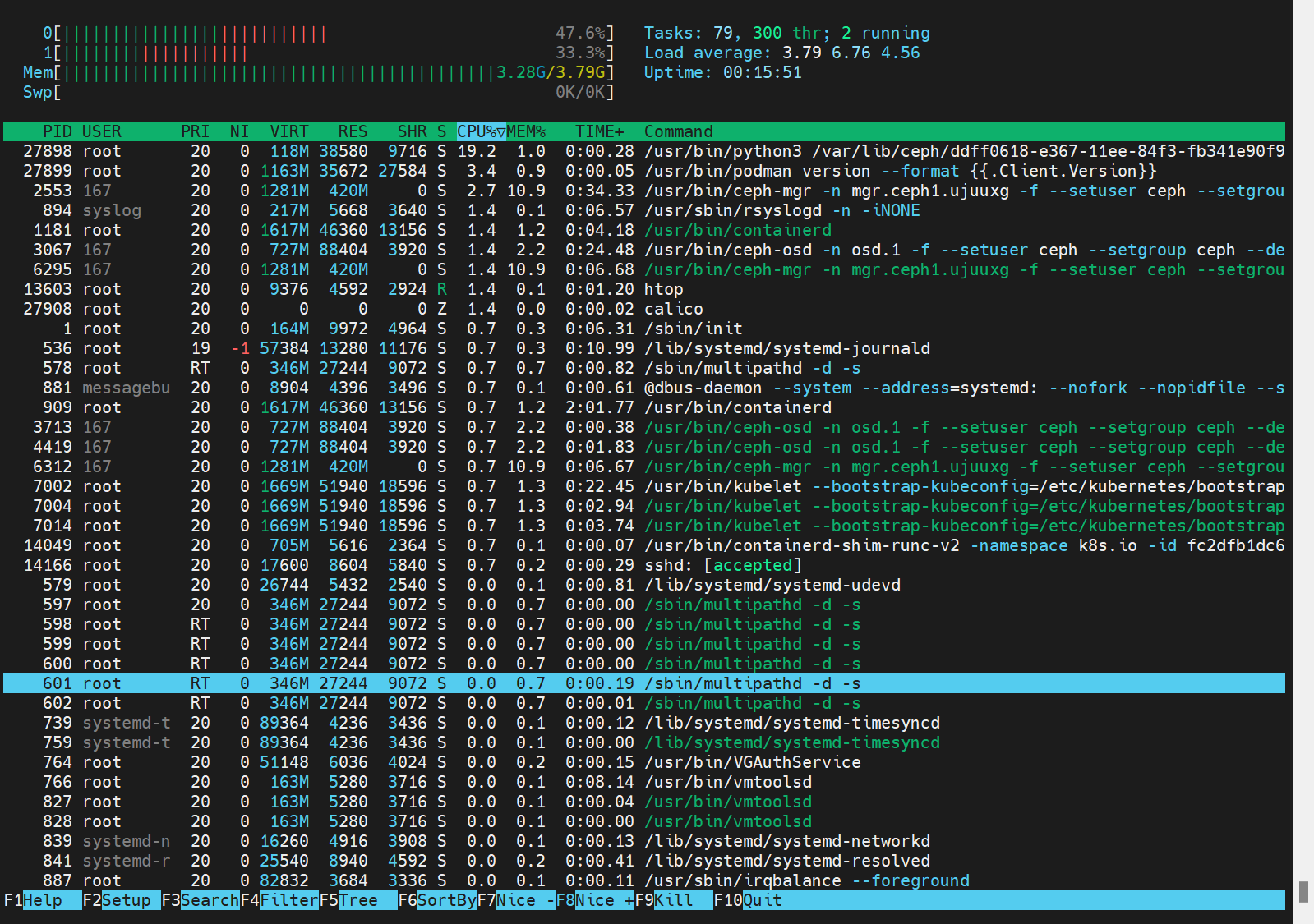

# kubeadm join 192.168.145.138:6443 --token y49fom.ukdwsad9tsund2ta --discovery-token-ca-cert-hash sha256:83d63e7fd8a6f48ee840a8ce5f5ec4cb579b29c76d57928423ada99fee06de75

3. On the node, check the CPU and memory used by Kubernetes + Ceph; the management UI needs 4 GB of memory plus 2 CPUs. (Each OSD should be allocated 2 CPUs.)
