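Big Data Platform Setup and Configuration

- Setting Up and Configuring the Big Data Platform

- I. Setting Up the IDEA Development Environment and Linux Virtual Machines

- 1. Installing and Configuring the JDK (JDK 8) on Windows

- (1) Installing the JDK

- (2) Environment variables

- 2. Installing and Configuring Maven

- (1) Downloading Maven

- (2) Installing Maven

- (3) Configuring the Maven environment variables

- (4) Verifying the Maven installation

- 3. Installing and Configuring IDEA

- (1) Downloading IDEA

- (2) Installing IDEA

- (3) Configuring the SDK for IDEA

- (4) Configuring Maven

- (5) Creating a Maven project with IDEA

- 4. Setting Up the Linux Virtual Machines

- (1) Installing Linux

- (2) Changing the hostname and disabling the firewall

- (3) Configuring a static IP (run on master, slave1, and slave2)

- (4) Mapping hostnames to IP addresses (run on master)

- (5) Passwordless SSH login (run on master, slave1, and slave2)

- II. Building the Big Data Platform on Hadoop ```(Note: my installation packages are stored in /opt/software; they are not included with the system, so download them and place them there yourself)```

- 1. Installing and Configuring the JDK Runtime

- (1) Extracting the JDK (master)

- (2) Renaming the directory (master)

- (3) Configuring environment variables (master)

- (4) Syncing the JDK to the other cluster nodes (master)

- (5) Reloading environment variables (run on master, slave1, and slave2)

- (6) Verifying the Java installation (run on master, slave1, and slave2)

- 2. Installing and Deploying the ZooKeeper Cluster

- (1) Extracting ZooKeeper (master)

- (2) Renaming the directory (master)

- (3) Configuring ZooKeeper (master)

- (4) Configuring environment variables (master)

- (5) Syncing ZooKeeper to the other cluster nodes (master)

- (6) Creating the ZooKeeper data and log directories (run on master, slave1, and slave2)

- (7) Creating a server ID for each ZooKeeper node (run on master, slave1, and slave2) ```Note: IDs must be unique integers```

- (8) Reloading environment variables (run on master, slave1, and slave2)

- (9) Starting the ZooKeeper cluster (run on master, slave1, and slave2)

- (10) Checking the ZooKeeper status (run on master, slave1, and slave2)

- (11) ZooKeeper shell operations (master) ```for testing only```

- III. Building a Hadoop HA Distributed Cluster

- 1. Extracting Hadoop (master)

- 2. Renaming the directory (master)

- 3. Configuring environment variables (master)

- 4. Editing the configuration files

- (1) Editing hadoop-env.sh

- (2) Editing core-site.xml

- (3) Editing hdfs-site.xml

- (4) Editing mapred-env.sh

- (5) Editing mapred-site.xml

- (6) Editing yarn-env.sh

- (7) Editing yarn-site.xml

- (8) Configuring the slaves file

- 5. Syncing Hadoop to the other cluster nodes (master)

- 6. Reloading environment variables (run on master, slave1, and slave2)

- 7. Starting the ZooKeeper cluster (run on master, slave1, and slave2) ```Note: since the cluster was already started earlier, restarting it is recommended to avoid resource conflicts```

- 8. Starting the JournalNode daemons

- 9. Formatting the NameNode

- 10. Registering the HA znode in ZooKeeper

- 11. Starting the NameNode (master)

- 12. Syncing the active NameNode's metadata to the standby NameNode (slave1)

- 13. Starting the Hadoop cluster (master)

- 14. Starting the NameNode process (slave1)

- 15. Starting the standby ResourceManager (slave1)

- 16. Starting the MapReduce JobHistory server

- 17. Checking ports and processes

- 18. Verifying through the web UI

- Building a Massive Data Storage and Exchange System with HBase and Kafka

- Offline User Behavior Analysis: Building a Log Collection and Analysis Platform

- Real-Time User Behavior Analysis with Spark

- Real-Time User Behavior Analysis with Flink

- User Behavior Data Visualization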

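Setting Up and Configuring the Big Data Platform

I. Setting Up the IDEA Development Environment and Linux Virtual Machines

1. Installing and Configuring the JDK (JDK 8) on Windows

(1) Installing the JDK

(2) Environment variables

2. Installing and Configuring Maven

(1) Downloading Maven

(2) Installing Maven

(3) Configuring the Maven environment variables

(4) Verifying the Maven installation

3. Installing and Configuring IDEA

(1) Downloading IDEA

(2) Installing IDEA

(3) Configuring the SDK for IDEA

(4) Configuring Maven

(5) Creating a Maven project with IDEA

4. Setting Up the Linux Virtual Machines

(1) Installing Linux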

Preparation:

- VMware Workstation 17 installer

- A VMware Workstation 17 license key

- CentOS-7-x86_64-DVD-2009.iso

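Installation

**Steps 2.1 to 2.8 create a virtual machine. For a pseudo-distributed setup, run them once; for a fully distributed setup, repeat them for each virtual machine you need. The slave machines can be given somewhat smaller resources.**

2.1 Open VMware Workstation and choose to create a new virtual machine.

2.2 The Typical configuration is usually sufficient.

2.3 Choose to install the operating system later.

2.4 Select Linux as the operating system and CentOS 7 as the version.

2.5 Enter the virtual machine name and installation path.

2.6 Set the disk size to suit your needs; the default is usually enough, and you can increase it if you need more. Then select the option to split the virtual disk into multiple files, which makes the virtual machine easier to copy to and from storage devices.

2.7 Customize the hardware (set the memory size and number of processors to suit your needs).

2.8 Select the CentOS installation image from your own download location.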


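2.9 Edit the virtual network settings (Virtual Network Editor)

Change the subnet IP: modify the third octet (any value from 1 to 255) and leave the fourth octet as 0. Then change the third octet in the NAT Settings and DHCP Settings to the same value. In my setup the third octet is 1. Click Apply when you are done.

Note: the default gateway usually ends in 2.

2.10 Modify the network connection on the Windows host

Note: the IP address here usually ends in 1, and the gateway must match the one set in the Virtual Network Editor. When you are finished, do not simply close the windows; click OK in each dialog until they are all closed, otherwise the changes may not be saved.

2.11 Power on the virtual machine and configure the installation following steps 3.1 to 3.16 of the reference guide, choosing Server with GUI in step 3.5.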

(2) Changing the hostname and disabling the firewall

Note: I am logged in as the root user.

Change the hostname:

master

hostnamectl set-hostname master

slave1

hostnamectl set-hostname slave1

slave2

hostnamectl set-hostname slave2

Then start a new shell so the new hostname shows up in the prompt (run on master, slave1, and slave2):

bash

Stop the firewall (run on master, slave1, and slave2):

systemctl stop firewalld

Prevent the firewall from starting at boot (run on master, slave1, and slave2):

systemctl disable firewalld

(3) Configuring a static IP (run on master, slave1, and slave2)

vim /etc/sysconfig/network-scripts/ifcfg-ens33

On master, slave1, and slave2, change

BOOTPROTO=dhcp

to

BOOTPROTO=static

and make sure ONBOOT=yes so the interface comes up at boot. Then append the following to the end of the file; only IPADDR differs between nodes.

master:

IPADDR=192.168.1.100

NETMASK=255.255.255.0

GATEWAY=192.168.1.2

DNS1=114.114.114.114

DNS2=8.8.8.8

slave1:

IPADDR=192.168.1.110

NETMASK=255.255.255.0

GATEWAY=192.168.1.2

DNS1=114.114.114.114

DNS2=8.8.8.8

slave2:

IPADDR=192.168.1.120

NETMASK=255.255.255.0

GATEWAY=192.168.1.2

DNS1=114.114.114.114

DNS2=8.8.8.8

Restart the network service (run on master, slave1, and slave2):

systemctl restart network
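The three blocks above differ only in IPADDR. The following is a minimal sketch, assuming the address plan used in this guide (192.168.1.100/110/120, gateway 192.168.1.2) and the interface file ifcfg-ens33; static_ip_lines and apply_static_ip are hypothetical helper names, not part of CentOS.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: print and apply the static-IP settings for one node.
# The address plan below is the one used in this guide; adjust it to your subnet.

static_ip_lines() {
  local host="$1" ip
  case "$host" in
    master) ip=192.168.1.100 ;;
    slave1) ip=192.168.1.110 ;;
    slave2) ip=192.168.1.120 ;;
    *) echo "unknown host: $host" >&2; return 1 ;;
  esac
  printf '%s\n' \
    "IPADDR=$ip" \
    "NETMASK=255.255.255.0" \
    "GATEWAY=192.168.1.2" \
    "DNS1=114.114.114.114" \
    "DNS2=8.8.8.8"
}

apply_static_ip() {
  local host="$1"
  local cfg="${2:-/etc/sysconfig/network-scripts/ifcfg-ens33}"
  local lines
  # Resolve the settings first so an unknown host leaves the file untouched
  lines="$(static_ip_lines "$host")" || return 1
  # Switch the interface from DHCP to a static address (GNU sed in-place edit)
  sed -i 's/^BOOTPROTO=.*/BOOTPROTO=static/' "$cfg"
  # Append the per-node address settings
  printf '%s\n' "$lines" >> "$cfg"
}
```

On each node, after the hostname has been set, `apply_static_ip "$(hostname)"` followed by `systemctl restart network` would apply the plan. Running it twice appends the address lines twice, so run it once per node.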

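(4) Mapping hostnames to IP addresses (run on master)

vim /etc/hosts

Append:

192.168.1.100 master

192.168.1.110 slave1

192.168.1.120 slave2

Distribute the file to the other nodes (until passwordless SSH is configured, you will need to type yes and enter the password):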

scp -r /etc/hosts root@slave1:/etc

scp -r /etc/hosts root@slave2:/etc
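Appending with vim by hand can leave duplicate lines if the step is repeated. A minimal sketch of an idempotent alternative, assuming the same three mappings; add_cluster_hosts is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the cluster's IP-to-hostname mappings to a hosts file,
# skipping any mapping that is already present so the step can be re-run safely.

add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}" entry
  for entry in "192.168.1.100 master" "192.168.1.110 slave1" "192.168.1.120 slave2"; do
    # -x: whole-line match, -F: fixed string (dots are not regex wildcards)
    if ! grep -qxF "$entry" "$hosts_file" 2>/dev/null; then
      echo "$entry" >> "$hosts_file"
    fi
  done
}
```

Running `add_cluster_hosts` on master updates /etc/hosts; the file is then distributed with scp as shown above.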

(5) Passwordless SSH login (run on master, slave1, and slave2)

ssh-keygen -t rsa

ssh-copy-id master

ssh-copy-id slave1

ssh-copy-id slave2
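ssh-keygen prompts for a file name and passphrase; pressing Enter accepts the defaults. The same step can be scripted as below, a minimal sketch assuming the root account and the hosts master, slave1, and slave2. `-N ""` (empty passphrase) and `-f` (key path) are standard ssh-keygen options; the helper names and the DRY_RUN switch are hypothetical, added so the commands can be previewed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create an RSA key pair without prompts (only if none exists)
# and copy the public key to every node. Set DRY_RUN=1 to print the commands instead.

CLUSTER_HOSTS=(master slave1 slave2)

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

setup_passwordless_ssh() {
  local key="$HOME/.ssh/id_rsa" host
  run mkdir -p "$HOME/.ssh"
  run chmod 700 "$HOME/.ssh"
  if [ ! -f "$key" ]; then
    # Empty passphrase so scripts and Hadoop's sshfence can log in unattended
    run ssh-keygen -t rsa -N "" -f "$key"
  fi
  for host in "${CLUSTER_HOSTS[@]}"; do
    # Asks once for each host's password, then installs the public key there
    run ssh-copy-id "$host"
  done
}
```

`DRY_RUN=1 setup_passwordless_ssh` lists what would run; `setup_passwordless_ssh` performs it on the current node.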

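II. Building the Big Data Platform on Hadoop (Note: my installation packages are stored in /opt/software; they are not included with the system, so download them and place them there yourself)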

1. Installing and Configuring the JDK Runtime

(1) Extracting the JDK (master)

mkdir -p /opt/module

tar -zxvf /opt/software/jdk-8u51-linux-x64.tar.gz -C /opt/module

(2) Renaming the directory (master)

mv /opt/module/jdk1.8.0_51 /opt/module/jdk

(3) Configuring environment variables (master)

vim /etc/profile

# JAVA_HOME

export JAVA_HOME=/opt/module/jdk

export PATH="$JAVA_HOME/bin:$PATH"

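(4) Syncing the JDK to the other cluster nodes (master). /opt/module must already exist on slave1 and slave2; otherwise scp -r creates /opt/module itself as a copy of the jdk directory:

ssh root@slave1 mkdir -p /opt/module

ssh root@slave2 mkdir -p /opt/module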

scp -r /opt/module/jdk root@slave1:/opt/module

scp -r /opt/module/jdk root@slave2:/opt/module

scp -r /etc/profile root@slave1:/etc

scp -r /etc/profile root@slave2:/etc
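The same scp pattern repeats for every component (JDK, ZooKeeper, Hadoop, HBase, Flume, Kafka) and for /etc/profile. A minimal sketch of a reusable helper, assuming root SSH access to slave1 and slave2; distribute and the DRY_RUN switch are hypothetical. It creates the parent directory first, for the scp reason given above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy a directory or file from master to the same parent
# directory on each worker node. Set DRY_RUN=1 to print the commands instead.

WORKERS=(slave1 slave2)

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

distribute() {
  local src="$1" dest_dir host
  dest_dir="$(dirname "$src")"
  for host in "${WORKERS[@]}"; do
    # Create the parent directory first; if it were missing, scp -r would
    # create it as a copy of src instead of placing src inside it
    run ssh "root@$host" mkdir -p "$dest_dir"
    run scp -r "$src" "root@$host:$dest_dir"
  done
}
```

For this step, `distribute /opt/module/jdk` and `distribute /etc/profile` would replace the four scp commands; the later sync steps follow the same pattern.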

(5) Reloading environment variables (run on master, slave1, and slave2)

source /etc/profile

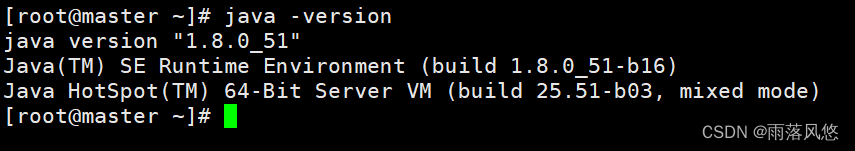

(6) Verifying the Java installation (run on master, slave1, and slave2)

java -version

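2. Installing and Deploying the ZooKeeper Cluster

(1) Extracting ZooKeeper (master)

tar -zxvf /opt/software/zookeeper-3.4.6.tar.gz -C /opt/module/

(2) Renaming the directory (master)

mv /opt/module/zookeeper-3.4.6 /opt/module/zookeeper

(3) Configuring ZooKeeper (master)

Create zoo.cfg from the sample configuration file:

cp /opt/module/zookeeper/conf/zoo_sample.cfg /opt/module/zookeeper/conf/zoo.cfg

Edit zoo.cfg:

vim /opt/module/zookeeper/conf/zoo.cfg

Set dataDir and dataLogDir to /opt/module/zookeeper/zkdata and /opt/module/zookeeper/zkdatalog respectively (the sample file only contains dataDir, so add dataLogDir), keep clientPort=2181, and append the server list: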

dataDir=/opt/module/zookeeper/zkdata

dataLogDir=/opt/module/zookeeper/zkdatalog

clientPort=2181

server.1=master:2888:3888

server.2=slave1:2888:3888

server.3=slave2:2888:3888
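Each server.N line has the form server.N=host:port1:port2, where port1 (2888) is used by followers to connect to the leader and port2 (3888) is used for leader election; N must equal the number in that host's myid file. A minimal sketch that generates these lines from an ordered host list; zk_server_lines is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the server.N entries of zoo.cfg for an ordered host list.
# The position of each host (starting at 1) becomes its server ID.

zk_server_lines() {
  local i=1 host
  for host in "$@"; do
    echo "server.${i}=${host}:2888:3888"
    i=$((i + 1))
  done
}
```

For example, `zk_server_lines master slave1 slave2 >> /opt/module/zookeeper/conf/zoo.cfg` appends the three lines listed above.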

(4) Configuring environment variables (master)

vim /etc/profile

Append:

# zookeeper

export ZOOKEEPER_HOME=/opt/module/zookeeper

export PATH="$ZOOKEEPER_HOME/bin:$PATH"

(5) Syncing ZooKeeper to the other cluster nodes (master)

scp -r /opt/module/zookeeper root@slave1:/opt/module/

scp -r /opt/module/zookeeper root@slave2:/opt/module/

scp -r /etc/profile root@slave1:/etc

scp -r /etc/profile root@slave2:/etc

(6) Creating the ZooKeeper data and log directories (run on master, slave1, and slave2)

mkdir -p /opt/module/zookeeper/zkdata

mkdir -p /opt/module/zookeeper/zkdatalog

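(7) Creating a server ID for each ZooKeeper node (run on master, slave1, and slave2). Note: IDs must be unique integers, and each must match the N of that host's server.N line in zoo.cfg.

touch /opt/module/zookeeper/zkdata/myid

Then edit the file on each node:

vim /opt/module/zookeeper/zkdata/myid

master: 1

slave1: 2

slave2: 3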
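Instead of editing myid by hand on each node, the ID can be derived from the hostname. A minimal sketch, assuming the mapping master=1, slave1=2, slave2=3 used above; write_myid is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write this node's ZooKeeper ID into myid based on its hostname.
# The mapping must agree with the server.N lines in zoo.cfg.

write_myid() {
  local host="$1"
  local data_dir="${2:-/opt/module/zookeeper/zkdata}"
  local id
  case "$host" in
    master) id=1 ;;
    slave1) id=2 ;;
    slave2) id=3 ;;
    *) echo "no ZooKeeper ID defined for host: $host" >&2; return 1 ;;
  esac
  mkdir -p "$data_dir"
  # myid contains only the number
  echo "$id" > "$data_dir/myid"
}
```

On each node, `write_myid "$(hostname)"` writes the matching ID.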

(8) Reloading environment variables (run on master, slave1, and slave2)

source /etc/profile

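(9) Starting the ZooKeeper cluster (run on master, slave1, and slave2)

Start ZooKeeper:

zkServer.sh start

(10) Checking the ZooKeeper status (run on master, slave1, and slave2)

zkServer.sh status

Once all three nodes are running, one should report Mode: leader and the other two Mode: follower.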

(11) ZooKeeper shell operations (master), for testing only

Open the ZooKeeper shell client:

zkCli.sh -server localhost:2181

List the root of the znode tree:

ls /

Create a znode:

create /test helloworld

Read the znode:

get /test

Update the znode:

set /test zookeeper

Delete the znode:

delete /test

III. Building a Hadoop HA Distributed Cluster

master

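1. Extracting Hadoop (master)

tar -zxvf /opt/software/hadoop-2.9.1.tar.gz -C /opt/module/

2. Renaming the directory (master)

mv /opt/module/hadoop-2.9.1 /opt/module/hadoop

3. Configuring environment variables (master)

vim /etc/profile

Append (the HDFS_*_USER and YARN_*_USER variables are read only by the Hadoop 3.x start scripts; Hadoop 2.9.1 ignores them, so they are harmless here):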

#hadoop

export HADOOP_HOME=/opt/module/hadoop

export PATH="$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH"

export HADOOP_CLASSPATH=$(hadoop classpath)

export HDFS_JOURNALNODE_USER=root

export HDFS_ZKFC_USER=root

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

4. Editing the configuration files

(1) Editing hadoop-env.sh

vim /opt/module/hadoop/etc/hadoop/hadoop-env.sh

Append:

export JAVA_HOME=/opt/module/jdk

(2) Editing core-site.xml

vim /opt/module/hadoop/etc/hadoop/core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<!-- HDFS entry point. With NameNode HA it points to the nameservice; the value mycluster is defined in hdfs-site.xml below -->

<name>fs.defaultFS</name>

<value>hdfs://mycluster</value>

</property>

<property>

<!-- Hadoop temporary directory; by default, data generated by the NameNode and DataNode is stored under this path -->

<name>hadoop.tmp.dir</name>

<value>/opt/hadoopHA/tmp</value>

</property>

<property>

<!-- ZooKeeper quorum addresses (port 2181 comes from clientPort in zoo.cfg) -->

<name>ha.zookeeper.quorum</name>

<value>master:2181,slave1:2181,slave2:2181</value>

</property>

</configuration>

(3) Editing hdfs-site.xml

vim /opt/module/hadoop/etc/hadoop/hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<!-- Logical name (nameservice) of the NameNode cluster -->

<name>dfs.nameservices</name>

<value>mycluster</value>

</property>

<property>

<!-- NameNodes in the nameservice, named nn1 and nn2 -->

<name>dfs.ha.namenodes.mycluster</name>

<value>nn1,nn2</value>

</property>

<property>

<!-- RPC address and port of nn1 -->

<name>dfs.namenode.rpc-address.mycluster.nn1</name>

<value>master:9000</value>

</property>

<property>

<!-- HTTP address and port of nn1 -->

<name>dfs.namenode.http-address.mycluster.nn1</name>

<value>master:50070</value>

</property>

<property>

<!-- RPC address and port of nn2 -->

<name>dfs.namenode.rpc-address.mycluster.nn2</name>

<value>slave1:9000</value>

</property>

<property>

<!-- HTTP address and port of nn2 -->

<name>dfs.namenode.http-address.mycluster.nn2</name>

<value>slave1:50070</value>

</property>

<property>

<!-- JournalNodes that hold the edit log shared by the NameNodes -->

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://master:8485;slave1:8485;slave2:8485/mycluster</value>

</property>

<property>

<!-- Local directory where each JournalNode stores the edit log -->

<name>dfs.journalnode.edits.dir</name>

<value>/opt/hadoopHA/tmp/dfs/journal</value>

</property>

<property>

<!-- Enable automatic NameNode failover -->

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<!-- Proxy class that clients use to find the active NameNode -->

<name>dfs.client.failover.proxy.provider.mycluster</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<!-- Fencing method -->

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<property>

<!-- Private key used for passwordless SSH during fencing -->

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_rsa</value>

</property>

<property>

<!-- Number of HDFS replicas -->

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<!-- NameNode metadata directory -->

<name>dfs.namenode.name.dir</name>

<value>/opt/hadoopHA/tmp/dfs/name</value>

</property>

<property>

<!-- DataNode block storage directory -->

<name>dfs.datanode.data.dir</name>

<value>/opt/hadoopHA/tmp/dfs/data</value>

</property>

</configuration>

(4) Editing mapred-env.sh

vim /opt/module/hadoop/etc/hadoop/mapred-env.sh

Append:

export JAVA_HOME=/opt/module/jdk

(5) Editing mapred-site.xml

vim /opt/module/hadoop/etc/hadoop/mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>master:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>master:19888</value>

</property>

</configuration>

(6) Editing yarn-env.sh

vim /opt/module/hadoop/etc/hadoop/yarn-env.sh

Append:

export JAVA_HOME=/opt/module/jdk

(7) Editing yarn-site.xml

vim /opt/module/hadoop/etc/hadoop/yarn-site.xml

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<property>

<!-- Enable ResourceManager HA -->

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<property>

<!-- Enable automatic ResourceManager failover -->

<name>yarn.resourcemanager.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<!-- Cluster ID of the ResourceManager HA pair -->

<name>yarn.resourcemanager.cluster-id</name>

<value>yarn-cluster</value>

</property>

<property>

<!-- IDs of the ResourceManagers in the HA setup -->

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

</property>

<property>

<!-- Host that runs rm1 -->

<name>yarn.resourcemanager.hostname.rm1</name>

<value>master</value>

</property>

<property>

<!-- Host that runs rm2 -->

<name>yarn.resourcemanager.hostname.rm2</name>

<value>slave1</value>

</property>

<property>

<!-- ZooKeeper quorum used by ResourceManager HA -->

<name>yarn.resourcemanager.zk-address</name>

<value>master:2181,slave1:2181,slave2:2181</value>

</property>

<property>

<!-- Enable ResourceManager state recovery after a restart (default: false) -->

<name>yarn.resourcemanager.recovery.enabled</name>

<value>true</value>

</property>

<property>

<!-- State store implementation (keeps ResourceManager state in ZooKeeper) -->

<name>yarn.resourcemanager.store.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>

</property>

<property>

<!-- Auxiliary service run by the NodeManager; mapreduce_shuffle is required for MapReduce jobs -->

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<!-- Class implementing the mapreduce_shuffle auxiliary service -->

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<!-- Enable log aggregation -->

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<property>

<!-- Maximum time, in seconds, that aggregated logs are kept on HDFS -->

<name>yarn.log-aggregation.retain-seconds</name>

<value>106800</value>

</property>

<property>

<!-- HDFS directory where aggregated application logs are stored -->

<name>yarn.nodemanager.remote-app-log-dir</name>

<value>/opt/hadoopHA/logs</value>

</property>

<property>

<!-- Scheduler address the ResourceManager exposes to ApplicationMasters, which use it to request and release resources -->

<name>yarn.resourcemanager.scheduler.address.rm1</name>

<value>master:8030</value>

</property>

<property>

<!-- Address NodeManagers use to exchange information with the ResourceManager -->

<name>yarn.resourcemanager.resource-tracker.address.rm1</name>

<value>master:8031</value>

</property>

<property>

<!-- Address clients use to submit applications to the ResourceManager -->

<name>yarn.resourcemanager.address.rm1</name>

<value>master:8032</value>

</property>

<property>

<!-- Address administrators use to send admin commands to the ResourceManager -->

<name>yarn.resourcemanager.admin.address.rm1</name>

<value>master:8033</value>

</property>

<property>

<!-- ResourceManager web UI address for viewing cluster information -->

<name>yarn.resourcemanager.webapp.address.rm1</name>

<value>master:8088</value>

</property>

<property>

<!-- Same as the corresponding rm1 setting -->

<name>yarn.resourcemanager.scheduler.address.rm2</name>

<value>slave1:8030</value>

</property>

<property>

<!-- Address NodeManagers use to exchange information with the ResourceManager -->

<name>yarn.resourcemanager.resource-tracker.address.rm2</name>

<value>slave1:8031</value>

</property>

<property>

<!-- Address clients use to submit applications to the ResourceManager -->

<name>yarn.resourcemanager.address.rm2</name>

<value>slave1:8032</value>

</property>

<property>

<!-- Address administrators use to send admin commands to the ResourceManager -->

<name>yarn.resourcemanager.admin.address.rm2</name>

<value>slave1:8033</value>

</property>

<property>

<!-- ResourceManager web UI address for viewing cluster information -->

<name>yarn.resourcemanager.webapp.address.rm2</name>

<value>slave1:8088</value>

</property>

</configuration>
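The value of yarn.log-aggregation.retain-seconds above is given in seconds. Converting the 106800 used here shows how long aggregated logs are kept:

```latex
106800\ \text{s} \div 3600\ \tfrac{\text{s}}{\text{h}} \approx 29.67\ \text{h} \approx 1.24\ \text{days}
```

For comparison, a 7-day retention would be 7 × 86400 = 604800 seconds.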

(8) Configuring the slaves file

vim /opt/module/hadoop/etc/hadoop/slaves

master

slave1

slave2

5. Syncing Hadoop to the other cluster nodes (master)

scp -r /opt/module/hadoop root@slave1:/opt/module/

scp -r /opt/module/hadoop root@slave2:/opt/module/

scp -r /etc/profile root@slave1:/etc

scp -r /etc/profile root@slave2:/etc

6. Reloading environment variables (run on master, slave1, and slave2)

source /etc/profile

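7. Starting the ZooKeeper cluster (run on master, slave1, and slave2). Note: since the cluster was already started earlier, restarting it (zkServer.sh restart) is recommended to avoid resource conflicts.

zkServer.sh start

Check that each ZooKeeper node is running normally: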

zkServer.sh status

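8. Starting the JournalNode daemons (run on master, slave1, and slave2, the three JournalNodes listed in dfs.namenode.shared.edits.dir)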

hadoop-daemon.sh start journalnode

9. Formatting the NameNode (master)

hdfs namenode -format

10. Registering the HA znode in ZooKeeper (master)

hdfs zkfc -formatZK

11. Starting the NameNode (master)

hadoop-daemon.sh start namenode

12. Syncing the active NameNode's metadata to the standby NameNode (slave1)

hdfs namenode -bootstrapStandby

13. Starting the Hadoop cluster (master)

start-all.sh

14. Starting the NameNode process (slave1)

hadoop-daemon.sh start namenode

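15. Starting the standby ResourceManager (slave1). In Hadoop 2.x, start-all.sh starts a ResourceManager only on the local machine (master, rm1), so rm2 on slave1 must be started manually; slave2 is not a ResourceManager host in yarn-site.xml.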

yarn-daemon.sh start resourcemanager

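16. Starting the MapReduce JobHistory server (master)

The proxyserver command below only works when yarn.web-proxy.address is set in yarn-site.xml, which this guide does not configure, so it can be skipped:

yarn-daemon.sh start proxyserver

mr-jobhistory-daemon.sh start historyserver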

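17. Checking ports and processes

jps

jpsall script (runs jps on every node):

#!/bin/bash

for host in master slave1 slave2

do

echo "---------- $host ----------"

# A non-interactive SSH session does not load /etc/profile, which puts the JDK (and jps) on PATH

ssh "$host" "source /etc/profile; jps"

done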

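18. Verifying through the web UI

http://master:50070 (NameNode nn1; nn2 is at http://slave1:50070, and the ResourceManager web UI is at http://master:8088)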
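The web UI shows one NameNode as active and the other as standby. The same can be checked from the command line with `hdfs haadmin -getServiceState` and `yarn rmadmin -getServiceState`, using the service IDs nn1/nn2 and rm1/rm2 defined in hdfs-site.xml and yarn-site.xml. A minimal sketch; check_ha_state and the DRY_RUN switch are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the HA state (active/standby) of both NameNodes
# and both ResourceManagers. Set DRY_RUN=1 to print the commands instead.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

check_ha_state() {
  local id
  for id in nn1 nn2; do
    printf '%s: ' "$id"
    run hdfs haadmin -getServiceState "$id"
  done
  for id in rm1 rm2; do
    printf '%s: ' "$id"
    run yarn rmadmin -getServiceState "$id"
  done
}
```

On a healthy cluster, exactly one of nn1/nn2 and one of rm1/rm2 should report active. Stopping the active NameNode (hadoop-daemon.sh stop namenode) and running the check again is one way to see automatic failover at work.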

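Building a Massive Data Storage and Exchange System with HBase and Kafka

Download and extract HBase:

tar -zxvf /opt/software/hbase-1.2.0-bin.tar.gz -C /opt/module/

mv /opt/module/hbase-1.2.0/ /opt/module/hbase

Edit the configuration files

Configure hbase-site.xml

Configure regionservers: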

vim /opt/module/hbase/conf/regionservers

master

slave1

slave2

Configure the backup-masters file:

vim /opt/module/hbase/conf/backup-masters

slave1

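Configure hbase-env.sh (HBASE_MANAGES_ZK=false tells HBase to use the external ZooKeeper cluster instead of starting its own):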

vim /opt/module/hbase/conf/hbase-env.sh

export HBASE_MANAGES_ZK=false

export JAVA_HOME=/opt/module/jdk

Configure environment variables:

vim /etc/profile

# Hbase

export HBASE_HOME=/opt/module/hbase

export PATH="$HBASE_HOME/bin:$PATH"

Distribute HBase to the other cluster nodes:

scp -r /opt/module/hbase root@slave1:/opt/module/

scp -r /opt/module/hbase root@slave2:/opt/module/

scp -r /etc/profile root@slave1:/etc

scp -r /etc/profile root@slave2:/etc

Reload environment variables (run on master, slave1, and slave2):

source /etc/profile

Offline User Behavior Analysis: Building a Log Collection and Analysis Platform

tar -xvf /opt/software/apache-flume-1.8.0-bin.tar.gz -C /opt/module/

mv /opt/module/apache-flume-1.8.0-bin/ /opt/module/flume

vim /etc/profile

# Flume

export FLUME_HOME=/opt/module/flume

export PATH="$FLUME_HOME/bin:$PATH"

mv /opt/module/flume/conf/flume-conf.properties.template /opt/module/flume/conf/flume-conf.properties

scp -r /opt/module/flume root@slave1:/opt/module/

scp -r /opt/module/flume root@slave2:/opt/module/

scp -r /etc/profile root@slave1:/etc

scp -r /etc/profile root@slave2:/etc

source /etc/profile

tar -zxvf /opt/software/kafka_2.12-1.1.1.tgz -C /opt/module/

mv /opt/module/kafka_2.12-1.1.1/ /opt/module/kafka

vim /etc/profile

# kafka

export KAFKA_HOME=/opt/module/kafka

export PATH="$KAFKA_HOME/bin:$PATH"

vim /opt/module/kafka/config/zookeeper.properties

dataDir=/opt/module/zookeeper/zkdata

vim /opt/module/kafka/config/consumer.properties

bootstrap.servers=master:9092,slave1:9092,slave2:9092

vim /opt/module/kafka/config/producer.properties

bootstrap.servers=master:9092,slave1:9092,slave2:9092

vim /opt/module/kafka/config/server.properties

zookeeper.connect=master:2181,slave1:2181,slave2:2181

scp -r /opt/module/kafka root@slave1:/opt/module/

scp -r /opt/module/kafka root@slave2:/opt/module/

scp -r /etc/profile root@slave1:/etc

scp -r /etc/profile root@slave2:/etc

vim /opt/module/kafka/config/server.properties

Set a unique broker.id on each node:

master: broker.id=1

slave1: broker.id=2

slave2: broker.id=3
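Because server.properties was copied from master, each node still needs its own broker.id. A minimal sketch that sets it from the hostname, assuming master=1, slave1=2, slave2=3 (the order in which the IDs are listed above); set_broker_id is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set broker.id in Kafka's server.properties according to the hostname.
# Every broker in the cluster needs a unique integer ID.

set_broker_id() {
  local host="$1"
  local props="${2:-/opt/module/kafka/config/server.properties}"
  local id
  case "$host" in
    master) id=1 ;;
    slave1) id=2 ;;
    slave2) id=3 ;;
    *) echo "no broker ID defined for host: $host" >&2; return 1 ;;
  esac
  if grep -q '^broker\.id=' "$props"; then
    # Replace the existing value in place (GNU sed)
    sed -i "s/^broker\.id=.*/broker.id=${id}/" "$props"
  else
    echo "broker.id=${id}" >> "$props"
  fi
}
```

On each node, `set_broker_id "$(hostname)"` adjusts the copied file.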

source /etc/profile

zkServer.sh start

zkServer.sh status

kafka-server-start.sh /opt/module/kafka/config/server.properties

kafka-topics.sh --zookeeper localhost:2181 --create --topic final-project --replication-factor 3 --partitions 3

kafka-topics.sh --zookeeper localhost:2181 --list

kafka-topics.sh --zookeeper localhost:2181 --describe --topic final-project

kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic final-project

kafka-console-producer.sh --broker-list localhost:9092 --topic final-project

kafka-topics.sh --zookeeper localhost:2181 --delete --topic final-project

vim /opt/module/flume/conf/taildir-file-selector-avro.properties

agent1.sources = taildirSource

agent1.channels = fileChannel

agent1.sinkgroups = g1

agent1.sinks = k1 k2

# Define and configure a TAILDIR source

agent1.sources.taildirSource.type = TAILDIR

agent1.sources.taildirSource.positionFile = /opt/module/flume/taildir_position.json

agent1.sources.taildirSource.filegroups = f1

agent1.sources.taildirSource.filegroups.f1 = /opt/module/flume/logs/sogou.log

agent1.sources.taildirSource.channels = fileChannel

# Configure channel

agent1.channels.fileChannel.type = file

agent1.channels.fileChannel.checkpointDir = /opt/module/flume/checkpointDir

agent1.channels.fileChannel.dataDirs = /opt/module/flume/dataDirs

# Define and configure a sink

agent1.sinkgroups.g1.sinks = k1 k2

# load_balance: spread events across sinks; failover: switch to a backup sink on failure

agent1.sinkgroups.g1.processor.type = load_balance

agent1.sinkgroups.g1.processor.backoff = true

# round_robin: use the sinks in turn; random: pick a sink at random

agent1.sinkgroups.g1.processor.selector = round_robin

agent1.sinkgroups.g1.processor.selector.maxTimeOut=10000

agent1.sinks.k1.type = avro

agent1.sinks.k1.channel = fileChannel

agent1.sinks.k1.batchSize = 1

agent1.sinks.k1.hostname = slave1

agent1.sinks.k1.port = 1234

agent1.sinks.k2.type = avro

agent1.sinks.k2.channel = fileChannel

agent1.sinks.k2.batchSize = 1

agent1.sinks.k2.hostname = slave2

agent1.sinks.k2.port = 1234

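A variant of taildir-file-selector-avro.properties that adds an HDFS sink to the load-balanced sink group (with load_balance, each event is delivered to only one of k1, k2, and hdfsSink):

agent1.sources = taildirSource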

agent1.channels = fileChannel

agent1.sinkgroups = g1

agent1.sinks = k1 k2 hdfsSink

# Define and configure a TAILDIR source

agent1.sources.taildirSource.type = TAILDIR

agent1.sources.taildirSource.positionFile = /opt/module/flume/taildir_position.json

agent1.sources.taildirSource.filegroups = f1

agent1.sources.taildirSource.filegroups.f1 = /opt/module/flume/logs/sogou.log

agent1.sources.taildirSource.channels = fileChannel

# Configure channel

agent1.channels.fileChannel.type = file

agent1.channels.fileChannel.checkpointDir = /opt/module/flume/checkpointDir

agent1.channels.fileChannel.dataDirs = /opt/module/flume/dataDirs

# Define and configure a sink group

agent1.sinkgroups.g1.sinks = k1 k2 hdfsSink

# Load balancing among sinks

agent1.sinkgroups.g1.processor.type = load_balance

agent1.sinkgroups.g1.processor.backoff = true

agent1.sinkgroups.g1.processor.selector = round_robin

agent1.sinkgroups.g1.processor.selector.maxTimeOut = 10000

# Avro Sink 1

agent1.sinks.k1.type = avro

agent1.sinks.k1.channel = fileChannel

agent1.sinks.k1.batchSize = 1

agent1.sinks.k1.hostname = slave1

agent1.sinks.k1.port = 1234

# Avro Sink 2

agent1.sinks.k2.type = avro

agent1.sinks.k2.channel = fileChannel

agent1.sinks.k2.batchSize = 1

agent1.sinks.k2.hostname = slave2

agent1.sinks.k2.port = 1234

# HDFS Sink

agent1.sinks.hdfsSink.type = hdfs

agent1.sinks.hdfsSink.hdfs.path = hdfs://master:9000/sogou.log

agent1.sinks.hdfsSink.hdfs.fileType = DataStream

agent1.sinks.hdfsSink.hdfs.writeFormat = Text

agent1.sinks.hdfsSink.channel = fileChannel

vim /opt/module/flume/conf/avro-file-selector-logger.properties

agent1.sources = r1

agent1.channels = c1

agent1.sinks = k1

# Define and configure an avro

agent1.sources.r1.type = avro

agent1.sources.r1.channels = c1

agent1.sources.r1.bind = 0.0.0.0

agent1.sources.r1.port = 1234

# Configure channel

agent1.channels.c1.type = file

agent1.channels.c1.checkpointDir = /opt/module/flume/checkpointDir

agent1.channels.c1.dataDirs = /opt/module/flume/dataDirs

# Define and configure a sink

agent1.sinks.k1.type = logger

agent1.sinks.k1.channel = c1

flume-ng agent -n agent1 -c /opt/module/flume/conf/ -f /opt/module/flume/conf/avro-file-selector-logger.properties -Dflume.root.logger=INFO,console

flume-ng agent -n agent1 -c /opt/module/flume/conf/ -f /opt/module/flume/conf/taildir-file-selector-avro.properties -Dflume.root.logger=INFO,console

echo '00:00:00 四川邮电职业技术学院 210320501050 黄俞宁1 www.sptc.edu.cn' >> /opt/module/flume/logs/sogou.log
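To watch the load-balancing sink group at work, more than one record is needed. A minimal sketch that appends N test lines to the file tailed by the TAILDIR source; the record layout and the helper name append_test_records are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append COUNT test records to the file tailed by the
# TAILDIR source (agent1.sources.taildirSource.filegroups.f1).

append_test_records() {
  local count="${1:-10}"
  local log="${2:-/opt/module/flume/logs/sogou.log}"
  local i
  mkdir -p "$(dirname "$log")"
  for ((i = 1; i <= count; i++)); do
    # Hypothetical record layout: time, record number, URL
    echo "$(date +%H:%M:%S) test-record-${i} www.sptc.edu.cn" >> "$log"
  done
}
```

With batchSize = 1 and round_robin selection, `append_test_records 20` should produce events spread across the logger consoles on slave1 and slave2.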

vim /opt/module/flume/conf/avro-file-selector-kafka.properties

agent1.sources = r1

agent1.channels = c1

agent1.sinks = k1

# Define and configure an avro

agent1.sources.r1.type = avro

agent1.sources.r1.channels = c1

agent1.sources.r1.bind = 0.0.0.0

agent1.sources.r1.port = 1234

# Configure channel

agent1.channels.c1.type = file

agent1.channels.c1.checkpointDir = /opt/module/flume/checkpointDir

agent1.channels.c1.dataDirs = /opt/module/flume/dataDirs

# Define and configure a sink

agent1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink

agent1.sinks.k1.topic = sogoulogs

agent1.sinks.k1.brokerList = master:9092,slave1:9092,slave2:9092

agent1.sinks.k1.producer.acks = 1

agent1.sinks.k1.channel = c1

flume-ng agent -n agent1 -c /opt/module/flume/conf/ -f /opt/module/flume/conf/avro-file-selector-kafka.properties -Dflume.root.logger=INFO,console

flume-ng agent -n agent1 -c /opt/module/flume/conf/ -f /opt/module/flume/conf/taildir-file-selector-avro.properties -Dflume.root.logger=INFO,console

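- Write the crawler program (see the appendix)

- Start ZooKeeper

zkServer.sh start

zkServer.sh status

- Start the Hadoop cluster (HDFS)

start-all.sh

- Start the Kafka service

- Start the Flume aggregation service

- Start the Flume collection service

- Start the crawler program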

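This article describes in detail how to set up and configure a big data platform. It first sets up the IDEA development environment and Linux virtual machines, then builds the big data platform on Hadoop, including installing the JDK and a ZooKeeper cluster. It also builds a Hadoop HA distributed cluster and a storage and exchange system based on HBase and Kafka, and finally covers offline and real-time user behavior analysis and data visualization.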

