- 启动集群

- 在root用户下启动MySQL;ps -ef | grep mysql查看3306是否启动:

- 启动hive,use bigdata02,show create table student:

得到文件目录/user/hive/warehouse/bigdata02.db/student

- 创建表student

create table bigdata02.student

(

xh String,

xm String

)

row format delimited fields terminated by ',' stored as textfile

;

- 在根目录下创建一个数据vim studentdata:

20117701,张仲景

20117702,华佗

20117703,扁鹊

20117704,李时珍

- 将studentdata的数据上传到hdfs中:

hadoop fs -put studentdata /user/hive/warehouse/bigdata02.db/student

在hive中查看:

- 在hive中建一张课程表:

create table bigdata02.course

(

kch String,

xh String,

kcmc String,

xf String

)

row format delimited fields terminated by ',' stored as textfile

location ‘/course01’;

- 在根目录下创建一个数据:vim coursedata:

C001 ,20117703,ETL技术,2

C002 ,20117702,数据可视化,3

C001,20117703,ETL技术,2

C003 ,20117702,MySQL ,2

C004,20117 703,Linux,2

C001,20117701,ETL技术,2

- 将coursedata的数据上传到hdfs中:

在hive中查看:

- 前端的需求:

扁鹊同学选修了哪些课程,课程学分是多少

SELECT

s.xm,c.kcmc,c.xf

FROM student s

JOIN course c

ON s.xh=c.xh

WHERE s.xm="扁鹊";

- 为查询结果创建一个表:

create table bigdata02.jgb

(

xm String,

kcmc String,

xf String

)

row format delimited fields terminated by ',' stored as textfile;

接着再执行:

INSERT INTO bigdata02.jgb

SELECT

s.xm,c.kcmc,c.xf

FROM student s

JOIN course c

ON s.xh=c.xh

WHERE s.xm="扁鹊";

执行结果如下:

查询表jgb数据:

select * from jgb;

- 查看结果表存储再hdfs得目录:

SHOW create table jgb;

获取导入路径:

/user/hive/warehouse/bigdata02.db/jgb/000000_0

- 将数据导入MySQL:

①在MySQL得db02数据库中创建表:

create table hive_to_mysql(name VARCHAR(50),cname VARCHAR(50),score int);

sqoop export -connect jdbc:mysql://192.168.43.216:3306/db02 -username root -password 123456 -table hive_to_mysql --fields-terminated-by ','

--export-dir /user/hive/warehouse/bigdata02.db/jgb/000000_0

导入成功:

在MySQL中查看:

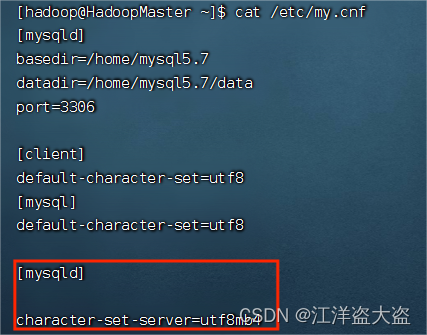

存在中文乱码,输入指令查看SHOW VARIABLES LIKE 'character%';

在/etc/my.cnf文件中添加:

[mysqld]

character-set-server=utf8mb4

再在MySQL中查看:

查看表没有乱码:

本文介绍了如何在Linux下启动MySQL和Hive集群,创建表,处理数据(包括从文本文件到HDFS,Hive查询,以及使用Sqoop将Hive数据导入MySQL并解决字符编码问题)。

本文介绍了如何在Linux下启动MySQL和Hive集群,创建表,处理数据(包括从文本文件到HDFS,Hive查询,以及使用Sqoop将Hive数据导入MySQL并解决字符编码问题)。

2033

2033

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?