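1. Linear regression

import torch

x_data = [1, 2, 3]
y_data = [2, 4, 6]

# A single trainable weight; autograd tracks every operation on it.
w = torch.Tensor([1.0])
w.requires_grad = True

def forward(x):
    return x * w

def loss(x, y):
    y_prediction = forward(x)
    return (y_prediction - y) ** 2

for epoch in range(100):
    for x, y in zip(x_data, y_data):
        l = loss(x, y)
        l.backward()  # compute dl/dw and store it in w.grad
        print("\tgrad:", x, y, w.grad.item())
        # Update through .data so the step itself is not recorded by autograd.
        w.data = w.data - 0.01 * w.grad.data
        w.grad.data.zero_()  # clear the gradient, otherwise it accumulates
    print("progress:", epoch, l.item())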
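
As a cross-check on the autograd loop, the per-sample loss (x*w - y)^2 has the analytic derivative 2x(x*w - y) with respect to w. The sketch below repeats the same SGD updates in plain Python without PyTorch. The helper names `grad` and `train` are illustrative and do not appear in the original notes.

```python
# Plain-Python version of the single-weight SGD loop.
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

def grad(x, y, w):
    """Analytic derivative of (x*w - y)**2 with respect to w."""
    return 2 * x * (x * w - y)

def train(w=1.0, lr=0.01, epochs=100):
    """Apply one SGD step per sample, for the given number of epochs."""
    for _ in range(epochs):
        for x, y in zip(x_data, y_data):
            w -= lr * grad(x, y, w)
    return w

w_final = train()
print("w after training:", w_final)
```

Since the data follows y = 2x exactly, the weight should settle very close to 2.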

2. Linear regression with PyTorch

import torch

x_data = torch.Tensor([[1.0], [2.0], [3.0]])
y_data = torch.Tensor([[2.0], [4.0], [6.0]])

class LinearModel(torch.nn.Module):
    def __init__(self):
        super(LinearModel, self).__init__()
        self.linear = torch.nn.Linear(1, 1)  # one input feature, one output

    def forward(self, x):
        y_prediction = self.linear(x)
        return y_prediction

model = LinearModel()
# reduction='sum' replaces the deprecated size_average=False, reduce=True.
criterion = torch.nn.MSELoss(reduction='sum')
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

for epoch in range(1000):
    y_pred = model(x_data)
    loss = criterion(y_pred, y_data)
    print(epoch, loss.item())
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

print("w=", model.linear.weight.item())
print("b=", model.linear.bias.item())

x_test = torch.Tensor([[4.0]])  # shape (1, 1): one sample, one feature
y_test = model(x_test)
print("y_pred=", y_test.data)

3. Binary classification

import torch
import numpy as np
import matplotlib.pyplot as plt

x_data = torch.Tensor([[1.0], [2.0], [3.0]])
y_data = torch.Tensor([[0], [0], [1]])

class LogisticRegressionModel(torch.nn.Module):
    def __init__(self):
        super(LogisticRegressionModel, self).__init__()
        self.linear = torch.nn.Linear(1, 1)

    def forward(self, x):
        # torch.sigmoid replaces the deprecated F.sigmoid.
        y_prediction = torch.sigmoid(self.linear(x))
        return y_prediction

model = LogisticRegressionModel()
# reduction='sum' replaces the deprecated size_average=False, reduce=True.
criterion = torch.nn.BCELoss(reduction='sum')
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

for epoch in range(1000):
    y_pred = model(x_data)
    loss = criterion(y_pred, y_data)
    print(epoch, loss.item())
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

# Plot the predicted probability over the range 0 to 10.
x = np.linspace(0, 10, 200)
x_t = torch.Tensor(x).view((200, 1))
y_t = model(x_t)
y = y_t.data.numpy()
plt.plot(x, y)
plt.show()

4. Binary classification with multiple inputs and one output

import numpy as np
import torch

xy = np.loadtxt("diabetes.csv", delimiter=",", dtype=np.float32)
x_data = torch.from_numpy(xy[:, :-1])             # all columns except the last
# view(-1, 1) infers the row count instead of hard-coding 759.
y_data = torch.from_numpy(xy[:, -1]).view(-1, 1)  # last column is the label

class Model(torch.nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.linear1 = torch.nn.Linear(8, 6)
        self.linear2 = torch.nn.Linear(6, 4)
        self.linear3 = torch.nn.Linear(4, 1)
        self.sigmoid = torch.nn.Sigmoid()

    def forward(self, x1):
        x2 = self.sigmoid(self.linear1(x1))
        x3 = self.sigmoid(self.linear2(x2))
        y_prediction = self.sigmoid(self.linear3(x3))
        return y_prediction

model = Model()
criterion = torch.nn.BCELoss(reduction='sum')
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

for epoch in range(100):
    y_pred = model(x_data)
    loss = criterion(y_pred, y_data)
    print(epoch, loss.item())
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

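5. Mini-batches

Dataset: builds the dataset and supports access by index.

DataLoader: serves the data in mini-batches for training.

Epoch: one pass over all of the training data.

Batch-Size: the number of samples used in a single training step.

Iteration: the number of inner-loop steps per epoch (total samples / Batch-Size).

DataLoader needs a dataset that supports indexing and has a known length.

shuffle: whether to shuffle the samples.

To generate mini-batches with a DataLoader, the Dataset must satisfy at least two conditions: it can be accessed by index, and its total length is known.

Each batch can then be retrieved with a for ... in loop.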
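
The relation between sample count, batch size, and iterations can be sketched as a small calculation. The function name `iterations_per_epoch` is hypothetical; `drop_last` mirrors the DataLoader option of the same name, and 759 is the sample count hard-coded for diabetes.csv elsewhere in these notes.

```python
import math

def iterations_per_epoch(n_samples, batch_size, drop_last=False):
    """Number of mini-batches produced in one pass over the data."""
    if drop_last:
        # An incomplete final batch is discarded.
        return n_samples // batch_size
    # Otherwise the final batch is simply smaller than the others.
    return math.ceil(n_samples / batch_size)

print(iterations_per_epoch(759, 32))        # 24 (the last batch has 23 samples)
print(iterations_per_epoch(759, 32, True))  # 23
```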

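Standard template

class DiabetesDataset(Dataset):
    def __init__(self, filepath):
        pass

    def __getitem__(self, index):
        pass

    def __len__(self):
        pass

dataset = DiabetesDataset("diabetes.csv")
train_loader = DataLoader(dataset=dataset, batch_size=32, shuffle=True, num_workers=2)

for epoch in range(100):
    for i, data in enumerate(train_loader, 0):
        pass  # one training step per mini-batch goes here

__init__: reads and preprocesses the data.

__getitem__: returns the sample at the given index; if it returns several values, they unpack as a tuple.

__len__: the total number of samples (the amount of data used in one epoch, which is split into multiple batches).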

import torch
import numpy as np
from torch.utils.data import Dataset
from torch.utils.data import DataLoader

class DiabetesDataset(Dataset):
    def __init__(self, filepath):
        xy = np.loadtxt(filepath, delimiter=",", dtype=np.float32)
        print(xy.shape)
        self.len = xy.shape[0]
        self.x_data = torch.from_numpy(xy[:, :-1])
        print(self.x_data.shape)
        # view(-1, 1) infers the row count instead of hard-coding 759.
        self.y_data = torch.from_numpy(xy[:, -1]).view(-1, 1)
        print(self.y_data.shape)

    def __getitem__(self, index):
        return self.x_data[index], self.y_data[index]

    def __len__(self):
        return self.len

class Model(torch.nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.linear1 = torch.nn.Linear(8, 6)
        self.linear2 = torch.nn.Linear(6, 4)
        self.linear3 = torch.nn.Linear(4, 1)
        self.sigmoid = torch.nn.Sigmoid()

    def forward(self, x1):
        x2 = self.sigmoid(self.linear1(x1))
        x3 = self.sigmoid(self.linear2(x2))
        y_prediction = self.sigmoid(self.linear3(x3))
        return y_prediction

model = Model()
criterion = torch.nn.BCELoss(reduction='mean')
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

dataset = DiabetesDataset("diabetes.csv")
train_loader = DataLoader(dataset=dataset, batch_size=32, shuffle=True, num_workers=2)

# With num_workers > 0, platforms that spawn worker processes (Windows, macOS)
# need the training loop behind this guard.
if __name__ == "__main__":
    for epoch in range(100):
        for i, data in enumerate(train_loader, 0):
            inputs, labels = data
            y_pred = model(inputs)
            loss = criterion(y_pred, labels)
            print(epoch, i, loss.item())
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

Special usage: the data set up in __init__ can be a dictionary whose values are nested lists, as long as the nested lists share the same first (outer) dimension.

The samples can then be extracted one at a time along that first dimension.

import torch.utils.data as data
import torch

class build_dataset(data.Dataset):
    def __init__(self):
        self.data = {
            'a': [[1,2],[3,4],[5,6],[7,8],[9,10],[11,12],[13,14],[15,16],[17,18],[19,20],[21,22],[23,24]],
            'b': [[24,25,26],[27,28,29],[30,31,32],[33,34,35],[36,37,38],[39,40,41],[42,43,44],[45,46,47],[48,49,50],[51,52,53],[54,55,56],[57,58,59]]
        }

    def __getitem__(self, index):
        # Return one sample as a dict; the DataLoader stacks each key into a batch.
        ret = {
            'c': torch.FloatTensor(self.data['a'][index]),
            'd': torch.FloatTensor(self.data['b'][index])
        }
        return ret

    def __len__(self):
        return len(self.data['a'])  # 12 samples

def build_data_loader():
    data_loaders = data.DataLoader(dataset=build_dataset(), batch_size=2)
    return data_loaders

train_gen = build_data_loader()

for batch_idx, dic in enumerate(train_gen):
    print(dic['c'])  # shape (2, 2)
    print(dic['d'])  # shape (2, 3)

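6. Multi-class classification of the ten digits

Key point: the input to a fully connected layer.

The input must have shape (batch, input_dim); more precisely, input_dim must be the last dimension.

torch.nn.Linear(input_dim, output_dim)

The output then has shape (batch, output_dim).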
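
A minimal sketch of the shape rule, assuming a fake MNIST-shaped batch of random values rather than real data; the variable names are illustrative.

```python
import torch

layer = torch.nn.Linear(784, 10)     # input_dim=784, output_dim=10
images = torch.randn(64, 1, 28, 28)  # fake batch: 64 single-channel 28x28 images
flat = images.view(-1, 784)          # flatten each image: (64, 784)
out = layer(flat)                    # (64, 10)
print(flat.shape, out.shape)
```

Passing `images` to the layer without the `view` call would fail, because the last dimension would be 28 rather than 784.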

import torch
from torchvision import transforms
from torchvision import datasets
from torch.utils.data import DataLoader
import torch.nn.functional as F
import torch.optim as optim

batch_size = 64
# Convert images to tensors and normalize with the MNIST mean and standard deviation.
transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))])

train_dataset = datasets.MNIST(root="../dataset/mnist/", train=True, download=True, transform=transform)
train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)
test_dataset = datasets.MNIST(root="../dataset/mnist/", train=False, download=True, transform=transform)
test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)

class Net(torch.nn.Module):
    def __init__(self):
        super().__init__()
        self.l1 = torch.nn.Linear(784, 512)
        self.l2 = torch.nn.Linear(512, 256)
        self.l3 = torch.nn.Linear(256, 128)
        self.l4 = torch.nn.Linear(128, 64)
        self.l5 = torch.nn.Linear(64, 10)

    def forward(self, a):
        b = a.view(-1, 784)  # flatten (batch, 1, 28, 28) to (batch, 784)
        c = F.relu(self.l1(b))
        d = F.relu(self.l2(c))
        e = F.relu(self.l3(d))
        f = F.relu(self.l4(e))
        g = self.l5(f)  # raw logits; CrossEntropyLoss applies softmax itself
        return g

model = Net()
criterion = torch.nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)

def train(epoch):
    running_loss = 0.0
    for batch_idx, data in enumerate(train_loader, 0):
        inputs, target = data
        optimizer.zero_grad()
        outputs = model(inputs)
        loss = criterion(outputs, target)
        loss.backward()
        optimizer.step()
        running_loss += loss.item()
        if batch_idx % 300 == 299:
            print("[%d, %5d] loss: %.3f" % (epoch+1, batch_idx+1, running_loss/300))
            running_loss = 0.0

def test():
    correct = 0
    total = 0
    with torch.no_grad():
        for data in test_loader:
            images, labels = data
            outputs = model(images)
            _, predicted = torch.max(outputs.data, dim=1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    print("Accuracy on test set: %d %%" % (100*correct/total))

if __name__ == "__main__":
    for epoch in range(10):
        train(epoch)
        test()

7. CNN basics

import torch
from torchvision import transforms
from torchvision import datasets
from torch.utils.data import DataLoader
import torch.nn.functional as F
import torch.optim as optim

batch_size = 64
transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))])

train_dataset = datasets.MNIST(root="../dataset/mnist/", train=True, download=True, transform=transform)
train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)
test_dataset = datasets.MNIST(root="../dataset/mnist/", train=False, download=True, transform=transform)
test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)

class Net(torch.nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = torch.nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = torch.nn.Conv2d(10, 20, kernel_size=5)
        self.pooling = torch.nn.MaxPool2d(2)
        self.fc = torch.nn.Linear(320, 10)  # 320 = 20 channels * 4 * 4

    def forward(self, x1):
        x2 = F.relu(self.pooling(self.conv1(x1)))
        x3 = F.relu(self.pooling(self.conv2(x2)))
        batch_size_in = x1.size(0)
        x4 = x3.view(batch_size_in, -1)  # flatten to (batch, 320)
        y_prediction = self.fc(x4)
        return y_prediction

model = Net()
criterion = torch.nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)

def train(epoch):
    running_loss = 0.0
    for batch_idx, data in enumerate(train_loader, 0):
        inputs, target = data
        optimizer.zero_grad()
        outputs = model(inputs)
        loss = criterion(outputs, target)
        loss.backward()
        optimizer.step()
        running_loss += loss.item()
        if batch_idx % 300 == 299:
            print("[%d, %5d] loss: %.3f" % (epoch+1, batch_idx+1, running_loss/300))
            running_loss = 0.0

def test():
    correct = 0
    total = 0
    with torch.no_grad():
        for data in test_loader:
            images, labels = data
            outputs = model(images)
            _, predicted = torch.max(outputs.data, dim=1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    print("Accuracy on test set: %d %%" % (100*correct/total))

if __name__ == "__main__":
    for epoch in range(10):
        train(epoch)
        test()
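
The 320 input features of the final linear layer follow from the layer sizes. The sketch below traces the spatial size of a 28x28 MNIST image through the network using the standard output-size formula; the helper name `conv_out` is hypothetical.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial size after a convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1

size = 28                                  # MNIST images are 28x28
size = conv_out(size, kernel=5)            # conv1: 24
size = conv_out(size, kernel=2, stride=2)  # max pool: 12
size = conv_out(size, kernel=5)            # conv2: 8
size = conv_out(size, kernel=2, stride=2)  # max pool: 4
flat_features = 20 * size * size           # conv2 has 20 output channels
print(size, flat_features)                 # 4 320
```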

8. Advanced CNN

import torch
from torchvision import transforms
from torchvision import datasets
from torch.utils.data import DataLoader
import torch.nn.functional as F
import torch.optim as optim

batch_size = 64
transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))])

train_dataset = datasets.MNIST(root="../dataset/mnist/", train=True, download=True, transform=transform)
train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)
test_dataset = datasets.MNIST(root="../dataset/mnist/", train=False, download=True, transform=transform)
test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)

class InceptionA(torch.nn.Module):
    def __init__(self, in_channels):
        super(InceptionA, self).__init__()
        # 1x1 branch
        self.branch1x1_1 = torch.nn.Conv2d(in_channels=in_channels, out_channels=16, kernel_size=1)
        # 5x5 branch: 1x1 convolution followed by a 5x5 convolution
        self.branch5x5_1 = torch.nn.Conv2d(in_channels=in_channels, out_channels=16, kernel_size=1)
        self.branch5x5_2 = torch.nn.Conv2d(in_channels=16, out_channels=24, kernel_size=5, padding=2)
        # 3x3 branch: 1x1 convolution followed by two 3x3 convolutions
        self.branch3x3_1 = torch.nn.Conv2d(in_channels=in_channels, out_channels=16, kernel_size=1)
        self.branch3x3_2 = torch.nn.Conv2d(in_channels=16, out_channels=24, kernel_size=3, padding=1)
        self.branch3x3_3 = torch.nn.Conv2d(in_channels=24, out_channels=24, kernel_size=3, padding=1)
        # pooling branch: average pooling followed by a 1x1 convolution
        self.branch_pool_1 = torch.nn.Conv2d(in_channels=in_channels, out_channels=24, kernel_size=1)

    def forward(self, x):
        branch_1x1 = self.branch1x1_1(x)

        branch_5x5_a = self.branch5x5_1(x)
        branch_5x5 = self.branch5x5_2(branch_5x5_a)

        branch_3x3_a = self.branch3x3_1(x)
        branch_3x3_b = self.branch3x3_2(branch_3x3_a)
        branch_3x3 = self.branch3x3_3(branch_3x3_b)

        branch_pool_a = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
        branch_pool = self.branch_pool_1(branch_pool_a)

        # Concatenate along the channel dimension: 16 + 24 + 24 + 24 = 88 channels.
        outputs = [branch_1x1, branch_5x5, branch_3x3, branch_pool]
        return torch.cat(outputs, dim=1)

class Net(torch.nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = torch.nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = torch.nn.Conv2d(88, 20, kernel_size=5)  # 88 channels come from incep1
        self.incep1 = InceptionA(in_channels=10)
        self.incep2 = InceptionA(in_channels=20)
        self.mp = torch.nn.MaxPool2d(2)
        self.fc = torch.nn.Linear(1408, 10)  # 1408 = 88 channels * 4 * 4

    def forward(self, x):
        in_size = x.size(0)
        a = F.relu(self.mp(self.conv1(x)))
        b = self.incep1(a)
        c = F.relu(self.mp(self.conv2(b)))
        d = self.incep2(c)
        e = d.view(in_size, -1)
        f = self.fc(e)
        return f

model = Net()
criterion = torch.nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)

def train(epoch):
    running_loss = 0.0
    for batch_idx, data in enumerate(train_loader, 0):
        inputs, target = data
        optimizer.zero_grad()
        outputs = model(inputs)
        loss = criterion(outputs, target)
        loss.backward()
        optimizer.step()
        running_loss += loss.item()
        if batch_idx % 300 == 299:
            print("[%d, %5d] loss: %.3f" % (epoch+1, batch_idx+1, running_loss/300))
            running_loss = 0.0

def test():
    correct = 0
    total = 0
    with torch.no_grad():
        for data in test_loader:
            images, labels = data
            outputs = model(images)
            _, predicted = torch.max(outputs.data, dim=1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    print("Accuracy on test set: %d %%" % (100*correct/total))

if __name__ == "__main__":
    for epoch in range(10):
        train(epoch)
        test()

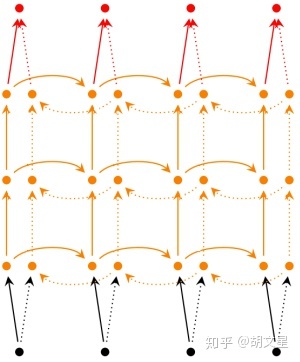

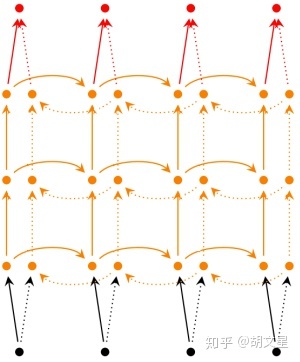
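
The two constants in the Inception network, 88 and 1408, can be derived from the branch widths and the image size. This is a short arithmetic sketch, assuming 28x28 MNIST inputs; the variable names are illustrative.

```python
# Output channels of the four InceptionA branches: 1x1, 5x5, 3x3, pool.
branch_channels = [16, 24, 24, 24]
inception_out = sum(branch_channels)   # 88, independent of in_channels

# Spatial size: 28 -> conv 5x5 -> 24 -> pool -> 12 -> conv 5x5 -> 8 -> pool -> 4.
# The Inception blocks use padding that leaves height and width unchanged.
spatial = ((28 - 5 + 1) // 2 - 5 + 1) // 2
fc_in = inception_out * spatial * spatial
print(inception_out, fc_in)            # 88 1408
```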

9. RNN basics

import torch

# Version 1: one-hot encoded inputs.
input_size = 4
hidden_size = 4
batch_size = 1
num_layers = 1
seq_len = 5

idx2char = ["e", "h", "l", "o"]
x_data = [1, 0, 2, 2, 3]  # "hello"
y_data = [3, 1, 2, 3, 2]  # "ohlol"

one_hot_lookup = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
x_one_hot = [one_hot_lookup[x] for x in x_data]
inputs = torch.Tensor(x_one_hot).view(seq_len, batch_size, input_size)
labels = torch.LongTensor(y_data).view(seq_len, 1)

# Version 2: embedding inputs. These definitions override the ones above
# and are the ones the model below actually uses.
num_class = 4
input_size = 4
hidden_size = 8
embedding_size = 10
num_layers = 2
batch_size = 1
seq_len = 5

inputs = torch.LongTensor(x_data).view(batch_size, seq_len)
labels = torch.LongTensor(y_data)

class Model(torch.nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.emb = torch.nn.Embedding(input_size, embedding_size)
        self.rnn = torch.nn.RNN(input_size=embedding_size, hidden_size=hidden_size, num_layers=num_layers, batch_first=True)
        self.fc = torch.nn.Linear(hidden_size, num_class)

    def forward(self, x):
        hidden = torch.zeros(num_layers, x.size(0), hidden_size)
        x = self.emb(x)             # (batch, seq_len, embedding_size)
        x, _ = self.rnn(x, hidden)  # (batch, seq_len, hidden_size)
        x = self.fc(x)              # (batch, seq_len, num_class)
        return x.view(-1, num_class)

net = Model()
criterion = torch.nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(net.parameters(), lr=0.05)

for epoch in range(15):
    optimizer.zero_grad()
    outputs = net(inputs)
    loss = criterion(outputs, labels)
    loss.backward()
    optimizer.step()

    _, idx = outputs.max(dim=1)
    idx = idx.data.numpy()
    print("Predicted:", "".join([idx2char[x] for x in idx]), end="")
    print(",Epoch[%d/15] loss=%.4f" % (epoch+1, loss.item()))

10. Advanced RNN

import torch
from torch.utils.data import Dataset
from torch.utils.data import DataLoader
import gzip
import csv
import time
import math

class NameDataset(Dataset):
    def __init__(self, is_train_set):
        filename = './names_train.csv.gz' if is_train_set else './names_test.csv.gz'
        with gzip.open(filename, 'rt') as f:
            reader = csv.reader(f)
            rows = list(reader)
        self.names = [row[0] for row in rows]
        self.len = len(self.names)
        self.countries = [row[1] for row in rows]
        self.country_list = list(sorted(set(self.countries)))
        self.country_dict = self.getCountryDict()
        self.country_num = len(self.country_list)

    def __getitem__(self, index):
        # Return the name string and the integer index of its country.
        return self.names[index], self.country_dict[self.countries[index]]

    def __len__(self):
        return self.len

    def getCountryDict(self):
        country_dict = dict()
        for idx, country_name in enumerate(self.country_list, 0):
            country_dict[country_name] = idx
        return country_dict

    def idx2country(self, index):
        return self.country_list[index]

    def getCountriesNum(self):
        return self.country_num

HIDDEN_SIZE = 100
BATCH_SIZE = 256
N_LAYER = 2
N_EPOCHS = 50
N_CHARS = 128  # ASCII character set

trainSet = NameDataset(is_train_set=True)
trainLoader = DataLoader(trainSet, batch_size=BATCH_SIZE, shuffle=True)
testSet = NameDataset(is_train_set=False)
testLoader = DataLoader(testSet, batch_size=BATCH_SIZE, shuffle=False)
N_COUNTRY = trainSet.getCountriesNum()

class RNNClassifier(torch.nn.Module):
    def __init__(self, input_size, hidden_size, output_size, n_layers=1, bidirectional=True):
        super(RNNClassifier, self).__init__()
        self.hidden_size = hidden_size
        self.n_layers = n_layers
        self.n_directions = 2 if bidirectional else 1
        self.embedding = torch.nn.Embedding(input_size, hidden_size)
        self.gru = torch.nn.GRU(hidden_size, hidden_size, n_layers, bidirectional=bidirectional)
        self.fc = torch.nn.Linear(hidden_size * self.n_directions, output_size)

    def _init_hidden(self, batch_size):
        hidden = torch.zeros(self.n_layers * self.n_directions, batch_size, self.hidden_size)
        return hidden

    def forward(self, input, seq_lengths):
        input = input.t()  # (batch, seq_len) -> (seq_len, batch)
        batch_size = input.size(1)
        hidden = self._init_hidden(batch_size)
        embedding = self.embedding(input)  # (seq_len, batch, hidden_size)
        # Pack the padded batch; seq_lengths must be sorted in descending order.
        gru_input = torch.nn.utils.rnn.pack_padded_sequence(embedding, seq_lengths)
        output, hidden = self.gru(gru_input, hidden)
        if self.n_directions == 2:
            # Concatenate the final hidden states of both directions of the last layer.
            hidden_cat = torch.cat([hidden[-1], hidden[-2]], dim=1)
        else:
            hidden_cat = hidden[-1]
        fc_output = self.fc(hidden_cat)
        return fc_output

def name2list(name):
    arr = [ord(c) for c in name]  # characters to ASCII codes
    return arr, len(arr)

def make_tensors(names, countries):
    sequences_and_lengths = [name2list(name) for name in names]
    name_sequences = [s1[0] for s1 in sequences_and_lengths]
    seq_lengths = torch.LongTensor([s1[1] for s1 in sequences_and_lengths])
    countries = countries.long()

    # Zero-pad every name to the length of the longest one in the batch.
    seq_tensor = torch.zeros(len(name_sequences), seq_lengths.max()).long()
    for idx, (seq, seq_len) in enumerate(zip(name_sequences, seq_lengths), 0):
        seq_tensor[idx, :seq_len] = torch.LongTensor(seq)

    # Sort by length in descending order, as pack_padded_sequence expects.
    seq_lengths, perm_idx = seq_lengths.sort(dim=0, descending=True)
    seq_tensor = seq_tensor[perm_idx]
    countries = countries[perm_idx]
    return seq_tensor, seq_lengths, countries

classifier = RNNClassifier(N_CHARS, HIDDEN_SIZE, N_COUNTRY, N_LAYER)
criterion = torch.nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(classifier.parameters(), lr=0.001)

def trainModel():
    def time_since(since):
        s = time.time() - since
        m = math.floor(s / 60)
        s -= m * 60
        return '%dm %ds' % (m, s)

    total_loss = 0
    for i, (names, countries) in enumerate(trainLoader, 1):
        inputs, seq_lengths, target = make_tensors(names, countries)
        output = classifier(inputs, seq_lengths)
        loss = criterion(output, target)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_loss += loss.item()
        if i % 10 == 0:
            print(f'[{time_since(start)}] Epoch {epoch} ', end='')
            print(f'[{i * len(inputs)}/{len(trainSet)}] ', end='')
            print(f'loss={total_loss / (i * len(inputs))}')
    return total_loss

def testModel():
    correct = 0
    total = len(testSet)
    print("evaluating trained model ... ")
    with torch.no_grad():
        for i, (names, countries) in enumerate(testLoader):
            inputs, seq_lengths, target = make_tensors(names, countries)
            output = classifier(inputs, seq_lengths)
            pred = output.max(dim=1, keepdim=True)[1]
            correct += pred.eq(target.view_as(pred)).sum().item()
        percent = '%.2f' % (100 * correct / total)
        print(f'Test set: Accuracy {correct}/{total} {percent}%')
    return correct / total

start = time.time()
print("Training for %d epochs..." % N_EPOCHS)
acc_list = []
for epoch in range(1, N_EPOCHS + 1):
    trainModel()
    acc = testModel()
    acc_list.append(acc)

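11. LSTM

import torch
import torch.nn as nn

rnn = nn.LSTM(10, 20, 2)        # input_size=10, hidden_size=20, num_layers=2
input = torch.randn(5, 3, 10)   # [seq_len, batch, input_size]
h0 = torch.randn(2, 3, 20)      # [num_layers, batch, hidden_size]
c0 = torch.randn(2, 3, 20)      # [num_layers, batch, hidden_size]
output, (hn, cn) = rnn(input, (h0, c0))
print(output.size(), hn.size(), cn.size())  # [5, 3, 20] [2, 3, 20] [2, 3, 20]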

12. Bidirectional GRU

# GRUCell: a single time step.
import torch
from torch import nn

batch = 4
input_size = 32
hidden_size = 128

G = nn.GRUCell(input_size=input_size, hidden_size=hidden_size)
inputs = torch.ones([batch, input_size])
hidden = torch.ones([batch, hidden_size])
print(inputs.shape)  # [batch, input_size]
print(hidden.shape)  # [batch, hidden_size]
output = G(input=inputs, hx=hidden)
print(output.shape)  # [batch, hidden_size]

# Multi-layer bidirectional GRU over a whole sequence.
import torch
from torch import nn

batch = 10
seq_len = 32
input_size = 64
hidden_size = 256
num_layers = 4

G = nn.GRU(input_size=input_size, hidden_size=hidden_size, num_layers=num_layers, bidirectional=True, batch_first=True)
inputs = torch.ones(batch, seq_len, input_size)       # input sequence: [batch, seq_len, input_size]
hid = torch.ones(num_layers*2, batch, hidden_size)    # [num_layers*2, batch, hidden_size]
pri = G(input=inputs, hx=hid)
print(pri[0].shape)  # output sequence (both directions concatenated): torch.Size([10, 32, 512]) = [batch, seq_len, hidden_size*2]
print(pri[1].shape)  # final hidden states: torch.Size([8, 10, 256]) = [2*num_layers, batch, hidden_size]
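
How the two return values of a bidirectional GRU relate can be checked directly. In the sketch below, the last two rows of the final hidden state are the forward and backward states of the top layer, and they reappear at opposite ends of the output sequence. The sizes are arbitrary small values chosen for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)
hidden_size = 8
gru = nn.GRU(input_size=4, hidden_size=hidden_size, num_layers=2,
             bidirectional=True, batch_first=True)
x = torch.randn(3, 5, 4)      # [batch, seq_len, input_size]
output, h_n = gru(x)          # initial hidden state defaults to zeros

# Forward direction finishes at the last time step,
# backward direction finishes at the first time step.
forward_last = output[:, -1, :hidden_size]
backward_last = output[:, 0, hidden_size:]
fwd_ok = torch.allclose(forward_last, h_n[-2])
bwd_ok = torch.allclose(backward_last, h_n[-1])
print(output.shape, h_n.shape, fwd_ok, bwd_ok)
```

This is why a classifier can concatenate `h_n[-1]` and `h_n[-2]` to summarize a sequence from both directions.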

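13. Saving and loading a network

# LogisticRegressionModel is the class defined in section 3.
model = LogisticRegressionModel()
net3 = LogisticRegressionModel()

# Option 1: save and load the whole model object.
torch.save(model, 'net.pkl')
# PyTorch 2.6 and later default to weights_only=True; loading a whole pickled
# model from a trusted file then needs torch.load('net.pkl', weights_only=False).
net2 = torch.load('net.pkl')

# Option 2: save only the parameters and load them into a model of the same class.
torch.save(model.state_dict(), 'net_params.pkl')
net3.load_state_dict(torch.load('net_params.pkl'))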
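
A self-contained round trip of the parameters-only approach, as a minimal sketch: it uses a bare `torch.nn.Linear` as a stand-in model and writes to a temporary directory, both of which are assumptions made for illustration.

```python
import os
import tempfile
import torch

model = torch.nn.Linear(1, 1)
path = os.path.join(tempfile.mkdtemp(), "net_params.pkl")

# Save only the parameters, then load them into a fresh instance.
torch.save(model.state_dict(), path)
restored = torch.nn.Linear(1, 1)
restored.load_state_dict(torch.load(path))

# Compare every tensor in the two state dicts.
same = all(torch.equal(a, b) for a, b in
           zip(model.state_dict().values(), restored.state_dict().values()))
print("parameters match:", same)
```

Saving the state dict keeps the file independent of the class definition's import path, at the cost of having to construct the model before loading.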
