Word Cloud

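```python
import jieba
import matplotlib.pyplot as plt
import numpy as np
from PIL import Image
from wordcloud import WordCloud, ImageColorGenerator

# Read the source text as UTF-8 and close the file afterwards
with open(r'E:\data8.txt', "r", encoding="utf-8") as f:
    text = f.read()

# WordCloud's built-in tokenizer cannot segment Chinese, so split the text
# into words with jieba (precise mode) and join them with spaces
wordlist_after_jieba = jieba.cut(text, cut_all=False)
wl_space_split = " ".join(wordlist_after_jieba)
print(wl_space_split)

# Load the mask image; pure-white pixels are treated as "masked out"
image = Image.open(r'E:\2.jpeg')
graph = np.array(image)

# font_path must point to a font with Chinese glyphs (msyh.ttc is Microsoft
# YaHei, normally under C:\Windows\Fonts); otherwise words render as boxes
wc = WordCloud(font_path=r"msyh.ttc", background_color='white',
               max_font_size=80, mask=graph)
wc.generate(wl_space_split)

# Recolor every word using the colors of the mask image
image_color = ImageColorGenerator(graph)
wc.recolor(color_func=image_color)

wc.to_file(r'E:\wordcloud.png')

# Show the result; "健身卡" ("fitness card") is only the figure window title
plt.figure("健身卡")
plt.imshow(wc, interpolation="bilinear")
plt.axis("off")
plt.show()
```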

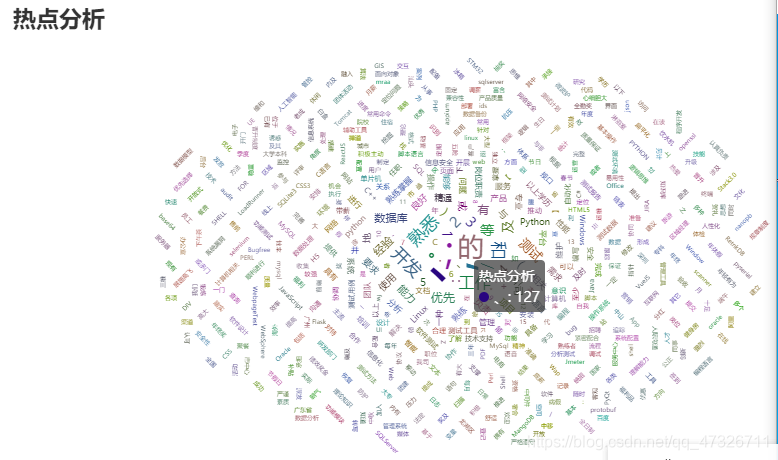

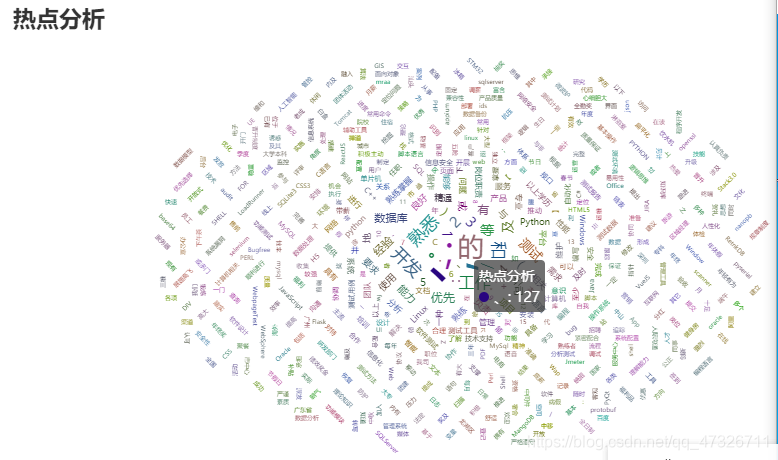
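Two details of the code above decide how the picture comes out: in the `wordcloud` package, pixels of `mask` that are pure white (255 in every channel) are excluded from drawing, and `ImageColorGenerator` gives each word the average color of the image patch under the word's bounding box (it expects an RGB or RGBA array). The plain-Python sketch below imitates both rules on a tiny 2×2 "image" so they can be checked without numpy; `drawable_cells` and `average_color` are hypothetical helper names, not library functions.

```python
# Hypothetical helpers (not part of the wordcloud package) that mimic, on a
# nested list of RGB tuples, how the mask and ImageColorGenerator treat pixels.


def drawable_cells(image):
    """True where words may be placed: every pixel except pure white
    (255, 255, 255). Near-white pixels, e.g. JPEG noise, stay drawable."""
    return [[pixel != (255, 255, 255) for pixel in row] for row in image]


def average_color(image, top, left, height, width):
    """Average the RGB values of the patch under a word's bounding box and
    format them as "rgb(r, g, b)", truncating to integers."""
    rows = image[top:top + height]
    patch = [pixel for row in rows for pixel in row[left:left + width]]
    if not patch:
        raise ValueError("patch lies outside the image")
    r, g, b = (sum(p[i] for p in patch) // len(patch) for i in range(3))
    return f"rgb({r}, {g}, {b})"


image = [
    [(255, 255, 255), (255, 0, 0)],
    [(0, 0, 255), (255, 255, 255)],
]
print(drawable_cells(image))  # [[False, True], [True, False]]
print(average_color(image, 0, 0, 2, 2))  # rgb(191, 127, 191)
```

In practice this means a JPEG mask whose background is only almost white will not mask anything out; saving the mask as PNG with an exact white background avoids that.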

```python
import sqlite3
from collections import Counter

import jieba
import pandas as pd
import pyecharts.options as opts
from pyecharts.charts import WordCloud

# Load the recruitment table from the SQLite database, then close the connection
conn = sqlite3.connect(r'C:\Users\JSJSYS\Desktop\分词\数据\数据\recruit.db')
sql = 'select * from recruit'
df = pd.read_sql(sql, conn)
conn.close()

# Concatenate the job descriptions of the first 10 rows (skipping empty ones)
data = df['job_detail'][:10]
data_str = ''.join(data.dropna().astype(str))

# Segment with jieba and count how often each token occurs
result_cut = jieba.cut(data_str)
result = Counter(result_cut)

# pyecharts expects a list of (word, value) pairs
items_list = list(result.items())

# "热点分析" means "hotspot analysis"; the chart is written to an HTML file
(
    WordCloud()
    .add(series_name="热点分析", data_pair=items_list, word_size_range=[6, 66])
    .set_global_opts(
        title_opts=opts.TitleOpts(
            title="热点分析", title_textstyle_opts=opts.TextStyleOpts(font_size=23)
        ),
        tooltip_opts=opts.TooltipOpts(is_show=True),
    )
    .render("wordcloud1.html")
)
```
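`items_list` above holds every jieba token, including spaces, line breaks and punctuation, and each of them becomes a word in the chart. The sketch below is plain Python: `build_data_pair`, the punctuation set and the default `top_n=100` are illustrative assumptions. It keeps only the most frequent real words, then shows a linear mapping of counts onto `word_size_range=[6, 66]`. The linear rule is an assumption about how the ECharts word-cloud extension behind pyecharts scales font sizes, not a pyecharts function.

```python
import string
from collections import Counter

# Illustrative punctuation filter: ASCII punctuation plus some common
# full-width Chinese marks (an assumption; extend as needed).
PUNCT = set(string.punctuation) | set("，。！？；：、“”‘’（）《》【】…")


def build_data_pair(tokens, top_n=100):
    """Count tokens and return the top_n (word, count) pairs, skipping
    whitespace-only tokens and tokens made up entirely of punctuation."""
    counts = Counter(
        t for t in tokens
        if t.strip() and not all(ch in PUNCT for ch in t)
    )
    return counts.most_common(top_n)


def font_size(value, min_value, max_value, size_range=(6, 66)):
    """Linearly map a count from [min_value, max_value] onto size_range.
    This is an assumed scaling rule; when all counts are equal the sketch
    returns the midpoint of the range."""
    low, high = size_range
    if max_value == min_value:
        return (low + high) / 2
    return low + (value - min_value) * (high - low) / (max_value - min_value)


tokens = ["Python", "，", "数据", "\n", "Python", "分析", "数据", "Python", " "]
pairs = build_data_pair(tokens, top_n=3)
print(pairs)  # [('Python', 3), ('数据', 2), ('分析', 1)]

counts = [c for _, c in pairs]
lo, hi = min(counts), max(counts)
print([(w, font_size(c, lo, hi)) for w, c in pairs])
# [('Python', 66.0), ('数据', 36.0), ('分析', 6.0)]
```

Passing the result of `build_data_pair(jieba.cut(data_str))` as `data_pair=` instead of `items_list` would keep the chart to the most frequent meaningful words.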

Stopword Processing

```python
# Paths to the HIT stopword list, the input text and the output file
file_stop = r'C:\Users\JSJSYS\Desktop\分词11\hit_stopwords.txt'
file_text = r'C:\Users\JSJSYS\Desktop\分词11\data.txt'
file_result = r'C:\Users\JSJSYS\Desktop\分词11\result.txt'

# Load the stopwords into a set for fast lookups;
# 'utf-8-sig' also removes a leading BOM if the file has one
standard_stop = set()
with open(file_stop, 'r', encoding='utf-8-sig') as f:
    for line in f:
        standard_stop.update(line.split())

# data.txt is expected to be segmented already (words separated by spaces);
# keep every word that is not a stopword
after_text = []
with open(file_text, 'r', encoding='utf-8-sig') as f:
    for line in f:
        for word in line.split():
            if word not in standard_stop:
                after_text.append(word)

# Write the remaining words as UTF-8, separated by spaces
with open(file_result, 'w', encoding='utf-8') as f:
    f.write(" ".join(after_text))
```
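The stopword filtering above can also be packaged as two small functions over in-memory data, which makes it easy to test and to reuse before counting or drawing. This is a sketch that assumes the text is already split into tokens (for raw Chinese text the tokens would come from a segmenter such as `jieba.lcut`); `load_stopwords` and `remove_stopwords` are hypothetical names.

```python
def load_stopwords(lines):
    """Build a stopword set from an iterable of lines (e.g. an open file).
    Each line may hold one or more whitespace-separated stopwords; a leading
    BOM is stripped in case the file was read without 'utf-8-sig'."""
    stopwords = set()
    for line in lines:
        stopwords.update(line.lstrip("\ufeff").split())
    return stopwords


def remove_stopwords(tokens, stopwords):
    """Return the tokens that are not stopwords, preserving their order.
    Whitespace-only tokens (which jieba emits for spaces) are dropped too."""
    return [t for t in tokens if t.strip() and t not in stopwords]


stop = load_stopwords(["\ufeff的\n", "了 和\n", "\n"])
tokens = ["我们", "的", "数据", " ", "分析", "了"]
print(remove_stopwords(tokens, stop))  # ['我们', '数据', '分析']
```

The filtered list can be joined with spaces for `WordCloud.generate`, or passed through `collections.Counter` to get frequencies for `WordCloud.generate_from_frequencies` or for the pyecharts `data_pair`.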
