Oracle configuration, following the official documentation

The Oracle configuration is as follows:

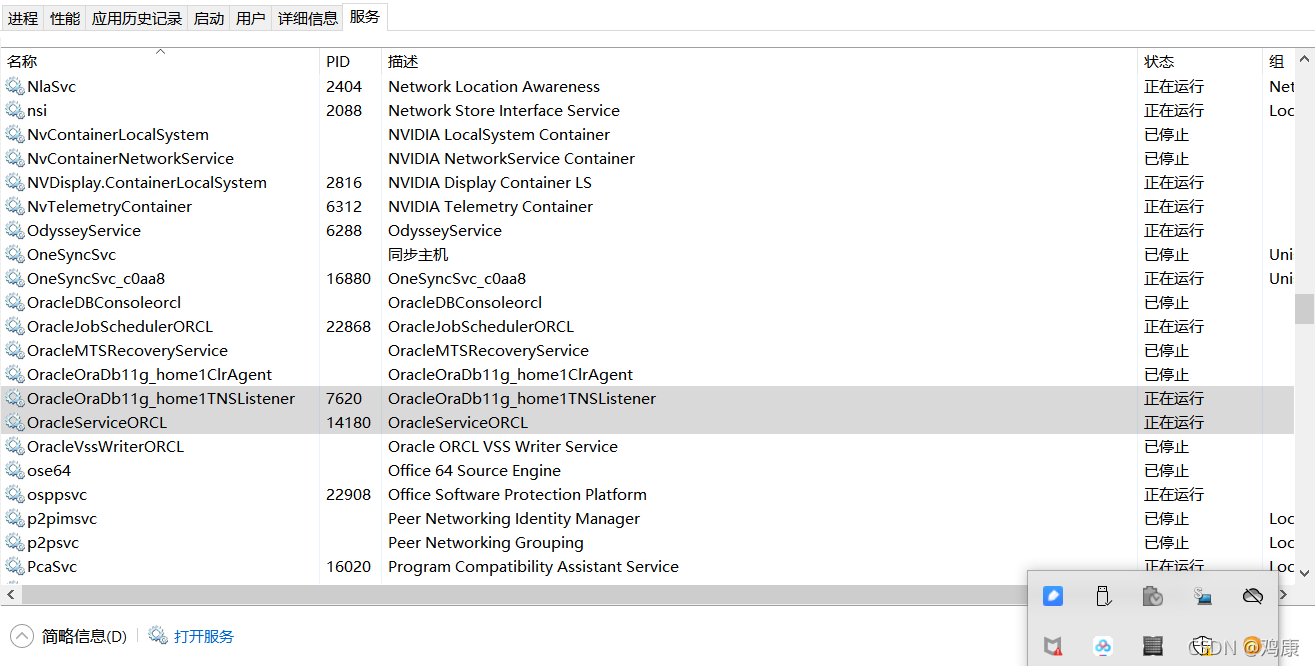

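Before connecting to Oracle, these two services must be running.

Create the corresponding directories in advance, then run the following commands from a cmd prompt.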

-- Connect to the database as DBA

sqlplus sys/oracleadmin AS SYSDBA

-- Enable log archiving

alter system set db_recovery_file_dest_size = 10G;

alter system set db_recovery_file_dest = 'F:\ORACLE\db_recovery_file_dest' scope=spfile;

shutdown immediate;

startup mount;

alter database archivelog;

alter database open;

-- Check whether log archiving is enabled

archive log list;

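-- Enable supplemental logging for the captured database so that change data capture can record the state of each row before it was changed. The statement below configures this at the database level.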

ALTER DATABASE ADD SUPPLEMENTAL LOG DATA;

-- Create the tablespace

CREATE TABLESPACE logminer_tbs DATAFILE 'F:\ORACLE\namespace\logminer_tbs.dbf' SIZE 25M REUSE AUTOEXTEND ON MAXSIZE UNLIMITED;

-- Create the user family and bind it to the tablespace LOGMINER_TBS

CREATE USER family IDENTIFIED BY chickenkang DEFAULT TABLESPACE LOGMINER_TBS QUOTA UNLIMITED ON LOGMINER_TBS;

-- Grant the DBA role to the family user

grant connect,resource,dba to family;

-- Also grant the following privileges

GRANT CREATE SESSION TO family;

GRANT SELECT ON V_$DATABASE to family;

GRANT FLASHBACK ANY TABLE TO family;

GRANT SELECT ANY TABLE TO family;

GRANT SELECT_CATALOG_ROLE TO family;

GRANT EXECUTE_CATALOG_ROLE TO family;

GRANT SELECT ANY TRANSACTION TO family;

GRANT EXECUTE ON SYS.DBMS_LOGMNR TO family;

GRANT SELECT ON V_$LOGMNR_CONTENTS TO family;

GRANT CREATE TABLE TO family;

GRANT LOCK ANY TABLE TO family;

GRANT ALTER ANY TABLE TO family;

GRANT CREATE SEQUENCE TO family;

GRANT EXECUTE ON DBMS_LOGMNR TO family;

GRANT EXECUTE ON DBMS_LOGMNR_D TO family;

GRANT SELECT ON V_$LOG TO family;

GRANT SELECT ON V_$LOG_HISTORY TO family;

GRANT SELECT ON V_$LOGMNR_LOGS TO family;

GRANT SELECT ON V_$LOGMNR_CONTENTS TO family;

GRANT SELECT ON V_$LOGMNR_PARAMETERS TO family;

GRANT SELECT ON V_$LOGFILE TO family;

GRANT SELECT ON V_$ARCHIVED_LOG TO family;

GRANT SELECT ON V_$ARCHIVE_DEST_STATUS TO family;

-- Enable supplemental logging on the user table; create the user table in Oracle first, then run this statement

ALTER TABLE FAMILY."user" ADD SUPPLEMENTAL LOG DATA (ALL) COLUMNS;

One of the commands above does not work; for a fix, see this other blog post: https://blog.youkuaiyun.com/eyeofeagle/article/details/119180015

Create the user table

Flink program
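To confirm that the setup took effect, the same checks can be run from Java over JDBC. The following is only a minimal sketch: it assumes `ORCL` is the instance SID (hence the `host:port:SID` URL form), reuses the `family` credentials created above, and expects the `ojdbc10` driver on the classpath. The class name `OracleCdcPreflight` and its helper methods are hypothetical names chosen for illustration.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

class OracleCdcPreflight {

    // Builds a thin-driver JDBC URL in the host:port:SID form (assumes a SID, not a service name).
    static String jdbcUrl(String host, int port, String sid) {
        return "jdbc:oracle:thin:@" + host + ":" + port + ":" + sid;
    }

    // True when archiving is on and minimal supplemental logging is enabled.
    static boolean readyForCdc(String logMode, String supplementalLogDataMin) {
        boolean archiving = "ARCHIVELOG".equals(logMode);
        boolean suppLogging = "YES".equals(supplementalLogDataMin)
                || "IMPLICIT".equals(supplementalLogDataMin);
        return archiving && suppLogging;
    }

    public static void main(String[] args) {
        // Host, port, SID, and credentials mirror the values used in this post.
        String url = jdbcUrl("localhost", 1521, "ORCL");
        String sql = "SELECT LOG_MODE, SUPPLEMENTAL_LOG_DATA_MIN FROM V$DATABASE";
        try (Connection conn = DriverManager.getConnection(url, "family", "chickenkang");
             Statement stmt = conn.createStatement();
             ResultSet rs = stmt.executeQuery(sql)) {
            if (rs.next()) {
                String logMode = rs.getString("LOG_MODE");
                String suppLog = rs.getString("SUPPLEMENTAL_LOG_DATA_MIN");
                System.out.println("LOG_MODE=" + logMode
                        + ", SUPPLEMENTAL_LOG_DATA_MIN=" + suppLog);
                System.out.println(readyForCdc(logMode, suppLog)
                        ? "Database looks ready for LogMiner-based capture"
                        : "Archiving or supplemental logging is not enabled yet");
            }
        } catch (SQLException e) {
            // Reached when the driver is missing, the listener is down, or the login fails.
            System.err.println("Could not query V$DATABASE: " + e.getMessage());
        }
    }
}
```

If the database is registered under a service name rather than a SID, the URL would instead take the `jdbc:oracle:thin:@//host:port/service` form.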

pom.xml

<properties>

<java.version>1.8</java.version>

<maven.compiler.source>${java.version}</maven.compiler.source>

<maven.compiler.target>${java.version}</maven.compiler.target>

<flink.version>1.13.0</flink.version>

<scala.version>2.12</scala.version>

</properties>

<dependencies>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-planner-blink_${scala.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>com.ververica</groupId>

<artifactId>flink-connector-oracle-cdc</artifactId>

<version>2.1.0</version>

</dependency>

<dependency>

<groupId>com.oracle.database.jdbc</groupId>

<artifactId>ojdbc10</artifactId>

<version>19.10.0.0</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java_${scala.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients_${scala.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-cep_${scala.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-java</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-json</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.68</version>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<version>1.18.20</version>

</dependency>

<!-- Flink logs through slf4j by default, which acts as a logging interface; here we use log4j as the

concrete logging implementation -->

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-api</artifactId>

<version>1.7.25</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

<version>1.7.25</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-to-slf4j</artifactId>

<version>2.14.0</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-assembly-plugin</artifactId>

<version>3.0.0</version>

<configuration>

<descriptorRefs>

<descriptorRef>jar-with-dependencies</descriptorRef>

</descriptorRefs>

</configuration>

<executions>

<execution>

<id>make-assembly</id>

<phase>package</phase>

<goals>

<goal>single</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</build>

OracleSourceExample.java

import com.ververica.cdc.connectors.oracle.table.StartupOptions;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.source.SourceFunction;

import com.ververica.cdc.debezium.JsonDebeziumDeserializationSchema;

import com.ververica.cdc.connectors.oracle.OracleSource;

public class OracleSourceExample {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

SourceFunction<String> sourceFunction = OracleSource.<String>builder()

.hostname("localhost")

.port(1521)

.database("ORCL") // search online for how to check which database you are in

.schemaList("family") // schema to monitor

//.tableList("inventory.products") // monitor products table

.username("family")

.password("chickenkang")

.deserializer(new JsonDebeziumDeserializationSchema()) // converts SourceRecord to JSON String

.startupOptions(StartupOptions.initial())

.build();

DataStreamSource<String> stringDataStreamSource = env.addSource(sourceFunction);

stringDataStreamSource.print(); // use parallelism 1 for sink to keep message ordering

env.execute("JOB");

}

}

Effect of inserting, deleting, and updating rows in the table

For more details, see:
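Each record printed by the job is a Debezium-style JSON string whose `op` field tells the kind of change: `r` for a snapshot read, `c` for an insert, `u` for an update, and `d` for a delete. The sketch below maps that code to a readable label using only the standard library. The class name `ChangeEventOp`, the sample event, and its column names are made up for illustration, and the regex is a shortcut: in a real job, a JSON parser such as the fastjson dependency already in the pom would be the more robust choice, since a table column that is itself named `op` could confuse the pattern.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class ChangeEventOp {

    // Matches the first "op":"x" pair in the event JSON.
    private static final Pattern OP = Pattern.compile("\"op\"\\s*:\\s*\"([a-z])\"");

    // Translates the Debezium operation code into a human-readable label.
    static String describe(String eventJson) {
        Matcher m = OP.matcher(eventJson);
        if (!m.find()) {
            return "unknown";
        }
        switch (m.group(1)) {
            case "r": return "snapshot read";
            case "c": return "insert";
            case "u": return "update";
            case "d": return "delete";
            default:  return "unknown";
        }
    }

    public static void main(String[] args) {
        // Hypothetical, heavily trimmed event; real events also carry source metadata.
        String sample = "{\"before\":null,\"after\":{\"ID\":1,\"NAME\":\"a\"},\"op\":\"c\"}";
        System.out.println(describe(sample)); // prints "insert"
    }
}
```

In the Flink job, `describe` could be applied to the stream with a `map` call before `print()` to make the insert, update, and delete events easier to tell apart.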

https://debezium.io/documentation/reference/1.4/connectors/oracle.html#oracle-create-users-logminer
