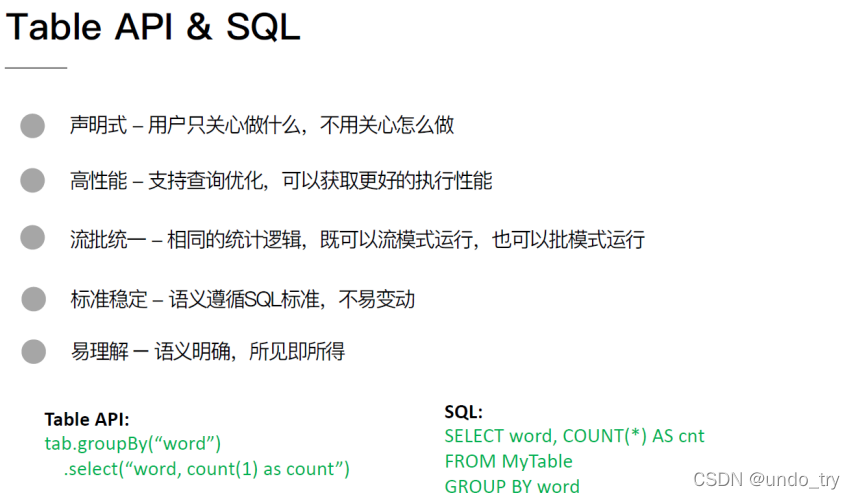

Flink之Table API& SQL

自 2015 年开始,阿里巴巴开始调研开源流计算引擎,最终决定基于 Flink 打造新一代计算引擎,针对 Flink 存在的不足进行优化和改进,并且在 2019 年初将最终代码开源,也就是Blink。Blink 在原来的 Flink 基础上最显著的一个贡献就是 Flink SQL 的实现。

官网地址:https://nightlies.apache.org/flink/flink-docs-release-1.12/dev/table/common.html

注意:目前FlinkSQL性能不如SparkSQL,未来FlinkSQL可能会越来越好

(1)将DataStream注册为Table和View并进行SQL统计

package com.flinksql;

import lombok.AllArgsConstructor;

import lombok.Data;

import lombok.NoArgsConstructor;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.EnvironmentSettings;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import java.util.Arrays;

import static org.apache.flink.table.api.Expressions.$;

/**

* 将DataStream注册为Table和View并进行SQL统计

*/

public class _01_Flinksql {

public static void main(String[] args) throws Exception {

// TODO 0.准备环境

EnvironmentSettings settings = EnvironmentSettings

.newInstance()

.useBlinkPlanner()

.inStreamingMode()

.build();

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env, settings);

// TODO 1.Source

DataStream<Order> orderA = env.fromCollection(Arrays.asList(

new Order(1L, "beer", 3),

new Order(1L, "diaper", 4),

new Order(3L, "rubber", 2)));

DataStream<Order> orderB = env.fromCollection(Arrays.asList(

new Order(2L, "pen", 3),

new Order(2L, "rubber", 3),

new Order(4L, "beer", 1)));

// TODO 2.将DataStream转换为table、view,然后进行查询

Table tableA = tableEnv.fromDataStream(orderA, $("user"), $("product"), $("amount"));

tableEnv.createTemporaryView("viewB",orderB,$("user"), $("product"), $("amount"));

// 查询tableA中amount > 2 viewB > 1的数据(合并)

Table resTable = tableEnv.sqlQuery("SELECT * FROM " + tableA + " where amount > 2\n" +

"UNION\n" +

"SELECT * FROM viewB where amount > 1");

// 将表准换为流

// DataStream<Order> orderDataStream = tableEnv.toAppendStream(resTable, Order.class);

DataStream<Tuple2<Boolean, Order>> orderDataStream = tableEnv.toRetractStream(resTable, Order.class);

// TODO 3.sink

orderDataStream.print("orderDataStream----");

// TODO 4.execute

env.execute("_01_Flinksql");

}

@Data

@NoArgsConstructor

@AllArgsConstructor

public static class Order {

public Long user;

public String product;

public int amount;

}

}

(2)使用SQL和Table两种方式对DataStream中的单词进行统计

SQL风格:

package com.flinksql;

import lombok.AllArgsConstructor;

import lombok.Data;

import lombok.NoArgsConstructor;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.EnvironmentSettings;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import static org.apache.flink.table.api.Expressions.$;

/**

* 使用SQL和Table两种方式对DataStream中的单词进行统计

*/

public class _02_Flinksql {

public static void main(String[] args) throws Exception {

// TODO 0.准备环境

EnvironmentSettings settings = EnvironmentSettings

.newInstance()

.useBlinkPlanner()

.inStreamingMode()

.build();

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env, settings);

// TODO 1.Source

DataStream<WordCount> input = env.fromElements(

new WordCount("Hello", 1),

new WordCount("World", 1),

new WordCount("Hello", 1)

);

// TODO 2.将DataStream转换为view,然后进行查询

tableEnv.createTemporaryView("wc",input,$("word"),$("frequency"));

// select word,sum(frequency) from wc group by word

Table resTable = tableEnv.sqlQuery("select word,sum(frequency) as frequency from wc group by word");

DataStream<Tuple2<Boolean, WordCount>> resDS = tableEnv.toRetractStream(resTable, WordCount.class);

// TODO 3.sink

resDS.print();

// TODO 4.execute

env.execute("_02_Flinksql");

}

@Data

@NoArgsConstructor

@AllArgsConstructor

public static class WordCount {

public String word;

public long frequency;

}

}

API风格:

package com.flinksql;

import lombok.AllArgsConstructor;

import lombok.Data;

import lombok.NoArgsConstructor;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.EnvironmentSettings;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import static org.apache.flink.table.api.Expressions.$;

/**

* 使用SQL和Table两种方式对DataStream中的单词进行统计

*/

public class _02_Flinksql_DSL {

public static void main(String[] args) throws Exception {

// TODO 0.准备环境

EnvironmentSettings settings = EnvironmentSettings

.newInstance()

.useBlinkPlanner()

.inStreamingMode()

.build();

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env, settings);

// TODO 1.Source

DataStream<WordCount> input = env.fromElements(

new WordCount("Hello", 1),

new WordCount("World", 1),

new WordCount("Hello", 1)

);

// TODO 2.将DataStream转换为view,然后进行查询

Table table = tableEnv.fromDataStream(input, $("word"), $("frequency"));

// DSL

Table resTable = table

.groupBy($("word"))

.select($("word"), $("frequency").sum())

.as("word","frequency");

// 准换为DS

DataStream<Tuple2<Boolean, WordCount>> resDS =

tableEnv.toRetractStream(resTable, WordCount.class);

// TODO 3.sink

resDS.print();

// TODO 4.execute

env.execute("_02_Flinksql_DSL");

}

@Data

@NoArgsConstructor

@AllArgsConstructor

public static class WordCount {

public String word;

public long frequency;

}

}

(3)每隔5秒统计最近5秒的每个用户的订单总数、订单的最大金额、订单的最小金额

SQL风格:

package com.flinksql;

import lombok.AllArgsConstructor;

import lombok.Data;

import lombok.NoArgsConstructor;

import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.source.RichSourceFunction;

import org.apache.flink.table.api.EnvironmentSettings;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.types.Row;

import java.time.Duration;

import java.util.Random;

import java.util.UUID;

import java.util.concurrent.TimeUnit;

import static org.apache.flink.table.api.Expressions.$;

/**

* 每隔5秒统计最近5秒的每个用户的订单总数、订单的最大金额、订单的最小金额

*/

public class _03_Flinksql {

public static void main(String[] args) throws Exception {

// TODO 0.准备环境

EnvironmentSettings settings = EnvironmentSettings

.newInstance()

.useBlinkPlanner()

.inStreamingMode()

.build();

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env, settings);

// TODO 1.Source

DataStreamSource<Order> orderDS = env.addSource(new RichSourceFunction<Order>() {

private Boolean isRunning = true;

@Override

public void run(SourceContext<Order> ctx) throws Exception {

Random random = new Random();

while (isRunning) {

Order order = new Order(

UUID.randomUUID().toString(),

random.nextInt(3),

random.nextInt(101),

System.currentTimeMillis()

);

TimeUnit.SECONDS.sleep(1);

ctx.collect(order);

}

}

@Override

public void cancel() {

isRunning = false;

}

});

SingleOutputStreamOperator<Order> orderWithWatermarks = orderDS.assignTimestampsAndWatermarks(

WatermarkStrategy.<Order>forBoundedOutOfOrderness(Duration.ofSeconds(5))

.withTimestampAssigner(new SerializableTimestampAssigner<Order>() {

@Override

public long extractTimestamp(Order order, long recordTimestamp) {

return order.getCreateTime();

}

})

);

// TODO 2.将DataStream转换为view,然后进行查询

tableEnv.createTemporaryView("t_order",orderWithWatermarks,

$("orderId"), $("userId"), $("money"), $("createTime").rowtime());

// select userId,count(orderId) as cntOrder,sum(money) as sumMoney,max(money) as maxMoney ,min(money) as minMoney

// from order group by TUMBLE(rowtime, INTERVAL '5' SECOND),userId

Table table = tableEnv.sqlQuery("SELECT\n" +

"\tuserId,\n" +

"\tcount(orderId) AS cntOrder,\n" +

"\tsum(money) AS sumMoney,\n" +

"\tmax(money) AS maxMoney,\n" +

"\tmin(money) AS minMoney\n" +

"FROM\n" +

"\tt_order\n" +

"GROUP BY\n" +

"\tTUMBLE (createTime, INTERVAL '5' SECOND),\n" +

"\tuserId");

DataStream<Tuple2<Boolean, Row>> resDS = tableEnv.toRetractStream(table, Row.class);

// TODO 3.sink

resDS.print();

// TODO 4.execute

env.execute("_03_Flinksql");

}

@Data

@AllArgsConstructor

@NoArgsConstructor

public static class Order {

private String orderId;

private Integer userId;

private Integer money;

private Long createTime;

}

}

API风格:

package com.flinksql;

import lombok.AllArgsConstructor;

import lombok.Data;

import lombok.NoArgsConstructor;

import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.source.RichSourceFunction;

import org.apache.flink.table.api.EnvironmentSettings;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.Tumble;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.types.Row;

import java.time.Duration;

import java.util.Random;

import java.util.UUID;

import java.util.concurrent.TimeUnit;

import static org.apache.flink.table.api.Expressions.$;

import static org.apache.flink.table.api.Expressions.lit;

/**

* 每隔5秒统计最近5秒的每个用户的订单总数、订单的最大金额、订单的最小金额

*/

public class _03_Flinksql_DSL {

public static void main(String[] args) throws Exception {

// TODO 0.准备环境

EnvironmentSettings settings = EnvironmentSettings

.newInstance()

.useBlinkPlanner()

.inStreamingMode()

.build();

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env, settings);

// TODO 1.Source

DataStreamSource<Order> orderDS = env.addSource(new RichSourceFunction<Order>() {

private Boolean isRunning = true;

@Override

public void run(SourceContext<Order> ctx) throws Exception {

Random random = new Random();

while (isRunning) {

Order order = new Order(

UUID.randomUUID().toString(),

random.nextInt(3),

random.nextInt(101),

System.currentTimeMillis()

);

TimeUnit.SECONDS.sleep(1);

ctx.collect(order);

}

}

@Override

public void cancel() {

isRunning = false;

}

});

SingleOutputStreamOperator<Order> orderWithWatermarks = orderDS.assignTimestampsAndWatermarks(

WatermarkStrategy.<Order>forBoundedOutOfOrderness(Duration.ofSeconds(5))

.withTimestampAssigner(new SerializableTimestampAssigner<Order>() {

@Override

public long extractTimestamp(Order order, long recordTimestamp) {

return order.getCreateTime();

}

})

);

// TODO 2.将DataStream转换为view,然后进行查询

tableEnv.createTemporaryView("t_order",orderWithWatermarks,

$("orderId"), $("userId"), $("money"), $("createTime").rowtime());

Table resTable = tableEnv.from("t_order")

.window(

Tumble.over(lit(5).second())

.on($("createTime"))

.as("window")

)

.groupBy($("window"), $("userId"))

.select(

$("userId"),

$("orderId").count(),

$("money").max(),

$("money").min()

)

.as("userId", "cnt", "max", "min");

DataStream<Tuple2<Boolean, Row>> resDS = tableEnv.toRetractStream(resTable, Row.class);

// TODO 3.sink

resDS.print();

// TODO 4.execute

env.execute("_03_Flinksql_DSL");

}

@Data

@AllArgsConstructor

@NoArgsConstructor

public static class Order {

private String orderId;

private Integer userId;

private Integer money;

private Long createTime;

}

}

(4)从Kafka中消费数据并过滤出状态为success的数据再写入到Kafka

package com.flinksql;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.EnvironmentSettings;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.TableResult;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

/**

* 从Kafka中消费数据并过滤出状态为success的数据再写入到Kafka

*

* {"user_id": "1", "page_id":"1", "status": "success"}

* {"user_id": "1", "page_id":"1", "status": "success"}

* {"user_id": "1", "page_id":"1", "status": "success"}

* {"user_id": "1", "page_id":"1", "status": "success"}

* {"user_id": "1", "page_id":"1", "status": "fail"}

*/

public class _04_Flinksql {

public static void main(String[] args) throws Exception {

// TODO 0.准备环境

EnvironmentSettings settings = EnvironmentSettings

.newInstance()

.useBlinkPlanner()

.inStreamingMode()

.build();

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env, settings);

// TODO 1.Source

// https://nightlies.apache.org/flink/flink-docs-release-1.12/dev/table/connectors/kafka.html

TableResult inputTable = tableEnv.executeSql(

"CREATE TABLE input_kafka (\n" +

" `user_id` BIGINT,\n" +

" `page_id` BIGINT,\n" +

" `status` STRING\n" +

") WITH (\n" +

" 'connector' = 'kafka',\n" +

" 'topic' = 'input_kafka',\n" +

" 'properties.bootstrap.servers' = 'centos01:9092',\n" +

" 'properties.group.id' = 'testGroup',\n" +

" 'scan.startup.mode' = 'latest-offset',\n" +

" 'format' = 'json'\n" +

")"

);

// TODO 2.过滤

String sql = "select " +

"user_id," +

"page_id," +

"status " +

"from input_kafka " +

"where status = 'success'";

Table ResultTable = tableEnv.sqlQuery(sql);

// TODO 3.sink 写到kafka中

TableResult outputTable = tableEnv.executeSql(

"CREATE TABLE output_kafka (\n" +

" `user_id` BIGINT,\n" +

" `page_id` BIGINT,\n" +

" `status` STRING\n" +

") WITH (\n" +

" 'connector' = 'kafka',\n" +

" 'topic' = 'output_kafka',\n" +

" 'properties.bootstrap.servers' = 'node1:9092',\n" +

" 'format' = 'json',\n" +

" 'sink.partitioner' = 'round-robin'\n" +

")"

);

tableEnv.executeSql("insert into output_kafka select * from "+ResultTable);

// TODO 4.execute

env.execute("_04_Flinksql");

}

}

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?