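1. High availability for LVS with keepalived

Introduction:

LVS handles load balancing at the very front of the architecture. As traffic grows, the load balancer itself needs to be highly available. keepalived removes this single point of failure: if the master node goes down, service switches quickly to the backup node.

Prerequisites:

(1) Time must be synchronized across all nodes (ntpdate, chronyd)

(2) Make sure iptables and SELinux do not block the traffic

(3) Make sure the interface each node uses for cluster traffic supports multicast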
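Prerequisite (3) can be checked from the interface flags that `ip link` prints. The sketch below is only an illustration: `has_multicast_flag` is a helper name made up here, and ens33 is assumed to be the cluster interface because it is the name used in the configuration files in this post.

```shell
#!/bin/bash
# has_multicast_flag LINE
# Succeeds if LINE (one line of `ip -o link show` output) lists MULTICAST
# among the interface flags inside the <...> brackets.
has_multicast_flag() {
    case "$1" in
        *"<"*MULTICAST*">"*) return 0 ;;
        *) return 1 ;;
    esac
}

# Usage on a node (interface name is an assumption):
#   has_multicast_flag "$(ip -o link show ens33)" && echo "multicast ok"
```

The function takes the text as an argument instead of calling `ip` itself, so it can be tried against sample output before running it on a real node.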

Topology:

Install: yum -y install keepalived ipvsadm

keep1 configuration file: /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost #who receives email notifications when a failure occurs

}

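notification_email_from keepalived@localhost #sender address for the notifications

smtp_server 127.0.0.1 #SMTP server address

smtp_connect_timeout 30 #SMTP connection timeout in seconds

router_id keep1 #identifier for this node

vrrp_mcast_group4 224.1.11.111 #multicast group address, within 224.0.0.0-239.255.255.255; must match keep2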

}

vrrp_instance VI_1 {

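state MASTER #this node starts as the master

interface ens33 #network interface

virtual_router_id 111 #unique ID of this virtual router, range 1-255; must match keep2

priority 100 #priority, range 1-254, higher wins; keep2 must be lower than keep1

advert_int 1 #interval between VRRP advertisements, in seconds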

authentication {

auth_type PASS

auth_pass like5453 #shared password; must match keep2

}

virtual_ipaddress {

10.117.20.99/24 dev ens33 label ens33:0 #VIP address

}

}

virtual_server 10.117.20.99 80 {

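delay_loop 1 #check the backend hosts every second

lb_algo wrr #scheduling algorithm; wrr is weighted round robin

lb_kind DR #LVS forwarding mode

protocol TCP #protocol

sorry_server 127.0.0.1 80 #if every backend host is down, send requests to port 80 on this machine

real_server 10.117.20.225 80 {

weight 1 #weight

HTTP_GET { #health check method

url { #URL to request; the url block can be repeated several times, and by default the home page is requested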

path /index.html

status_code 200

}

nb_get_retry 3 #number of attempts per check

delay_before_retry 2 #delay before retrying, in seconds

connect_timeout 3 #connection timeout in seconds, default 5

}

}

real_server 10.117.20.226 80 {

weight 1

HTTP_GET {

url {

path /index.html

status_code 200

}

nb_get_retry 3

delay_before_retry 2

connect_timeout 3

}

}

}

keep2 configuration file: the lines that need to change are marked below; all other parameters stay the same

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id keep2 #change this

vrrp_mcast_group4 224.1.11.111

}

vrrp_instance VI_1 {

state BACKUP #change this

interface ens33

virtual_router_id 111

priority 90 #change this

advert_int 1

authentication {

auth_type PASS

auth_pass like5453

}

virtual_ipaddress {

10.117.20.99/24 dev ens33 label ens33:0

}

}

virtual_server 10.117.20.99 80 {

delay_loop 1

lb_algo wrr

lb_kind DR

protocol TCP

sorry_server 127.0.0.1 80

real_server 10.117.20.225 80 {

weight 1

HTTP_GET {

url {

path /index.html

status_code 200

}

nb_get_retry 3

delay_before_retry 2

connect_timeout 3

}

}

real_server 10.117.20.226 80 {

weight 1

HTTP_GET {

url {

path /index.html

status_code 200

}

nb_get_retry 3

delay_before_retry 2

connect_timeout 3

}

}

}
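Since keep2's file differs from keep1's in only three lines, it could also be generated instead of edited by hand. This is a minimal sketch under that assumption; `make_backup_conf` is a hypothetical helper name, and the substitutions only fit the single-instance configuration shown above.

```shell
#!/bin/bash
# make_backup_conf: read keep1's keepalived.conf on stdin and write the
# keep2 variant on stdout by rewriting the three lines that differ.
make_backup_conf() {
    sed -e 's/router_id keep1/router_id keep2/' \
        -e 's/state MASTER/state BACKUP/' \
        -e 's/priority 100/priority 90/'
}

# Usage (file names are placeholders):
#   make_backup_conf < keepalived.conf.keep1 > keepalived.conf.keep2
```

Generating the backup file this way keeps the shared values (multicast group, virtual_router_id, auth_pass, VIP) identical on both nodes, which is what the comments in keep1's file require.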

Adjust kernel parameters on the RS hosts:

#!/bin/bash

#Set kernel parameters on the RS host and add the VIP address and route

vip='10.117.20.99'

mask='255.255.255.255'

iface='lo:0'

broad='10.117.20.99'

case $1 in

start)

echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

ifconfig $iface $vip netmask $mask broadcast $broad up

route add -host $vip $iface

;;

stop)

ifconfig $iface down

echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce

;;

*)

echo "Usage: $0 start|stop"

exit 1

;;

esac

#Run this script on both RS hosts
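After running the script with start, it may be worth confirming that the four ARP parameters really hold the values the script writes. The sketch below assumes nothing beyond those values; `arp_settings_ok` is a hypothetical helper, and its optional directory argument exists only so the function can be tried against a scratch directory.

```shell
#!/bin/bash
# arp_settings_ok [CONF_DIR]
# Succeeds if arp_ignore is 1 and arp_announce is 2 for both "all" and "lo".
# CONF_DIR defaults to the real /proc path used by the script above.
arp_settings_ok() {
    local dir="${1:-/proc/sys/net/ipv4/conf}" ifc
    for ifc in all lo; do
        [ "$(cat "$dir/$ifc/arp_ignore" 2>/dev/null)" = "1" ] || return 1
        [ "$(cat "$dir/$ifc/arp_announce" 2>/dev/null)" = "2" ] || return 1
    done
    return 0
}

# Usage on an RS host:
#   arp_settings_ok && echo "ARP parameters set for DR mode"
```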

Start the services:

[root@keep2 ~]#systemctl start keepalived #start keep2 first and keep1 a little later, to watch the master/backup switchover

[root@s1 ~]#systemctl start nginx

[root@s2 ~]#systemctl start nginx

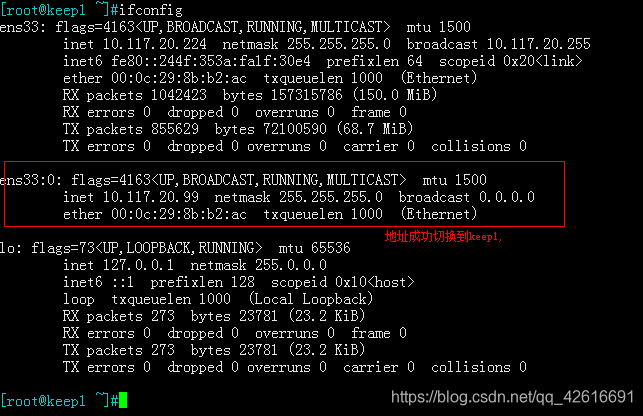

Check the VIP address on keep2:

Check the LVS status; the RS hosts were detected:

Client access test:
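One way to run the client test from a shell is to request the VIP several times and count which backend answered. The sketch assumes each RS serves a one-line index.html that ends with a newline and names the host (for example RS1 or RS2); that page content and the helper name `tally_responses` are assumptions, not taken from the original setup.

```shell
#!/bin/bash
# tally_responses: read one response body per line on stdin and print
# "<count> <body>" for each distinct body, sorted by body.
tally_responses() {
    awk '{ count[$0]++ } END { for (body in count) print count[body], body }' | sort -k2
}

# Usage from a client machine:
#   for i in $(seq 1 10); do curl -s http://10.117.20.99/index.html; done | tally_responses
```

With wrr and equal weights, the counts for the two hosts should come out roughly even while both RS hosts are up.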

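Start keep1:

The VIP address moves to keep1 automatically

Check the VIP address on keep2

Check the LVS status on keep1; the RS hosts were detected:

Shut down RS1 to test automatic removal:

Client test; requests now reach only RS2:

Shut down both RS hosts to see whether requests fall back to the error page served by the keepalived host itself:

Client access:

Finally, start both RS hosts again; both are added back automatically: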

Switching master and backup with a script:

Purpose: switch master and backup without stopping keepalived, by lowering the master's priority

1. Write the script:

[root@keep1 ~]#vim /etc/keepalived/chk_down.sh

#!/bin/bash

[[ -f /etc/keepalived/down ]] && exit 1 || exit 0

2. Define the script in the configuration file:

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id keep1

vrrp_mcast_group4 224.1.11.111

}

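#define the check

vrrp_script chk_down {

script "/etc/keepalived/chk_down.sh" #absolute path to the script

weight -10 #subtract 10 from the priority while the check fails; the result must be lower than the backup node's priority

interval 1 #run the check every second

fall 1 #consecutive failures before the check counts as failed

rise 1 #consecutive successes before the check counts as recovered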

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 111

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass like5453

}

virtual_ipaddress {

10.117.20.99/24 dev ens33 label ens33:0

}

#reference the script

track_script {

chk_down

}

}

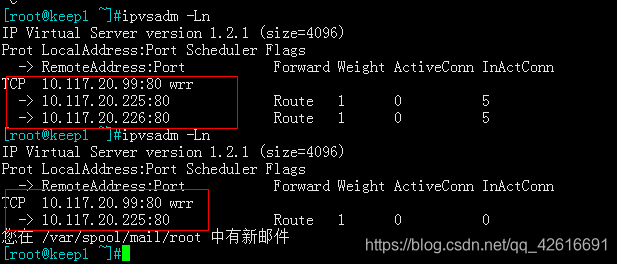

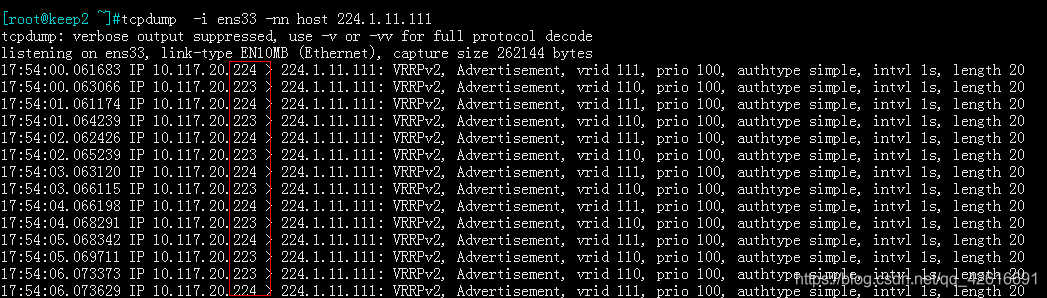

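Testing the master/backup switchover:

Start both hosts, then create a file named down in /etc/keepalived/ on keep1. The script detects the file, keep1's priority drops from 100 to 90, and keep1 becomes the backup node. A packet capture on keep2 shows the address moving. Note that a backup takes over only when its own priority is strictly higher than the master's, so keep2's priority of 90 from the earlier configuration would need to be raised (for example to 95) for the switchover to happen.

To switch the master back, just delete the down file: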
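The priority arithmetic behind this test can be written out as a small model. This is a sketch of the negative-weight case only, not keepalived's actual code; both function names are invented for illustration, and the takeover rule reflects the standard VRRP comparison with preemption enabled.

```shell
#!/bin/bash
# effective_priority BASE WEIGHT CHECK_STATUS
# CHECK_STATUS is the exit status of the tracked script (0 = success).
# Models only a negative weight: it is added to the base priority while
# the script is failing, and ignored while the script succeeds.
effective_priority() {
    local base="$1" weight="$2" status="$3"
    if [ "$status" -ne 0 ] && [ "$weight" -lt 0 ]; then
        echo $(( base + weight ))
    else
        echo "$base"
    fi
}

# backup_takes_over MASTER_PRIO BACKUP_PRIO
# Succeeds only when the backup's priority is strictly higher than the
# priority the master is currently advertising.
backup_takes_over() {
    [ "$2" -gt "$1" ]
}

# Usage:
#   effective_priority 100 -10 1      # down file present: prints 90
#   backup_takes_over 90 95 && echo "keep2 becomes master"
```

Running the numbers this way before deploying makes it easy to see whether a given weight is large enough for the backup's priority.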

====================================================================

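2. High availability with nginx + keepalived

Preparation: install nginx and keepalived on both hosts

1. keepalived configuration file: vim /etc/keepalived/keepalived.conf #the two hosts use the same configuration except for the master/backup parameters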

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id keep1 #change to keep2 on the backup server

vrrp_mcast_group4 224.1.11.111

}

vrrp_instance VI_1 {

state MASTER #change to BACKUP on the backup server

interface ens33

virtual_router_id 111

priority 100 #change to 90 on the backup server

advert_int 1

authentication {

auth_type PASS

auth_pass like5453

}

virtual_ipaddress {

10.117.20.99/24 dev ens33 label ens33:0

}

}

2. Edit the nginx configuration file: vim /etc/nginx/nginx.conf #same on both nginx hosts; define a backend server group in the http block, then reference it in a location
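The nginx configuration itself is not reproduced in the text, so the following is only a guess at its general shape: an upstream group plus a location that proxies to it. The group name websrvs and the backend addresses in the usage line are assumptions (the addresses are the RS hosts from section 1), and `print_upstream_conf` is a hypothetical helper that just prints the snippet.

```shell
#!/bin/bash
# print_upstream_conf NAME SERVER...
# Prints an nginx upstream group and a server block whose location
# proxies every request to that group.
print_upstream_conf() {
    local name="$1" s
    shift
    echo "upstream $name {"
    for s in "$@"; do
        echo "    server $s;"
    done
    echo "}"
    echo "server {"
    echo "    listen 80;"
    echo "    location / {"
    echo "        proxy_pass http://$name;"
    echo "    }"
    echo "}"
}

# Usage (group name and addresses are assumptions):
#   print_upstream_conf websrvs 10.117.20.225:80 10.117.20.226:80
```

Both blocks belong inside the http block of nginx.conf; where exactly the output is pasted or included depends on how the local nginx.conf is organized.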

3. Test:

4. Shut down server1 to test the health check:

5. Start server1 again:

=====================================================================

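3. keepalived master-master model

The configuration files below do not include virtual_server; it is configured the same way as in master/backup mode. The focus here is on how master-master mode behaves.

keep1 configuration file: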

[root@keep1 /etc/keepalived]#cat keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id keep1

vrrp_mcast_group4 224.1.11.111

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 111

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass like5453

}

virtual_ipaddress {

10.117.20.99/24 dev ens33 label ens33:0

}

}

vrrp_instance VI_2 { #change this

state BACKUP #change this

interface ens33

virtual_router_id 110 #change this

priority 95 #change this

advert_int 1

authentication {

auth_type PASS

auth_pass like #change this

}

virtual_ipaddress {

10.117.20.98/24 dev ens33 label ens33:1 #change this

}

}

keep2 configuration file:

[root@keep2 ~]#cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id keep2

vrrp_mcast_group4 224.1.11.111

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 111

priority 95

advert_int 1

authentication {

auth_type PASS

auth_pass like5453

}

virtual_ipaddress {

10.117.20.99/24 dev ens33 label ens33:0

}

}

vrrp_instance VI_2 {

state MASTER

interface ens33

virtual_router_id 110

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass like

}

virtual_ipaddress {

10.117.20.98/24 dev ens33 label ens33:1

}

}

Check the status: the master-master model is working
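In master-master mode each node should hold exactly one of the two VIPs while both are healthy: 10.117.20.99 on keep1 and 10.117.20.98 on keep2. A minimal sketch for checking this from the shell follows; `holds_vip` is a hypothetical helper, and its second argument is there only so sample output can be passed in instead of calling `ip`.

```shell
#!/bin/bash
# holds_vip VIP [ADDR_OUTPUT]
# Succeeds if VIP appears as an IPv4 address in `ip -o -4 addr show` output.
# ADDR_OUTPUT defaults to the live output of that command.
holds_vip() {
    local vip="$1" out="${2-$(ip -o -4 addr show)}"
    printf '%s\n' "$out" | grep -qF -- " inet $vip/"
}

# Usage on keep1 when both nodes are up:
#   holds_vip 10.117.20.99 && echo "VI_1 is master here"
#   holds_vip 10.117.20.98 || echo "VI_2 is held by the peer"
```

If one node is stopped, both checks should succeed on the surviving node, since it takes over the second VIP as well.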

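4. Using varnish as a caching accelerator for nginx

Preparation:

1. One varnish cache server and one nginx server

2. Disable the firewall and SELinux

3. Synchronize time on both servers: ntpdate ntp6.aliyun.com

Note: restarting varnish discards the cache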

Install varnish:

yum -y install varnish

Edit the configuration file: /etc/varnish/varnish.params

Create the directory: mkdir -pv /data/varnish/cache

Change the owner: chown varnish:varnish /data/varnish/cache

default.vcl configuration file: /etc/varnish/default.vcl

After editing default.vcl, it can be reloaded with the varnish_reload_vcl command

Start: systemctl start varnish

Test:
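A simple cache test is to request the same URL twice and inspect the response headers. The sketch below relies on Varnish 4 putting two transaction IDs in the X-Varnish header when an object is served from cache and one ID otherwise; `is_cache_hit` is a helper name invented here, and VARNISH_HOST:PORT is a placeholder for the listen address set in varnish.params.

```shell
#!/bin/bash
# is_cache_hit: read HTTP response headers on stdin; succeed if the
# X-Varnish header carries two transaction IDs (a cache hit), fail if it
# carries one ID or is missing.
is_cache_hit() {
    tr -d '\r' | awk 'tolower($1) == "x-varnish:" { hit = (NF == 3) } END { exit !hit }'
}

# Usage (host and port are placeholders):
#   curl -sI http://VARNISH_HOST:PORT/index.html | is_cache_hit && echo HIT
```

The first request after a restart is expected to miss; a repeat of the same request shortly afterwards should hit, with an Age header greater than 0.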

Admin command line: varnishadm -S /etc/varnish/secret -T 127.0.0.1:6082

5. LNMP with varnish: separating static and dynamic content

#new 4.0 format.

vcl 4.0;

backend server1 {

.host = "10.117.20.226";

.port = "80";

}

backend server2 {

.host = "10.117.20.225";

.port = "80";

}

sub vcl_recv {

if(req.url ~ "\.(html|jpeg|png)$"){

set req.backend_hint = server1;

}else{

if(req.url ~ "\.(php|js|perl)$"){

set req.backend_hint = server2;

}

}

}

Test:
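Before testing against the real servers, the routing rule in vcl_recv can be replayed in the shell to see which backend a given URL would select. This is only a model of the two regular expressions above; `pick_backend` is a hypothetical name, and "default" stands for the case where no backend_hint is set and Varnish falls back to the first backend defined in the file (server1).

```shell
#!/bin/bash
# pick_backend URL
# Applies the same two suffix patterns as vcl_recv and prints the backend
# that would be chosen, or "default" when neither pattern matches.
pick_backend() {
    if printf '%s\n' "$1" | grep -Eq '\.(html|jpeg|png)$'; then
        echo server1
    elif printf '%s\n' "$1" | grep -Eq '\.(php|js|perl)$'; then
        echo server2
    else
        echo default
    fi
}

# Usage:
#   pick_backend /index.html
#   pick_backend /app/info.php
#   pick_backend '/index.php?id=1'
```

The last usage line shows a limitation of anchoring the pattern at the end of the URL: a request with a query string no longer ends in .php, so it does not match the dynamic rule.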

Additional content:

-------------------------------------------------------------------------------

Multiple backend hosts:

Example:

#new 4.0 format.

vcl 4.0;

import directors; #module needed to define a backend group

#Default backend definition. Set this to point to your content server.

backend server1{

.host = "192.168.1.111";

.port = "80";

}

backend server2{

.host = "192.168.1.112";

.port = "80";

}

sub vcl_init {

new webserver = directors.round_robin(); #declare a group named webserver, then add the backends defined above

webserver.add_backend(server1);

webserver.add_backend(server2);

}

sub vcl_recv {

set req.backend_hint = webserver.backend(); #use the webserver group

}

--------------------------------------------------------------------

Backend health checks:

Example:

#new 4.0 format.

vcl 4.0;

#Default backend definition. Set this to point to your content server.

probe web_probe {

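.url = "/index.html" ; #URL requested by the probe

.interval = 1s; #send a probe every second

.timeout = 1s; #probe timeout

.window = 5; #consider the last 5 probes

.threshold = 3; #at least 3 of them must succeed for the backend to count as healthy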

}

backend server1{

.host = "192.168.1.111";

.port = "80";

.probe = web_probe; #use the probe defined above

}
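The interaction of .window and .threshold can be illustrated with a small model: the backend counts as healthy when enough of the most recent probes succeeded. This is a sketch of that rule only, not Varnish's implementation; `probe_healthy` is a hypothetical function and the result lists in the usage lines are made-up sample data.

```shell
#!/bin/bash
# probe_healthy WINDOW THRESHOLD RESULT...
# RESULT values are 1 (probe succeeded) or 0 (probe failed), oldest first.
# Succeeds if at least THRESHOLD of the last WINDOW results are 1.
probe_healthy() {
    local window="$1" threshold="$2" good=0 skip r
    shift 2
    skip=$(( $# - window ))   # results older than the window are ignored
    for r in "$@"; do
        if [ "$skip" -gt 0 ]; then
            skip=$(( skip - 1 ))
            continue
        fi
        [ "$r" = "1" ] && good=$(( good + 1 ))
    done
    [ "$good" -ge "$threshold" ]
}

# Usage with window 5 and threshold 3, as in web_probe:
#   probe_healthy 5 3 1 1 0 1 0 && echo healthy
#   probe_healthy 5 3 1 0 0 1 0 || echo sick
```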

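This post walks through configuring highly available load balancing with Keepalived and LVS, including master/backup switchover, health checks, and master-master mode, for high-traffic scenarios.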
