Why use a process pool?

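When writing a concurrent server, one option is to create a new process to serve each client as its connection request arrives. Creating processes dynamically like this is relatively expensive, and it slows down the response to the client.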

The concept of a process pool

Before the server starts serving requests, we create a group of processes up front and treat that group, logically, as a pool.

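When a worker process is needed to handle a newly arrived client request, we take an existing execution entity (a process) from the pool instead of calling fork to create one on demand. Picking a child process that already exists is clearly much cheaper than creating a new one. The main process can choose the child in one of two ways: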

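- The main process actively selects a child using some algorithm. The simplest and most common choices are random selection and round-robin selection, but a smarter algorithm can spread tasks more evenly across the worker processes and so reduce the overall load on the server.

- The main process and all child processes synchronize through a shared work queue (that is, the producer-consumer model).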
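The round-robin strategy from the first bullet can be sketched as a small standalone function. This is only an illustration under stated assumptions: `pick_next_worker` is a hypothetical helper name, and a pid of -1 is assumed to mark a worker that has already exited, which is the convention the pool code later in this post uses.

```cpp
#include <cassert>
#include <sys/types.h>
#include <vector>

// Hypothetical helper: returns the index of the next live worker after
// `last`, scanning in round-robin order, or -1 if every worker has exited.
// A pid of -1 marks an exited worker.
int pick_next_worker(const std::vector<pid_t>& pids, int last)
{
    const int n = static_cast<int>(pids.size());
    for (int step = 1; step <= n; ++step) {
        int candidate = (last + step) % n;
        if (pids[candidate] != -1)
            return candidate;
    }
    return -1;  // no live worker left (or the pool is empty)
}
```

The caller would remember the returned index and pass it back as `last` on the next request, so successive requests rotate through the live workers.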

Implementing the process pool

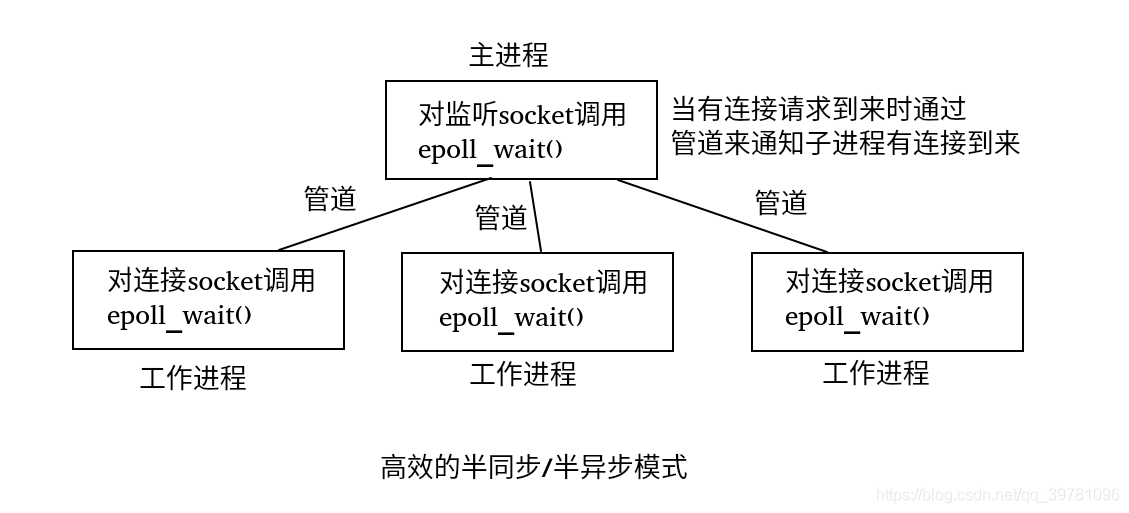

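The concept of a process pool is simple; what matters is how to implement it in code. Below is a process pool built on the efficient half-sync/half-async concurrency pattern.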

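In this pattern, the main process is only responsible for listening for incoming connection requests. When one arrives, it picks a child process and notifies it through the pipe (here a socket pair) it shares with that child; the child then accepts the connection and serves it. Each process therefore runs its own event loop, and each loop independently watches a different set of events. Because every process in this efficient variant actually works asynchronously, it is not a half-sync/half-async pattern in the strict sense.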
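The notification step described above can be tried in isolation. The sketch below is not the pool itself: `notify_child_once` is a hypothetical name, and the child simply echoes the byte back so the parent can confirm the wake-up, whereas in the pool the child would call accept on the listening socket at that point.

```cpp
#include <cassert>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

// Hypothetical demo: the parent sends one notification byte to a forked
// child over a socket pair, and the child echoes it back to show that it
// was woken up. Returns true if the round trip succeeded.
bool notify_child_once()
{
    int fds[2];
    if (socketpair(AF_UNIX, SOCK_STREAM, 0, fds) == -1)
        return false;

    pid_t pid = fork();
    if (pid == -1) {
        close(fds[0]);
        close(fds[1]);
        return false;
    }

    if (pid == 0) {
        // Child: keep fds[0] and block until the parent notifies us.
        close(fds[1]);
        char note = 0;
        bool ok = recv(fds[0], &note, 1, 0) == 1 &&
                  send(fds[0], &note, 1, 0) == 1;
        close(fds[0]);
        _exit(ok ? 0 : 1);
    }

    // Parent: keep fds[1], send the notification, then wait for the echo.
    close(fds[0]);
    char note = 'c';
    char echo = 0;
    bool ok = send(fds[1], &note, 1, 0) == 1 &&
              recv(fds[1], &echo, 1, 0) == 1 && echo == note;
    close(fds[1]);

    int status = 0;
    waitpid(pid, &status, 0);
    return ok && WIFEXITED(status) && WEXITSTATUS(status) == 0;
}
```

A socket pair is used rather than pipe() because it is bidirectional, so the same descriptor also lets the child report back to the parent.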

The process pool code:

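#ifndef _PROCESS_POOL_H
#define _PROCESS_POOL_H

#include <arpa/inet.h>
#include <assert.h>
#include <errno.h>
#include <fcntl.h>
#include <signal.h>   // sigaction, kill, SIGINT, SIGCHLD, SIGPIPE
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <sys/epoll.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Describes one child process: pid is the child's pid, and pipefd is the
   socket pair used for communication between the parent and that child. */
class process {
public:
    pid_t pid;      // child pid; -1 means the child has exited
    int pipefd[2];  // socket pair between the child and the parent

public:
    process() : pid(-1) {}
};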

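/* Process pool class. It is a template so the code can be reused; the template
   parameter is the class that handles the per-connection logic. */
template<typename T>
class process_pool {
private:
    /* Maximum number of child processes the pool allows */
    static const int MAX_PROCESS_NUMBER = 16;
    /* Maximum number of clients one child process can handle */
    static const int MAX_CLIENT_NUMBER = 65535;
    /* Maximum number of events epoll returns per call */
    static const int MAX_EVENT_NUMBER = 10000;
    /* Number of child processes in the pool */
    int process_number;
    /* Index of this process within the pool (-1 for the parent) */
    int process_id;
    /* Listening socket */
    int listenfd;
    /* This process's epoll descriptor */
    int epollfd;
    /* Flag that tells the event loop to stop */
    int stop;
    /* Descriptors of all child processes in the pool */
    process* sub_process;
    /* Static pool instance */
    static process_pool<T>* instance;

private:
    /* Lazy singleton: the constructor is private, and the static getinstance
       function below is the only way to obtain the process_pool instance, so
       there is exactly one instance in the program. listenfd is the listening
       socket; it must be created before the pool, otherwise the children
       cannot use it directly. process_number is the number of children. */
    process_pool(int listenfd, int process_number = 0)
        : process_number(process_number), process_id(-1), listenfd(listenfd),
          epollfd(-1), stop(false), sub_process(nullptr)
    {
        assert(process_number > 0 && process_number <= MAX_PROCESS_NUMBER);
        sub_process = new process[process_number];
        /* Create process_number children and a socket pair to each of them */
        for (int i = 0; i < process_number; i++)
        {
            int ret = socketpair(AF_UNIX, SOCK_STREAM, 0, sub_process[i].pipefd);
            assert(ret == 0);
            sub_process[i].pid = fork();
            assert(sub_process[i].pid >= 0);
            if (sub_process[i].pid > 0)
            {
                /* Parent keeps pipefd[1] */
                close(sub_process[i].pipefd[0]);
            }
            else
            {
                /* Child keeps pipefd[0], records its index and stops forking */
                close(sub_process[i].pipefd[1]);
                process_id = i;
                break;
            }
        }
    }

    void set_sig_pipe(bool isparent);
    void run_child();
    void run_parent();

public:
    static process_pool<T>* getinstance(int listenfd, int process_number = 8)
    {
        if (instance == nullptr)
        {
            instance = new process_pool<T>(listenfd, process_number);
        }
        return instance;
    }

    ~process_pool()
    {
        delete[] sub_process;
    }

    /* Start the process pool */
    void run();
};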

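template<typename T>
process_pool<T>* process_pool<T>::instance = nullptr;

/* Socket pair that carries signals, so that signals are handled through the
   same event source as I/O */
static int sig_pipefd[2];

static void set_nonblock(int fd)
{
    int flag = fcntl(fd, F_GETFL);
    flag |= O_NONBLOCK;
    fcntl(fd, F_SETFL, flag);
}

/* Register fd with epoll for edge-triggered reads and make it non-blocking */
static void addfd(int epollfd, int fd)
{
    epoll_event event;
    event.data.fd = fd;
    event.events = EPOLLIN | EPOLLET;
    epoll_ctl(epollfd, EPOLL_CTL_ADD, fd, &event);
    set_nonblock(fd);
}

static void sig_handler(int sig)
{
    /* Preserve errno so the interrupted code does not see it change */
    int save_errno = errno;
    /* Send the signal number as a single byte, independent of byte order */
    char msg = (char)sig;
    send(sig_pipefd[1], &msg, 1, 0);
    errno = save_errno;
}

static void addsig(int sig, void (*handler)(int))
{
    struct sigaction sa;
    memset(&sa, '\0', sizeof(sa));
    sa.sa_handler = handler;
    sa.sa_flags = SA_RESTART;
    sigfillset(&sa.sa_mask);
    sigaction(sig, &sa, NULL);
}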

/* Unify the event sources: signals are delivered through epoll as well */
template<typename T>
void process_pool<T>::set_sig_pipe(bool isparent)
{
    epollfd = epoll_create(5);
    assert(epollfd != -1);
    int ret = socketpair(AF_UNIX, SOCK_STREAM, 0, sig_pipefd);
    assert(ret != -1);
    addfd(epollfd, sig_pipefd[0]);
    addsig(SIGINT, sig_handler);
    addsig(SIGPIPE, SIG_IGN);
    /* The parent also handles SIGCHLD so that it can reap its children */
    if (isparent)
        addsig(SIGCHLD, sig_handler);
}

/* The parent's index is -1 and each child's index is >= 0; the index decides
   whether to run the parent code or the child code */
template<typename T>
void process_pool<T>::run()
{
    if (process_id == -1)
        run_parent();
    else
        run_child();
}

template<typename T>
void process_pool<T>::run_child()
{
    set_sig_pipe(false);
    /* The child watches its end of the socket pair shared with the parent */
    addfd(epollfd, sub_process[process_id].pipefd[0]);
    epoll_event events[MAX_EVENT_NUMBER];
    T *users = new T[MAX_CLIENT_NUMBER];
    stop = false;
    while (!stop)
    {
        int n = epoll_wait(epollfd, events, MAX_EVENT_NUMBER, -1);
        if (n < 0)
        {
            if (errno == EINTR)
                continue;
            perror("epoll_wait error:");
            break;
        }
        for (int i = 0; i < n; i++)
        {
            int socketfd = events[i].data.fd;
            if (socketfd == sub_process[process_id].pipefd[0])
            {
                /* The parent sends one byte per connection request, so read
                   one byte at a time and accept once for each byte */
                char num = 0;
                while (1)
                {
                    int ret = recv(socketfd, &num, sizeof(num), 0);
                    if (ret < 0)
                    {
                        if (errno != EAGAIN)
                            perror("sub_process pipefd recv error:");
                        break;
                    }
                    // The parent closed its end of the socket pair: stop reading
                    if (ret == 0)
                        break;
                    struct sockaddr_in conn_addr;
                    socklen_t conn_addr_len = sizeof(conn_addr);
                    int connfd = accept(listenfd, (struct sockaddr*)&conn_addr, &conn_addr_len);
                    if (connfd < 0)
                    {
                        /* Keep draining notifications; the socket is edge-triggered */
                        perror("accept error:");
                        continue;
                    }
                    /* users is indexed by descriptor, so reject out-of-range values */
                    if (connfd >= MAX_CLIENT_NUMBER)
                    {
                        close(connfd);
                        continue;
                    }
                    char ip[100];
                    printf("process_id is %d accept client address = %s\n", process_id, inet_ntop(AF_INET, &conn_addr.sin_addr, ip, sizeof(ip)));
                    addfd(epollfd, connfd);
                    /* The template class must implement init to set up a client connection */
                    users[connfd].init(connfd, conn_addr, epollfd);
                }
            }
            /* Only SIGINT makes the signal socket readable in a child, so just stop */
            else if (socketfd == sig_pipefd[0])
            {
                char buf[BUFSIZ];
                while (true)
                {
                    int ret = recv(socketfd, buf, sizeof(buf), 0);
                    if (ret <= 0)
                    {
                        if (ret < 0 && errno != EAGAIN)
                            perror("sub_process sig_pipefd recv error:");
                        break;
                    }
                }
                stop = true;
            }
            /* Any other readable event must be client data: let the logic
               object's process method handle it */
            else if (events[i].events & EPOLLIN)
            {
                printf("users process=========\n");
                users[socketfd].process();
            }
        }
    }
    printf("child pid = %d exit\n", getpid());
    /* Send our index to the parent so it can release the socket pair promptly */
    char id = (char)process_id;
    send(sub_process[process_id].pipefd[0], &id, sizeof(id), 0);
    delete[] users;
    close(epollfd);
    close(sig_pipefd[0]);
    close(sig_pipefd[1]);
    close(sub_process[process_id].pipefd[0]);
}

template<typename T>
void process_pool<T>::run_parent()
{
    set_sig_pipe(true);
    /* The parent watches listenfd */
    addfd(epollfd, listenfd);
    /* Watch every socket pair shared with a child */
    for (int i = 0; i < process_number; i++)
    {
        addfd(epollfd, sub_process[i].pipefd[1]);
    }
    epoll_event events[MAX_EVENT_NUMBER];
    int cnt = 0;
    int sub_process_number = process_number;
    stop = false;
    while (!stop)
    {
        int n = epoll_wait(epollfd, events, MAX_EVENT_NUMBER, -1);
        if (n < 0)
        {
            if (errno != EINTR)
            {
                perror("parent process epoll_wait error:");
                break;
            }
            continue;
        }
        for (int i = 0; i < n; i++)
        {
            int socketfd = events[i].data.fd;
            /* A connection request arrived: pick a child round-robin to handle
               it. If no child is left, stop the service. */
            if (socketfd == listenfd)
            {
                char num = 0;
                int j = cnt;
                do {
                    j = (j + 1) % process_number;
                } while (sub_process[j].pid == -1 && j != cnt);
                if (sub_process[j].pid == -1)
                {
                    stop = true;
                    break;
                }
                cnt = j;
                send(sub_process[j].pipefd[1], &num, sizeof(num), 0);
                printf("send request to child %d\n", j);
            }
            /* Handle signals received by the parent */
            else if (socketfd == sig_pipefd[0])
            {
                char signals[BUFSIZ];
                while (1)
                {
                    memset(signals, '\0', sizeof(signals));
                    int ret = recv(socketfd, signals, sizeof(signals), 0);
                    if (ret <= 0)
                    {
                        if (ret < 0 && errno != EAGAIN)
                            perror("parent process error:");
                        break;
                    }
                    /* Each received byte is one signal number */
                    for (int k = 0; k < ret; k++)
                    {
                        switch (signals[k])
                        {
                        case SIGCHLD:
                        {
                            printf("parents get SIGCHLD\n");
                            int stat;
                            pid_t pid;
                            while ((pid = waitpid(-1, &stat, WNOHANG)) > 0)
                            {
                                sub_process_number--;
                                printf("waitpid is %d\n", pid);
                                /* Once every child has exited, the parent exits too */
                                if (!sub_process_number)
                                {
                                    stop = true;
                                }
                            }
                            break;
                        }
                        case SIGINT:
                        {
                            printf("parents get SIGINT\n");
                            sleep(1);
                            printf("kill all the child now\n");
                            // On a termination signal, the parent stops every child
                            for (int c = 0; c < process_number; c++)
                            {
                                pid_t pid = sub_process[c].pid;
                                if (pid != -1)
                                {
                                    printf("send kill to process pid = %d\n", pid);
                                    if (kill(pid, SIGINT) == -1)
                                    {
                                        perror("kill error:");
                                    }
                                }
                            }
                            break;
                        }
                        }
                    }
                }
            }
            /* Any other event must be a child reporting its index before exiting */
            else if (events[i].events & EPOLLIN)
            {
                char id;
                int ret = recv(socketfd, &id, sizeof(id), 0);
                if (ret <= 0)
                {
                    if (ret < 0)
                        perror("parent_process_pipefd recv error:");
                    /* No index was received, so there is nothing to look up */
                    continue;
                }
                printf("sub_process send id = %d\n", (int)id);
                if (id < 0 || id >= process_number)
                    continue;
                /* Mark the child as exited by setting its pid to -1 */
                sub_process[(int)id].pid = -1;
                /* Remove the socket from epoll */
                ret = epoll_ctl(epollfd, EPOLL_CTL_DEL, socketfd, NULL);
                if (ret == -1)
                    perror("epoll_ctl error");
                /* Release the parent's end of the socket pair */
                close(sub_process[(int)id].pipefd[1]);
            }
        }
    }
    close(epollfd);
    close(sig_pipefd[0]);
    close(sig_pipefd[1]);
}

#endif
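The "unified event source" idea used by sig_handler and set_sig_pipe in the header can be exercised on its own. The following is a minimal sketch under stated assumptions: `demo_sig_fds`, `demo_sig_handler` and `deliver_signal_through_socket` are hypothetical names, and the read end is read directly here, whereas the pool registers it with epoll.

```cpp
#include <cassert>
#include <cerrno>
#include <csignal>
#include <sys/socket.h>
#include <unistd.h>

static int demo_sig_fds[2] = {-1, -1};  // hypothetical name

// Async-signal-safe handler: forward the signal number as one byte.
static void demo_sig_handler(int sig)
{
    int saved_errno = errno;
    char msg = static_cast<char>(sig);
    send(demo_sig_fds[1], &msg, 1, 0);
    errno = saved_errno;
}

// Installs the handler for `sig`, raises the signal, and returns the signal
// number read back from the socket pair, or -1 on failure.
int deliver_signal_through_socket(int sig)
{
    if (socketpair(AF_UNIX, SOCK_STREAM, 0, demo_sig_fds) == -1)
        return -1;

    struct sigaction sa {};
    sa.sa_handler = demo_sig_handler;
    sa.sa_flags = SA_RESTART;
    sigfillset(&sa.sa_mask);
    struct sigaction old {};
    if (sigaction(sig, &sa, &old) == -1) {
        close(demo_sig_fds[0]);
        close(demo_sig_fds[1]);
        return -1;
    }

    raise(sig);  // the handler has run by the time raise() returns

    // The event loop side: the signal now looks like ordinary readable data.
    char msg = 0;
    int result = recv(demo_sig_fds[0], &msg, 1, 0) == 1 ? msg : -1;

    sigaction(sig, &old, nullptr);  // restore the previous disposition
    close(demo_sig_fds[0]);
    close(demo_sig_fds[1]);
    return result;
}
```

The handler does nothing except write a byte, which keeps it async-signal-safe; the real work happens later in the ordinary event loop.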

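Server code that uses the process pool. A client sends the server the path of a program to run, and the server runs it and returns its output to the client.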

#include "process_pool.h"
#include <arpa/inet.h>

/* Handles a client's CGI request; it can be used as the template argument of process_pool */
class cgi_conn
{
private:
    int sockfd;
    struct sockaddr_in address;
    static int epollfd;
    char buf[BUFSIZ];
    /* Index one past the last byte of client data already read into buf */
    int read_indx;

    void removefd()
    {
        epoll_ctl(epollfd, EPOLL_CTL_DEL, sockfd, NULL);
        close(sockfd);
    }

public:
    /* Initialize a client connection and clear the buffer */
    void init(int sockfd, const struct sockaddr_in &address, int epollfd)
    {
        this->sockfd = sockfd;
        this->address = address;
        cgi_conn::epollfd = epollfd;
        memset(buf, '\0', sizeof(buf));
        read_indx = 0;
    }

    void process()
    {
        int indx = 0;
        while (true)
        {
            indx = read_indx;
            /* Leave one byte free for the terminating '\0'. If the buffer is
               full, recv returns 0 and the connection is closed below. */
            int ret = recv(sockfd, buf + indx, sizeof(buf) - 1 - indx, 0);
            if (ret < 0)
            {
                if (errno != EAGAIN)
                {
                    perror("client recv error:");
                    /* Close the connection */
                    removefd();
                }
                break;
            }
            /* The peer closed the connection, so close our side too */
            else if (ret == 0)
            {
                removefd();
                break;
            }
            read_indx += ret;
            /* Look for '\n' in the newly read data */
            for (; indx < read_indx; indx++)
            {
                if (buf[indx] == '\n')
                {
                    break;
                }
            }
            /* No '\n' yet: more data has to be read */
            if (indx == read_indx)
                continue;
            buf[indx] = '\0';
            char *file_name = buf;
            /* Check that the CGI program the client asked for exists */
            if (access(file_name, F_OK) == -1)
            {
                perror("access error");
                removefd();
                break;
            }
            /* Create a child process to run the CGI program */
            pid_t pid = fork();
            if (pid == -1)
            {
                perror("fork error:");
                removefd();
                break;
            }
            else if (pid > 0)
            {
                /* The parent only needs to close the connection */
                removefd();
                break;
            }
            else
            {
                /* The child redirects standard output to sockfd and runs the CGI program */
                dup2(sockfd, STDOUT_FILENO);
                execl(file_name, file_name, (char*)NULL);
                /* Only reached if execl failed */
                perror("execl error");
                exit(1);
            }
        }
    }
};

int cgi_conn::epollfd = -1;

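int main(int argc, const char* argv[])
{
    if (argc < 3)
    {
        printf("usage: %s ip_address port_number\n", argv[0]);
        exit(1);
    }
    const char *ip = argv[1];
    int port = atoi(argv[2]);

    struct sockaddr_in address;
    memset(&address, '\0', sizeof(address));
    address.sin_family = AF_INET;
    address.sin_port = htons(port);
    if (inet_pton(AF_INET, ip, &address.sin_addr) != 1)
    {
        printf("invalid ip address: %s\n", ip);
        exit(1);
    }

    int listenfd = socket(AF_INET, SOCK_STREAM, 0);
    if (listenfd == -1)
    {
        perror("socket error");
        exit(1);
    }
    if (bind(listenfd, (struct sockaddr*)&address, sizeof(address)) == -1)
    {
        perror("bind error");
        exit(1);
    }
    if (listen(listenfd, 3) == -1)
    {
        perror("listen error");
        exit(1);
    }

    /* The listening socket must exist before the pool forks its children */
    process_pool<cgi_conn>* pool = process_pool<cgi_conn>::getinstance(listenfd);
    if (pool)
    {
        pool->run();
        delete pool;
    }
    close(listenfd);
    return 0;
}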
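The request framing in cgi_conn::process() (keep reading until a '\n' arrives, then treat everything before it as the program path) can be isolated and tested without sockets. The sketch below is an illustration, not the code above: `take_line` is a hypothetical helper, it swaps the fixed char buffer for std::string, and stripping a trailing '\r' is an added assumption for clients that send "\r\n".

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Hypothetical helper: appends `chunk` to `pending`. If `pending` now
// contains a '\n', moves the text before it into `line` (dropping a trailing
// '\r'), removes that line from `pending`, and returns true. Otherwise it
// returns false and keeps the bytes buffered for the next call.
bool take_line(std::string& pending, const std::string& chunk, std::string& line)
{
    pending += chunk;
    std::size_t pos = pending.find('\n');
    if (pos == std::string::npos)
        return false;  // request is still incomplete
    line = pending.substr(0, pos);
    if (!line.empty() && line.back() == '\r')
        line.pop_back();
    pending.erase(0, pos + 1);
    return true;
}
```

Each chunk returned by recv would be passed in as `chunk`; a true result means `line` holds a complete request and the CGI program can be started.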

Reference: 《Linux高性能服务器编程》 (Linux High-Performance Server Programming), by 游双 (You Shuang)
