If you have not deployed Kafka yet, see my other article:

https://blog.youkuaiyun.com/qq_39272466/article/details/131512504

For Kafka fundamentals, the tutorial videos on bilibili are a good place to start.

Producer code (both synchronous and asynchronous)

package main

import (

"fmt"

"time"

"github.com/Shopify/sarama"

)

// Kafka client built on the third-party sarama library

var address = []string{"myhao.com:29092", "myhao.com:29093", "myhao.com:29094"}

func main() {

// AsyncProducer()

SaramaProducer()

}

// Synchronous producer (despite the function name, it uses sarama.NewSyncProducer)

func AsyncProducer() {

config := sarama.NewConfig()

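config.Producer.RequiredAcks = sarama.WaitForAll // wait until the leader and the in-sync followers have all acknowledged

config.Producer.Partitioner = sarama.NewRandomPartitioner // pick a random partition for each message

config.Producer.Return.Successes = true // required for a SyncProducer; delivered messages are reported back on the success channel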

// config.Version = sarama.V3_3_1_0

// config.Consumer.Group.InstanceId = "group_mytest"

// Build a message

msg := &sarama.ProducerMessage{}

msg.Topic = "bigdata"

msg.Value = sarama.StringEncoder("new this is a test log6")

// Connect to Kafka. For a Docker install, the hosts used here must match the ones configured in KAFKA_ADVERTISED_LISTENERS

client, err := sarama.NewSyncProducer(address, config)

if err != nil {

fmt.Println("failed to create producer, err:", err)

return

}

defer client.Close()

// Send the message; pid is the partition it was written to

pid, offset, err := client.SendMessage(msg)

if err != nil {

fmt.Println("send msg failed, err:", err)

return

}

fmt.Printf("pid:%v offset:%v\n", pid, offset)

}

// Asynchronous producer

func SaramaProducer() {

config := sarama.NewConfig()

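// Wait for a response only after all in-sync replicas have stored the message

config.Producer.RequiredAcks = sarama.WaitForAll

// Send each message to a random partition

config.Producer.Partitioner = sarama.NewRandomPartitioner

// Deliver results on the Successes() and Errors() channels. Broker acknowledgements are only meaningful when RequiredAcks above is not NoResponse.

config.Producer.Return.Successes = true

config.Producer.Return.Errors = true

// Set the Kafka version in use. Below V0_10_0_0 the message timestamp has no effect. Configure it on both the consumer and the producer.

// Note: with a wrong version setting, Kafka returns odd errors and messages cannot be sent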

config.Version = sarama.V3_3_1_0

fmt.Println("start make producer")

// Create an asynchronous producer from the config

producer, e := sarama.NewAsyncProducer(address, config)

if e != nil {

fmt.Println(e)

return

}

defer producer.AsyncClose()

// Loop and handle whichever channel (successes or errors) delivers a value

fmt.Println("start goroutine")

go func(p sarama.AsyncProducer) {

for {

select {

case suc := <-p.Successes():

fmt.Println("offset: ", suc.Offset, "timestamp: ", suc.Timestamp.String(), "partitions: ", suc.Partition)

case fail := <-p.Errors():

fmt.Println("err: ", fail.Err)

}

}

}(producer)

var value string

for i := 0; ; i++ {

time11 := time.Now()

value = "this is a message 0606 " + time11.Format("15:04:05")

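// The message to send and its topic.

// Note: msg must be a newly built value on every iteration. Otherwise every delivered message ends up with the same content, because messages are sent in batches.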

msg := &sarama.ProducerMessage{

Topic: "bigdata",

}

// Convert the string into a byte slice

msg.Value = sarama.ByteEncoder(value)

//fmt.Println(value)

// Send through the input channel

producer.Input() <- msg

time.Sleep(2 * time.Second)

}

}

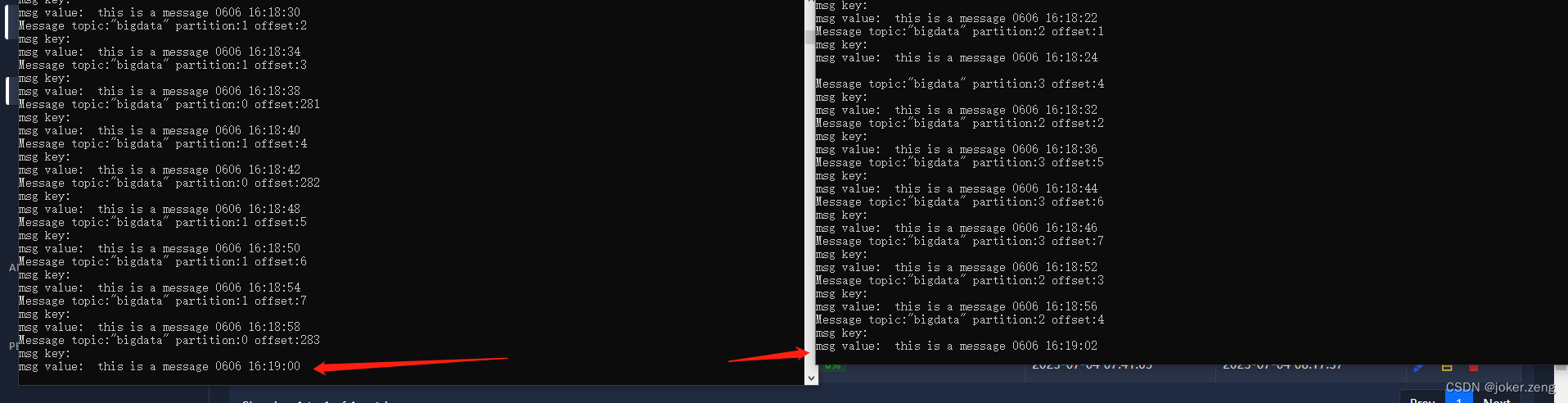

Screenshot of the run

Consumer code

Consuming messages from all partitions of a topic in real time

package main

import (

"fmt"

"sync"

"github.com/Shopify/sarama"

)

var address = []string{"myhao.com:29092", "myhao.com:29093", "myhao.com:29094"}

func main() {

var wg sync.WaitGroup

config := sarama.NewConfig()

config.ClientID = "joker"

config.Version = sarama.V3_3_1_0

fmt.Println(config.Version)

consumer, err := sarama.NewConsumer(address, config)

// sarama.NewConsumerGroup()

if err != nil {

fmt.Println("Failed to start consumer:", err)

return

}

partitionList, err := consumer.Partitions("bigdata") // look up all partition IDs of the topic

if err != nil {

fmt.Println("Failed to get the list of partition: ", err)

return

}

fmt.Println(partitionList)

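for _, partition := range partitionList { // iterate over the partition IDs (not the slice indexes)

pc, err := consumer.ConsumePartition("bigdata", int32(partition), sarama.OffsetNewest) // create one partition consumer per partition

if err != nil {

fmt.Printf("Failed to start consumer for partition %d: %s\n", partition, err)

continue // pc is nil here, so skip this partition instead of starting a goroutine on it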

}

wg.Add(1)

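go func(pc sarama.PartitionConsumer) { // one goroutine per partition reads its messages

defer wg.Done() // deferred so wg.Wait() is released however the goroutine exits

defer pc.AsyncClose()

for msg := range pc.Messages() { // blocks until a message arrives, then waits for the next one

fmt.Printf("Partition:%d, Offset:%d, key:%s, value:%s\n", msg.Partition, msg.Offset, string(msg.Key), string(msg.Value))

}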

}(pc)

}

wg.Wait()

consumer.Close()

}

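Note: with partition consumers, several running copies of this program each receive every message, so the same message is consumed more than once.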

Consuming a topic in real time with a consumer group

package main

import (

"context"

"fmt"

"github.com/Shopify/sarama"

)

var address = []string{"myhao.com:29092", "myhao.com:29093", "myhao.com:29094"}

// Implements the ConsumerGroupHandler interface from github.com/Shopify/sarama/consumer_group.go

type AAAConsumerGroupHandler struct {

}

func (AAAConsumerGroupHandler) Setup(_ sarama.ConsumerGroupSession) error {

return nil

}

func (AAAConsumerGroupHandler) Cleanup(_ sarama.ConsumerGroupSession) error {

return nil

}

// ConsumeClaim is where messages are consumed

func (h AAAConsumerGroupHandler) ConsumeClaim(sess sarama.ConsumerGroupSession, claim sarama.ConsumerGroupClaim) error {

// Read messages until the claim's channel is closed

for msg := range claim.Messages() {

fmt.Printf("Message topic:%q partition:%d offset:%d\n", msg.Topic, msg.Partition, msg.Offset)

fmt.Println("msg key: ", string(msg.Key))

fmt.Println("msg value: ", string(msg.Value))

// Mark the message as consumed

sess.MarkMessage(msg, "")

}

return nil

}

// Receive data

func main() {

// Initialize the Kafka config first

config := sarama.NewConfig()

// Version must be V0_10_2_0 or later

config.Version = sarama.V3_3_1_0

config.Consumer.Return.Errors = true

fmt.Println("start connect kafka")

// Connect to the Kafka brokers

group, err := sarama.NewConsumerGroup(address, "group_mytest2", config)

if err != nil {

fmt.Println("connect kafka failed; err", err)

return

}

// Watch for errors

go func() {

for err := range group.Errors() {

fmt.Println("group errors : ", err)

}

}()

ctx := context.Background()

fmt.Println("start get msg")

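// The for loop handles consumer rebalances: Consume returns when one happens and must be called again

for {

// Topics to listen to

topics := []string{"bigdata"}

handler := AAAConsumerGroupHandler{}

// Start consuming in consumer-group mode; the consuming logic lives in ConsumeClaim above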

err := group.Consume(ctx, topics, handler)

if err != nil {

fmt.Println("consume failed; err : ", err)

return

}

}

}

As you can see, when multiple consumers are in the same group, a message is not consumed more than once.
