Required software:

JDK + Solr + ZooKeeper + Tomcat

Environment: VMware 14 + CentOS 6.9

Steps:

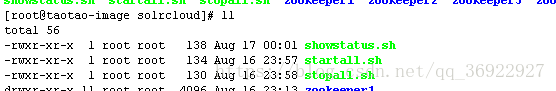

1. Upload the required software to CentOS

(see the screenshot above)

2. Extract the archives

tar -zxvf ***.tar.gz

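3. Copy the extracted ZooKeeper directory to /usr/local/solrcloud

4. Make three copies, named zookeeper1, zookeeper2 and zookeeper3 (I actually made four)

5. Go into zookeeper1 and create a data directory.

Inside data, create a file named myid whose content is 1 (without quotes):

vi myid

Type 1

then save with :wq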
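The same data directories and myid files can be created in a loop instead of by hand. This is only a minimal sketch, not the exact procedure used in this post: `BASE` is an assumed variable that defaults to a scratch directory so the loop can be tried safely; set it to /usr/local/solrcloud for the layout described here.

```shell
#!/bin/sh
# Sketch: create data/myid for each ZooKeeper instance.
# BASE is an assumption; it defaults to a throwaway directory.
# Use BASE=/usr/local/solrcloud for the layout described in this post.
BASE="${BASE:-$(mktemp -d)}"

for i in 1 2 3; do
    mkdir -p "$BASE/zookeeper$i/data"
    # myid holds only the numeric id, matching server.<id> in zoo.cfg
    echo "$i" > "$BASE/zookeeper$i/data/myid"
done

# Print each file together with its content for a quick visual check
grep . "$BASE"/zookeeper*/data/myid
```

Writing the file with echo also avoids stray blank lines or spaces that can slip in when typing the id in an editor.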

6. Go into the conf directory and rename zoo_sample.cfg to zoo.cfg

mv zoo_sample.cfg zoo.cfg

(mv A B renames file A to B)

After the rename:

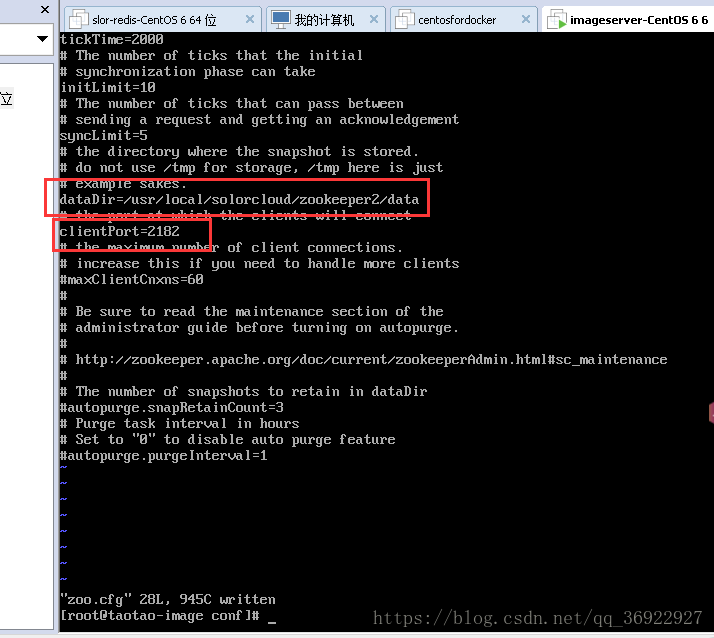

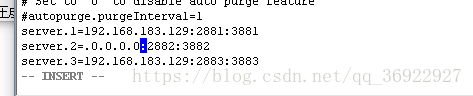

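7. Edit the zoo.cfg file.

Note that the 1 in server.1 is the id of zookeeper1 and must match the number in its myid file.

clientPort is the port that listens for client connections.

2881 is the communication port that followers use to talk to the leader; 3881 is the election port, which the followers use to vote for a new leader when the current leader goes down.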

dataDir=/usr/local/solrcloud/zookeeper1/data

clientPort=2181

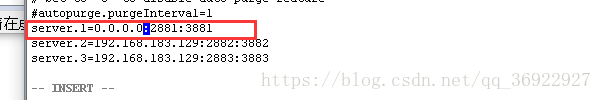

server.1=192.168.183.129:2881:3881

server.2=192.168.183.129:2882:3882

server.3=192.168.183.129:2883:3883

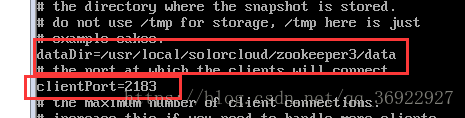

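8. Repeat steps 5, 6 and 7 for zookeeper2 and zookeeper3

myid is 2 and 3 respectively

clientPort is 2182 and 2183 respectively

dataDir must point to each instance's own data directory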

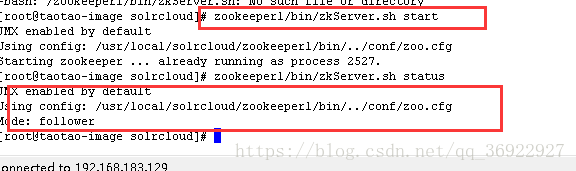
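Since the three zoo.cfg files differ only in dataDir and clientPort, they can be generated in one pass. The sketch below is an illustration under assumptions: `BASE` and `HOST` are variable names introduced here (with `BASE` defaulting to a scratch directory), and tickTime, initLimit and syncLimit are the values shipped in the stock zoo_sample.cfg.

```shell
#!/bin/sh
# Sketch: write a zoo.cfg for each of the three instances on one host.
# BASE and HOST are assumptions; BASE defaults to a throwaway directory.
# Use BASE=/usr/local/solrcloud for the layout described in this post.
BASE="${BASE:-$(mktemp -d)}"
HOST="${HOST:-192.168.183.129}"

for i in 1 2 3; do
    mkdir -p "$BASE/zookeeper$i/conf"
    # Only dataDir and clientPort differ between instances;
    # the server.N list is identical everywhere.
    cat > "$BASE/zookeeper$i/conf/zoo.cfg" <<EOF
tickTime=2000
initLimit=10
syncLimit=5
dataDir=$BASE/zookeeper$i/data
clientPort=218$i
server.1=$HOST:2881:3881
server.2=$HOST:2882:3882
server.3=$HOST:2883:3883
EOF
done
```

Because all instances share one IP address here, every port (client, communication and election) has to be unique per instance.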

9. Start the three ZooKeeper instances

/usr/local/solrcloud/zookeeper1/bin/zkServer.sh start

/usr/local/solrcloud/zookeeper2/bin/zkServer.sh start

/usr/local/solrcloud/zookeeper3/bin/zkServer.sh start

Check the cluster status:

/usr/local/solrcloud/zookeeper1/bin/zkServer.sh status

/usr/local/solrcloud/zookeeper2/bin/zkServer.sh status

/usr/local/solrcloud/zookeeper3/bin/zkServer.sh status
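When checking several instances, it can help to reduce each status output to a single word. The helper below is a hypothetical sketch (`zk_mode` is not part of ZooKeeper); it assumes the status output contains a line starting with "Mode:" when the instance is serving, and treats anything else as down.

```shell
#!/bin/sh
# Sketch: reduce `zkServer.sh status` output to one word per instance.
# zk_mode is a hypothetical helper, not part of ZooKeeper.
zk_mode() {
    # Reads status output on stdin; prints the value after "Mode: ",
    # or "down" when no Mode line is present.
    mode=$(sed -n 's/^Mode: *//p')
    echo "${mode:-down}"
}

# Usage on a real install (paths as in this post):
# for i in 1 2 3; do
#     /usr/local/solrcloud/zookeeper$i/bin/zkServer.sh status 2>&1 | zk_mode
# done
```

In a healthy three-node cluster one instance should report leader and the other two follower; standalone means the instance did not pick up any server.N entries.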

问题:

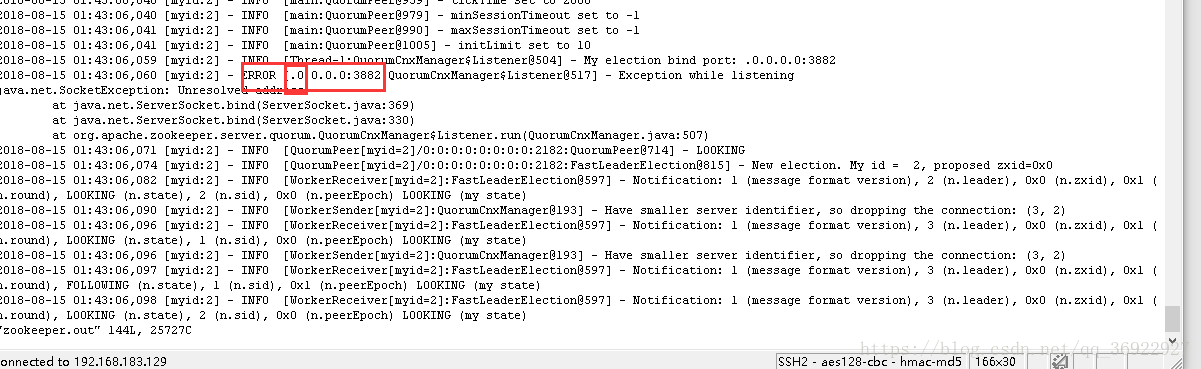

查看zookeeper2,3的status都是显示standalone

zookeeper1显示 Error,contacting service. It is probably not running

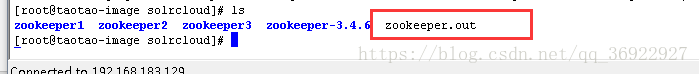

查看zookeeper.out日志文件:

- Cannot open channel to 2 at election address /192.168.183.129:3882

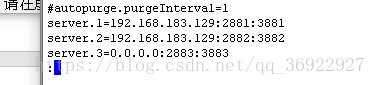

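According to what I found online, on the current node (say the node with id=1) the node's own entry should be written as server.1=0.0.0.0:port1:port2

That appeared to work! (It turned out not to be the real fix, as explained further down.)

The other two nodes need the same change, each for its own entry.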

zookeeper2:

If checking the status fails, myid may be misconfigured; zookeeper.out will show it

Fix the corresponding myid file (the number after server. is the id value)
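A mismatch between myid and the server entries can be caught before starting anything. The following is a sketch only: `check_myid` is a hypothetical helper, and it assumes the layout used in this post, with data/myid and conf/zoo.cfg under each instance directory.

```shell
#!/bin/sh
# Sketch: check that data/myid matches a server.<id> line in zoo.cfg.
# check_myid is a hypothetical helper; its argument is an instance directory.
check_myid() {
    dir="$1"
    id=$(cat "$dir/data/myid" 2>/dev/null)
    if [ -z "$id" ]; then
        echo "$dir: myid missing or empty"
        return 1
    fi
    if grep -q "^server\.$id=" "$dir/conf/zoo.cfg"; then
        echo "$dir: myid $id ok"
    else
        echo "$dir: no server.$id entry in zoo.cfg"
        return 1
    fi
}

# Example: check_myid /usr/local/solrcloud/zookeeper2
```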

A wrong IP address in the configuration file also breaks things, for example:

zookeeper3

But then another problem appeared: after starting zookeeper2 and zookeeper3, status still reports that the service is probably not running, yet zookeeper.out contains no errors

2018-08-15 01:49:38,795 [myid:] - INFO [main:QuorumPeerConfig@103] - Reading configuration from: /usr/local/solrcloud/zookeeper3/bin/../conf/zoo.cfg

2018-08-15 01:49:38,799 [myid:] - INFO [main:QuorumPeerConfig@340] - Defaulting to majority quorums

2018-08-15 01:49:38,802 [myid:3] - INFO [main:DatadirCleanupManager@78] - autopurge.snapRetainCount set to 3

2018-08-15 01:49:38,802 [myid:3] - INFO [main:DatadirCleanupManager@79] - autopurge.purgeInterval set to 0

2018-08-15 01:49:38,803 [myid:3] - INFO [main:DatadirCleanupManager@101] - Purge task is not scheduled.

2018-08-15 01:49:38,820 [myid:3] - INFO [main:QuorumPeerMain@127] - Starting quorum peer

2018-08-15 01:49:38,832 [myid:3] - INFO [main:NIOServerCnxnFactory@94] - binding to port 0.0.0.0/0.0.0.0:2183

2018-08-15 01:49:38,857 [myid:3] - INFO [main:QuorumPeer@959] - tickTime set to 2000

2018-08-15 01:49:38,857 [myid:3] - INFO [main:QuorumPeer@979] - minSessionTimeout set to -1

2018-08-15 01:49:38,857 [myid:3] - INFO [main:QuorumPeer@990] - maxSessionTimeout set to -1

2018-08-15 01:49:38,858 [myid:3] - INFO [main:QuorumPeer@1005] - initLimit set to 10

2018-08-15 01:49:38,879 [myid:3] - INFO [Thread-1:QuorumCnxManager$Listener@504] - My election bind port: /0.0.0.0:3883

2018-08-15 01:49:38,890 [myid:3] - INFO [QuorumPeer[myid=3]/0:0:0:0:0:0:0:0:2183:QuorumPeer@714] - LOOKING

2018-08-15 01:49:38,892 [myid:3] - INFO [QuorumPeer[myid=3]/0:0:0:0:0:0:0:0:2183:FastLeaderElection@815] - New election. My id = 3, proposed zxid=0x0

2018-08-15 01:49:38,905 [myid:3] - INFO [WorkerReceiver[myid=3]:FastLeaderElection@597] - Notification: 1 (message format version), 2 (n.leader), 0x100000000 (n.zxid), 0x2 (n.round), LOOKING (n.state), 1 (n.sid), 0x1 (n.peerEpoch) LOOKING (my state)

2018-08-15 01:49:38,906 [myid:3] - INFO [WorkerReceiver[myid=3]:FastLeaderElection@597] - Notification: 1 (message format version), 3 (n.leader), 0x0 (n.zxid), 0x1 (n.round), LOOKING (n.state), 3 (n.sid), 0x1 (n.peerEpoch) LOOKING (my state)

2018-08-15 01:49:38,913 [myid:3] - INFO [WorkerReceiver[myid=3]:FastLeaderElection@597] - Notification: 1 (message format version), 2 (n.leader), 0x100000000 (n.zxid), 0x2 (n.round), LOOKING (n.state), 3 (n.sid), 0x1 (n.peerEpoch) LOOKING (my state)

2018-08-15 01:49:38,914 [myid:3] - INFO [WorkerReceiver[myid=3]:FastLeaderElection@597] - Notification: 1 (message format version), 2 (n.leader), 0x100000000 (n.zxid), 0x2 (n.round), FOLLOWING (n.state), 1 (n.sid), 0x2 (n.peerEpoch) LOOKING (my state)

2018-08-15 01:49:38,914 [myid:3] - INFO [WorkerReceiver[myid=3]:FastLeaderElection@597] - Notification: 1 (message format version), 2 (n.leader), 0x100000000 (n.zxid), 0x2 (n.round), FOLLOWING (n.state), 1 (n.sid), 0x2 (n.peerEpoch) LOOKING (my state)

2018-08-15 01:49:38,914 [myid:3] - INFO [WorkerReceiver[myid=3]:FastLeaderElection@597] - Notification: 1 (message format version), 2 (n.leader), 0x100000000 (n.zxid), 0x2 (n.round), LOOKING (n.state), 2 (n.sid), 0x1 (n.peerEpoch) LOOKING (my state)

2018-08-15 01:49:38,915 [myid:3] - INFO [WorkerReceiver[myid=3]:FastLeaderElection@597] - Notification: 1 (message format version), 2 (n.leader), 0x100000000 (n.zxid), 0x2 (n.round), LOOKING (n.state), 3 (n.sid), 0x1 (n.peerEpoch) LOOKING (my state)

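Solution:

The server.1=0.0.0.0:port1:port2 setting for the current node (say id=1) mentioned above is not required. After changing the entries to 0.0.0.0, everything reported errors: the status query failed, the log showed no error, and all instances were down.

What is needed is a startup script that brings up all instances in one go. A likely explanation is that an ensemble only answers status queries once a majority of its nodes are up, so checking right after starting a single instance reports it as not running.

My script looks like this:

startall.sh: start everything

In /usr/local/solrcloud/

run: vi startall.sh

Contents: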

zookeeper1/bin/zkServer.sh start

zookeeper2/bin/zkServer.sh start

zookeeper3/bin/zkServer.sh start

zookeeper4/bin/zkServer.sh start

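Save with :wq

chmod +x startall.sh

makes startall.sh executable

./startall.sh then starts everything in one go

The other two scripts are written the same way:

stopall.sh: stop everything

showstatus.sh: show the status of every instance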
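The three scripts differ only in the action passed to zkServer.sh, so they can share one helper. This is a sketch rather than the scripts actually used in this post: `zk_all`, `BASE` and `NODES` are names introduced here for illustration, and absolute paths are used so the helper works from any directory.

```shell
#!/bin/sh
# Sketch: one helper covering startall.sh, stopall.sh and showstatus.sh.
# BASE and NODES are assumptions; adjust them to your layout.
BASE="${BASE:-/usr/local/solrcloud}"
NODES="${NODES:-1 2 3}"

zk_all() {
    # $1 is the zkServer.sh action: start, stop or status
    for i in $NODES; do
        echo "== zookeeper$i: $1"
        "$BASE/zookeeper$i/bin/zkServer.sh" "$1"
    done
}

# Example: zk_all start; zk_all status; zk_all stop
```

Setting NODES="1 2 3 4" would include a fourth copy, provided it also has its own server.4 entry in every zoo.cfg.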

Commands

Start: zkServer.sh start

Check status: zkServer.sh status

Stop: zkServer.sh stop

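This post walks through configuring a ZooKeeper cluster on CentOS: uploading and extracting the software, creating the data directory, editing zoo.cfg, and starting and checking the ZooKeeper nodes. It also covers the problems encountered along the way, such as the standalone status and communication errors, and their fixes: correcting myid and the server entries, and writing scripts to manage the ZooKeeper instances in bulk.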
