Part 1: Fundamentals

1.Mat

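① A Mat consists of a matrix header (describing the matrix: dims, cols, rows) and a pointer to the stored pixel data (uchar* data).

② Common Mat functions and attributes: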

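src.empty();//true if the Mat holds no data

src.cols;//number of columns (image width)

src.rows;//number of rows (image height)

src.channels();//number of channels

src.depth();//element depth, e.g. CV_8U

src.type();//element type including channels, e.g. CV_8UC3

src.size();//returns a Size object, Size(width,height)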

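③ Creating a Mat:

a. Use the Mat constructor with rows, cols, and type

Mat t1=Mat(256,256,CV_8UC3);

t1=Scalar(0,0,255);//fill every pixel with red (BGR order)

b. Use the Mat constructor with a Size and a type

Mat t2=Mat(Size(512,512),CV_8UC3);

c. Use zeros() with a Size and a type

Mat t3=Mat::zeros(Size(512,512),CV_8UC3);

d. Assign one Mat to another (shallow copy: both headers share the same data)

Mat t4=src;

e. Use copyTo() (deep copy: the pixel data is duplicated)

src.copyTo(t4);

f. Select a region with Rect

Mat mask=Mat::zeros(Size(640,640),CV_8UC1);

mask(Rect(320,50,260,310))=255;//the Rect region becomes 255, the rest of mask stays 0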

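④ Accessing Mat pixel values

a.Vec3b pixel=src.at<Vec3b>(row,col);//3-channel image

pixel[0]//blue

pixel[1]//green

pixel[2]//red

b.int pv=src.at<uchar>(row,col);//single-channel image

c. Pointer to one row of the Mat

uchar* curr_row=src.ptr<uchar>(row);

int blue=*curr_row++;//read the value, then advance the pointer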

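⑤ Element-wise Mat operations:

a. Addition

Mat dst;

add(src1,src2,dst);

dst=src1+src2;

addWeighted(src1,0.5,src2,0.5,0.0,dst);

b. Subtraction

Mat dst;

subtract(src1,src2,dst);

dst=src1-src2;

c. Multiplication

Matrix product (floating-point matrices only):

Mat dst=src1*src2;

Dot product (returns a double, not a Mat):

double d=src1.dot(src2);

Element-wise product:

Mat dst=src1.mul(src2);

Mat dst;

multiply(src1,src2,dst);

d. Division

Mat dst;

divide(src1,src2,dst);

dst=src1/src2;

e. Weighted sum

int w = 256, h = 256;//any non-zero size; 0 x 0 would create empty matrices

Mat src1 = Mat::zeros(Size(w, h), CV_8UC3);

Mat src2 = Mat::ones(Size(w, h), CV_8UC3);//note: ones() sets only the first channel to 1

Mat dst;

addWeighted(src1, 0.3, src2, 0.6,0.0, dst);//dst = 0.3*src1 + 0.6*src2 + 0.0; the last number is the offset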

⑥ Bitwise operations

int main(int argc, char** argv) {

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png");

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

Mat m1;

Mat mask = Mat::zeros(src.size(), CV_8UC1);

Mat mask2 = Mat::zeros(src.size(), CV_8UC1);

int w = src.cols / 2;

int h = src.rows / 2;

for (int row = 50; row < h; row++) {

for (int col = 100; col < w + 100; col++) {

mask.at<uchar>(row, col) = 127;

mask2.at<uchar>(row, col) = 0;

}

}

imshow("input", src);

Mat m2;

bitwise_and(src, src, m2, mask);//m2 equals src (src AND src) where mask is non-zero, and 0 elsewhere

imshow("bitwise and", m2);

waitKey(0);

destroyAllWindows();

return 0;

}

⑦ Histogram, mean, standard deviation, minimum and maximum

int main(int argc, char** argv) {

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png", IMREAD_GRAYSCALE);

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

int w = src.cols;

int h = src.rows;

int ch = src.channels();

printf("w: %d, h: %d, channels : %d \n", w, h, ch);

double min_val;

double max_val;

Point minLoc;

Point maxLoc;

minMaxLoc(src, &min_val, &max_val, &minLoc, &maxLoc);//minimum, maximum, and the locations of each

printf("min:%.2f, max: %.2f\n", min_val, max_val);

//build the histogram by hand with 256 bins

vector<int> hist(256);

for (int i = 0; i < 256; i++) {

hist[i] = 0;

}

for (int row = 0; row < h; row++) {

for (int col = 0; col < w; col++) {

int pv = src.at<uchar>(row, col);

hist[pv]++;

}

}

//mean and standard deviation of the Mat

Scalar s = mean(src);//per-channel mean (a single value here, since src is grayscale)

Mat mm, mstd;

meanStdDev(src, mm, mstd);

cout << mm.rows << endl;

waitKey(0);

destroyAllWindows();

return 0;

}

⑧ RotatedRect fields, Rect fields, uniform random numbers

int main(int argc, char** argv) {

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png", IMREAD_GRAYSCALE);

//RotatedRect fields

//1. center

RotatedRect rrt;

rrt.center = Point2f(256, 256);

//2. angle

rrt.angle = 45.0;

//3. size

rrt.size = Size(100, 200);

//uniformly distributed random numbers (RNG::gaussian() gives normally distributed ones)

RNG rng(12345);

int x1 = (int)rng.uniform(0, 512);

//Rect fields

Rect rct;

rct.x = x1;

rct.y = (int)rng.uniform(0, 512);

rct.width = 10;

rct.height = 10;

waitKey(0);

destroyAllWindows();

return 0;

}

⑨ Splitting and merging channels, cropping an ROI

int main(int argc, char** argv) {

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png");//load as BGR so that split() yields three channels

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

//split

vector<Mat> mv;

split(src, mv);

int size = mv.size();

//merge

Mat dst;

merge(mv, dst);

//crop an ROI

Rect roi;

roi.x = 100;

roi.y = 100;

roi.width = 250;

roi.height = 250;

Mat sub = src(roi).clone();//crop the ROI and copy its pixels (deep copy)

Mat sub_view = src(roi);//crop the ROI without copying (shares data with src)

waitKey(0);

destroyAllWindows();

return 0;

}

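⑩ Histogram computation, equalization, normalization, and comparison

*1. How histogram equalization works

a. Count the frequency of every gray level in the source image to get the gray-level histogram.

b. Compute the cumulative frequency of every gray level.

c. Multiply each cumulative frequency by (256-1) to get the new gray level for that gray level.

d. Map every source pixel to its new gray level, which yields the equalized image and histogram.

*2. What histogram equalization does

a. It widens the range of gray levels in use, which raises contrast and makes detail easier to see, so it is useful for image enhancement.

b. It spreads the gray levels apart so that the gray-level distribution is closer to uniform.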
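Steps a to d can be sketched without OpenCV on a flat vector of 8-bit pixels. This is a minimal textbook version under that assumption; the name `equalize_sketch` is hypothetical, and the result is not guaranteed to match `equalizeHist` bit for bit, since a library implementation may scale the cumulative distribution differently.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Equalize an 8-bit grayscale image stored as a flat pixel vector.
// new_level = round(cumulative_frequency * 255), following steps a to d.
std::vector<std::uint8_t> equalize_sketch(const std::vector<std::uint8_t>& pixels) {
    assert(!pixels.empty());
    std::array<std::size_t, 256> hist{};            // a. gray-level counts
    for (std::uint8_t p : pixels) hist[p]++;

    std::array<std::uint8_t, 256> mapping{};        // c. old gray level -> new gray level
    std::size_t cumulative = 0;
    for (int g = 0; g < 256; g++) {
        cumulative += hist[g];                      // b. cumulative count
        double cdf = static_cast<double>(cumulative) / pixels.size();
        mapping[g] = static_cast<std::uint8_t>(cdf * 255.0 + 0.5);
    }

    std::vector<std::uint8_t> out(pixels.size());   // d. remap every pixel
    for (std::size_t i = 0; i < pixels.size(); i++) out[i] = mapping[pixels[i]];
    return out;
}
```

For the four pixels {10, 10, 20, 30} the cumulative frequencies are 0.5, 0.75 and 1.0, so the narrow input range 10 to 30 is stretched over 128 to 255.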

int main(int argc, char** argv) {

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png");//load as BGR: cvtColor(COLOR_BGR2GRAY) below needs 3 channels

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

//compute the histogram

vector<Mat> mv;

split(src, mv);

int histSize = 256;

Mat b_hist, g_hist, r_hist;

float range[] = { 0,256 };//upper bound is exclusive

const float* histRanges = { range };

calcHist(&mv[0], 1, 0, Mat(), b_hist, 1, &histSize, &histRanges, true, false);

//normalize the histogram

Mat result = Mat::zeros(Size(600, 400),CV_8UC3);

int margin = 50;

int nm = result.rows - 2 * margin;

normalize(b_hist, b_hist, 0, nm, NORM_MINMAX, -1, Mat());

//histogram equalization

Mat gray, dst;

cvtColor(src, gray, COLOR_BGR2GRAY);

equalizeHist(gray, dst);

//histogram comparison; hist1 and hist2 must first be filled by calcHist

//*1. Bhattacharyya distance

Mat hist1, hist2;

double hl2 = compareHist(hist1, hist2, HISTCMP_BHATTACHARYYA);

//*2. Correlation coefficient

double cl1 = compareHist(hist1, hist2, HISTCMP_CORREL);

waitKey(0);

destroyAllWindows();

return 0;

}

⑩① LUT (lookup table)

*1. Purpose

a. Remaps pixel values, e.g. 0-100 to 0-50 and 100-255 to 50-255.

b. Fast, because each pixel costs a single table lookup.

int main(int argc, char** argv) {

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png");//load as BGR: the Vec3b lookups below need 3 channels (and at least 256 columns)

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

//custom color map (here taken from row 10 of the image)

Mat lut = Mat::zeros(256, 1, CV_8UC3);

for (int i = 0; i < 256; i++) {

lut.at<Vec3b>(i, 0) = src.at<Vec3b>(10, i);

}

Mat dst;

LUT(src, lut, dst);

//built-in color map

applyColorMap(src, dst, COLORMAP_OCEAN);

waitKey(0);

destroyAllWindows();

return 0;

}

⑩② Image border padding

int main(int argc, char** argv) {

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png", IMREAD_GRAYSCALE);

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

int border = 8;

Mat border_m;

copyMakeBorder(src, border_m, border, border, border, border, BORDER_WRAP, Scalar(0, 0, 255));//padding widths for top, bottom, left, right; border mode; fill color (only used with BORDER_CONSTANT)

waitKey(0);

destroyAllWindows();

return 0;

}

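⑩② Image blurring

*1. How common blur filters work

a. Mean (box) blur

*Principle: a fixed-size kernel slides over the image; at each position the mean of the pixels under the kernel replaces the pixel under the kernel center.

*Pros: simple to compute and fast.

*Cons: reduces noise but also blurs the image, edges in particular.

b. Median blur

*Principle: same sliding window, but the median replaces the mean.

*Pros: works well on salt-and-pepper noise.

*Cons: may introduce discontinuities.

c. Gaussian blur

*Principle: a Gaussian-weighted average replaces the plain mean, so pixels near the center count more.

*Pros: works well on Gaussian noise.

d. Bilateral blur

*Principle: considers spatial closeness and pixel-value similarity together. The filter multiplies two Gaussian weights, one for spatial distance and one for intensity difference.

*Pros: removes noise while preserving edges.

*Cons: slow to compute.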

int main(int argc, char** argv) {

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png", IMREAD_GRAYSCALE);

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

Mat dst;

GaussianBlur(src, dst, Size(0, 0), 15);

Mat box_dst;

boxFilter(src, box_dst, -1, Size(25, 25), Point(-1, -1), true, BORDER_DEFAULT);

waitKey(0);

destroyAllWindows();

return 0;

}

⑩③ Custom convolution and custom filters

int main(int argc, char** argv) {

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png", IMREAD_GRAYSCALE);

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

int k = 15;

Mat mkernel = Mat::ones(k, k, CV_32F) / (float)(k * k);//k x k mean kernel: every weight is 1/(k*k)

Mat dst;

filter2D(src, dst, -1, mkernel, Point(-1, -1), 0, BORDER_DEFAULT);

//custom filter (Roberts kernel)

Mat roberts = (Mat_<int>(2, 2) << 1, 0, 0, -1);

Mat result;

filter2D(src, result, CV_32F, roberts, Point(-1, -1), 127, BORDER_DEFAULT);

convertScaleAbs(result, result);//computes |src*alpha+beta| and saturates to 8 bits

waitKey(0);

destroyAllWindows();

return 0;

}

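⑩④ Image gradient operators

*1. Sobel operator

*a.(Mat_<int>(3,3)<<-1,0,1,-2,0,2,-1,0,1)

*2. Scharr operator

*a.(Mat_<int>(3,3)<<-3,0,3,-10,0,10,-3,0,3)

Gives more weight to the pixels nearest the center.

*3. Roberts operator

*a.(Mat_<int>(2,2)<<1,0,0,-1)

*b. Measures the gradient along the diagonals.

*4. Laplacian operator

*a. Rotation invariant, so it sharpens in every direction.

*b.(Mat_<int>(3,3)<<0,1,0,1,-4,1,0,1,0)

*c. As a second-derivative operator it responds well to edges with a smooth transition, but it is sensitive to noise.

*5. Canny operator.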

int main(int argc, char** argv) {

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png", IMREAD_GRAYSCALE);

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

Mat roberts_x = (Mat_<int>(2, 2) << 1, 0, 0, -1);

Mat roberts_y = (Mat_<int>(2, 2) << 0, 1, -1, 0);

Mat grad_x, grad_y;

filter2D(src, grad_x, CV_32F, roberts_x, Point(-1, -1), 0, BORDER_DEFAULT);

filter2D(src, grad_y, CV_32F, roberts_y, Point(-1, -1), 0, BORDER_DEFAULT);

convertScaleAbs(grad_x, grad_x);

convertScaleAbs(grad_y, grad_y);

Mat result;

add(grad_x, grad_y, result);

Scharr(src, grad_x, CV_32F, 1, 0);

Scharr(src, grad_y, CV_32F, 0, 1);

convertScaleAbs(grad_x, grad_x);

convertScaleAbs(grad_y, grad_y);

Mat result2;

addWeighted(grad_x, 0.5, grad_y, 0.5, 0, result2);

Mat result3;

Laplacian(src,result3, -1, 3, 1.0, 0, BORDER_DEFAULT);

waitKey(0);

destroyAllWindows();

return 0;

}

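⑩⑤ USM sharpening

*1. Gaussian-blur the image, apply a Laplacian, then subtract the Laplacian result from the Gaussian-blur result.

*2. Laplacian sharpening here simply means Laplacian filtering.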

int main(int argc, char** argv) {

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png", IMREAD_GRAYSCALE);

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

Mat blur_image, dst;

GaussianBlur(src, blur_image, Size(3, 3), 0);

Laplacian(src, dst, -1, 1, 1.0, 0, BORDER_DEFAULT);

//subtract the Laplacian result from the Gaussian-blurred image to get the sharpened result

Mat usm_image;

addWeighted(blur_image, 1.0, dst, -1.0, 0, usm_image);

waitKey(0);

destroyAllWindows();

return 0;

}

⑩⑥ Gaussian random numbers

Mat noise=Mat::zeros(src.size(),src.type());

randn(noise,Scalar(25, 15, 45),Scalar(60, 40, 30));//per-channel mean, then per-channel standard deviation

⑩⑦ Image edge extraction

*1. Use the Canny result as a mask and AND the source image with itself under that mask.

int main(int argc, char** argv) {

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png", IMREAD_GRAYSCALE);

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

int t1 = 50;

Mat edges, dst;

Canny(src, edges, t1, t1 * 3);

bitwise_and(src, src, dst, edges);//keeps only the source pixels that lie on edges

waitKey(0);

destroyAllWindows();

return 0;

}

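⑩⑧ Thresholding methods for binarization

*1. Fixed threshold

*a.THRESH_BINARY : above the threshold becomes maxval (255), otherwise 0

*b.THRESH_BINARY_INV : above the threshold becomes 0, otherwise maxval (255)

*c.THRESH_TRUNC : above the threshold is clipped to the threshold, otherwise unchanged

*d.THRESH_TOZERO : at or below the threshold becomes 0, above it unchanged

*e.THRESH_TOZERO_INV : above the threshold becomes 0, otherwise unchanged

*2. Adaptive threshold

*a. A global threshold applies one value to the whole image, which binarizes poorly under uneven lighting.

*b.ADAPTIVE_THRESH_MEAN_C: the threshold is the mean of the neighborhood (minus the constant C)

*c.ADAPTIVE_THRESH_GAUSSIAN_C: the threshold is the Gaussian-weighted sum of the neighborhood (minus the constant C)

*3. OTSU binarization (maximum between-class variance)

*a. For an image with a bimodal histogram, the threshold falls between the two peaks.

*b. It may work poorly on images whose histogram is not bimodal.

*c. Equivalent to finding the threshold that makes the two classes differ most from each other and least within themselves.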
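The Otsu idea in item 3 can be sketched as an exhaustive search over the 256 candidate thresholds. This is a minimal standard-library version, assuming a flat vector of 8-bit pixels; `otsu_threshold_sketch` is a hypothetical name and the tie-breaking may differ from what `threshold(..., THRESH_OTSU)` returns.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <vector>

// Return the threshold t (0..255) that maximizes the between-class variance
// when pixels <= t form one class and pixels > t form the other.
int otsu_threshold_sketch(const std::vector<std::uint8_t>& pixels) {
    assert(!pixels.empty());
    std::array<double, 256> hist{};
    for (std::uint8_t p : pixels) hist[p] += 1.0;

    const double total = static_cast<double>(pixels.size());
    double sum_all = 0.0;
    for (int g = 0; g < 256; g++) sum_all += g * hist[g];

    double w0 = 0.0, sum0 = 0.0, best_var = -1.0;
    int best_t = 0;
    for (int t = 0; t < 256; t++) {
        w0 += hist[t];                       // pixel count of the low class
        sum0 += t * hist[t];                 // intensity sum of the low class
        double w1 = total - w0;              // pixel count of the high class
        if (w0 == 0.0 || w1 == 0.0) continue;
        double mu0 = sum0 / w0;
        double mu1 = (sum_all - sum0) / w1;
        double between = w0 * w1 * (mu0 - mu1) * (mu0 - mu1);
        if (between > best_var) { best_var = between; best_t = t; }
    }
    return best_t;
}
```

With two well-separated clusters, say three pixels at 10 and three at 200, every threshold between the clusters gives the same variance, and the sketch returns the first such value.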

int main(int argc, char** argv) {

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png");//load as BGR: cvtColor(COLOR_BGR2GRAY) below needs 3 channels

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

//global fixed threshold

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 127, 255, THRESH_BINARY_INV);

//threshold chosen by Otsu (maximum between-class variance) and by the triangle method

double t1 = threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

double t2 = threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_TRIANGLE);

//adaptive threshold

adaptiveThreshold(gray, binary, 255, ADAPTIVE_THRESH_MEAN_C, THRESH_BINARY, 25, 10);

waitKey(0);

destroyAllWindows();

return 0;

}

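⑩⑨ Connected component labeling

*1. Connected component: a set of adjacent foreground pixels that share the same pixel value.

*2. Applications: OCR character segmentation; extracting moving foreground targets in visual tracking; extracting regions of interest in medical images.

Any scenario that needs foreground objects extracted for later analysis relies on connected component labeling.

*3. Two-Pass algorithm

*a. Binarize the image. First pass, for each foreground pixel:

If the left and upper neighbors are both 0, give the pixel a new label.

*b. If exactly one of the left and upper neighbors is 1, copy that neighbor's label.

*c. If both neighbors are 1 and carry the same label, use that label.

*d. If both neighbors are 1 but carry different labels, use the smaller label and record the two labels as equivalent.

*e. Second pass: replace every label with the smallest label in its equivalence set.

*4. Seed Fill algorithm

*a. Binarize the image.

*b. Scan until a pixel with value 1 that has no label yet is found.

**Treat it as a seed, give it a new label, and push it onto the stack.

**While the stack is not empty, pop a pixel, visit its four neighbors, and label and push each neighbor whose value is 1 and that is still unlabeled.

**Repeat until the stack is empty.

The pixels labeled this way form one connected component.

*c. Continue scanning and repeat step b.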
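The Seed Fill procedure can be sketched on a plain 2D grid of 0/1 values. This is an illustrative version with 4-connectivity, not the algorithm `connectedComponents` uses internally; `seed_fill_label_sketch` is a hypothetical name.

```cpp
#include <cassert>
#include <utility>
#include <vector>

// Label 4-connected foreground regions (value 1) in a binary grid.
// Returns the number of regions; labels[r][c] is 0 for background, 1..n otherwise.
int seed_fill_label_sketch(const std::vector<std::vector<int>>& binary,
                           std::vector<std::vector<int>>& labels) {
    const int rows = static_cast<int>(binary.size());
    const int cols = rows ? static_cast<int>(binary[0].size()) : 0;
    labels.assign(rows, std::vector<int>(cols, 0));
    const int dr[4] = {-1, 1, 0, 0};
    const int dc[4] = {0, 0, -1, 1};
    int next_label = 0;

    for (int r = 0; r < rows; r++) {
        for (int c = 0; c < cols; c++) {
            if (binary[r][c] != 1 || labels[r][c] != 0) continue;
            ++next_label;                                  // new seed, new label
            std::vector<std::pair<int, int>> stack;
            stack.push_back(std::make_pair(r, c));
            labels[r][c] = next_label;
            while (!stack.empty()) {
                std::pair<int, int> cur = stack.back();
                stack.pop_back();
                for (int k = 0; k < 4; k++) {              // visit the four neighbors
                    int nr = cur.first + dr[k], nc = cur.second + dc[k];
                    if (nr < 0 || nr >= rows || nc < 0 || nc >= cols) continue;
                    if (binary[nr][nc] == 1 && labels[nr][nc] == 0) {
                        labels[nr][nc] = next_label;
                        stack.push_back(std::make_pair(nr, nc));
                    }
                }
            }
        }
    }
    return next_label;
}
```

Labeling a pixel at the moment it is pushed, rather than when it is popped, keeps any pixel from entering the stack twice.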

RNG rng(12345);

int main(int argc, char** argv) {

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png");//load as BGR: cvtColor(COLOR_BGR2GRAY) below needs 3 channels

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

//binarize with an Otsu threshold

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY|THRESH_OTSU);

Mat labels = Mat::zeros(binary.size(), CV_32S);

int num_labels = connectedComponents(binary, labels, 8, CV_32S, CCL_DEFAULT);

vector<Vec3b> colorTable(num_labels);

//assign a color to each label

colorTable[0] = Vec3b(0, 0, 0);

for (int i = 1; i < num_labels; i++) {

colorTable[i] = Vec3b(rng.uniform(0, 256), rng.uniform(0, 256), rng.uniform(0, 256));

}

Mat result = Mat::zeros(src.size(), CV_8UC3);//3 channels, because Vec3b colors are written below

int w = result.cols;

int h = result.rows;

for (int row = 0; row < h; row++) {

for (int col = 0; col < w; col++) {

int label = labels.at<int>(row, col);//label of this pixel from the label matrix

result.at<Vec3b>(row, col) = colorTable[label];//pick the color that belongs to the label

}

}

/*Mat labels = Mat::zeros(src.size(), CV_32S);

Mat stats, centroids;

int num_labels = connectedComponentsWithStats(src, labels, stats, centroids, 8, CV_32S, CCL_DEFAULT);

vector<Vec3b> colorTable(num_labels);

colorTable[0] = Vec3b(0, 0, 0);

for (int i = 1; i < num_labels; i++) {

colorTable[i] = Vec3b(rng.uniform(0, 256), rng.uniform(0, 256), rng.uniform(0, 256));

}

Mat result = Mat::zeros(src.size(), CV_8UC3);

int w = result.cols;

int h = result.rows;

for (int row = 0; row < h; row++) {

for (int col = 0; col < w; col++) {

int label = labels.at<int>(row, col);

result.at<Vec3b>(row, col) = colorTable[label];

}

}

for (int i = 1; i < num_labels; i++) {

int cx = centroids.at<double>(i, 0);

int cy = centroids.at<double>(i, 1);

int x = stats.at<int>(i, CC_STAT_LEFT);

int y = stats.at<int>(i, CC_STAT_TOP);

int width = stats.at<int>(i, CC_STAT_WIDTH);

int height = stats.at<int>(i, CC_STAT_HEIGHT);

int area = stats.at<int>(i, CC_STAT_AREA);

}*/

waitKey(0);

destroyAllWindows();

return 0;

}

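②⑩ Contour shape matching

*1. Shape matching with Hu moments of contours

**a. Image moments are used to analyze and describe segmented shapes.

**b. Simple properties such as area, centroid, and orientation can be derived from image moments.

**c. Hu moments are a set of 7 two-dimensional invariant moments that are insensitive to translation, rotation, and scaling (the seventh changes sign under mirroring). They are often used as feature descriptors.

**d. Area: area=m00; centroid: x_=m10/m00, y_=m01/m00

*2. matchShapes methods:

**a.I1: sum of absolute differences of the reciprocals of the (log-scaled) Hu moments

**b.I2: sum of absolute differences of the (log-scaled) Hu moments

**c.I3: largest relative absolute difference of the (log-scaled) Hu moments.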

int main(int argc, char** argv) {

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png", IMREAD_GRAYSCALE);

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

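vector<vector<Point>> contours1;//fill with findContours on image 1 before use

vector<vector<Point>> contours2;//fill with findContours on image 2 before use

Moments mm2 = moments(contours2[0]);//moments of the first contour of image 2

Mat hu2;

HuMoments(mm2, hu2);//Hu moments from the moments

//loop over the contours of image 1 and compute moments and Hu moments the same way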

for (size_t t = 0; t < contours1.size(); t++) {

Moments mm = moments(contours1[t]);

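//centroid from the zeroth- and first-order moments

double cx = mm.m10 / mm.m00;

double cy = mm.m01 / mm.m00;

Mat hu;

HuMoments(mm, hu);//the 7 Hu invariants, derived from the moments

double dist = matchShapes(contours1[t], contours2[0], CONTOURS_MATCH_I1, 0);//matchShapes takes the contours and compares their Hu moments internally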

if (dist < 1.0) {

drawContours(src, contours1, t, Scalar(0, 0, 255), 2, 8);

}

}

waitKey(0);

destroyAllWindows();

return 0;

}

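②① Contour approximation and fitting

*1. Contour approximation is essentially about reducing the number of points used to encode the contour.

*2. The approximate contour is obtained by limiting the maximum distance between the original contour and its approximation, so local detail is ignored.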
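The distance-bounded simplification described above is commonly implemented with the Douglas-Peucker scheme. The sketch below handles an open polyline only and uses hypothetical names (`Pt`, `approx_open_curve_sketch`); closed contours, as handled by `approxPolyDP` with `closed=true`, need extra care that is left out here.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Pt { double x, y; };

// Perpendicular distance from p to the line through a and b
// (falls back to the point distance when a == b).
double dist_to_line(const Pt& p, const Pt& a, const Pt& b) {
    double dx = b.x - a.x, dy = b.y - a.y;
    double len = std::sqrt(dx * dx + dy * dy);
    if (len == 0.0) return std::sqrt((p.x - a.x) * (p.x - a.x) + (p.y - a.y) * (p.y - a.y));
    return std::fabs(dy * (p.x - a.x) - dx * (p.y - a.y)) / len;
}

// Keep the point farthest from the chord first..last if it is farther than epsilon,
// and recurse on both halves; otherwise the chord replaces the whole range.
void simplify_range(const std::vector<Pt>& pts, std::size_t first, std::size_t last,
                    double epsilon, std::vector<Pt>& out) {
    double max_d = 0.0;
    std::size_t idx = first;
    for (std::size_t i = first + 1; i < last; i++) {
        double d = dist_to_line(pts[i], pts[first], pts[last]);
        if (d > max_d) { max_d = d; idx = i; }
    }
    if (max_d > epsilon) {
        simplify_range(pts, first, idx, epsilon, out);
        simplify_range(pts, idx, last, epsilon, out);
    } else {
        out.push_back(pts[last]);
    }
}

std::vector<Pt> approx_open_curve_sketch(const std::vector<Pt>& pts, double epsilon) {
    if (pts.size() < 3) return pts;
    std::vector<Pt> out;
    out.push_back(pts.front());
    simplify_range(pts, 0, pts.size() - 1, epsilon, out);
    return out;
}
```

An L-shaped polyline with seven samples collapses to its three corner points once epsilon is smaller than the corner's distance from the end-to-end chord.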

int main(int argc, char** argv) {

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png");//load as BGR: cvtColor(COLOR_BGR2GRAY) below needs 3 channels

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

////fit an ellipse to each contour

//Mat gray, binary;

//cvtColor(src, gray, COLOR_BGR2GRAY);

//threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

//vector<vector<Point>> contours;

//vector<Vec4i> hirearchy;

//findContours(binary, contours, hirearchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE, Point());

//for (size_t t = 0; t < contours.size(); t++) {

// RotatedRect rrt = fitEllipse(contours[t]);

// float w = rrt.size.width;

// float h = rrt.size.height;

// Point center = rrt.center;

// circle(src, center, 3, Scalar(255, 0, 0), 2, 8, 0);

// ellipse(src, rrt, Scalar(0, 255, 0), 2, 8);

//}

GaussianBlur(src,src,Size(3,3),0);

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

vector<vector<Point>> contours;

vector<Vec4i> hirearchy;

findContours(binary, contours, hirearchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE, Point());

for (size_t t = 0; t < contours.size(); t++) {

Mat result;

approxPolyDP(contours[t], result, 4, true);

}

waitKey(0);

destroyAllWindows();

return 0;

}

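②② Hough line detection

*Infinitely many lines pass through a point, and each line is uniquely determined by the perpendicular dropped onto it from the origin.

*Take the length of that perpendicular as one coordinate and its angle as the other to get a point in a new (parameter) space. A line in the original space therefore maps to a single point in the new space.

*A point in the original space maps to a curve in the new space.

*The intersection of two curves in the new space fixes one length and one angle, that is, one line in the original space.

*The more curves pass through an intersection in the new space, the more collinear points there are in the original space.

*So line detection samples each edge pixel over angle and distance from the origin and counts how many pixels share the same (angle, distance) cell. The larger the accumulated count, the more likely the cell is a real line.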
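The voting scheme can be sketched with a plain accumulator array. The version below assumes integer edge coordinates, a 1-degree angle step, and a 1-pixel distance step; `HoughPeak` and `hough_peak_sketch` are hypothetical names, and only the single strongest cell is returned rather than every cell above a threshold.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

struct HoughPeak { int rho; int theta_deg; int votes; };

// Vote in (rho, theta) space for a list of edge points and return the strongest cell.
// rho = x*cos(theta) + y*sin(theta), with theta sampled every degree in [0, 180).
HoughPeak hough_peak_sketch(const std::vector<std::pair<int, int>>& points, int max_rho) {
    const double kPi = 3.14159265358979323846;
    const int n_theta = 180;
    const int n_rho = 2 * max_rho + 1;                 // rho covers [-max_rho, max_rho]
    std::vector<int> acc(static_cast<std::size_t>(n_theta) * n_rho, 0);

    for (const auto& p : points) {
        for (int t = 0; t < n_theta; t++) {            // one curve per point
            double theta = t * kPi / 180.0;
            int rho = static_cast<int>(
                std::lround(p.first * std::cos(theta) + p.second * std::sin(theta)));
            if (rho < -max_rho || rho > max_rho) continue;
            acc[t * n_rho + (rho + max_rho)]++;
        }
    }
    HoughPeak best{0, 0, 0};
    for (int t = 0; t < n_theta; t++)
        for (int r = 0; r < n_rho; r++)
            if (acc[t * n_rho + r] > best.votes)
                best = HoughPeak{r - max_rho, t, acc[t * n_rho + r]};
    return best;
}
```

One hundred points on the horizontal line y = 5 all vote for the cell (rho = 5, theta = 90 degrees), so that cell collects 100 votes while neighboring angles collect far fewer.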

int main(int argc, char** argv) {

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png");//load as BGR: cvtColor(COLOR_BGR2GRAY) below needs 3 channels

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

GaussianBlur(src,src,Size(3,3),0);

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

//Hough line detection

vector<Vec3f> lines;

HoughLines(binary, lines, 1, CV_PI / 180.0, 100, 0, 0);

//draw the lines

Point pt1, pt2;

for (size_t i = 0; i < lines.size(); i++) {

//Vec3f layout: distance, angle, accumulator votes

float rho = lines[i][0];//distance

float theta = lines[i][1];//angle

float acc = lines[i][2];//accumulator votes

//foot of the perpendicular from the origin

double a = cos(theta);

double b = sin(theta);

double x0 = a * rho, y0 = b * rho;

//line end points

pt1.x = cvRound(x0 + 1000 * (-b));

pt1.y = cvRound(y0 + 1000 * (a));

pt2.x = cvRound(x0 - 1000 * (-b));

pt2.y = cvRound(y0 - 1000 * (a));

int angle = round((theta / CV_PI) * 180);

if (rho > 0) {//right-leaning

line(src, pt1, pt2, Scalar(0, 0, 255), 1, 8, 0);

if (angle == 90) {

line(src, pt1, pt2, Scalar(0, 255, 255), 2, 8, 0);

}

if (angle <= 1) {

line(src, pt1, pt2, Scalar(255, 255, 0), 4, 8, 0);

}

}

}

//HoughLinesP: returns the two end points of each segment

Canny(src, binary, 80, 160, 3, false);

vector<Vec4i> linesP;//separate name: `lines` is already declared above

HoughLinesP(binary, linesP, 1, CV_PI / 180, 80, 200, 10);

Mat result = Mat::zeros(src.size(), src.type());

for (size_t i = 0; i < linesP.size(); i++) {

line(result, Point(linesP[i][0], linesP[i][1]), Point(linesP[i][2], linesP[i][3]), Scalar(0, 0, 255), 1, 8);

}

waitKey(0);

destroyAllWindows();

return 0;

}

②③ Hough circle detection

int main(int argc, char** argv) {

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png");//load as BGR: cvtColor(COLOR_BGR2GRAY) below needs 3 channels

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

GaussianBlur(gray, gray, Size(9, 9), 2, 2);

vector<Vec3f> circles;

double minDist = 20;

double min_radius = 10;

double max_radius = 50;

HoughCircles(gray, circles, HOUGH_GRADIENT, 3, minDist, 100, 100, min_radius, max_radius);

for (size_t t = 0; t < circles.size(); t++) {

Point center(circles[t][0], circles[t][1]);

int radius = round(circles[t][2]);

circle(src, center, radius, Scalar(0, 0, 255), 2, 8, 0);

}

waitKey(0);

destroyAllWindows();

return 0;

}

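②④ Morphological transforms

*1. Erosion (described in terms of the brighter regions)

**Takes the local minimum: the pixel under the kernel center is replaced by the minimum of the pixels under the kernel. The opposite of dilation.

**Not the inverse of dilation: one does not undo the other.

*2. Dilation (described in terms of the brighter regions)

**Takes the local maximum: the pixel under the kernel center is replaced by the maximum of the pixels under the kernel.

**Not the inverse of erosion: one does not undo the other.

*3. Opening

**Erosion followed by dilation.

**Removes small objects and separates objects at thin connections.

*4. Closing

**Dilation followed by erosion; fills small holes and joins nearby objects.

*5. Top hat

**Source image minus its opening.

**Extracts the burrs and small specks that opening removed.

*6. Black hat

**Closing minus the source image.

**Extracts the holes that closing filled.

*7. Basic gradient

**Dilation - erosion

*8. Internal gradient

**Source - erosion

*9. External gradient

**Dilation - source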
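Erosion and dilation as local minimum and maximum, and opening and closing as their compositions, can be sketched on a small integer grid. The 3x3 square structuring element and the choice to ignore out-of-image neighbors are simplifying assumptions; all function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

using Grid = std::vector<std::vector<int>>;

// Replace each pixel by the min (erode) or max (dilate) of its 3x3 neighborhood.
// Neighbors outside the image are skipped.
Grid morph3x3(const Grid& img, bool take_max) {
    int rows = static_cast<int>(img.size());
    int cols = rows ? static_cast<int>(img[0].size()) : 0;
    Grid out(rows, std::vector<int>(cols, 0));
    for (int r = 0; r < rows; r++)
        for (int c = 0; c < cols; c++) {
            int v = img[r][c];
            for (int dr = -1; dr <= 1; dr++)
                for (int dc = -1; dc <= 1; dc++) {
                    int nr = r + dr, nc = c + dc;
                    if (nr < 0 || nr >= rows || nc < 0 || nc >= cols) continue;
                    v = take_max ? std::max(v, img[nr][nc]) : std::min(v, img[nr][nc]);
                }
            out[r][c] = v;
        }
    return out;
}

Grid erode_sketch(const Grid& g)  { return morph3x3(g, false); }
Grid dilate_sketch(const Grid& g) { return morph3x3(g, true); }
Grid open_sketch(const Grid& g)   { return dilate_sketch(erode_sketch(g)); }  // erode, then dilate
Grid close_sketch(const Grid& g)  { return erode_sketch(dilate_sketch(g)); }  // dilate, then erode
```

On a 5x5 grid with a single bright pixel in the middle, opening removes the speck entirely, while closing returns the grid unchanged.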

int main(int argc, char** argv) {

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png");//load as BGR: cvtColor(COLOR_BGR2GRAY) below needs 3 channels

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY_INV | THRESH_OTSU);

Mat dst;

Mat kernel = getStructuringElement(MORPH_RECT, Size(15, 1), Point(-1, -1));

morphologyEx(binary, dst, MORPH_OPEN, kernel, Point(-1, -1), 1);//opening

Mat basic_grad, inter_grad, exter_grad;

kernel = getStructuringElement(MORPH_RECT, Size(3, 3), Point(-1, -1));//reuse kernel; redeclaring it would not compile

morphologyEx(gray, basic_grad, MORPH_GRADIENT, kernel, Point(-1, -1), 1);

Mat dst3, dst4;

erode(gray, dst3, kernel);

dilate(gray, dst4, kernel);

subtract(gray, dst3, inter_grad);

subtract(dst4, gray, exter_grad);

Mat dst1, dst2;

kernel = getStructuringElement(MORPH_RECT, Size(3, 3), Point(-1, -1));//reuse kernel; redeclaring it would not compile

erode(src, dst1, kernel);

dilate(src, dst2, kernel);

waitKey(0);

destroyAllWindows();

return 0;

}

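②⑤ Hit-or-miss transform (HitAndMiss)

*1. Finds specific structural patterns in a binary image, i.e. targets with a particular pixel arrangement.

The center pixel is set to 1 only when the structuring element matches the covered region exactly; otherwise it is 0.

*2. The image is searched for places that match the first structuring element (a "hit"); the second structuring element is then tested at the same position against the background, and the position is kept only if it fits there too (the "miss" part).

*3. Erode A with structuring element B1, erode the complement of A with structuring element B2, and AND the two results.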

int main(int argc, char** argv) {

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png");//load as BGR: cvtColor(COLOR_BGR2GRAY) below needs 3 channels

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY_INV | THRESH_OTSU);

Mat hitmiss;

Mat k = getStructuringElement(MORPH_CROSS, Size(15, 15), Point(-1, -1));

morphologyEx(binary, hitmiss, MORPH_HITMISS, k);

waitKey(0);

destroyAllWindows();

return 0;

}

②⑥ Contour extraction and contour approximation

int main(int argc, char** argv) {

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png");//load as BGR: cvtColor(COLOR_BGR2GRAY) below needs 3 channels

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY_INV | THRESH_OTSU);

//closing

Mat se = getStructuringElement(MORPH_RECT, Size(15, 15), Point(-1, -1));

morphologyEx(binary, binary, MORPH_CLOSE, se);

//find contours

int height = binary.rows;

int width = binary.cols;

vector<vector<Point>> contours;

vector<Vec4i> hirearchy;

findContours(binary, contours, hirearchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE, Point());

double max_area = -1;

int cindex = -1;

for (size_t t = 0; t < contours.size(); t++) {

Rect rect = boundingRect(contours[t]);//upright bounding rectangle of the contour

if (rect.height >= height || rect.width >= width) {

continue;

}

double area = contourArea(contours[t]);//contour area

double len = arcLength(contours[t], true);//contour perimeter

//keep the contour with the largest area

if (area > max_area) {

max_area = area;

cindex = static_cast<int>(t);

}

}

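if (cindex < 0) return -1;//no usable contour found, so contours[cindex] below would be out of range

drawContours(src, contours, cindex, Scalar(0, 0, 255), 2, 8);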

Mat pts;

approxPolyDP(contours[cindex], pts, 4, true);

Mat result = Mat::zeros(src.size(), src.type());

drawContours(result, contours, cindex, Scalar(0, 0, 255), 2, 8);

for (int i = 0; i < pts.rows; i++) {

Vec2i pt = pts.at<Vec2i>(i, 0);

circle(src, Point(pt[0], pt[1]), 2, Scalar(0, 255, 0), 2, 8, 0);

circle(result, Point(pt[0], pt[1]), 2, Scalar(0, 255, 0), 2, 8, 0);

}

waitKey(0);

destroyAllWindows();

return 0;

}

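②⑦ Extracting a region of interest with a color-space mask

*1. Convert the source image to HSV.

*2. Use inRange with a color interval to build the mask.

*3. Use the mask to extract the selected region from the source image.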

int main(int argc, char** argv) {

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png", IMREAD_GRAYSCALE);

if (src.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

VideoCapture capture("/.mp4");

if (!capture.isOpened()) {

printf("could not open the camera...\n");

return -1;

}

Mat frame, hsv, mask, result;

while (true) {

bool ret = capture.read(frame);

if (!ret) break;

cvtColor(frame, hsv, COLOR_BGR2HSV);

inRange(hsv, Scalar(35, 42, 46), Scalar(77, 255, 255), mask);

bitwise_not(mask, mask);

bitwise_and(frame, frame, result, mask);

char c = waitKey(5);

if (c == 27) {

break;

}

}

capture.release();

waitKey(0);

destroyAllWindows();

return 0;

}

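②⑧ Histogram back projection

*1. Purpose:

**Image segmentation: locating the region of interest in the source image.

*2. The output is a matrix the same size as the source image; each pixel holds the histogram value of its bin.

*3. Principle:

**Build a histogram of the target region.

**For every pixel of the source image, look up the value of the histogram bin it falls into.

**Write those values to the output matrix.

**Filter the output with a threshold.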

int main(int argc, char** argv) {

Mat model = imread("D:/projects/opencv_tutorial/data/images/sample.png");

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png");//load as BGR: cvtColor(COLOR_BGR2HSV) below needs 3 channels

if (src.empty()||model.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

//convert to the HSV color space

Mat model_hsv, image_hsv;

cvtColor(model, model_hsv, COLOR_BGR2HSV);

cvtColor(src, image_hsv, COLOR_BGR2HSV);

//compute the histogram of the model

int h_bins = 48, s_bins = 48;

int histSize[] = { h_bins,s_bins };//2D histogram over h and s

int channels[] = { 0,1 };//channels h and s

Mat roiHist;

float h_range[] = { 0,180 };

float s_range[] = { 0,256 };//upper bound is exclusive

const float* ranges[] = { h_range,s_range };

calcHist(&model_hsv, 1, channels, Mat(), roiHist, 2, histSize, ranges, true, false);

normalize(roiHist, roiHist, 0, 255, NORM_MINMAX, -1, Mat());

//back projection

MatND backproj;

calcBackProject(&image_hsv, 1, channels, roiHist, backproj, ranges, 1.0);

waitKey(0);

destroyAllWindows();

return 0;

}

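②⑨ Harris corner detection

*1. What a corner is: a point where the intensity changes strongly in both the x and y directions.

*2. Uses: image matching and stitching.

*3. Harris corner detection is rotation invariant but not scale invariant.

*4. A window slides over the image; at each position the gradient changes in x and y are accumulated into a 2x2 matrix.

*5. The eigenvalues of that matrix classify the position: two large eigenvalues mean a corner, one large and one small mean an edge, two small mean a flat region.

In practice the response is computed from ① the determinant and ② the trace, which avoids an explicit eigen-decomposition.

*6. Shi-Tomasi corner detection uses the smaller of the two eigenvalues to classify the position,

which tends to give more stable corners.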

void harris_demo(Mat& image) {

Mat gray;

cvtColor(image, gray, COLOR_BGR2GRAY);

Mat dst;

double k = 0.04;

int blocksize = 2;

int ksize = 3;

cornerHarris(gray, dst, blocksize, ksize, k);

Mat dst_norm = Mat::zeros(dst.size(), dst.type());

normalize(dst, dst_norm, 0, 255, NORM_MINMAX, -1, Mat());

convertScaleAbs(dst_norm, dst_norm);

RNG rng(12345);

for (int row = 0; row < image.rows; row++) {

for (int col = 0; col < image.cols; col++) {

int rsp = dst_norm.at<uchar>(row, col);//where the normalized Harris response exceeds 150, a randomly colored circle is drawn on the source image

if (rsp > 150) {

int b = rng.uniform(0, 255);

int g = rng.uniform(0, 255);

int r = rng.uniform(0, 255);

circle(image, Point(col, row), 5, Scalar(b, g, r), 2, 8, 0);

}

}

}

}

void shitomas_demo(Mat& image) {

Mat gray;

cvtColor(image, gray, COLOR_BGR2GRAY);

vector<Point2f> corners;

double quality_level = 0.01;

RNG rng(12345);

goodFeaturesToTrack(gray, corners, 200, quality_level, 3, Mat(), 3, false);//Shi-Tomasi corner detection

for (int i = 0; i < corners.size(); i++) {

int b = rng.uniform(0, 255);

int g = rng.uniform(0, 255);

int r = rng.uniform(0, 255);

circle(image, corners[i], 5, Scalar(b, g, r), 2, 8, 0);

}

}

③⑩ Finding the target contour with inRange

void process_frame(Mat& image) {

/*

Extract a mask with inRange

Apply an opening to the mask

Extract the contours of the mask

Pick the largest contour

*/

Mat hsv, mask;

cvtColor(image, hsv, COLOR_BGR2HSV);//the inRange bounds below are HSV values

inRange(hsv, Scalar(0, 43, 46), Scalar(10, 255, 255), mask);

Mat se = getStructuringElement(MORPH_RECT, Size(15, 15));

morphologyEx(mask, mask, MORPH_OPEN, se);

vector<vector<Point>> contours;

vector<Vec4i> hirearchy;

findContours(mask, contours, hirearchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE, Point());

int index = -1;

double max_area = 0;

for (size_t t = 0; t < contours.size(); t++) {

double area = contourArea(contours[t]);

if (area > max_area) {

max_area = area;

index = t;

}

}

if (index >= 0) {

RotatedRect rrt = minAreaRect(contours[index]);

ellipse(image, rrt, Scalar(255, 0, 0), 2, 8);

circle(image, rrt.center, 4, Scalar(0, 255, 0), 2, 8, 0);

}

}

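③① Foreground/background separation

*1. Background initialization -> foreground extraction -> background update

*2. Subtract the background frame from the current frame and threshold the difference to get the foreground.

*3. KNN: a sample is assigned the class that is most common among its K nearest neighbors.

*4. Gaussian-mixture background modeling:

① Over a limited time span, build a Gaussian model of the values observed at each pixel position.

② Use the 3σ rule to decide whether a pixel is foreground or background.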
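The 3σ test can be illustrated for a single pixel. Note the simplification: this sketch keeps one Gaussian per pixel, whereas a mixture model such as MOG2 maintains several; `PixelModel`, `classify_and_update`, and the learning rate `alpha` are hypothetical names chosen for illustration.

```cpp
#include <cassert>
#include <cmath>

// Single-Gaussian model of one pixel's intensity over time.
struct PixelModel {
    double mean;
    double var;
};

// Returns true if `value` is foreground under the 3-sigma rule. Background
// samples update the running mean and variance with learning rate alpha;
// foreground samples leave the model untouched.
bool classify_and_update(PixelModel& m, double value, double alpha) {
    double diff = value - m.mean;
    bool foreground = std::fabs(diff) > 3.0 * std::sqrt(m.var);
    if (!foreground) {
        m.mean += alpha * diff;
        m.var = (1.0 - alpha) * m.var + alpha * diff * diff;
    }
    return foreground;
}
```

With a model of mean 100 and variance 25 (σ = 5), a sample of 104 is background and nudges the mean to 100.4, while a sample of 200 is far outside 3σ and is reported as foreground.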

auto pMOG2 = createBackgroundSubtractorMOG2(500, 100, false);

int main(int argc, char** argv) {

Mat model = imread("D:/projects/opencv_tutorial/data/images/sample.png");

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png", IMREAD_GRAYSCALE);

if (src.empty()||model.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

waitKey(0);

destroyAllWindows();

return 0;

}

void process2(Mat& image) {

Mat mask, bg_image;

pMOG2->apply(image, mask);

Mat se = getStructuringElement(MORPH_RECT, Size(1, 5), Point(-1, -1));

morphologyEx(mask, mask, MORPH_OPEN, se);

vector<vector<Point>> contours;

vector<Vec4i> hirearchy;

findContours(mask, contours, hirearchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE, Point());

for (size_t t = 0; t < contours.size(); t++) {

double area = contourArea(contours[t]);

if (area < 100) {

continue;

}

Rect box = boundingRect(contours[t]);

RotatedRect rrt = minAreaRect(contours[t]);

circle(image, rrt.center, 2, Scalar(255, 0, 0), 2, 8, 0);

ellipse(image, rrt, Scalar(0, 0, 255), 2, 8);

}

}

void process_frame(Mat& image) {

Mat mask, bg_image;

pMOG2->apply(image, mask);

pMOG2->getBackgroundImage(bg_image);

}

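③② Optical flow analysis

*1. Dense optical flow: the flow is computed for every pixel in the image.

*2. Optical flow: the instantaneous velocity, on the image plane, of objects moving in space.

*3. Basic assumptions: a. the same target keeps the same brightness across frames.

b. the displacement between adjacent frames is small.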

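③③ Video analysis with MeanShift

*1. Select the target in the first frame.

*2. Extract the target ROI and convert it to HSV.

*3. Use inRange() on the ROI to build the mask.

*4. Compute the histogram of the ROI under the mask.

*5. Convert every frame of the video to HSV and back-project the histogram onto it.

*6. Run mean shift on the back projection.

*7. Mean shift:

① Compute the weighted centroid (mean position) of the pixels inside the window.

② Measure the offset from the window center to that centroid.

③ Move the window center to the centroid.

④ Repeat until the center stops moving.

That center is the position of the target.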
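Mean shift on a back projection repeatedly recenters a fixed-size window on the weighted centroid of the values it covers. The sketch below assumes the back projection is a 2D grid of non-negative weights; `Window` and `mean_shift_sketch` are hypothetical names, and the stopping rule (window position unchanged after rounding) is one simple choice among several.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Window { int x, y, w, h; };

// Shift `win` toward the weighted centroid of `weights` until the window
// stops moving or max_iter iterations have run.
Window mean_shift_sketch(const std::vector<std::vector<double>>& weights,
                         Window win, int max_iter) {
    const int rows = static_cast<int>(weights.size());
    const int cols = rows ? static_cast<int>(weights[0].size()) : 0;
    for (int it = 0; it < max_iter; it++) {
        double m00 = 0.0, m10 = 0.0, m01 = 0.0;     // zeroth- and first-order moments
        for (int y = win.y; y < win.y + win.h; y++)
            for (int x = win.x; x < win.x + win.w; x++) {
                if (y < 0 || y >= rows || x < 0 || x >= cols) continue;
                m00 += weights[y][x];
                m10 += x * weights[y][x];
                m01 += y * weights[y][x];
            }
        if (m00 <= 0.0) break;                       // nothing to track inside the window
        int nx = static_cast<int>(std::lround(m10 / m00 - (win.w - 1) / 2.0));
        int ny = static_cast<int>(std::lround(m01 / m00 - (win.h - 1) / 2.0));
        if (nx == win.x && ny == win.y) break;       // converged
        win.x = nx;
        win.y = ny;
    }
    return win;
}
```

Starting with a 7x7 window that only partly overlaps a 3x3 blob centered at (12, 10), the window first moves toward the visible part of the blob and then settles with its center on the blob center.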

int main(int argc, char** argv) {

Mat model = imread("D:/projects/opencv_tutorial/data/images/sample.png");

Mat src = imread("C:/Users/00072300/source/repos/opencv_samples/opencv_samples/resources/test.png", IMREAD_GRAYSCALE);

if (src.empty()||model.empty()) {

printf("could not find image file");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

VideoCapture capture("D:/images/video/balltest.mp4");

if (!capture.isOpened()) {

printf("could not open the camera...\n");

return -1;

}

Mat frame, hsv, hue, mask, hist, backproj;

capture.read(frame);

bool init = true;

Rect trackWindow;

int hsize = 16;

float hranges[] = { 0,180 };

const float* ranges = hranges;

Rect selection = selectROI("MeanShift Demo", frame, true, false);//select the region of interest in the first frame

while (true) {

bool ret = capture.read(frame);

if (!ret) break;

cvtColor(frame, hsv, COLOR_BGR2HSV);//convert the new frame to HSV

inRange(hsv, Scalar(26, 43, 46), Scalar(34, 255, 255), mask);//pick out the target by color with inRange

int ch[] = { 0,0 };

hue.create(hsv.size(), hsv.depth());//allocate the matrix with Mat::create

mixChannels(&hsv, 1, &hue, 1, ch, 1);

if (init) {

Mat roi(hue, selection), maskroi(mask, selection);

calcHist(&roi, 1, 0, maskroi, hist, 1, &hsize, &ranges);//histogram of the region of interest

normalize(hist, hist, 0, 255, NORM_MINMAX);

trackWindow = selection;

init = false;

}

calcBackProject(&hue, 1, 0, hist, backproj, &ranges);//back-project the histogram

backproj &= mask;

RotatedRect rrt = CamShift(backproj, trackWindow, TermCriteria(TermCriteria::COUNT | TermCriteria::EPS, 10, 1));//CamShift: mean shift plus an adaptive window size and orientation

ellipse(frame, rrt, Scalar(255, 0, 0), 2, 8);

}

waitKey(0);

destroyAllWindows();

return 0;

}

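Part 2: Feature Extraction

① Definition and representation of image features

*1.CNN/DNN

*2. Traditional feature engineering:

a.HOG,LBP,HAAR

b.SIFT,SURF,ORB,AKAZE

*3. Features are the "genes" of an image: a representation that identifies it.

*4. Features express an image as an abstract vector.

② Overview of image feature extraction

*1. Traditional feature extraction relies on color distribution, corners, edges, gradients, texture, and so on.

*2. Deep-learning feature extraction relies on supervised learning and automatic feature extraction.

*3. Desirable properties of features:

a. Scale invariance: the feature does not change when the scale changes.

b. Rotation invariance: the feature does not change under rotation.

c. Illumination invariance: the feature does not change under different lighting.

d. Translation invariance: the feature does not change when pixels shift position.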

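③ Image feature application 1: corner detection

**$E(u,v)=\sum_{x,y} w(x,y)\,[I(x+u,y+v)-I(x,y)]^2$

**Compute the eigenvalues $\lambda_1,\lambda_2$ of the resulting 2x2 matrix.

*Harris corner detection: $R=\lambda_1\lambda_2-k(\lambda_1+\lambda_2)^2$; the value of R decides whether the point is a corner or an edge.

void cv::cornerHarris()

**Shi-Tomasi corner detection: $R=\min(\lambda_1,\lambda_2)$

void cv::goodFeaturesToTrack()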
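Both responses can be computed directly from the entries of the 2x2 matrix, because the product of the eigenvalues is the determinant and their sum is the trace. The sketch assumes the window sums of gradient products are already available; `Tensor`, `harris_response`, and `shi_tomasi_response` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

// Structure tensor M = [[a, b], [b, c]] summed over a window:
// a = sum(Ix*Ix), b = sum(Ix*Iy), c = sum(Iy*Iy).
struct Tensor { double a, b, c; };

// Harris response: R = det(M) - k * trace(M)^2 = l1*l2 - k*(l1 + l2)^2.
double harris_response(const Tensor& m, double k) {
    double det = m.a * m.c - m.b * m.b;
    double trace = m.a + m.c;
    return det - k * trace * trace;
}

// Shi-Tomasi response: the smaller eigenvalue of M, in closed form.
double shi_tomasi_response(const Tensor& m) {
    double half_trace = 0.5 * (m.a + m.c);
    double root = std::sqrt(0.25 * (m.a - m.c) * (m.a - m.c) + m.b * m.b);
    return half_trace - root;
}
```

A tensor with two equal eigenvalues of 10 gives a positive Harris response (84 for k = 0.04), while an edge-like tensor with eigenvalues 10 and 0 gives a negative one and a Shi-Tomasi response of 0.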

int main(int argc, char** argv) {

Mat src = imread("D:/projects/opencv_tutorial/data/images/sample.png");

Mat gray;

cvtColor(src, gray, COLOR_BGR2GRAY);

vector<Point2f> corners;

goodFeaturesToTrack(gray, corners, 400, 0.015, 10);//400: maximum number of corners; 0.015: quality level; 10: minimum distance, a corner is dropped if a stronger one lies within 10 pixels

for (size_t t = 0; t < corners.size(); t++) {

circle(src, corners[t], 2, Scalar(0, 0, 255), 2, 8, 0);

}

waitKey(0);

destroyAllWindows();

return 0;

}

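④ Image feature application 2: SIFT keypoint detection

1. Harris and Shi-Tomasi corners are rotation invariant but not scale invariant: once the image is scaled up, what used to be a corner may no longer be one.

2. SIFT detects highly distinctive keypoints that are robust to scale, illumination, and similar changes. It is used to extract local features: corners, edge points, bright spots in dark regions, and so on.

3. Steps:

a. Scale-space extrema detection.

Build Gaussian pyramids at different scales -> take the difference of adjacent Gaussian levels (DoG) -> find extrema across the current level and the levels above and below it.

b. Keypoint localization.

Refine each extremum by fitting with derivatives -> discard low-contrast and low-response candidates.

c. Orientation assignment.

Compute gradient magnitude and angle -> apply weighting -> build an orientation histogram of gradients -> take the peaks as the keypoint's main and auxiliary orientations.

d. Descriptor generation.

1. Compute the gradient orientation and magnitude around the keypoint.

2. Divide the keypoint neighborhood into 4×4 regions.

3. Build an orientation histogram of gradients for each region, which gives an 8-dimensional vector.

4. The final descriptor has 4×4×8 = 128 dimensions.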
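To make the 4×4×8 = 128 layout concrete, here is a deliberately simplified sketch in plain C++. It only shows how the regions and orientation bins map onto the 128 slots; it leaves out the Gaussian weighting, interpolation, rotation to the main orientation, and normalization that real SIFT performs. `Gradient` and `describe_patch` are hypothetical names for this illustration.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Gradient { float dx, dy; };

constexpr int kGrid = 4;  // 4 x 4 regions around the keypoint
constexpr int kBins = 8;  // 8 orientation bins per region
constexpr int kDescriptorSize = kGrid * kGrid * kBins;  // 128

// patch: row-major gradients of a square patch whose side is a multiple of 4.
std::array<float, kDescriptorSize> describe_patch(const std::vector<Gradient>& patch, int side) {
    assert(side > 0 && side % kGrid == 0);
    assert(patch.size() == static_cast<std::size_t>(side) * side);
    std::array<float, kDescriptorSize> desc{};  // all bins start at zero
    const float two_pi = 6.28318530718f;
    const int cell = side / kGrid;  // pixels per region along one axis
    for (int y = 0; y < side; ++y) {
        for (int x = 0; x < side; ++x) {
            const Gradient& g = patch[static_cast<std::size_t>(y) * side + x];
            float mag = std::sqrt(g.dx * g.dx + g.dy * g.dy);
            float angle = std::atan2(g.dy, g.dx);  // in (-pi, pi]
            if (angle < 0.0f) angle += two_pi;     // shift to [0, 2*pi)
            int bin = static_cast<int>(angle / two_pi * kBins) % kBins;
            int region = (y / cell) * kGrid + (x / cell);
            desc[region * kBins + bin] += mag;  // magnitude-weighted vote
        }
    }
    return desc;
}
```

Each of the 16 regions contributes 8 consecutive values, so region `r` occupies indices `r * 8` to `r * 8 + 7` of the descriptor.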

int main(int argc, char** argv) {

Mat src = imread("D:/projects/opencv_tutorial/data/images/sample.png");

auto sift = SIFT::create(500);

vector<KeyPoint> kypts;

sift->detect(src, kypts);

Mat result;

drawKeypoints(src, kypts, result, Scalar::all(-1), DrawMatchesFlags::DEFAULT);

for (int i = 0; i < kypts.size(); i++) {

std::cout << "pt:" << kypts[i].pt << " angle:" << kypts[i].angle << " size: " << kypts[i].size << std::endl;

}

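Mat desc_sift;

sift->compute(src, kypts, desc_sift);// compute the descriptor matrix (one 128-dimensional row per keypoint)

std::cout << desc_sift.rows << " x " << desc_sift.cols << std::endl;

imshow("SIFT keypoints", result);// display the drawn keypoints

waitKey(0);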

destroyAllWindows();

return 0;

}

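④ Image feature application 2: ORB keypoint detection

1. Its main advantage is speed.

2. Keypoints are detected with FAST.

3. FAST keypoints:

① They are a kind of corner, found where the local gray level changes sharply.

② Detection is based on pixel intensity differences.

First: pick a candidate pixel and read its intensity.

Next: set an intensity threshold.

Then: take the 16 pixels on a circle around the candidate and check whether N contiguous pixels are all brighter than the candidate's intensity plus the threshold (or all darker than its intensity minus the threshold).

③ FAST keypoints are neither scale invariant nor rotation invariant.

4. ORB obtains scale invariance with an image pyramid.

5. ORB computes the intensity centroid of the keypoint's neighborhood; the direction from the keypoint to the centroid is the feature orientation.

6. BRIEF descriptor:

First: pick point pairs p and q around the keypoint.

Next: compare the intensities of p and q; the bit is 1 if p is greater and 0 otherwise.

Finally: sample 256 point pairs from a Gaussian distribution to obtain a 256-bit binary descriptor.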
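The BRIEF steps above boil down to one intensity comparison per point pair. The following is a minimal sketch in plain C++, assuming the sampling pairs are already given (the Gaussian sampling and ORB's rotation of the pairs are omitted). `PointPair`, `brief_describe`, and `hamming_distance` are hypothetical names, not OpenCV functions.

```cpp
#include <bitset>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct PointPair { int px, py, qx, qy; };  // pixel coordinates of p and q inside the patch

constexpr std::size_t kBits = 256;
using Descriptor = std::bitset<kBits>;

// patch: row-major grayscale patch with the given width; pairs: at most 256 sampling pairs.
Descriptor brief_describe(const std::vector<std::uint8_t>& patch, int width,
                          const std::vector<PointPair>& pairs) {
    assert(pairs.size() <= kBits);
    Descriptor d;
    for (std::size_t i = 0; i < pairs.size(); ++i) {
        const PointPair& pr = pairs[i];
        std::uint8_t p = patch[pr.py * width + pr.px];
        std::uint8_t q = patch[pr.qy * width + pr.qx];
        d[i] = p > q;  // bit i is 1 when I(p) > I(q), otherwise 0
    }
    return d;
}

// Number of differing bits; this is the distance used to compare binary descriptors.
std::size_t hamming_distance(const Descriptor& a, const Descriptor& b) {
    return (a ^ b).count();
}
```

Because the descriptor is just a bit string, comparing two keypoints costs one XOR and a bit count, which is a large part of why ORB is fast.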

int main(int argc, char** argv) {

Mat src = imread("D:/projects/opencv_tutorial/data/images/sample.png");

auto orb = ORB::create(500);

vector<KeyPoint> kypts;

orb->detect(src, kypts);

Mat result;

drawKeypoints(src, kypts, result, Scalar::all(-1), DrawMatchesFlags::DEFAULT);

for (int i = 0; i < kypts.size(); i++) {

std::cout << "pt:" << kypts[i].pt << " angle:" << kypts[i].angle << " size: " << kypts[i].size << std::endl;

}

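Mat desc_orb;

orb->compute(src, kypts, desc_orb);// compute the descriptor matrix (one 32-byte row per keypoint)

std::cout << desc_orb.rows << " x " << desc_orb.cols << std::endl;

imshow("ORB keypoints", result);// display the drawn keypoints

waitKey(0);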

destroyAllWindows();

return 0;

}

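⑤ Image feature application 3: HOG feature detection

1. Convert to grayscale -> gamma correction.

2. Compute the image gradients.

3. Divide the image into 8×8-pixel cells.

4. Compute an orientation histogram of gradients for each cell.

5. Normalize the results within 16×16-pixel blocks to compensate for illumination changes.

6. Concatenate the cell vectors inside a block to obtain that block's HOG feature.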
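A quick way to sanity-check the cell and block layout above is to compute the length of the resulting feature vector. The sketch below assumes a 64×128 detection window, a block stride of one cell (8 pixels), and 9 orientation bins; none of these three values appear in the notes, they are the usual defaults of OpenCV's `HOGDescriptor`. `HogLayout` and `hog_descriptor_length` are hypothetical names.

```cpp
#include <cassert>

struct HogLayout {
    int win_w, win_h;  // detection window size in pixels (assumed 64 x 128)
    int cell;          // cell side in pixels (8 in the steps above)
    int block;         // block side in pixels (16 in the steps above)
    int stride;        // block stride in pixels (assumed: one cell)
    int bins;          // orientation bins per cell (assumed: 9)
};

// Number of floats in the concatenated HOG feature vector for one window.
int hog_descriptor_length(const HogLayout& l) {
    assert(l.block % l.cell == 0);
    assert((l.win_w - l.block) % l.stride == 0);
    assert((l.win_h - l.block) % l.stride == 0);
    int blocks_x = (l.win_w - l.block) / l.stride + 1;
    int blocks_y = (l.win_h - l.block) / l.stride + 1;
    int cells_per_block = (l.block / l.cell) * (l.block / l.cell);
    return blocks_x * blocks_y * cells_per_block * l.bins;
}
```

With these assumptions the window holds 7 × 15 overlapping blocks of 4 cells each, so the vector has 7 × 15 × 4 × 9 = 3780 values.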

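⑥ Image feature application 3: feature matching algorithms

1. Image matching uses an algorithm to find corresponding points in two or more images.

2. Template matching (matchTemplate): slide the template over the source image, compute a matching score at every position, and pick the position with the best score.

3. Shape matching (matchShapes): compute the Hu moments of the template contour and compare them with the Hu moments of the contours in the source image; pick the most similar contour.

4. Feature matching:

① Find the correspondence between the feature descriptor matrices of two images.

② BFMatcher: brute-force search that compares every query descriptor with every train descriptor.

③ FLANN matching: a fast approximate nearest-neighbor search library (KD-trees and similar index structures).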
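Brute-force matching is easy to sketch without OpenCV: for every query descriptor, scan all train descriptors and keep the closest one. The example below assumes 256-bit binary descriptors compared with Hamming distance (as with ORB); `Match` and `brute_force_match` are hypothetical names that loosely mirror `DMatch` and `BFMatcher::match`, not the library's actual implementation.

```cpp
#include <bitset>
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

using Descriptor = std::bitset<256>;

struct Match {
    std::size_t query_idx;  // index into the query descriptors
    std::size_t train_idx;  // index of the closest train descriptor
    std::size_t distance;   // Hamming distance between the two
};

// For each query descriptor, return its nearest train descriptor (no cross-check).
std::vector<Match> brute_force_match(const std::vector<Descriptor>& query,
                                     const std::vector<Descriptor>& train) {
    std::vector<Match> matches;
    if (train.empty()) return matches;
    for (std::size_t qi = 0; qi < query.size(); ++qi) {
        Match best{qi, 0, std::numeric_limits<std::size_t>::max()};
        for (std::size_t ti = 0; ti < train.size(); ++ti) {
            std::size_t d = (query[qi] ^ train[ti]).count();
            if (d < best.distance) best = Match{qi, ti, d};
        }
        matches.push_back(best);
    }
    return matches;
}
```

The cost grows with the product of the two descriptor counts, which is why index-based approximate search such as FLANN becomes attractive for large sets.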

int main(int argc, char** argv) {

Mat src = imread("D:/projects/opencv_tutorial/data/images/sample.png");

Mat book = imread("book.jpg");// first image

Mat book_on_desk = imread("book_on_desk.jpg");// second image

auto orb = ORB::create(500);

vector<KeyPoint> kypts_book;

vector<KeyPoint> kypts_book_on_desk;// keypoints

Mat desc_book, desc_book_on_desk;// descriptors

// detect keypoints and compute descriptors for the first image

orb->detectAndCompute(book, Mat(), kypts_book, desc_book);

// detect keypoints and compute descriptors for the second image

orb->detectAndCompute(book_on_desk, Mat(), kypts_book_on_desk, desc_book_on_desk);

Mat result;

auto bf_matcher = BFMatcher::create(NORM_HAMMING, false);// brute-force matcher; Hamming distance suits ORB's binary descriptors

vector<DMatch> matches;// holds the matching results

bf_matcher->match(desc_book, desc_book_on_desk, matches);

// draw the matches

drawMatches(book, kypts_book, book_on_desk, kypts_book_on_desk, matches, result);

imshow("matches", result);// display the drawn matches

waitKey(0);

destroyAllWindows();

return 0;

}

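⑦ Image feature application 4: feature detection -> feature matching -> homography matrix -> perspective transform

1. Homography and perspective transforms:

① A perspective transform projects one plane onto another plane through a projection matrix.

② The center of projection, the image point, and the object point are collinear.

③ If the projection plane is rotated about the perspective axis by some angle, the projective relationship still holds.

④ findHomography and getPerspectiveTransform both return a 3×3 matrix but compute it differently: getPerspectiveTransform solves it from exactly four point pairs, while findHomography estimates it from four or more pairs and can reject outliers (for example with RANSAC).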
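Whichever function produces the 3×3 matrix, applying it to a point works the same way: multiply in homogeneous coordinates, then divide by the third component. This is a minimal plain C++ sketch of that step (roughly what `perspectiveTransform` does per point); `Homography`, `Point2`, and `apply_homography` are names invented for the illustration.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Homography = std::array<std::array<double, 3>, 3>;
struct Point2 { double x, y; };

// Map (x, y) through H: [xs, ys, w]^T = H * [x, y, 1]^T, result = (xs / w, ys / w).
Point2 apply_homography(const Homography& h, Point2 p) {
    double xs = h[0][0] * p.x + h[0][1] * p.y + h[0][2];
    double ys = h[1][0] * p.x + h[1][1] * p.y + h[1][2];
    double w  = h[2][0] * p.x + h[2][1] * p.y + h[2][2];
    assert(std::fabs(w) > 1e-12);  // w == 0 would map the point to infinity
    return { xs / w, ys / w };
}
```

When the last row is (0, 0, 1) the division does nothing and the transform is affine; a non-trivial last row is what produces the perspective foreshortening.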

int main(int argc, char** argv) {

Mat src = imread("D:/projects/opencv_tutorial/data/images/sample.png");

Mat book = imread("book.jpg");// template image of the book

Mat book_on_desk = imread("book_on_desk.jpg");// the book lying on a desk

auto orb = ORB::create(500);

vector<KeyPoint> kypts_book;

vector<KeyPoint> kypts_book_on_desk;

Mat desc_book, desc_book_on_desk;

orb->detectAndCompute(book, Mat(), kypts_book, desc_book);

orb->detectAndCompute(book_on_desk, Mat(), kypts_book_on_desk, desc_book_on_desk);

Mat result;

auto bf_matcher = BFMatcher::create(NORM_HAMMING, false);

vector<DMatch> matches;

bf_matcher->match(desc_book, desc_book_on_desk, matches);

// keep the best 15% of the matches

float good_rate = 0.15f;

int num_good_matches = matches.size() * good_rate;

sort(matches.begin(), matches.end());// DMatch::operator< orders matches by ascending distance

matches.erase(matches.begin() + num_good_matches, matches.end());// erase everything after the best 15%

// draw the remaining matches

drawMatches(book, kypts_book, book_on_desk, kypts_book_on_desk, matches, result);

vector<Point2f> obj_pts;

vector<Point2f> scene_pts;

for (size_t t = 0; t < matches.size(); t++) {

obj_pts.push_back(kypts_book[matches[t].queryIdx].pt);

scene_pts.push_back(kypts_book_on_desk[matches[t].trainIdx].pt);

}

// estimate the homography matrix

Mat h = findHomography(obj_pts, scene_pts, RANSAC);

vector<Point2f> srcPts;

srcPts.push_back(Point2f(0, 0));

srcPts.push_back(Point2f(book.cols, 0));

srcPts.push_back(Point2f(book.cols, book.rows));

srcPts.push_back(Point2f(0, book.rows));

vector<Point2f> dstPts(4);

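perspectiveTransform(srcPts, dstPts, h);// map the template's four corners into the scene image

imshow("matches", result);// display the filtered matches

waitKey(0);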

destroyAllWindows();

return 0;

}

⑧ Image feature application 5: document alignment

int main(int argc, char** argv) {

Mat ref_img = imread("D:/images/form.png");// reference form template

Mat img = imread("D://images/form_in_doc.jpg");// photographed form on the desk, to be aligned

auto orb = ORB::create(500);

vector<KeyPoint> kypts_ref;

vector<KeyPoint> kypts_img;

Mat desc_book, desc_book_on_desk;

orb->detectAndCompute(ref_img, Mat(), kypts_ref, desc_book);// keypoints and descriptors of the reference form

orb->detectAndCompute(img, Mat(), kypts_img, desc_book_on_desk);// keypoints and descriptors of the photographed form

Mat result;

auto bf_matcher = BFMatcher::create(NORM_HAMMING, false);

vector<DMatch> matches;

bf_matcher->match(desc_book_on_desk, desc_book, matches);

float good_rate = 0.15f;

int num_good_matches = matches.size() * good_rate;

sort(matches.begin(), matches.end());

matches.erase(matches.begin() + num_good_matches, matches.end());

drawMatches(img, kypts_img, ref_img, kypts_ref, matches, result);// query image (img) goes first, matching the argument order of match()

vector<Point2f> points1, points2;

// collect the corresponding points from the matches

for (size_t i = 0; i < matches.size(); i++) {

points1.push_back(kypts_img[matches[i].queryIdx].pt);

points2.push_back(kypts_ref[matches[i].trainIdx].pt);

}

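// estimate the homography from the matched point pairs

Mat h = findHomography(points1, points2, RANSAC);

Mat aligned_doc;

// warp the photographed form onto the plane of the reference form

warpPerspective(img, aligned_doc, h, ref_img.size());

imshow("aligned", aligned_doc);// display the aligned document

waitKey(0);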

destroyAllWindows();

return 0;

}

⑨ Image feature application 6: image stitching

void linspace(Mat& image, float begin, float finish, int number, Mat& mask);

void generate_mask(Mat& img, Mat& mask);

int main(int argc, char** argv) {

Mat left = imread("D://images/q11.jpg");

Mat right = imread("D://images/q22.jpg");

if (left.empty() || right.empty()) {

printf("could not load images...\n");

return -1;

}

// detect keypoints and compute descriptors

vector<KeyPoint> keypoints_right, keypoints_left;

Mat descriptors_right, descriptors_left;

auto detector = AKAZE::create();

detector->detectAndCompute(left, Mat(), keypoints_left, descriptors_left);

detector->detectAndCompute(right, Mat(), keypoints_right, descriptors_right);

// match the descriptors

auto matcher = DescriptorMatcher::create(DescriptorMatcher::BRUTEFORCE_HAMMING);// AKAZE's default descriptor is binary, so compare with Hamming distance

vector<vector<DMatch>> knn_matches;

matcher->knnMatch(descriptors_left, descriptors_right, knn_matches, 2);// the two nearest neighbors of each query descriptor

const float ratio_thresh = 0.7f;

vector<DMatch> good_matches;

for (size_t i = 0; i < knn_matches.size(); i++) {

// ratio test: keep the best match only if its distance is below 0.7x the second best

if (knn_matches[i].size() >= 2 && knn_matches[i][0].distance < ratio_thresh * knn_matches[i][1].distance) {

good_matches.push_back(knn_matches[i][0]);

}

}

Mat dst;

drawMatches(left, keypoints_left, right, keypoints_right, good_matches, dst);

// split the matched points into a left point set and a right point set

vector<Point2f> left_pts;

vector<Point2f> right_pts;

for (size_t i = 0; i < good_matches.size(); i++) {

left_pts.push_back(keypoints_left[good_matches[i].queryIdx].pt);

right_pts.push_back(keypoints_right[good_matches[i].trainIdx].pt);

}

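// estimate the homography that maps the right image into the left image's frame

Mat H = findHomography(right_pts, left_pts, RANSAC);

// size of the panorama

int h = max(left.rows, right.rows);

int w = left.cols + right.cols;

Mat panorama_01 = Mat::zeros(Size(w, h), CV_8UC3);// width: sum of both widths; height: the larger of the two heights

Rect roi;

roi.x = 0;

roi.y = 0;

roi.width = left.cols;

roi.height = left.rows;

// copy the left image into the panorama

left.copyTo(panorama_01(roi));

// apply the perspective transform to the right image

Mat panorama_02;

warpPerspective(right, panorama_02, H, Size(w, h));

Mat mask = Mat::zeros(Size(w, h), CV_8UC1);

generate_mask(panorama_02, mask);// mask is set to 255 wherever all three channels of panorama_02 are 0

// so mask marks the area that has no image content after the right image is warped into the panorama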

Mat mask1 = Mat::ones(Size(w, h), CV_32FC1);

Mat mask2 = Mat::ones(Size(w, h), CV_32FC1);

linspace(mask1, 1, 0, left.cols, mask);

linspace(mask2, 0, 1, left.cols, mask);

Mat m1;

vector<Mat> mv;

mv.push_back(mask1);

mv.push_back(mask1);

mv.push_back(mask1);

merge(mv, m1);

panorama_01.convertTo(panorama_01, CV_32F);

multiply(panorama_01, m1, panorama_01);

// blend weights for the right image

mv.clear();

mv.push_back(mask2);

mv.push_back(mask2);

mv.push_back(mask2);

Mat m2;

merge(mv, m2);

panorama_02.convertTo(panorama_02, CV_32F);

multiply(panorama_02, m2, panorama_02);

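// merge the two weighted images into the panorama

Mat panorama;

add(panorama_01, panorama_02, panorama);

panorama.convertTo(panorama, CV_8U);

imshow("panorama", panorama);// display the stitched result

waitKey(0);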

destroyAllWindows();

return 0;

}

void linspace(Mat& image, float begin, float finish, int w1, Mat& mask) {

int offsetx = 0;

float interval = 0;

float delta = 0;

for (int i = 0; i < image.rows; i++) {

// for each row

offsetx = 0;

interval = 0;

delta = 0;

for (int j = 0; j < image.cols; j++) {

// for each pixel in the row

int pv = mask.at<uchar>(i, j);

if (pv == 0 && offsetx == 0) {

offsetx = j;

delta = w1 - offsetx;

interval = (finish - begin) / (delta - 1);

image.at<float>(i, j) = begin + (j - offsetx) * interval;

}

else if (pv == 0 && offsetx > 0 && (j - offsetx) < delta) {

image.at<float>(i, j) = begin + (j - offsetx) * interval;

}

}

}

}

void generate_mask(Mat& img, Mat& mask) {

int w = img.cols;

int h = img.rows;

for (int row = 0; row < h; row++) {

for (int col = 0; col < w; col++) {

Vec3b p = img.at<Vec3b>(row, col);

int b = p[0];

int g = p[1];

int r = p[2];

if (b == g && g == r && r == 0) {

mask.at<uchar>(row, col) = 255;

}

}

}

imwrite("D:/mask.png", mask);

}
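The linspace helper above fills the overlap with weights that fall linearly from 1 to 0 for the left image and rise from 0 to 1 for the right image, so the two weights always sum to 1. The following is a stripped-down sketch of that blending idea on a single row, in plain C++ and without the mask handling; `linear_ramp` and `blend_row` are hypothetical helpers, not part of the code above.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Evenly spaced weights from `begin` to `finish` over `width` columns.
std::vector<float> linear_ramp(float begin, float finish, int width) {
    assert(width >= 2);  // avoids dividing by zero for a one-column overlap
    std::vector<float> w(width);
    float step = (finish - begin) / static_cast<float>(width - 1);
    for (int j = 0; j < width; ++j) w[j] = begin + j * step;
    return w;
}

// Blend one row of the overlap: out = w * left + (1 - w) * right, with w going 1 -> 0.
std::vector<float> blend_row(const std::vector<float>& left, const std::vector<float>& right) {
    assert(left.size() == right.size() && left.size() >= 2);
    std::vector<float> w = linear_ramp(1.0f, 0.0f, static_cast<int>(left.size()));
    std::vector<float> out(left.size());
    for (std::size_t j = 0; j < left.size(); ++j) {
        out[j] = w[j] * left[j] + (1.0f - w[j]) * right[j];
    }
    return out;
}
```

At the left edge of the overlap the output equals the left image, at the right edge it equals the right image, and in between the seam fades gradually instead of showing a hard line.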

Part 3: Case Studies

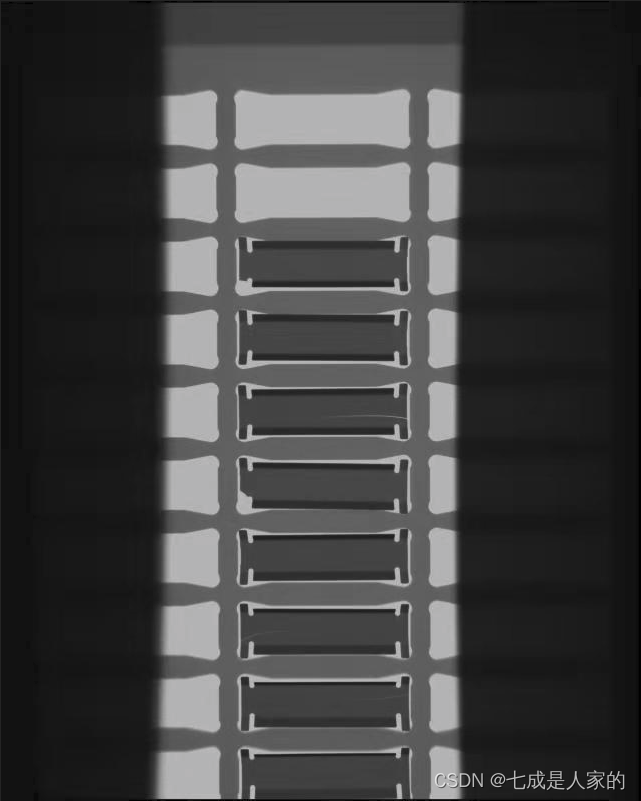

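① Blade defect detection

1. Project background: find the defective blades in a row of blades.

2. Algorithm idea

Grayscale -> binarize -> opening to remove burrs -> find contours -> filter contours by area and height -> sort the remaining contours by y -> crop the binary image under one contour as the template ->

loop over the bounding boxes -> subtract each box from the template to get a mask -> opening on the mask to remove small blemishes -> binarize the mask -> count the white pixels in the mask.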
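The core of the pipeline above is the "template minus box, then count white pixels" step. Here is a minimal plain C++ sketch of just that step on flat binary buffers, assuming both images already have the same size; the opening and the contour-based filtering are left out. `Binary`, `missing_pixels`, `count_white`, and `looks_defective` are hypothetical names, and the threshold of 50 white pixels follows the `count > 50` check in the code below.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Binary = std::vector<std::uint8_t>;  // row-major binary image, values 0 or 255

// Saturating tpl - roi: 255 only where the template has foreground and the ROI does not.
Binary missing_pixels(const Binary& tpl, const Binary& roi) {
    assert(tpl.size() == roi.size());
    Binary diff(tpl.size());
    for (std::size_t i = 0; i < tpl.size(); ++i) {
        diff[i] = tpl[i] > roi[i] ? static_cast<std::uint8_t>(tpl[i] - roi[i]) : 0;
    }
    return diff;
}

// Count the pixels equal to 255.
std::size_t count_white(const Binary& img) {
    std::size_t count = 0;
    for (std::uint8_t v : img) {
        if (v == 255) ++count;
    }
    return count;
}

// A blade is flagged when more than `min_white` template pixels are missing from it.
bool looks_defective(const Binary& tpl, const Binary& roi, std::size_t min_white = 50) {
    return count_white(missing_pixels(tpl, roi)) > min_white;
}
```

Because the subtraction saturates at 0, extra foreground in the box is ignored; only material that the template has and the box lacks counts as a defect.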

Mat tpl;

void sort_box(vector<Rect> &boxes);// sort the bounding boxes

void detect_defect(Mat &binary, vector<Rect> rects, vector<Rect> &defect);

int main(int argc, char** argv) {

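/*

Grayscale -> binarize -> opening -> find contours -> filter contours by area and height -> sort contours by y -> take the second contour as the template -> compare the template with the other contours -> draw the comparison result on the source image

*/

Mat src = imread("D:/images/ce_01.jpg");// input image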

if (src.empty()) {

printf("could not load image file...");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

// convert to grayscale, then binarize with a global (Otsu) threshold

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY_INV | THRESH_OTSU);

imshow("binary", binary);

// define the structuring element, then apply opening to remove burrs and noise

Mat se = getStructuringElement(MORPH_RECT, Size(3, 3), Point(-1, -1));

morphologyEx(binary, binary, MORPH_OPEN, se);

// find contours

vector<vector<Point>> contours;// one point list per contour

vector<Vec4i> hierarchy;// contour hierarchy

vector<Rect> rects;// bounding boxes of the contours that pass the filters

findContours(binary, contours, hierarchy, RETR_LIST, CHAIN_APPROX_SIMPLE);

int height = src.rows;

for (size_t t = 0; t < contours.size(); t++) {

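Rect rect = boundingRect(contours[t]);// upright bounding rectangle of the contour

double area = contourArea(contours[t]);// contour area

if (rect.height > (height / 2)) {// skip contours whose bounding box is taller than half the image

continue;

}

if (area < 150) {// skip contours whose area is too small

continue;

}

rects.push_back(rect);// keep the bounding box of each valid contour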

// rectangle(src, rect, Scalar(0, 0, 255), 2, 8, 0);

// drawContours(src, contours, t, Scalar(0, 0, 255), 2, 8);

}

sort_box(rects);// sort the valid boxes by their y coordinate

tpl = binary(rects[1]);// crop the second box as the template (assumes at least two boxes were found)

// for (int i = 0; i < rects.size(); i++) {

// putText(src, format("%d", i), rects[i].tl(), FONT_HERSHEY_PLAIN, 1.0, Scalar(0, 255, 0), 1, 8);

// }

vector<Rect> defects;

detect_defect(binary, rects, defects);// compare every box with the template and collect the defective ones in defects

for (int i = 0; i < defects.size(); i++) {

rectangle(src, defects[i], Scalar(0, 0, 255), 2, 8, 0);

putText(src, "bad", defects[i].tl(), FONT_HERSHEY_PLAIN, 1.0, Scalar(0, 255, 0), 1, 8);

}

imshow("detect result", src);

imwrite("D:/detection_result.png", src);

waitKey(0);

return 0;

}

void sort_box(vector<Rect> &boxes) {// sort the boxes by ascending y

int size = boxes.size();

for (int i = 0; i < size - 1; i++) {// compare each Rect with the ones after it

for (int j = i; j < size; j++) {

int y = boxes[j].y;

if (y < boxes[i].y) {// a later box has a smaller y: swap the two

Rect temp = boxes[i];

boxes[i] = boxes[j];

boxes[j] = temp;

}

}

}

}

void detect_defect(Mat &binary, vector<Rect> rects, vector<Rect> &defect) {

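/*

Loop over the boxes -> subtract each box from the template to get a mask -> opening to remove tiny blemishes -> binarize the mask -> count the white pixels in the mask

*/

int h = tpl.rows;// template height

int w = tpl.cols;// template width

int size = rects.size();// number of boxes

for (int i = 0; i < size; i++) {

// build the diff

Mat roi = binary(rects[i]);

resize(roi, roi, tpl.size());// resize to the same size as the template

Mat mask;

subtract(tpl, roi, mask);// template minus the region under test leaves the defect

// remove tiny blemishes, then binarize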

Mat se = getStructuringElement(MORPH_RECT, Size(3, 3), Point(-1, -1));

morphologyEx(mask, mask, MORPH_OPEN, se);

threshold(mask, mask, 0, 255, THRESH_BINARY);

imshow("mask", mask);

waitKey(0);

// look for defects in the diff

// count the white pixels

int count = 0;

for (int row = 0; row < h; row++) {

for (int col = 0; col < w; col++) {

int pv = mask.at<uchar>(row, col);

if (pv == 255) {

count++;

}

}

}

// pad the mask by one pixel on every side

int mh = mask.rows + 2;

int mw = mask.cols + 2;

Mat m1 = Mat::zeros(Size(mw, mh), mask.type());

Rect mroi;

mroi.x = 1;

mroi.y = 1;

mroi.height = mask.rows;

mroi.width = mask.cols;

mask.copyTo(m1(mroi));

// contour analysis

vector<vector<Point>> contours;

vector<Vec4i> hierarchy;

findContours(m1, contours, hierarchy, RETR_LIST, CHAIN_APPROX_SIMPLE);

bool find = false;

for (size_t t = 0; t < contours.size(); t++) {

Rect rect = boundingRect(contours[t]);

float ratio = (float)rect.width / ((float)rect.height);

if (ratio > 4.0 && (rect.y < 5 || (m1.rows - (rect.height + rect.y)) < 10)) {

continue;

}

double area = contourArea(contours[t]);

if (area > 10) {

printf("ratio : %.2f, area : %.2f \n", ratio, area);

find = true;

}

}

if (count > 50 && find) {

printf("count : %d \n", count);

defect.push_back(rects[i]);

}

}

}

② Using histogram-of-oriented-gradients features to detect whether an image contains a water meter

1. Algorithm idea

SVM + HOG
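The notes give only the two ingredients, so the following is a guess at the simplest form of the classification step rather than the author's method: assuming a linear SVM has already been trained on HOG vectors, deciding whether a window contains the target reduces to a dot product plus a bias. `LinearSvm`, `decision_value`, and `contains_target` are hypothetical names, and the linear kernel is an assumption.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct LinearSvm {
    std::vector<float> weights;  // one learned weight per HOG feature (assumed linear kernel)
    float bias;                  // learned offset
};

// Signed score w . x + b; its sign tells which side of the hyperplane x lies on.
float decision_value(const LinearSvm& svm, const std::vector<float>& hog) {
    assert(svm.weights.size() == hog.size());
    float score = svm.bias;
    for (std::size_t i = 0; i < hog.size(); ++i) {
        score += svm.weights[i] * hog[i];
    }
    return score;
}

// Positive score: the window is classified as containing the target.
bool contains_target(const LinearSvm& svm, const std::vector<float>& hog) {
    return decision_value(svm, hog) > 0.0f;
}
```

In practice the feature vector would come from a HOG extractor and the weights from SVM training on labeled positive and negative windows; neither step is shown here.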

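This article covers the use of the OpenCV library for image processing, from basic operations such as matrix operations, histograms, edge detection, and contour extraction to more advanced feature extraction methods such as SIFT, ORB, and HOG. It also discusses image color spaces, optical flow analysis, and image stitching, and explores the use of feature matching and homography matrices in image recognition.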
