1. The problems to solve:

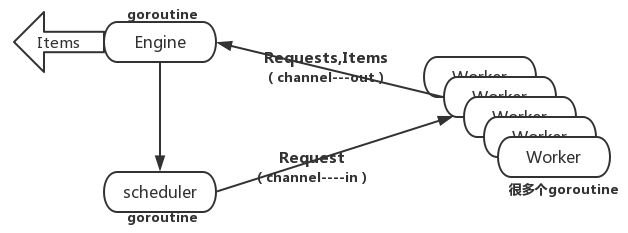

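(1) Sending a request directly to a worker can get stuck, because the send has to wait until a worker is free to receive it. See the architecture diagram.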

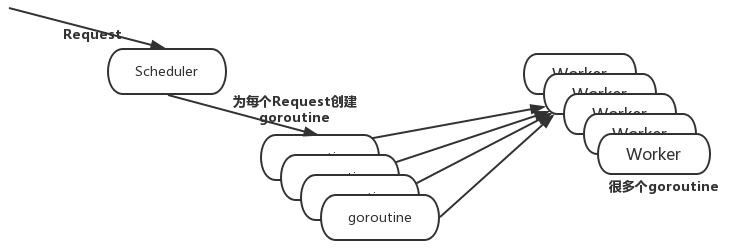

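(2) We also do not want to start a goroutine for every request, because that gives us little control over what runs (see the architecture diagram). We want to be able to control the workers as well.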
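
As a rough illustration of what "controlling the workers" can mean, the sketch below caps concurrency with a fixed number of worker goroutines instead of starting one goroutine per request. The names `runPool`, `workers` and `jobs` are made up for this example and do not come from the crawler, and the short sleep only stands in for real work.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
	"time"
)

// runPool (hypothetical helper) pushes `jobs` tasks through a fixed number of
// worker goroutines and returns the highest number of tasks that were ever
// being processed at the same time.
func runPool(workers, jobs int) int32 {
	in := make(chan int)
	var wg sync.WaitGroup
	var active, peak int32

	for i := 0; i < workers; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for range in {
				n := atomic.AddInt32(&active, 1)
				// Raise the recorded peak if this worker pushed it higher.
				for {
					p := atomic.LoadInt32(&peak)
					if n <= p || atomic.CompareAndSwapInt32(&peak, p, n) {
						break
					}
				}
				time.Sleep(time.Millisecond) // stand-in for real work
				atomic.AddInt32(&active, -1)
			}
		}()
	}

	for j := 0; j < jobs; j++ {
		in <- j // blocks until some worker is free
	}
	close(in)
	wg.Wait()
	return peak
}

func main() {
	// With 3 workers, no more than 3 tasks can ever run at once.
	fmt.Println(runPool(3, 20) <= 3) // true
}
```

The number of goroutines stays fixed no matter how many requests arrive, which is the kind of control a goroutine-per-request design gives up.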

2. Solving the problems above:

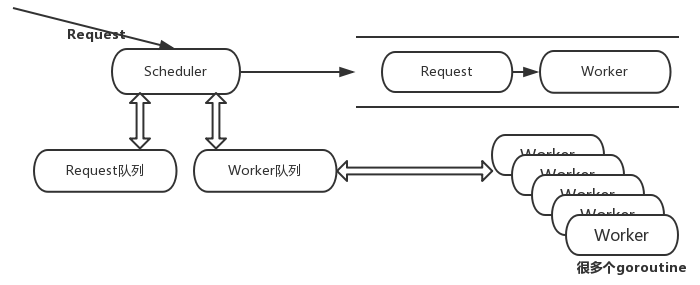

Put the requests and the workers into their own queues, then send the request we choose to the worker we choose, as the architecture diagram shows.
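
The idea can be tried in isolation before looking at the crawler code. The following is a minimal sketch, assuming plain `int` jobs instead of crawler requests; `dispatch`, `squareAll`, `jobs` and `ready` are illustrative names, not part of the original project.

```go
package main

import "fmt"

// dispatch queues incoming jobs and idle workers, and hands the job at the
// head of one queue to the worker at the head of the other.
func dispatch(jobs <-chan int, ready <-chan chan int) {
	var jobQ []int
	var workerQ []chan int
	for {
		var nextJob int
		var nextWorker chan int // stays nil unless both queues are non-empty
		if len(jobQ) > 0 && len(workerQ) > 0 {
			nextJob, nextWorker = jobQ[0], workerQ[0]
		}
		select {
		case j := <-jobs:
			jobQ = append(jobQ, j)
		case w := <-ready:
			workerQ = append(workerQ, w)
		case nextWorker <- nextJob: // never ready while nextWorker is nil
			jobQ, workerQ = jobQ[1:], workerQ[1:]
		}
	}
}

// squareAll sends the jobs 1..n through `workers` workers and returns the
// sum of the squares they compute.
func squareAll(n, workers int) int {
	jobs := make(chan int)
	ready := make(chan chan int)
	out := make(chan int)
	go dispatch(jobs, ready)

	for i := 0; i < workers; i++ {
		in := make(chan int) // each worker owns its in channel
		go func() {
			for {
				ready <- in // announce that this worker is idle
				j := <-in
				out <- j * j
			}
		}()
	}

	go func() {
		for j := 1; j <= n; j++ {
			jobs <- j
		}
	}()

	sum := 0
	for i := 0; i < n; i++ {
		sum += <-out
	}
	return sum
}

func main() {
	fmt.Println(squareAll(10, 3)) // 385
}
```

The dispatcher and worker goroutines in this sketch are never shut down; a long-running program would probably add a way to stop them.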

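3. Code implementation:

Sending requests:

Step 1. Hand every request for the engine over to the scheduler

    // Hand every seed request for the engine over to the scheduler.
    for _, r := range seeds {
        e.Scheduler.Submit(r)
    }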

Step 2. Send the task into the scheduler's requestChan

    // Submit sends a task into the scheduler's requestChan.
    func (s *QueuedScheduler) Submit(r engine.Request) {
        s.requestChan <- r
    }

Step 3. When a request arrives on requestChan, put it in the queue

    case r := <-s.requestChan:
        requestQ = append(requestQ, r) // got a request: enqueue it

Handling requests:

Step 1. Start WorkerCount worker goroutines to handle the requests

    for i := 0; i < e.WorkerCount; i++ {
        // Each worker goroutine has its own in channel; 10 are started in this setup.
        createWorker(out, e.Scheduler)
    }

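Step 2. Each goroutine has its own worker channel and reports it to the scheduler

    in := make(chan Request)
    s.WorkerReady(in)

    // WorkerReady tells the scheduler that the worker owning w is idle.
    func (s *QueuedScheduler) WorkerReady(w chan engine.Request) {
        s.workerChan <- w
    }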

Step 3. Put all the worker channels into a queue

    case w := <-s.workerChan:
        workerQ = append(workerQ, w) // got an idle worker: enqueue it

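Step 4. Send the request we choose to the worker we choose.

    if len(requestQ) > 0 && len(workerQ) > 0 {
        activeWorker = workerQ[0]
        activeRequest = requestQ[0]
    }
    // The send itself happens in the scheduler's select statement:
    // case activeWorker <- activeRequest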

Full code:

scheduler:

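    // The import of the project's engine package is omitted here because
    // its path is not shown in the original.

    type QueuedScheduler struct {
        requestChan chan engine.Request
        // Each worker exposes a chan engine.Request as its interface, so a
        // worker is represented by its channel and every task reaches it
        // through that channel. The channels of all N worker goroutines are
        // passed to the scheduler through workerChan.
        workerChan chan chan engine.Request
    }

    // ConfigureMasterWorkerChan would set up the channel; it is left
    // unimplemented in this scheduler.
    func (s *QueuedScheduler) ConfigureMasterWorkerChan(c chan engine.Request) {
        panic("implement me")
    }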

    // Submit sends a task into the scheduler's requestChan.
    func (s *QueuedScheduler) Submit(r engine.Request) {
        s.requestChan <- r
    }

    // WorkerReady tells the scheduler that the worker owning w is idle.
    func (s *QueuedScheduler) WorkerReady(w chan engine.Request) {
        s.workerChan <- w
    }

    func (s *QueuedScheduler) Run() {
        s.workerChan = make(chan chan engine.Request)
        s.requestChan = make(chan engine.Request)
        go func() {
            var requestQ []engine.Request     // request queue
            var workerQ []chan engine.Request // worker queue; each element is a channel that accepts a request
            for {
                // Both stay at their zero values unless a request and a
                // worker are both waiting. A send on a nil channel is never
                // ready, so the third case below is skipped in that situation.
                var activeRequest engine.Request
                var activeWorker chan engine.Request
                if len(requestQ) > 0 && len(workerQ) > 0 {
                    activeWorker = workerQ[0]
                    activeRequest = requestQ[0]
                }

                select {
                case r := <-s.requestChan:
                    requestQ = append(requestQ, r) // got a request: enqueue it
                case w := <-s.workerChan:
                    workerQ = append(workerQ, w) // got an idle worker: enqueue it
                case activeWorker <- activeRequest:
                    // The request was handed to the worker: dequeue both.
                    workerQ = workerQ[1:]
                    requestQ = requestQ[1:]
                }
            }
        }()
    }
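
The scheduler loop relies on a detail of Go's `select`: a send on a nil channel is never ready, so the `activeWorker <- activeRequest` case only fires after `activeWorker` has been set to a real channel. The small sketch below shows that behaviour on its own; `trySend` is a hypothetical helper written for this illustration.

```go
package main

import "fmt"

// trySend reports whether a send on ch can proceed right now.
func trySend(ch chan int, v int) bool {
	select {
	case ch <- v:
		return true
	default:
		return false
	}
}

func main() {
	var nilChan chan int          // the zero value of a channel type is nil
	buffered := make(chan int, 1) // room for exactly one value

	fmt.Println(trySend(nilChan, 1))  // false: a nil channel is never ready
	fmt.Println(trySend(buffered, 1)) // true: the buffer has room
	fmt.Println(trySend(buffered, 2)) // false: the buffer is full
}
```

In the scheduler there is no `default` case, so instead of returning `false` the loop simply waits on the other two cases until a request and a worker are both queued.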

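engine:

    // This file also needs "fmt", "log" and the project's fetcher package;
    // the fetcher import path is not shown in the original.

    type ConcurrentEngine struct {
        Scheduler   Scheduler
        WorkerCount int
    }

    type Scheduler interface {
        Submit(Request)                         // send a task into the scheduler's channel
        ConfigureMasterWorkerChan(chan Request) // set up the in channel
        WorkerReady(chan Request)               // report that a worker is idle
        Run()                                   // start the scheduling loop
    }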

    func (e *ConcurrentEngine) Run(seeds ...Request) {
        out := make(chan ParseResult)
        e.Scheduler.Run() // start the scheduler, which then waits for incoming tasks

        for i := 0; i < e.WorkerCount; i++ {
            // Each worker goroutine has its own in channel; 10 are started in this setup.
            createWorker(out, e.Scheduler)
        }

        // Hand every seed request for the engine over to the scheduler.
        for _, r := range seeds {
            e.Scheduler.Submit(r)
        }

        itemCount := 0 // item counter
        for {
            result := <-out
            for _, item := range result.Items {
                fmt.Printf("Got item #%d: %v\n", itemCount, item)
                itemCount++
            }
            for _, request := range result.Requests {
                e.Scheduler.Submit(request)
            }
        }
    }

    func createWorker(out chan ParseResult, s Scheduler) {
        in := make(chan Request) // this worker's own in channel
        go func() {
            for {
                // Tell the scheduler this worker is idle, then take the next
                // request to run from its own in channel.
                s.WorkerReady(in)
                request := <-in
                result, err := worker(request)
                if err != nil {
                    continue
                }
                out <- result
            }
        }()
    }

    func worker(r Request) (ParseResult, error) {
        log.Printf("Fetching %s", r.Url)
        // Fetch the data from the network; the request's own parser then parses it.
        body, err := fetcher.Fetcher(r.Url)
        if err != nil {
            log.Printf("Fetcher: error fetching url %s: %v", r.Url, err)
            return ParseResult{}, err
        }
        return r.ParserFunc(body), nil
    }
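
The listing uses `Request` and `ParseResult` without showing their definitions. The sketch below is a guess at their shape, inferred only from how the fields `Url`, `ParserFunc`, `Items` and `Requests` are used above; the `[]byte` body type, `fakeFetch` (a stand-in for `fetcher.Fetcher`) and `echoParser` are assumptions made for this example.

```go
package main

import (
	"errors"
	"fmt"
)

// Request is an assumed definition: a URL plus the parser for its page.
type Request struct {
	Url        string
	ParserFunc func(body []byte) ParseResult
}

// ParseResult is an assumed definition: extracted items plus follow-up requests.
type ParseResult struct {
	Requests []Request
	Items    []interface{}
}

// fakeFetch stands in for the project's fetcher so the sketch runs offline.
func fakeFetch(url string) ([]byte, error) {
	if url == "" {
		return nil, errors.New("empty url")
	}
	return []byte("page at " + url), nil
}

// echoParser is a hypothetical parser that returns the whole body as one item.
func echoParser(body []byte) ParseResult {
	return ParseResult{Items: []interface{}{string(body)}}
}

// worker mirrors the fetch-then-parse flow of the worker function above.
func worker(r Request) (ParseResult, error) {
	body, err := fakeFetch(r.Url)
	if err != nil {
		return ParseResult{}, err
	}
	return r.ParserFunc(body), nil
}

func main() {
	res, err := worker(Request{Url: "http://example.com", ParserFunc: echoParser})
	fmt.Println(res.Items, err) // [page at http://example.com] <nil>

	_, err = worker(Request{ParserFunc: echoParser})
	fmt.Println(err) // empty url
}
```

Carrying the parser inside the request is what lets a single generic worker handle every kind of page.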

Concurrent crawler scheduler design

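This article presents a scheduler design for a concurrent crawler that improves stability and controllability by placing requests and workers in separate queues. It explains how the scheduler distributes requests and avoids the blocking that can occur when requests are sent directly to the workers.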
