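Integrating and Using ELK

Deploying ELK with docker-compose

ELK stands for Elasticsearch, Logstash, and Kibana. Elasticsearch is a search engine, Logstash collects and parses logs, and Kibana is a web-based visualization interface. The docker-compose.yml file used to deploy ELK is as follows: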

version: "3"
services:
  es01:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.7.1
    environment:
      - node.name=es01
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es02,es03
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - ./elasticsearch/data01:/usr/share/elasticsearch/data
    ports:
      - 9200:9200

  es02:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.7.1
    environment:
      - node.name=es02
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es01,es03
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - ./elasticsearch/data02:/usr/share/elasticsearch/data

  es03:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.7.1
    environment:
      - node.name=es03
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es01,es02
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - ./elasticsearch/data03:/usr/share/elasticsearch/data

  logstash:
    image: docker.elastic.co/logstash/logstash:7.7.1
    volumes:
      - ./logstash/logstash.conf:/usr/share/logstash/pipeline/logstash.conf
      - ./logstash/logstash.yml:/usr/share/logstash/config/logstash.yml
    ports:
      - 5044:5044
    depends_on:
      - es01

  kibana:
    image: docker.elastic.co/kibana/kibana:7.7.1
    restart: always
    environment:
      ELASTICSEARCH_HOSTS: http://es01:9200
    volumes:
      - ./kibana/kibana.yml:/usr/share/kibana/config/kibana.yml
    depends_on:
      - es01
    ports:
      - 5601:5601
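Once the stack is up, it helps to confirm that all three Elasticsearch nodes actually joined the cluster. The following is a minimal sketch, not part of the original setup: it assumes es01 is reachable at localhost:9200 (the port published in the compose file) and that security is not enabled. The function names and the expected node count of 3 are illustrative choices.

```python
import json
import urllib.request

# Assumption: es01 is published on localhost:9200 and needs no credentials.
HEALTH_URL = "http://localhost:9200/_cluster/health"


def summarize_health(health: dict, expected_nodes: int = 3) -> str:
    """Turn a _cluster/health response into a one-line verdict."""
    status = health.get("status", "unknown")
    nodes = health.get("number_of_nodes", 0)
    if nodes < expected_nodes:
        return f"only {nodes}/{expected_nodes} nodes joined (status={status})"
    if status == "red":
        return f"all {nodes} nodes joined but status is red"
    return f"cluster ok: {nodes} nodes, status={status}"


def fetch_health(url: str = HEALTH_URL, timeout: float = 5.0) -> dict:
    """GET the cluster health document from Elasticsearch."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)
```

Running `print(summarize_health(fetch_health()))` shortly after `docker-compose up -d` may fail with a connection error at first, since Elasticsearch takes a while to start; retrying after a few seconds is usually enough.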

Editing the mounted files

The three mounted files, logstash.conf, logstash.yml, and kibana.yml, are modified as follows, in that order:

# logstash.conf
input {
  tcp {
    port => 5044
    codec => "json"
  }
}

output {
  elasticsearch {
    action => "index"
    hosts => ["http://es01:9200"]
    index => "logstash-%{project-name}"
  }
  # Print events to stdout; uncomment when debugging
  #stdout {
  #  codec => rubydebug
  #}
}

# logstash.yml
http.host: "0.0.0.0"
xpack.monitoring.elasticsearch.hosts: [ "http://es01:9200" ]

# kibana.yml
server.name: kibana
server.host: 0.0.0.0
elasticsearch.hosts: [ "http://es01:9200" ]
monitoring.ui.container.elasticsearch.enabled: true
i18n.locale: "zh-CN"
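Before wiring up an application, the Logstash pipeline can be exercised by hand. The sketch below is only an illustration under a few assumptions: Logstash is reachable at localhost:5044 (the port published in the compose file), and the helper names `encode_event` and `send_event` are made up for this example. It sends one newline-terminated JSON object, which is the shape the tcp input with a JSON codec expects.

```python
import json
import socket


def encode_event(message: str, project_name: str, level: str = "INFO") -> bytes:
    """Build one newline-terminated JSON event for the Logstash tcp input."""
    event = {
        "message": message,
        "level": level,
        # Consumed by index => "logstash-%{project-name}" in logstash.conf.
        "project-name": project_name,
    }
    return (json.dumps(event, ensure_ascii=False) + "\n").encode("utf-8")


def send_event(payload: bytes, host: str = "localhost", port: int = 5044) -> None:
    """Open a TCP connection to Logstash, write the event, and close."""
    with socket.create_connection((host, port), timeout=5) as conn:
        conn.sendall(payload)
```

For example, `send_event(encode_event("hello from python", "test-project"))` should produce a document in the `logstash-test-project` index, assuming the pipeline above is running. Enabling the commented-out stdout output makes it easier to see whether the event arrived.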

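Directory permissions

The Elasticsearch containers report an error on startup until the mounted data directories are writable. In the directory that contains the three nodes' mounted data directories, grant permissions with chmod -R 777 *. Never run this command in the server's root directory: it can break the server.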

Integrating Spring Boot with Logstash

Add the Maven dependency

<dependency>
    <groupId>net.logstash.logback</groupId>
    <artifactId>logstash-logback-encoder</artifactId>
    <version>6.4</version>
</dependency>

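Modify logback-spring.xml

The application connects directly to Logstash to ship its logs. The dev profile does not use the STASH appender; logs are shipped only under the prod profile.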

<!-- Log shipping to Logstash -->
<appender name="STASH" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
    <destination>IP:PORT</destination>
    <!-- An encoder is required; several implementations are available -->
    <encoder charset="UTF-8" class="net.logstash.logback.encoder.LogstashEncoder">
        <!-- project-name becomes part of the index name, so keep it lowercase and free of spaces -->
        <customFields>{"project-name":"test-project"}</customFields>
    </encoder>
</appender>

<springProfile name="dev">
    <!-- Root log level and appenders -->
    <root level="info">
        <appender-ref ref="CONSOLE"/>
        <appender-ref ref="DEBUG"/>
        <appender-ref ref="INFO"/>
        <appender-ref ref="WARN"/>
        <appender-ref ref="ERROR"/>
    </root>
</springProfile>

<springProfile name="prod">
    <!-- Root log level and appenders -->
    <root level="info">
        <appender-ref ref="CONSOLE"/>
        <appender-ref ref="DEBUG"/>
        <appender-ref ref="INFO"/>
        <appender-ref ref="WARN"/>
        <appender-ref ref="ERROR"/>
        <appender-ref ref="STASH"/>
    </root>
</springProfile>
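The `project-name` custom field set in the encoder is what the Logstash output substitutes into `logstash-%{project-name}`, so its value ends up in the Elasticsearch index name. The sketch below shows that mapping and checks the result against Elasticsearch's index naming rules (lowercase only, and none of a handful of special characters). The function name `index_for` is hypothetical and the check covers only the character rules, not every restriction.

```python
import json

# Characters Elasticsearch rejects in index names (space included).
FORBIDDEN = set('\\/*?"<>| ,#:')


def index_for(custom_fields_json: str, prefix: str = "logstash-") -> str:
    """Resolve the index that logstash-%{project-name} would produce."""
    fields = json.loads(custom_fields_json)
    name = prefix + fields["project-name"]
    if name != name.lower():
        raise ValueError(f"index name must be lowercase: {name!r}")
    bad = FORBIDDEN.intersection(name)
    if bad:
        raise ValueError(f"index name contains forbidden characters: {sorted(bad)}")
    return name
```

For instance, `index_for('{"project-name":"test-project"}')` returns `logstash-test-project`, while a value such as `"My Project"` is rejected. If an event arrives without the field at all, Logstash leaves the `%{project-name}` placeholder unresolved in the index name, which is another reason to set the custom field on every application.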

Kibana UI configuration

Create an index pattern

Select the index created by your project and follow the prompts to finish.

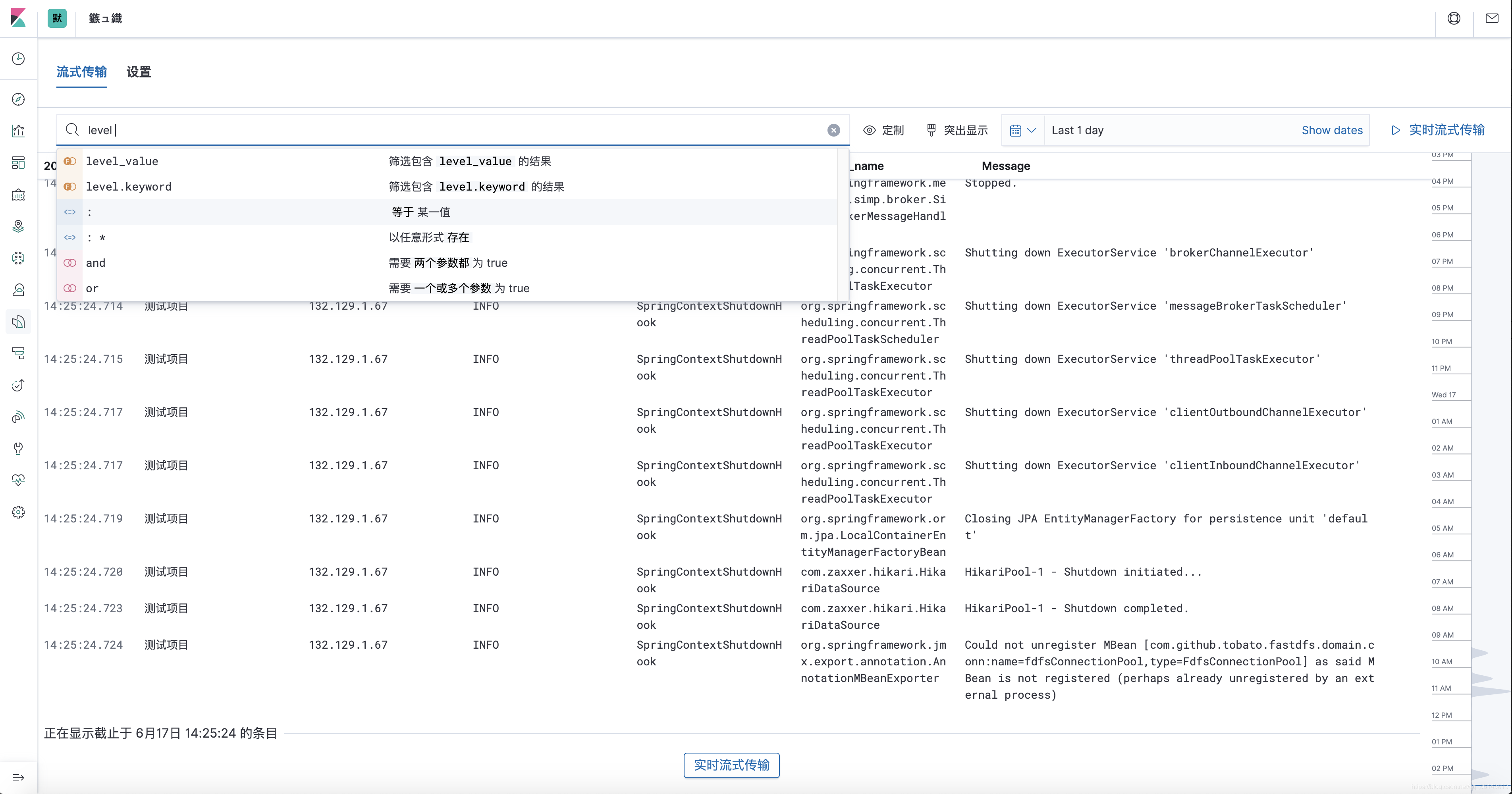

View logs

Once the index pattern exists, the logs can be viewed in Kibana and filtered with search conditions.
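The same kind of conditional search can also be run against Elasticsearch directly, which is handy for scripts. The sketch below assumes the `level` and `message` fields that LogstashEncoder emits, an index pattern of `logstash-*`, and Elasticsearch on localhost:9200 without authentication. Both function names are illustrative.

```python
import json
import urllib.request


def build_log_query(level: str, text: str, size: int = 20) -> dict:
    """Build a _search body: events of one level whose message matches text."""
    return {
        "size": size,
        "sort": [{"@timestamp": {"order": "desc"}}],  # newest first
        "query": {
            "bool": {
                "must": [{"match": {"message": text}}],
                "filter": [{"match": {"level": level}}],
            }
        },
    }


def search_logs(body: dict, url: str = "http://localhost:9200/logstash-*/_search") -> list:
    """POST the query to Elasticsearch and return the matching hits."""
    request = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return json.load(response)["hits"]["hits"]
```

A call such as `search_logs(build_log_query("ERROR", "timeout"))` would return the most recent error events whose message mentions "timeout", assuming such events have been indexed.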

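This article showed how to deploy the ELK stack with docker-compose, including editing the mounted files and setting directory permissions. It also covered how to integrate Spring Boot with Logstash by adding the Maven dependency and configuring log shipping in logback-spring.xml. Finally, it showed how to create an index pattern and view logs in Kibana.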
