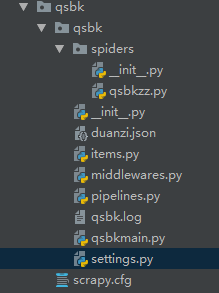

创建糗事百科项目

进入虚拟环境,cd进入创建目录(这一步没写出来),创建项目,进入项目目录,创建爬虫

conda activate Scrapy

scrapy startproject qsbk

cd qsbk

scrapy genspider qsbkzz qiushibaike.com

新建 qsbkmain.py 让我们能在pycharm运行spider

from scrapy import cmdline

#输入命令

cmdline.execute('scrapy crawl qsbkzz'.split())

确认需要爬取内容

import scrapy

class QsbkItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

author = scrapy.Field()

neirong = scrapy.Field()

编写爬虫

# -*- coding: utf-8 -*-

import scrapy

from qsbk.items import QsbkItem

class QsbkzzSpider(scrapy.Spider):

name = 'qsbkzz'

allowed_domains = ['qiushibaike.com']

start_urls = ['https://www.qiushibaike.com/text/page/1/']

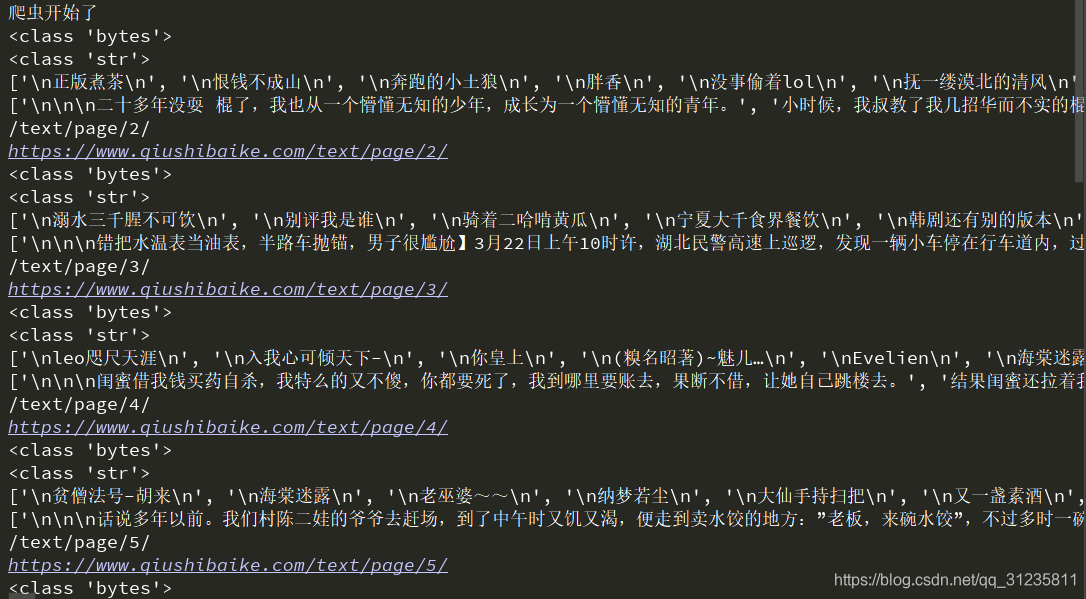

def parse(self, response):

# response.body输出bytes格式网页

# response.text输出为str格式网页

print(type(response.body))

print(type(response.text))

#查找每个内容标签

contents = response.xpath('//div[@id="content-left"]')

#遍历每个查找内容

for content in contents:

# 筛选作者

author = content.xpath('.//h2/text()').extract()

print(author)

# 筛选段子内容

neirong = content.xpath('.//div[@class="content"]/span/text()').extract()

print(neirong)

# 为item赋值

item = QsbkItem(author=author,neirong=neirong)

yield item

# 查询下一页网址,需要拼接

next_url = response.xpath('//ul[@class="pagination"]/li[last()]/a/@href').extract()[0]

if next_url is not None:

next_url = 'https://www.qiushibaike.com'+next_url

print(next_url)

# 使用yield调用函数self.parse解析下一页

yield scrapy.Request(next_url,callback=self.parse)

存储爬取信息为json格式文件

import json

class QsbkPipeline(object):

def __init__(self):

self.fp = open('duanzi.json','w',encoding='utf-8')

def open_spider(self,spider):

print('爬虫开始了')

def process_item(self, item, spider):

item_json = json.dumps(dict(item),ensure_ascii=False)

self.fp.write(item_json+'\n')

return item

def close_spider(self,spider):

self.fp.close()

print('爬虫结束了')

修改settings文件

修改机器人协议

ROBOTSTXT_OBEY = False

等待时间1s

DOWNLOAD_DELAY = 1

构建请求头

DEFAULT_REQUEST_HEADERS = {

'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36'

}

打开pipelines

ITEM_PIPELINES = {

'qsbk.pipelines.QsbkPipeline': 300,

}

开启日志,在文件夹出现日志文件qsbk.json

#开启日志

LOG_FILE = 'qsbk.log'

LOG_LEVEL = 'ERROR'

L

本文介绍如何使用Python Scrapy框架创建一个糗事百科爬虫项目,实现内容抓取并保存为JSON格式。从环境搭建到爬虫编写及数据存储全过程详解。

本文介绍如何使用Python Scrapy框架创建一个糗事百科爬虫项目,实现内容抓取并保存为JSON格式。从环境搭建到爬虫编写及数据存储全过程详解。

1079

1079

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?