1. Download the source code

wget http://archive.cloudera.com/cdh5/cdh/5/hive-1.1.0-cdh5.7.0-src.tar.gz

2. Extract the downloaded source

3. Define a Java class that extends UDF

…

package com.spark.udf.hive;

import org.apache.hadoop.hive.ql.exec.Description;
import org.apache.hadoop.hive.ql.exec.UDF;
import org.apache.hadoop.io.Text;

/**
 * A UDF: sayhello
 */
@Description(name = "sayhello",
    value = "_FUNC_(input_str) - returns Hello : input_str ",
    extended = "Example:\n "
        + " > SELECT _FUNC_('wxk') FROM src LIMIT 1;\n"
        + " 'Hello : wxk'\n")
public class sayhello extends UDF {

    // Hive calls evaluate() once per row; a NULL argument arrives as null.
    public Text evaluate(Text input) {
        System.out.println("sayhello method start......");
        if (input == null) {
            return null; // keep NULL as NULL instead of returning "Hello : null"
        }
        // Matches the format documented in @Description: "Hello : wxk"
        return new Text("Hello : " + input);
    }
}

…
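Before the class is copied into the Hive tree, the greeting logic can be tried without Hive or Hadoop on the classpath. The following is a minimal sketch under that assumption: `SayHelloLogic` and `greet` are hypothetical names that are not part of Hive, and the code works on plain `String` values instead of `Text`. It follows the output format given in the `@Description` text ("Hello : input_str") and returns null for a null input.

```java
// Hypothetical helper, not part of Hive: the greeting logic of sayhello
// expressed on plain Strings so it runs without Hive or Hadoop jars.
final class SayHelloLogic {

    private SayHelloLogic() {}

    // Returns "Hello : <input>", or null for a null input so that a missing
    // value stays missing instead of becoming the text "Hello : null".
    static String greet(String input) {
        if (input == null) {
            return null;
        }
        return "Hello : " + input;
    }

    public static void main(String[] args) {
        System.out.println(greet("wxk"));
        System.out.println(greet(null));
    }
}
```

Inside the real UDF the same check would be applied to the `Text` argument before building the result.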

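4. Copy the custom UDF class into the Hive source tree

Copy the Java class into the /hive-1.1.0-cdh5.7.0/ql/src/java/org/apache/hadoop/hive/ql/udf directory.

Then open the sayhello class and change its package name to org.apache.hadoop.hive.ql.udf, because the file now lives under /org/apache/hadoop/hive/ql/udf.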

5. Register sayhello in the FunctionRegistry Java class

a. Add the import: import org.apache.hadoop.hive.ql.udf.sayhello;

b. In the static block near the top of the file, add system.registerUDF("sayhello", sayhello.class, false);

As follows:

static {

system.registerGenericUDF("concat", GenericUDFConcat.class);

system.registerUDF("sayhello", sayhello.class, false);

system.registerUDF("substr", UDFSubstr.class, false);

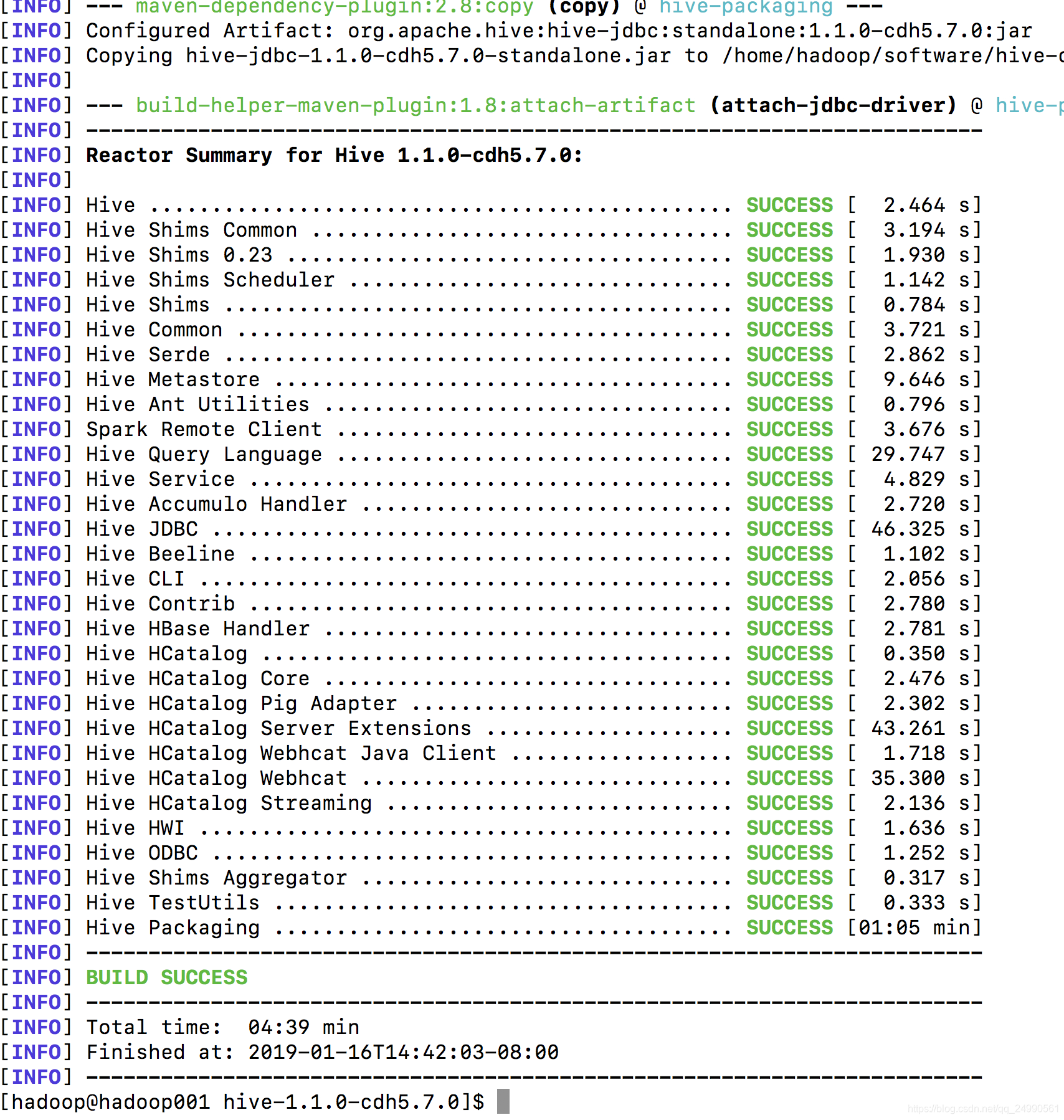

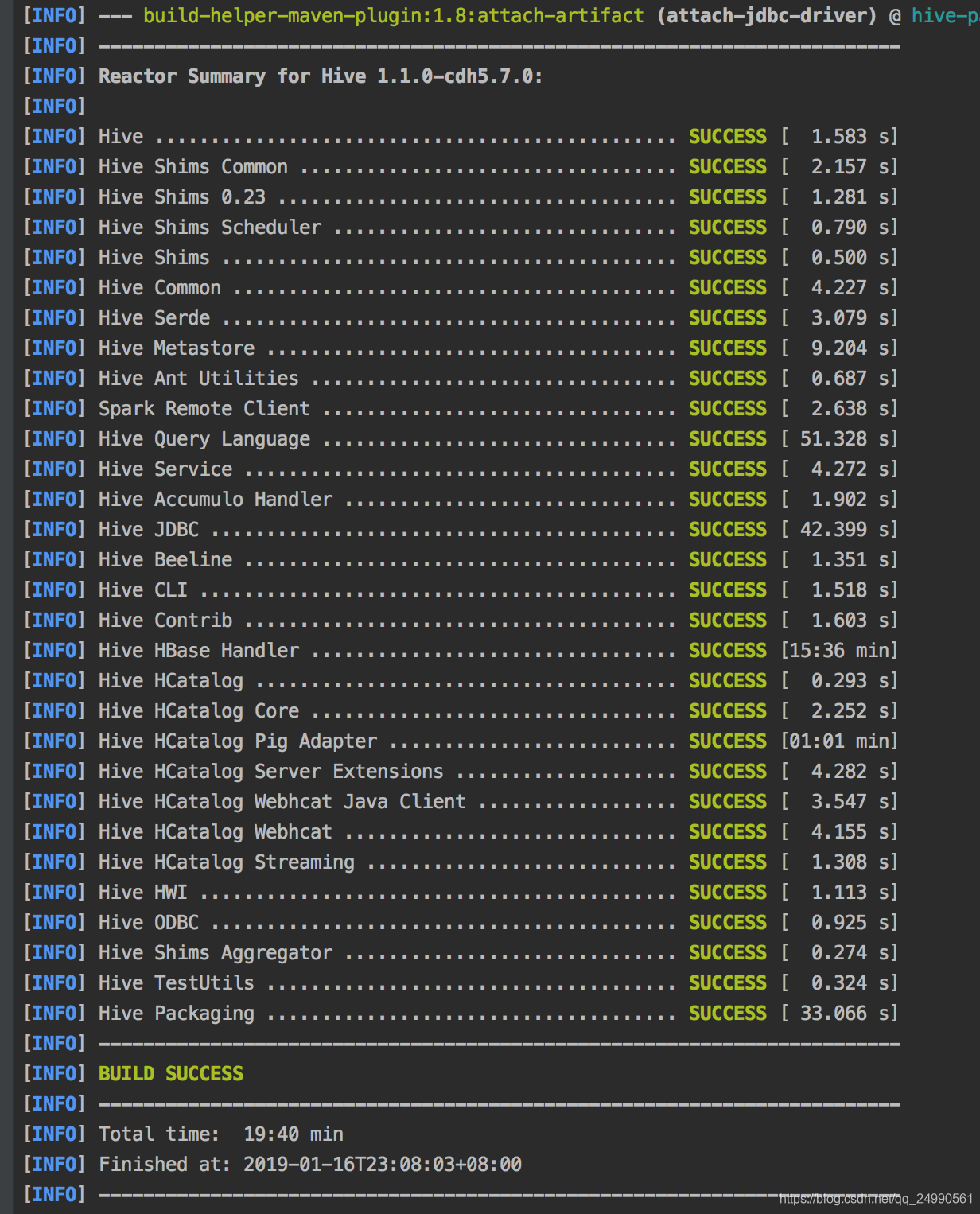
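To make the purpose of the registration step more concrete, here is a toy sketch of a name-to-class registry. It is not Hive's FunctionRegistry and does not use any Hive API: `ToyFunctionRegistry`, `register`, `lookup`, `names`, and `SayHelloStub` are all hypothetical names for illustration. It also assumes that function names are matched case-insensitively. The idea it shows is that a function name used in a query can only be resolved if a class was registered under that name beforehand.

```java
import java.util.Locale;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

// Toy illustration only: this is NOT Hive's FunctionRegistry.
final class ToyFunctionRegistry {

    // TreeMap keeps the registered names in sorted order.
    private final Map<String, Class<?>> functions = new TreeMap<>();

    // Store the implementing class under a lower-cased function name.
    void register(String name, Class<?> implementation) {
        functions.put(name.toLowerCase(Locale.ROOT), implementation);
    }

    // Resolve a function name to its class, or null if it was never registered.
    Class<?> lookup(String name) {
        return functions.get(name.toLowerCase(Locale.ROOT));
    }

    // All registered names, in sorted order.
    Set<String> names() {
        return functions.keySet();
    }

    // Hypothetical stand-in for the real sayhello UDF class.
    static final class SayHelloStub {}

    public static void main(String[] args) {
        ToyFunctionRegistry registry = new ToyFunctionRegistry();
        registry.register("sayhello", SayHelloStub.class);
        System.out.println(registry.names());
        System.out.println(registry.lookup("SAYHELLO") != null);
        System.out.println(registry.lookup("unregistered") != null);
    }
}
```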

6. Compile

On Linux:

/home/hadoop/app/apache-maven-3.6.0/bin/mvn -Phadoop-2 -Pdist -DskipTests -Dmaven.javadoc.skip=true clean package

Pitfall: the build on Linux failed. Tail of the Maven output:

[INFO] Total time: 39:55 min

[INFO] Finished at: 2019-01-16T06:37:14-08:00

[INFO] ------------------------------------------------------------------------

[WARNING] The requested profile "hadoop-2.6.0-cdh5.7.0" could not be activated because it does not exist.

[ERROR] Failed to execute goal org.apache.maven.plugins:maven-compiler-plugin:3.1:compile (default-compile) on project hive-common: Compilation failure: Compilation failure:

[ERROR] /home/hadoop/software/hive-compile/hive-1.1.0-cdh5.7.0/common/src/java/org/apache/hadoop/hive/conf/HiveConf.java:[52,32] package org.apache.hadoop.mapred does not exist

[ERROR] /home/hadoop/software/hive-compile/hive-1.1.0-cdh5.7.0/common/src/java/org/apache/hadoop/hive/conf/HiveConf.java:[2640,20] cannot find symbol

[ERROR] symbol: class JobConf

[ERROR] location: class org.apache.hadoop.hive.conf.HiveConf

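The warning in this log shows that the failing run requested a profile named hadoop-2.6.0-cdh5.7.0, which the POM does not define. That may be why the Hadoop classes (org.apache.hadoop.mapred, JobConf) were missing at compile time.

Running the build on a Mac instead succeeded:

/Users/liujingmao/app/apache-maven-3.6.0/bin/mvn -Phadoop-2 -Pdist -DskipTests -Dmaven.javadoc.skip=true clean package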

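There are two ways to deploy the result:

1. Redeploy Hive from the newly built distribution (tedious).

2. Copy hive-exec-1.1.0-cdh5.7.0.jar from the lib directory of the successful build into HIVE_HOME/lib. This is the simpler option.

Start hive and run

show functions;

The custom UDF now appears in the list.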

Sample data (emp.txt, tab-delimited):

7369 SMITH CLERK 7902 1980-12-17 800.00 20

7499 ALLEN SALESMAN 7698 1981-2-20 1600.00 300.00 30

7521 WARD SALESMAN 7698 1981-2-22 1250.00 500.00 30

7566 JONES MANAGER 7839 1981-4-2 2975.00 20

7654 MARTIN SALESMAN 7698 1981-9-28 1250.00 1400.00 30

7698 BLAKE MANAGER 7839 1981-5-1 2850.00 30

7782 CLARK MANAGER 7839 1981-6-9 2450.00 10

7788 SCOTT ANALYST 7566 1987-4-19 3000.00 20

7839 KING PRESIDENT 1981-11-17 5000.00 10

7844 TURNER SALESMAN 7698 1981-9-8 1500.00 0.00 30

7876 ADAMS CLERK 7788 1987-5-23 1100.00 20

7900 JAMES CLERK 7698 1981-12-3 950.00 30

7902 FORD ANALYST 7566 1981-12-3 3000.00 20

7934 MILLER CLERK 7782 1982-1-23 1300.00 10

8888 HIVE PROGRAM 7839 1988-1-23 10300.00

Create the table:

create table emp(
  id int,
  dname string,
  position string,
  depid string,
  birthdate string,
  salary double,
  com double,
  age int
) row format delimited fields terminated by '\t';

Load the data:

load data local inpath '/home/hadoop/data/emp.txt' into table emp;
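Because the table is declared with fields terminated by a tab, each line of emp.txt is cut at tab characters and the pieces are assigned to the columns in order. The sketch below illustrates that mapping in plain Java. It rests on two assumptions: the original file separates fields with tabs (the listing above shows them as spaces), and a missing value is stored as an empty field between two tabs. `EmpLineSplitter` and `splitFields` are hypothetical names for illustration.

```java
import java.util.Arrays;

// Hypothetical helper for illustration; it does not use any Hive API.
final class EmpLineSplitter {

    private EmpLineSplitter() {}

    // Split one line at tabs. The limit -1 keeps empty fields, including
    // trailing ones, so every field stays aligned with its column position.
    static String[] splitFields(String line) {
        return line.split("\t", -1);
    }

    public static void main(String[] args) {
        // Assumed layout: the seventh field (com) is empty for this row.
        String line = "7369\tSMITH\tCLERK\t7902\t1980-12-17\t800.00\t\t20";
        String[] fields = splitFields(line);
        System.out.println(fields.length);
        System.out.println(Arrays.toString(fields));
    }
}
```

If a row has fewer fields than the table has columns, or a field cannot be read as the declared column type, it is worth checking the loaded rows with a select for NULL values in those columns.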

hive>desc emp;

id int

dname string

position string

depid string

birthdate string

salary double

com double

select dname, sayhello(dname) from emp;

bin/flume-ng agent --name a1 --conf $FLUME_HOME/conf --conf-file example.conf -Dflume.root.logger=INFO,console

bin/flume-ng agent -n a1 -c /$FLUME_HOME/conf -f example.conf -Dflume.root.logger=INFO,console

flume-ng agent \
--name a1 \
--conf $FLUME_HOME/conf \
--conf-file $FLUME_HOME/conf/example.conf \
-Dflume.root.logger=INFO,console

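This article describes how to build a custom UDF into Hive: downloading the source, writing the Java class, registering the function, working around a compilation problem, and two ways to deploy. The steps are: download and extract the Hive source, define a Java class that extends UDF, change its package name, register the custom function, compile the source, and finally make the custom sayhello function available by redeploying or by replacing the jar.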
