文章目录

配置环境

- 安装pip

sudo apt-get install python3-pip - 安装显卡驱动

- 安装cuda10.1

- 安装cuDNN7.6.5

使用sudo dpkg -i命令 - 安装torch和torchversion,见pytorch官网

- 验证是否安装成功:

机器学习中的分类与回归问题

PyTorch的基本概念

Tensor:张量,任意维度的数据

Variable:变量

nn.Module:模型

Tensor的类型

Tensor的创建

import torch

a = torch.Tensor([[1, 2], [3, 4]])

print(a)

print(a.type())

a = torch.Tensor(2, 3)

print(a)

print(a.type())

'''几种特殊的Tensor'''

a = torch.ones(2, 3)

print(a)

print(a.type())

# 对角线为1,其他为0

a = torch.eye(2, 2)

print(a)

a = torch.ones(2, 3)

print(a)

b = torch.Tensor(2, 3)

b = torch.zeros_like(b)

c = torch.ones_like(b)

print(b)

print(b.type())

print(c)

'''随机Tensor'''

a = torch.rand(2, 3)

print(a)

print(a.type())

# 正态分布

a = torch.normal(mean=0.0, std=torch.rand(2))

print(a)

print(a.type())

# 均匀分布

a = torch.Tensor(2, 2).uniform_(-1, 1)

print(a)

print(a.type())

'''序列'''

a = torch.arange(0, 11, 3)

print(a)

print(a.type())

# 拿到等间隔的n个数字

a = torch.linspace(2, 10, 4)

print(a)

print(a.type())

# 拿到从0-9打乱的序列

a = torch.randperm(10)

print(a)

print(a.type())

##########

'''对比numpy'''

import numpy as np

a = np.array([[1,2],[3,4]])

print(a)

Tensor的属性

# 定义设备

dev = torch.device('cpu')

dev = torch.device('cuda:0')

a = torch.tensor([2, 3], dtype=torch.float32, device=dev)

print(a)

# 稀疏张量

i = torch.tensor([[0, 1, 2], [0, 1, 2]])

v = torch.tensor([1, 2, 3])

a = torch.sparse_coo_tensor(i, v, (4, 4))

print(a)

print(a.to_dense())

- 为什么有的数据放在CPU有的放在GPU上?

- 提高系统资源的利用率

- 数据的读取\预处理放在CPU上

- 数据的参数运算放在GPU上

Tensor的算术运算

a = torch.rand(2, 3)

b = torch.rand(2, 3)

# add

print(a)

print(b)

print(a + b)

print(torch.add(a, b))

print(a.add(b))

a.add_(b)

print(a)

# sub

print(a - b)

print(torch.sub(a, b))

print(a.sub(b))

print(a.sub_(b))

print(a)

# mul

print('======mul=======')

print(a * b)

print(torch.mul(a, b))

print(a.mul(b))

print(a)

print(a.mul_(b))

print(a)

# div

print('=====div=========')

print(a / b)

print(torch.div(a, b))

print(a.div(b))

print(a.div_(b))

print(a)

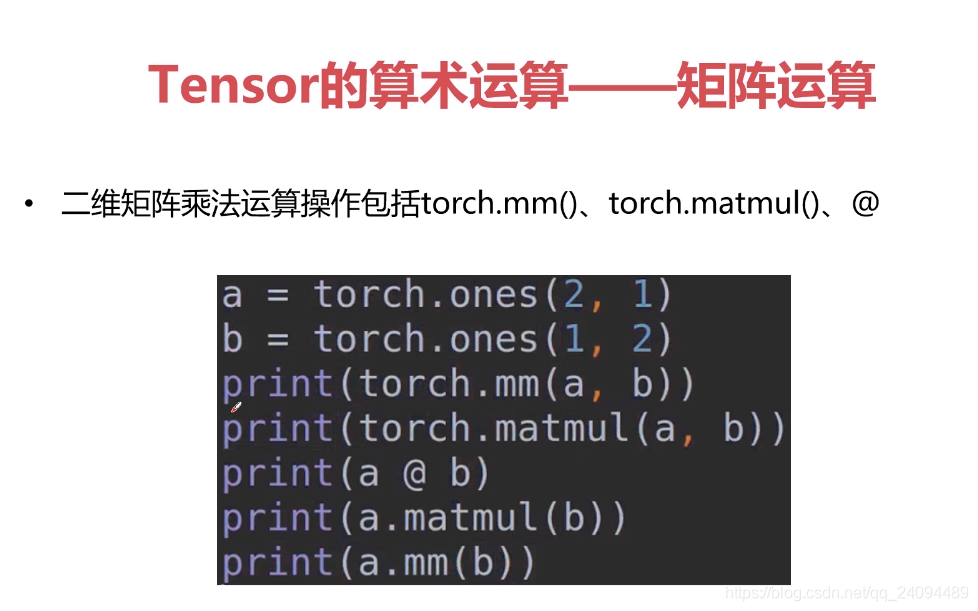

# matmul矩阵运算

a = torch.ones(2, 1)

b = torch.ones(1, 2)

print(a @ b)

print(a.matmul(b))

print(torch.matmul(a, b))

print(torch.mm(a, b))

print(a.mm(b))

# 高维tensor

a = torch.ones(1, 2, 3, 4)

b = torch.ones(1, 2, 4, 3)

print(a)

print(b)

print(a @ b)

print(a.matmul(b).shape)

# pow

a = torch.tensor([1, 2])

print(torch.pow(a, 3))

print(a.pow(3))

print(a ** 3)

print(a.pow_(3))

print(a)

# exp

a = torch.tensor([1,2],dtype=torch.float32)

print(a.type())

print(torch.exp(a))

print(torch.exp_(a))

print(a.exp())

print(a.exp_())

# log

a = torch.tensor([10,2],dtype=torch.float32)

print(torch.log(a))

print(torch.log_(a))

print(a.log())

print(a.log_())

# sprt

a = torch.tensor([10,2],dtype=torch.float32)

print(torch.sqrt(a))

print(torch.sqrt_(a))

print(a.sqrt())

print(a.sqrt_())

in-place操作

Pytorch中的广播机制

- 广播机智:张量参数可以自动扩展为相同大小

- 广播机智需要满足两个条件:

- 每个张量至少有一个维度

- 满足右对齐

- torch.rand(2,1,1) + torch.rand(3)

a = torch.rand(2, 1)

b = torch.rand(3)

c = a + b

print(a)

print(b)

print(c)

取整、取余运算

a = torch.rand(2, 2)

a = a * 10

print(a)

print(a.floor())

print(a.ceil())

print(a.round())

print(a.trunc())

print(a.frac())

print(a % 2)

Tensor的比较运算

a = torch.rand(2, 3)

b = torch.rand(2, 3)

print(a)

print(b)

print(torch.eq(a, b))

print(torch.equal(a, b))

print(torch.ge(a, b))

print(torch.le(a, b))

print(torch.gt(a, b))

print(torch.lt(a, b))

print(torch.ne(a, b))

# 排序

a = torch.tensor([[1, 4, 4, 3, 5],

[2, 3, 1, 3, 5]])

print(torch.sort(a, dim=1, descending=True))

# topk

a = torch.tensor([[2, 4, 3, 1, 5],

[2, 3, 5, 1, 4]])

print(a.shape)

print(torch.topk(a, k=2, dim=1))

print(torch.kthvalue(a, k=2, dim=1))

a = torch.rand(2, 3)

print(a)

print(a / 0)

print(torch.isfinite(a))

print(torch.isfinite(a / 0))

print(torch.isinf(a / 0))

print(torch.isnan(a))

import numpy as np

a = torch.tensor([1, 2, np.nan])

print(torch.isnan(a))

Tensor的三角函数

a = torch.zeros(2, 3)

b = torch.cos(a)

print(a)

print(b)

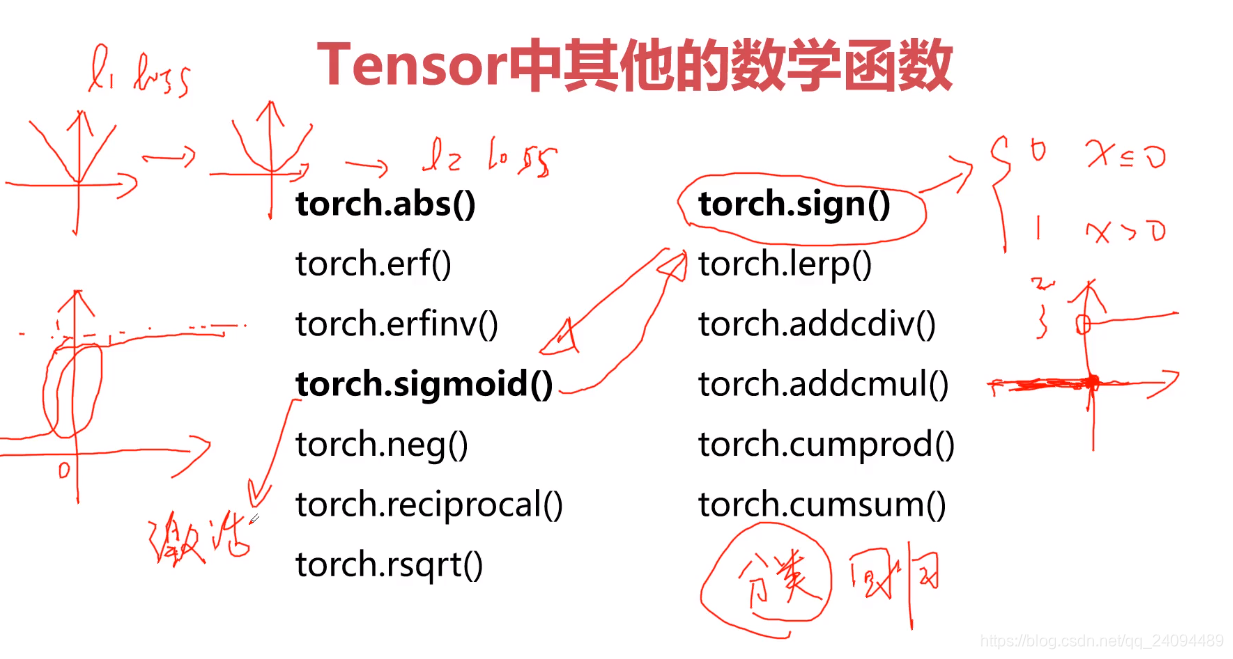

Tensor中其他的数学函数

PyTorch与统计学方法

Pytorch的分布函数

Tensor中的随机抽样

torch.manual_seed(1)

mean = torch.rand(1, 2)

std = torch.rand(1, 2)

print(torch.normal(mean, std))

Tensor中范数的运算

a = torch.rand(2, 1)

b = torch.rand(2, 1)

print(a, b)

print(torch.dist(a, b, 1))

print(torch.dist(a, b, 2))

print(torch.dist(a, b, 3))

print(torch.norm(a))

print(torch.norm(a, p=1))

print(torch.norm(a, p=2))

print(torch.norm(a, p=3))

Tensor中的矩阵分解

Tensor的裁剪运算

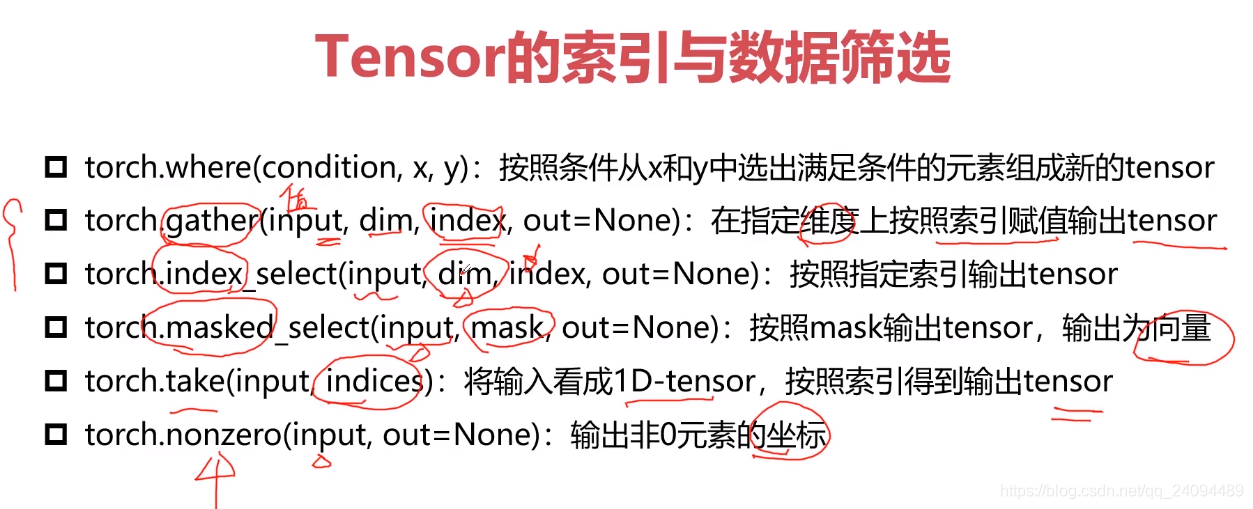

Tensor的索引与数据筛选

# torch.where

a = torch.rand(4, 4).mul(10).trunc()

b = torch.rand(4, 4).mul(10).trunc()

print(a)

print(b)

out = torch.where(a > b, a, b)

print(out)

# torch.index_select

print('=====index_select======')

a = torch.rand(4, 4).mul(10).trunc()

print(a)

out = torch.index_select(a, dim=0, index=torch.tensor([0, 3, 2]))

print(out, out.shape)

# torch.gather

# dim=0, out[i, j, k] = input[index[i, j, k], j, k]

# dim=1, out[i, j, k] = input[i, index[i, j, k], k]

# dim=2, out[i, j, k] = input[i, j, index[i, j, k]]

print('==================gather==================')

a = torch.linspace(1, 16, steps=16).view(4, 4)

out = torch.gather(a,

dim=0,

index=torch.tensor([[0, 1, 1, 1],

[0, 1, 2, 2],

[0, 1, 2, 3]]))

print(a)

print(out)

# torch.masked_select

print('==================masked_select==================')

a = torch.linspace(1, 16, steps=16).view(4, 4)

mask = torch.gt(a, 8)

print(a)

print(mask)

out = torch.masked_select(a, mask)

print(out)

# torch.take

print('==================take==================')

a = torch.linspace(1, 16, steps=20).view(4, 5)

b = torch.take(a, index=torch.tensor([0, 1, 3, 5, 15]))

print(a)

print(b)

# torch.nonzero

# 返回非0元素的索引

# 矩阵的稀疏表示

print('==================nonzero==================')

a = torch.tensor([[0, 1, 2, 0],

[2, 3, 0, 1]])

out = torch.nonzero(a, as_tuple=False)

print(a)

print(out)

Tensor的组合\拼接

# torch.cat

a = torch.zeros(2, 4)

b = torch.ones(2, 4)

print(a)

print(b)

out = torch.cat((a, b), dim=1)

print(out)

# torch.stack

a = torch.linspace(1, 6, 6).view(2, 3)

b = torch.linspace(7, 12, 6).view(2, 3)

print(a)

print(b)

out = torch.stack((a, b), dim=0)

print(out)

print(out.shape)

Tensor的切片

a = torch.rand(10, 4)

out = torch.chunk(a, 2, dim=1)

print(a)

print(out)

out = torch.split(a, 2, dim=0)

print(a)

print(out)

out = torch.split(a, [1, 3, 6], dim=0)

print(out)

Tensor的变形操作

a = torch.rand(2, 3)

print(a)

out = torch.reshape(a, (3, 2))

print(out)

print(out.t())

a = torch.rand(1, 2, 3)

out = torch.transpose(a, 0, 1)

print(a, a.shape)

print(out, out.shape)

out = torch.squeeze(a)

print(out, out.shape)

out = torch.unsqueeze(a, dim=-1)

print(out, out.shape)

out = torch.unbind(a, dim=2)

print(out)

print(a)

print(torch.flip(a, dims=[1, 2]))

print(a)

torch.rot90(a)

Tensor的填充操作

a = torch.full((2, 3), 10, dtype=torch.long)

print(a)

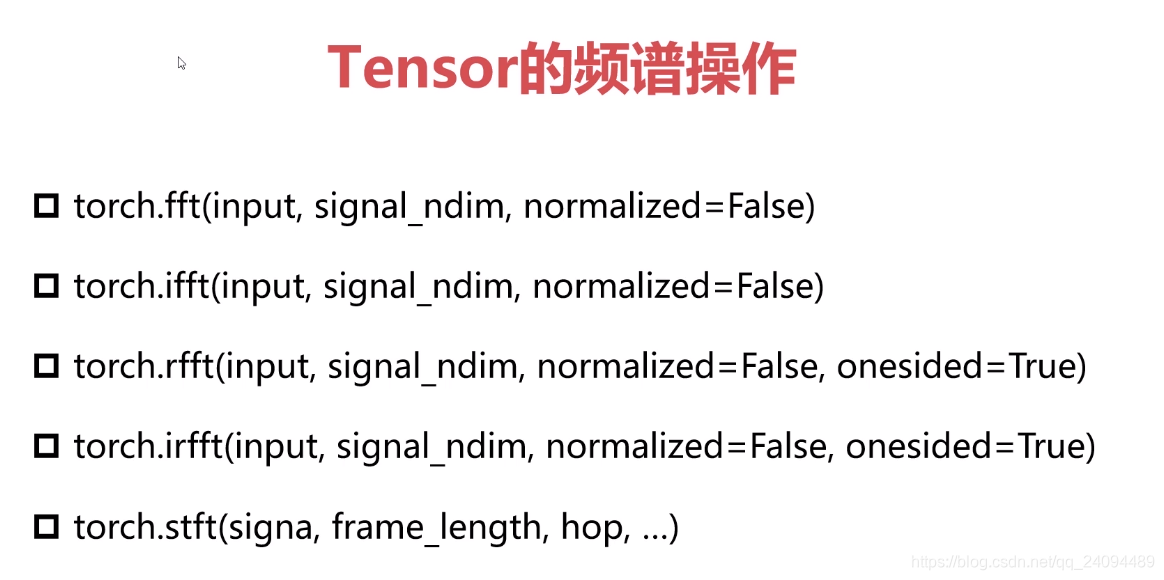

Tensor的频谱操作

Pytorch简单编程技巧

模型的保存/加载

并行化

分布式

Tensor on GPU

Tensor的相关配置

Tensor与numpy的相互转换

data = cv2.imread('hjy1.jpg')

cv2.imshow('test1', data)

cv2.waitKey(0)

# a = np.zeros([2, 2])

out = torch.from_numpy(data)

out = out.to('cuda')

print(out)

print(out.is_cuda)

out = torch.flip(out, dims=[2])

out = out.to('cpu')

print(out.is_cuda)

data = out.numpy()

cv2.imshow('test2', data)

cv2.waitKey(0)

Pytorch与autograd-导数-方向导数-偏导数-梯度的概念

导数

- 导数(一元函数)是变化率、是切线的斜率、是瞬时速度

方向导数

- 函数在A点无数个切线的斜率的定义。每个切线都代表一个变化的方向

偏导数

- 多元函数降维时候的变化,比如:二元函数固定y,只让x单独变化,从而看成是关于x的一元函数的变化来研究

f x ( x , y ) = lim Δ → 0 f ( x + Δ x , y ) − f ( x , y ) Δ x f_x(x,y)=\lim_{\Delta\rightarrow0}\cfrac{f(x+{\Delta}x,y)-f(x,y)}{{\Delta}x} fx(x,y)=Δ→0limΔxf(x+Δx,y)−f(x,y)

梯度

- 函数在A点无数个变化方向中变化最快的那个方向

- 记为:

∇

f

\nabla f

∇f或者

g

r

a

d

f

=

(

∂

φ

∂

x

,

∂

φ

∂

y

,

∂

φ

∂

z

)

gradf=(\dfrac{\partial\varphi}{\partial x},\dfrac{\partial\varphi}{\partial y},\dfrac{\partial \varphi}{\partial z})

gradf=(∂x∂φ,∂y∂φ,∂z∂φ)

梯度与机器学习中的最优解

- 有监督学习,无监督学习,半监督学习

- 样本X,标签Y

- Y = f ( X ) Y = f(X) Y=f(X)

Variable in Tensor

- 目前Variable已经与Tensor合并

- 每个tensor通过requires_grad来设置是否计算梯度

- 用来冻结某些层的参数

如何计算梯度

- 链式法则:两个函数组合起来的复合函数,导数等于里面函数带入外函数值的导数乘以里面函数之导数

y = 2 x , z = y 2 , d z d x = d z d y × d y d x = 2 y × 2 = 4 y y = 2x,z=y^2, \frac{dz}{dx}=\frac{dz}{dy}\times\frac{dy}{dx}=2y\times2=4y y=2x,z=y2,dxdz=dydz×dxdy=2y×2=4y

Autograd中的几个重要概念

- 叶子张量

- grad VS grad_fn

- grad:该Tensor的梯度值,每次在计算backword时都需要将前一时刻的梯度归零,否则梯度值会一直累加。

- grad_fn:叶子节点通常为None,只有结果节点的grad_fn才有效,用于指示梯度函数是哪种类型。

- backword函数

class line(torch.autograd.Function):

@staticmethod

def forward(ctx, w, x, b):

# y = w*x+b

ctx.save_for_backward(w, x, b)

return w * x + b

@staticmethod

def backward(ctx, grad_out):

w, x, b = ctx.saved_tensors

grad_w = grad_out * x

grad_x = grad_out * w

grad_b = grad_out

return grad_w, grad_x, grad_b

w = torch.rand(2, 2, requires_grad=True)

x = torch.rand(2, 2, requires_grad=True)

b = torch.rand(2, 2, requires_grad=True)

out = line.apply(w, x, b)

out.backward(torch.ones(2, 2))

print(w, '\n', x, '\n', b)

print(w.grad, x.grad, b.grad)

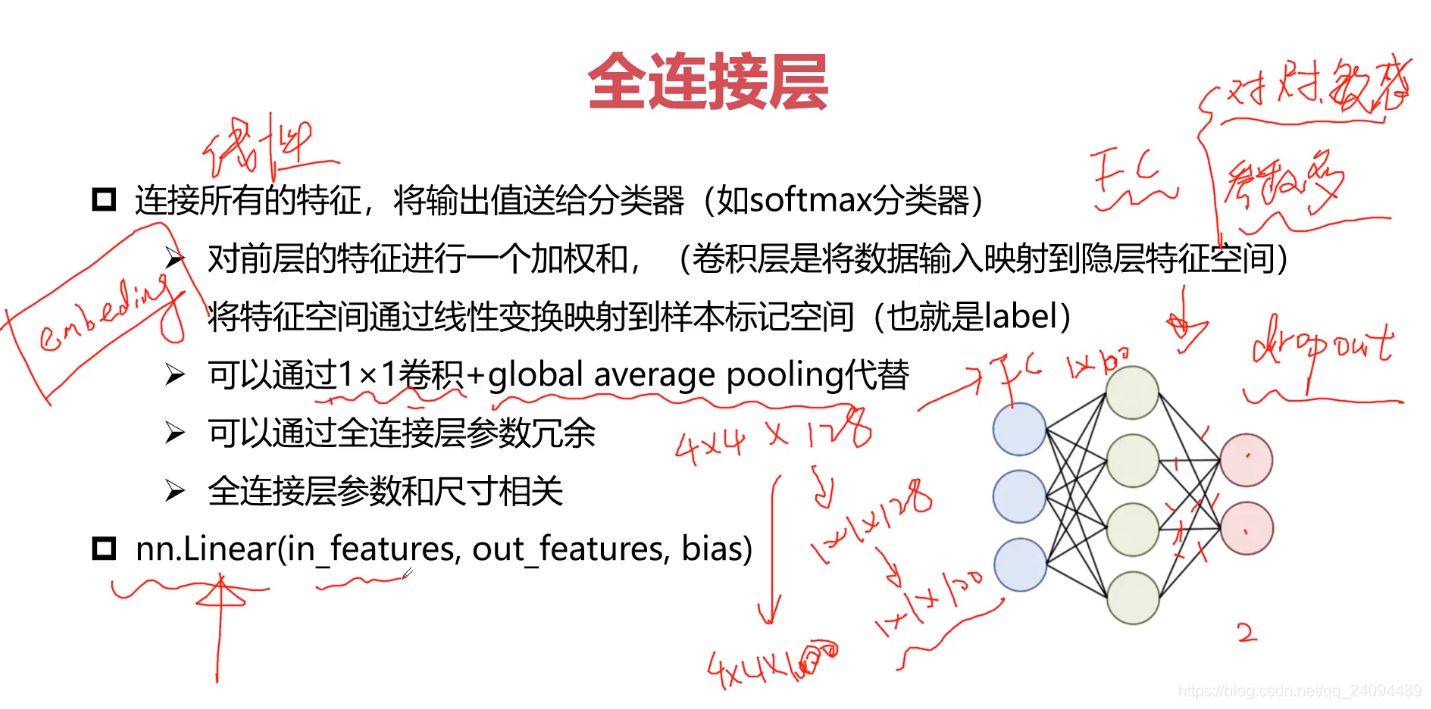

Pytorch与nn库

- torch.nn是专门为神经网络设计的模块化接口

- nn构建于autograd之上,可以用来定义和运行神经网络

- nn.Parameter

- nn.Linear & nn.conv2d等等

- nn.functional

- nn.Module

- nn.Sequential

- nn.Parameter

Pytorch与visdom

Pytorch与tensorboardX

Pytorch与torchvision

机器学习和神经网络的基本概念

神经网络的基本概念

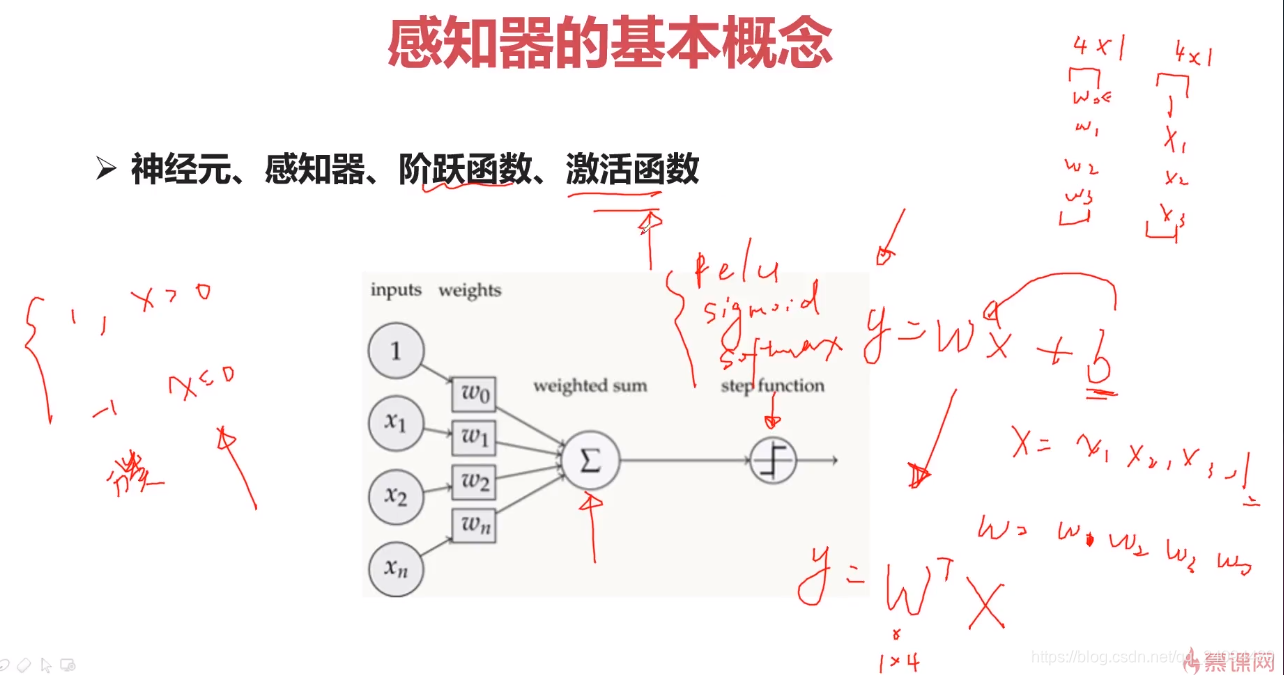

感知器的基本概念

前向运算

反向传播

分类与回归

过拟合与欠拟合

正则化问题

利用神经网络解决分类和回归问题

Pytorch完成波士顿房价预测模型搭建

import torch

import numpy as np

import re

'''训练并保存模型'''

# data

ff = open('housing.data').readlines()

data = []

for item in ff:

out = re.sub(r'\s{2,}', ' ', item).strip()

# print(out)

data.append(out.split(' '))

data = np.array(data, dtype=np.float)

print(data.shape)

Y = data[:, -1]

X = data[:, :-1]

X_train = X[:496, :]

Y_train = Y[:496, ...]

x_data = torch.tensor(X_train, dtype=torch.float32)

y_data = torch.tensor(Y_train, dtype=torch.float32)

X_test = X[496:, ...]

Y_test = Y[496:, ...]

x_test_data = torch.tensor(X_test, dtype=torch.float32)

y_test_data = torch.tensor(Y_test, dtype=torch.float32)

print(X_train.shape)

print(X_test.shape)

print(Y_train.shape)

print(Y_test.shape)

# net 网络

class Net(torch.nn.Module):

def __init__(self, n_feature, n_output):

super(Net, self).__init__()

self.hidden = torch.nn.Linear(n_feature, 100)

self.predict = torch.nn.Linear(100, n_output)

def forward(self, x):

out = self.hidden(x)

out = torch.relu(out)

out = self.predict(out)

return out

net = Net(13, 1)

# loss 损失函数

loss_func = torch.nn.MSELoss()

# optimizer 优化器

optimizer = torch.optim.Adam(net.parameters(), lr=0.01)

# training训练

for i in range(100000):

pred = net.forward(x_data)

pred = torch.squeeze(pred)

loss = loss_func(pred, y_data)

optimizer.zero_grad()

loss.backward()

optimizer.step()

print(f'ite:{i}, loss_train:{loss}')

print(pred[:10])

print(y_data[:10])

# test

pred = net.forward(x_test_data)

pred = torch.squeeze(pred)

loss_test = loss_func(pred, y_test_data)

print(f'ite:{i}, loss_test:{loss_test}')

print(pred[:10])

print(y_test_data[:10])

# 保存模型

torch.save(net, 'model/model.pkl')

# torch.load('')

torch.save(net.state_dict(), 'model/params.pkl')

# net.load_state_dict('')

import re

import numpy as np

import torch

'''加载模型'''

# data

ff = open('housing.data').readlines()

data = []

for item in ff:

out = re.sub(r'\s{2,}', ' ', item).strip()

# print(out)

data.append(out.split(' '))

data = np.array(data, dtype=np.float)

print(data.shape)

Y = data[:, -1]

X = data[:, :-1]

X_train = X[:496, :]

Y_train = Y[:496, ...]

x_data = torch.tensor(X_train, dtype=torch.float32)

y_data = torch.tensor(Y_train, dtype=torch.float32)

X_test = X[496:, ...]

Y_test = Y[496:, ...]

x_test_data = torch.tensor(X_test, dtype=torch.float32)

y_test_data = torch.tensor(Y_test, dtype=torch.float32)

# net 网络

class Net(torch.nn.Module):

def __init__(self, n_feature, n_output):

super(Net, self).__init__()

self.hidden = torch.nn.Linear(n_feature, 100)

self.predict = torch.nn.Linear(100, n_output)

def forward(self, x):

out = self.hidden(x)

out = torch.relu(out)

out = self.predict(out)

return out

net = torch.load('model/model.pkl')

loss_func = torch.nn.MSELoss()

pred = net.forward(x_test_data).squeeze()

lost_test = loss_func(pred, y_test_data)

print(pred)

print(y_test_data)

print(f'lose_test:{lost_test}')

Pytorch完成手写数字分类模型搭建

import torch

import torchvision.datasets as dataset

import torchvision.transforms as transforms

import torch.utils.data as data_utils

from CNN import CNN

'''训练'''

# data

train_data = dataset.MNIST(root='mnist',

train=True,

transform=transforms.ToTensor(),

download=True)

test_data = dataset.MNIST(root='mnist',

train=False,

transform=transforms.ToTensor(),

download=False)

# batch_size

train_loader = data_utils.DataLoader(dataset=train_data,

batch_size=64,

shuffle=True)

test_loader = data_utils.DataLoader(dataset=test_data,

batch_size=64,

shuffle=True)

# net

cnn = CNN()

cnn = cnn.cuda()

# loss

loss_func = torch.nn.CrossEntropyLoss()

# optimizer

optimizer = torch.optim.Adam(cnn.parameters(), lr=0.01)

# training

for epoch in range(10):

for i, (images, labels) in enumerate(train_loader):

images = images.cuda()

labels = labels.cuda()

outputs = cnn(images)

loss = loss_func(outputs, labels)

optimizer.zero_grad()

loss.backward()

optimizer.step()

print(f'epoch is {epoch + 1}, ite is {i}/{len(train_data) // train_loader.batch_size}, '

f'loss is {loss.item()}')

# eval/test

# loss_test = 0

# accuracy = 0

# for i, (images, labels) in enumerate(test_loader):

# images = images.cuda()

# labels = labels.cuda()

# outputs = cnn(images)

# # labels的维度:batch_size

# # outputs的维度:batch_size * cls_num,这里cls_num=10

# loss_test += loss_func(outputs, labels)

# _, pred = outputs.max(1)

# accuracy += (pred == labels).sum().item()

# accuracy = accuracy / len(test_data)

# loss_test = loss_test / (len(test_data) // 64)

#

# print(f'epoch is {epoch + 1}, accuracy is {accuracy}, '

# f'loss_test is {loss_test.item()}')

# save

torch.save(cnn, 'model/mnist_model.pkl')

# load

# inference

import torch

import torchvision.datasets as dataset

import torchvision.transforms as transforms

import torch.utils.data as data_utils

from CNN import CNN

import numpy as np

import cv2

'''测试'''

test_data = dataset.MNIST(root='mnist',

train=False,

transform=transforms.ToTensor(),

download=False)

test_loader = data_utils.DataLoader(dataset=test_data,

batch_size=64,

shuffle=True)

# net

cnn = torch.load('model/mnist_model.pkl')

cnn = cnn.cuda()

accuracy = 0

for i, (images, labels) in enumerate(test_loader):

images = images.cuda()

labels = labels.cuda()

print(images.shape)

outputs = cnn(images)

_, pred = outputs.max(1)

accuracy += (pred == labels).sum().item()

images = images.cpu().numpy()

labels = labels.cpu().numpy()

pred = pred.cpu().numpy()

# batch_size * 1 * 28 * 28

for idx in range(images.shape[0]):

im_data = images[idx]

im_label = labels[idx]

im_pred = pred[idx]

im_data = im_data.transpose(1, 2, 0)

print('label', im_label)

print('pred', im_pred)

cv2.imshow('im_data', im_data)

cv2.waitKey(0)

accuracy = accuracy / len(test_data)

print(accuracy)

模型的性能评价——交叉验证

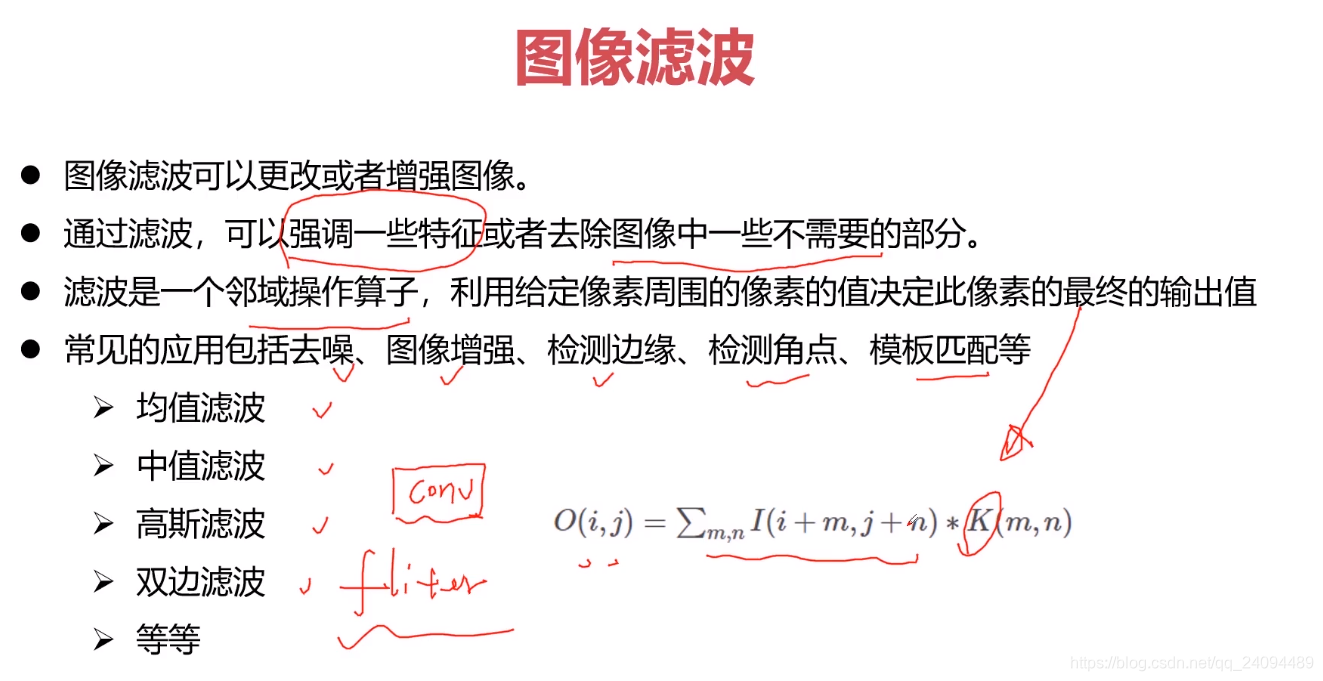

计算机视觉与卷积神经网络基础

特征工程

卷积神经网络

经典卷积神经网络结构

Attention的网络结构

学习率

优化器

卷积神经网添加正则化

计算机视觉任务-Cifar10图像分类

图像分类网络模型框架解读

- 数据加载

- RGB数据 OR BGR数据

- JPEG编码后的数据

- torchversion.datasets中的数据集

- torch.utils.data下的Dataset,DataLoader自定义数据集

- 数据增强

- 为什么需要数据增强?

- 数据增强的时候需要注意什么?

- torchversion.transforms

- 网络结构

- 类别概率分布

- Loss

- 分类问题常用评价指标

- PR曲线 VS ROC曲线

- 优化器选择

Cifar10数据分类

分类模型优化思路

- 调参技巧

- backbone(主干网络)

- 过拟合问题

- 学习率调整

- 优化函数

- 数据增强

- 亮度、对比度、饱和度

- 裁剪、旋转

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?