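There is very little material online about integrating DataX into a Java program, so here is a complete example of calling DataX from Java. I hope it helps.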

Let's get straight to the point!

The approach is simple: download DataX from GitHub (the package is roughly 1 GB) and extract it.

POM file

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>Founder</groupId>
    <artifactId>justTry</artifactId>
    <version>1.0-SNAPSHOT</version>

    <dependencies>
        <dependency>
            <groupId>commons-codec</groupId>
            <artifactId>commons-codec</artifactId>
            <version>1.6</version>
        </dependency>
        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>fastjson</artifactId>
            <version>1.2.50</version>
        </dependency>
        <dependency>
            <groupId>org.apache.commons</groupId>
            <artifactId>commons-lang3</artifactId>
            <version>3.3.2</version>
        </dependency>
        <dependency>
            <groupId>commons-io</groupId>
            <artifactId>commons-io</artifactId>
            <version>2.4</version>
        </dependency>
        <dependency>
            <groupId>org.apache.httpcomponents</groupId>
            <artifactId>httpclient</artifactId>
            <version>4.5.8</version>
        </dependency>
        <dependency>
            <groupId>org.apache.httpcomponents</groupId>
            <artifactId>httpcore</artifactId>
            <version>4.4.11</version>
        </dependency>
        <dependency>
            <groupId>commons-cli</groupId>
            <artifactId>commons-cli</artifactId>
            <version>1.2</version>
        </dependency>
        <dependency>
            <groupId>com.alibaba.datax</groupId>
            <artifactId>datax-core</artifactId>
            <version>0.0.1</version>
        </dependency>
        <dependency>
            <groupId>com.alibaba.datax</groupId>
            <artifactId>datax-transformer</artifactId>
            <version>0.0.1</version>
        </dependency>
        <dependency>
            <groupId>com.alibaba.datax</groupId>
            <artifactId>datax-common</artifactId>
            <version>0.0.1</version>
        </dependency>

        <!-- https://mvnrepository.com/artifact/org.slf4j/slf4j-api -->
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-api</artifactId>
            <version>1.7.22</version>
        </dependency>

        <!-- https://mvnrepository.com/artifact/org.slf4j/slf4j-log4j12 -->
        <!--<dependency>-->
            <!--<groupId>org.slf4j</groupId>-->
            <!--<artifactId>slf4j-log4j12</artifactId>-->
            <!--<version>1.7.22</version>-->
        <!--</dependency>-->

        <!-- https://mvnrepository.com/artifact/org.slf4j/slf4j-simple -->
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-simple</artifactId>
            <version>1.7.22</version>
        </dependency>
    </dependencies>
</project>
```

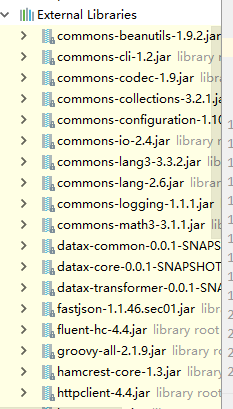

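Import every jar under the `lib` directory of the extracted DataX package into IDEA. At first I added jars one at a time as each turned out to be needed, but the jars depend on one another, so in the end I simply added all of them.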
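If you would rather not add the jars by hand, or want to run the same code outside the IDE, the contents of `lib` can also be collected programmatically. The following is a minimal, JDK-only sketch, assuming the extracted DataX directory contains a `lib` folder holding the jars. The class `DataxLibClasspath` and its methods are hypothetical helpers, not part of DataX. The sketch lists every `*.jar` in that folder and joins the paths into a classpath string that could be passed to `java -cp`.

```java
import java.io.File;
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

public class DataxLibClasspath {

    // Collects every *.jar directly under <dataxHome>/lib, sorted by path.
    public static List<Path> listLibJars(String dataxHome) throws IOException {
        Path libDir = Paths.get(dataxHome, "lib");
        List<Path> jars = new ArrayList<>();
        try (DirectoryStream<Path> stream = Files.newDirectoryStream(libDir, "*.jar")) {
            for (Path jar : stream) {
                jars.add(jar);
            }
        }
        Collections.sort(jars);
        return jars;
    }

    // Joins the jar paths with the platform separator (";" on Windows, ":" elsewhere).
    public static String toClasspath(List<Path> jars) {
        List<String> parts = new ArrayList<>();
        for (Path jar : jars) {
            parts.add(jar.toAbsolutePath().toString());
        }
        return String.join(File.pathSeparator, parts);
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical default: the same DataX home used in the example below
        String dataxHome = args.length > 0 ? args[0] : "D:\\software\\datax.tar\\datax\\datax";
        List<Path> jars = listLibJars(dataxHome);
        System.out.println(jars.size() + " jars found");
        System.out.println(toClasspath(jars));
    }
}
```

Only the top level of `lib` is scanned. That matches "import everything under lib" without pulling in the separate plugin jars.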

Write the code

```java
import com.alibaba.datax.core.Engine;

import java.text.SimpleDateFormat;
import java.util.Date;

public class DataxTest {

    public static void start(String dataxPath, String jsonPath) throws Throwable {
        // DataX home directory: the folder extracted from the downloaded package
        System.setProperty("datax.home", dataxPath);
        // Value substituted for a ${now} placeholder in the job file, if it contains one
        System.setProperty("now", new SimpleDateFormat("yyyy/MM/dd-HH:mm:ss:SSS").format(new Date()));

        // Alternative: point at a job file inside the DataX job directory
        // String[] dataxArgs = {"-job", dataxPath + "/job/text.json", "-mode", "standalone", "-jobid", "-1"};
        String[] dataxArgs = {"-job", jsonPath, "-mode", "standalone", "-jobid", "-1"};

        // Run the job inside the current JVM in standalone mode
        Engine.entry(dataxArgs);
    }

    public static void main(String[] args) throws Throwable {
        start("D:\\software\\datax.tar\\datax\\datax",
              "D:\\software\\datax.tar\\datax\\datax\\job\\mysql2hdfs.json");
    }
}
```

Then check the output on HDFS, and that's it.

The job ran successfully.


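The method takes two main parameters: the local path of the DataX package, and the path of a JSON file under the `job` directory. That JSON file is the job configuration you want to run.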
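Both parameters are plain file paths, so a typo in either one only surfaces once DataX starts. A small pre-check can fail earlier with a clearer message. The following is a hypothetical helper (`DataxJobArgs.buildArgs` is not a DataX API). It assumes a standard DataX home layout containing `conf/core.json` and a `plugin` directory, so adjust the checks if your package differs. It returns the same argument array that `start` passes to `Engine.entry`.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

public class DataxJobArgs {

    // Validates the DataX home and job file, then builds the Engine.entry arguments.
    public static String[] buildArgs(String dataxHome, String jobPath) {
        Path home = Paths.get(dataxHome);
        // Assumption: a standard DataX home contains conf/core.json and plugin/
        if (!Files.isRegularFile(home.resolve("conf").resolve("core.json"))) {
            throw new IllegalArgumentException("Not a DataX home (conf/core.json missing): " + home);
        }
        if (!Files.isDirectory(home.resolve("plugin"))) {
            throw new IllegalArgumentException("Not a DataX home (plugin/ missing): " + home);
        }
        Path job = Paths.get(jobPath);
        if (!Files.isRegularFile(job) || !job.getFileName().toString().endsWith(".json")) {
            throw new IllegalArgumentException("Job file must be an existing .json file: " + job);
        }
        // Same arguments as in start(): run this job once in standalone mode
        return new String[] {"-job", jobPath, "-mode", "standalone", "-jobid", "-1"};
    }

    public static void main(String[] args) {
        String[] dataxArgs = buildArgs(
                "D:\\software\\datax.tar\\datax\\datax",
                "D:\\software\\datax.tar\\datax\\datax\\job\\mysql2hdfs.json");
        System.out.println(String.join(" ", dataxArgs));
    }
}
```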

The JSON file looks like this:

```json
{
  "job": {
    "content": [
      {
        "reader": {
          "name": "mysqlreader",
          "parameter": {
            "column": [
              "score",
              "name"
            ],
            "connection": [
              {
                "jdbcUrl": [
                  "jdbc:mysql://172.18.118.198:3306/test"
                ],
                "table": [
                  "score"
                ]
              }
            ],
            "username": "root",
            "password": "root"
          }
        },
        "writer": {
          "name": "hdfswriter",
          "parameter": {
            "column": [
              {
                "name": "score",
                "type": "int"
              },
              {
                "name": "name",
                "type": "string"
              }
            ],
            "defaultFS": "hdfs://slave1.big:8020",
            "fieldDelimiter": "\t",
            "fileName": "student23.txt",
            "fileType": "text",
            "path": "/",
            "writeMode": "append"
          }
        }
      }
    ],
    "setting": {
      "speed": {
        "channel": "1"
      }
    }
  }
}
```
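Instead of hand-editing the job file for every table, you can also generate it from Java and pass the resulting path to `start` as `jsonPath`. The following sketch rests on several assumptions. It hard-codes the same columns, writer settings and channel count as the JSON above. It takes the connection details as hypothetical parameters. It builds the JSON with plain string concatenation so it needs nothing beyond the JDK. In a real project, the fastjson dependency already in the POM would be a safer way to build and escape the JSON.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

public class Mysql2HdfsJob {

    // Builds a mysqlreader -> hdfswriter job with the same shape as the JSON above.
    // Values are inserted verbatim, so they must not contain quotes or backslashes.
    public static String buildJson(String jdbcUrl, String table, String user, String password,
                                   String defaultFS, String fileName) {
        return "{\"job\":{"
            + "\"content\":[{"
            + "\"reader\":{\"name\":\"mysqlreader\",\"parameter\":{"
            + "\"column\":[\"score\",\"name\"],"
            + "\"connection\":[{\"jdbcUrl\":[\"" + jdbcUrl + "\"],\"table\":[\"" + table + "\"]}],"
            + "\"username\":\"" + user + "\",\"password\":\"" + password + "\"}},"
            + "\"writer\":{\"name\":\"hdfswriter\",\"parameter\":{"
            + "\"column\":[{\"name\":\"score\",\"type\":\"int\"},{\"name\":\"name\",\"type\":\"string\"}],"
            + "\"defaultFS\":\"" + defaultFS + "\","
            + "\"fieldDelimiter\":\"\\t\","          // JSON escape \t = tab delimiter
            + "\"fileName\":\"" + fileName + "\","
            + "\"fileType\":\"text\",\"path\":\"/\",\"writeMode\":\"append\"}}"
            + "}],"
            + "\"setting\":{\"speed\":{\"channel\":\"1\"}}"
            + "}}";
    }

    // Writes the job to a temporary .json file and returns its path.
    public static Path writeTempJob(String json) throws IOException {
        Path file = Files.createTempFile("datax-job-", ".json");
        Files.write(file, json.getBytes(StandardCharsets.UTF_8));
        return file;
    }

    public static void main(String[] args) throws IOException {
        String json = buildJson("jdbc:mysql://172.18.118.198:3306/test", "score", "root", "root",
                "hdfs://slave1.big:8020", "student23.txt");
        Path job = writeTempJob(json);
        // This path could then be passed as the jsonPath argument of start(...)
        System.out.println("Job written to " + job);
    }
}
```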

Done. If you have any questions, let me know in the comments.
