I. Definition

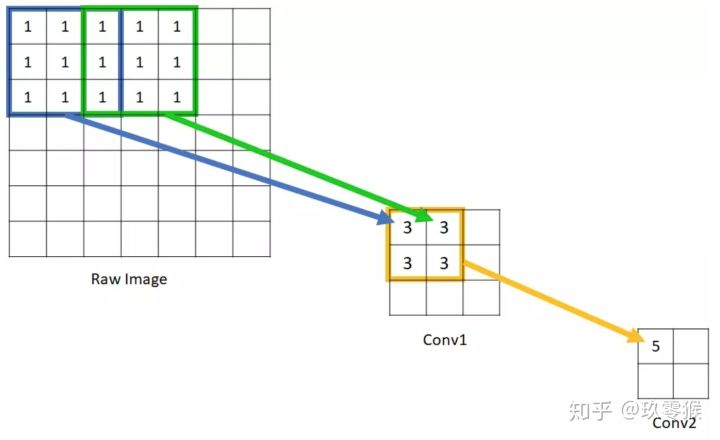

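- The receptive field is the size of the region in the original image that a pixel on a CNN's output feature map can "see"; the output feature is influenced only by the pixels inside this region.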

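In a convolutional neural network, the receptive field of a point on a feature map is the region of the input image that this point can see. In other words, the point is computed from a region of the input image whose size equals its receptive field.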

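- Spatial correlations in images are local. Just as humans perceive the outside world through local receptive fields, a neuron does not need to perceive the whole image; each neuron perceives only a local region, and higher layers combine neurons that see different local regions to obtain global information.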

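Figure from [1]

The larger a neuron's receptive field, the larger the region of the original image it can access, which means it may encode more global, semantically higher-level features. Conversely, a smaller receptive field means its features tend to be more local and detailed. The receptive field can therefore be used to roughly judge the level of abstraction of each layer [1].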

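II. Calculation

Both convolutional (conv) and pooling layers affect the receptive field, while activation layers usually do not. The stride of the current layer does not affect the current layer's receptive field (it only affects the layers after it), and the receptive field does not depend on padding. The receptive field of the current layer is computed as follows: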

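$$RF_i = RF_{i-1} + (k_i - 1) \times \prod_{j=1}^{i-1} s_j$$

where $RF_i$ is the receptive field of the current layer, $RF_{i-1}$ is the receptive field of the previous layer (with $RF_0 = 1$ for a single input pixel), $k_i$ is the kernel size of the current layer (for example, $k_i = 3$ for a 3×3 kernel), and $\prod_{j=1}^{i-1} s_j$ is the product of the strides $s_j$ of all preceding layers (excluding the current layer), given by: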

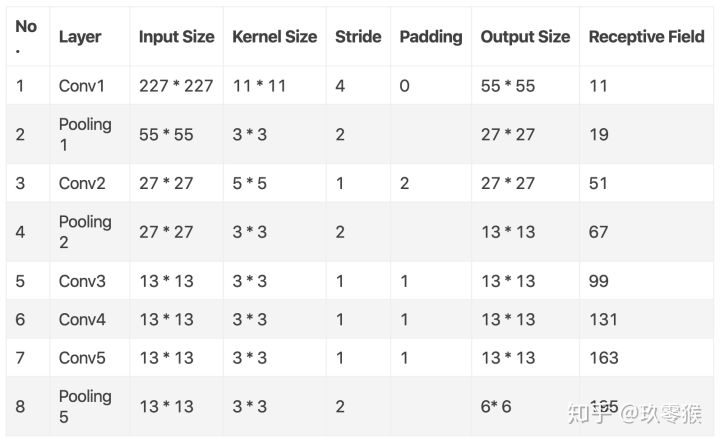
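The recursive formula above can be sketched in a few lines of Python. This is a minimal illustration, assuming each layer is described only by a `(kernel_size, stride)` pair (no dilation); the helper name `receptive_fields` and the layer list are hypothetical, chosen to mirror the first layers of VGG16 used in the code section.

```python
def receptive_fields(layers):
    """Apply RF_i = RF_{i-1} + (k_i - 1) * prod(s_1 .. s_{i-1}) layer by layer.

    `layers` is a list of (kernel_size, stride) tuples (hypothetical format).
    Returns the theoretical receptive field after each layer.
    """
    rf = 1      # RF_0: a single input pixel
    jump = 1    # product of the strides of all *previous* layers
    result = []
    for k, s in layers:
        rf = rf + (k - 1) * jump  # the current stride is not used here
        jump *= s                 # it only affects the layers that come after
        result.append(rf)
    return result


# First two VGG16 blocks: conv3x3/s1, conv3x3/s1, pool2x2/s2, repeated twice
vgg_head = [(3, 1), (3, 1), (2, 2), (3, 1), (3, 1), (2, 2)]
print(receptive_fields(vgg_head))  # [3, 5, 6, 10, 14, 16]
```

The current layer's stride only updates `jump` after that layer's receptive field has been computed, and padding never appears, which matches the remarks above. The printed values agree with the `rf_size` column of the `vgg16` output in section V.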

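Let's practice with AlexNet as an example.

AlexNet (figure from [1])

Part of my calculation:

You can check whether your receptive-field calculations are correct with the tool at this link: Receptive Field Calculator

TensorFlow's official receptive-field computation code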
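The AlexNet exercise can also be redone programmatically. The sketch below assumes the standard AlexNet layer configuration from the original paper (conv1 11×11 with stride 4, 3×3 overlapping max pooling with stride 2, conv2 5×5, conv3 to conv5 3×3, all other strides 1); the layer list `ALEXNET` and the helper `alexnet_rf` are illustrative names, not taken from the figure.

```python
# Assumed AlexNet layer configuration: (name, kernel_size, stride)
ALEXNET = [
    ("conv1", 11, 4),
    ("pool1", 3, 2),
    ("conv2", 5, 1),
    ("pool2", 3, 2),
    ("conv3", 3, 1),
    ("conv4", 3, 1),
    ("conv5", 3, 1),
    ("pool5", 3, 2),
]


def alexnet_rf(layers=ALEXNET):
    """Return {layer_name: theoretical receptive field} via the recursive formula."""
    rf, jump, table = 1, 1, {}
    for name, k, s in layers:
        rf += (k - 1) * jump  # grow by (k - 1) times the accumulated stride
        jump *= s             # accumulate the stride for the following layers
        table[name] = rf
    return table


for name, rf in alexnet_rf().items():
    print(f"{name:<6} RF = {rf}")
```

Under these assumptions the receptive fields are 11, 19, 51, 67, 99, 131, 163 and 195 for conv1 through pool5, which you can compare with your own hand calculation or with the Receptive Field Calculator.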

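III. Role

The receptive field can be used to roughly judge the level of abstraction of each layer:

A larger receptive field means the unit can access a larger region of the original image, so it may contain more global, semantically higher-level features.

A smaller receptive field means the features it contains tend to be more local and detailed.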

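Guiding network design:

General tasks: a larger receptive field is generally better. For example, in image classification the receptive field of the last convolutional layer should be larger than the input image; deeper networks have larger receptive fields, which tends to improve performance.

Object detection: anchors must be set to correspond closely to the receptive field; anchors that are too large or that deviate from the receptive field can severely hurt detection performance.

Semantic segmentation: the receptive field of each output pixel must be large enough to ensure that no important information is ignored when making a decision; here too, deeper is generally better.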

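Replacing one large convolutional layer with several small ones increases network depth (and thus capacity and complexity) while reducing the number of parameters:

By stacking several layers, small kernels (e.g., 3×3) can achieve the same receptive field as a large kernel: two stacked 3×3 layers match a 5×5 kernel, and three match a 7×7 kernel.

Intuitive illustration: stacked small kernels have the same receptive field as one large kernel while using fewer parameters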
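The parameter savings can be checked numerically. The sketch below compares n stacked 3×3 convolutions (stride 1) with a single convolution of the same receptive field, assuming C input and C output channels at every layer and ignoring biases; the function name `compare_stack` is hypothetical.

```python
def compare_stack(n_layers, channels):
    """Compare n stacked 3x3 convs (stride 1) against one large conv with the same RF.

    Assumes `channels` input and output channels per layer and no bias terms.
    Returns (receptive_field, params_stacked, params_single).
    """
    rf = 1
    for _ in range(n_layers):
        rf += 3 - 1  # stride 1, so each 3x3 layer adds 2 pixels
    params_stacked = n_layers * 3 * 3 * channels * channels
    params_single = rf * rf * channels * channels  # one rf x rf kernel
    return rf, params_stacked, params_single


for n in (2, 3):
    rf, stacked, single = compare_stack(n, channels=64)
    print(f"{n} x 3x3 -> RF {rf}: {stacked} params vs {rf}x{rf}: {single} params")
```

In general, three 3×3 layers need 27C² weights versus 49C² for one 7×7 layer, and every extra layer can also insert another nonlinearity.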

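IV. Extension: Effective Receptive Field

- In practice, the calculation above gives the theoretical receptive field; the effective receptive field of a feature is much smaller than the theoretical one.
- Moreover, the closer an input value is to the center of the receptive field, the more often it is used; values near the edge are used less often.

The receptive field given by the formula above is usually large, but the actual effective receptive field is often smaller than the theoretical one. Input points near the edge are used far less often than points in the middle, so their contributions differ; after many stacked convolution layers, the contribution of the input points to a feature-map point follows a roughly Gaussian-shaped distribution.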
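A simplified way to see why the contribution concentrates in the center: in a purely linear 1D network of stacked 3-wide kernels with all-ones weights (an assumption that ignores learned weights and nonlinearities), the number of paths from each input position to one output unit is the repeated convolution of `[1, 1, 1]` with itself. The function `path_counts` is an illustrative name.

```python
import numpy as np


def path_counts(n_layers, kernel_size=3):
    """Count how many paths connect each input position to a single output unit.

    Simplified model: 1D, stride 1, all-ones kernels, no nonlinearity.
    The result's length equals the theoretical receptive field.
    """
    counts = np.ones(1, dtype=np.int64)            # the output unit itself
    kernel = np.ones(kernel_size, dtype=np.int64)
    for _ in range(n_layers):
        counts = np.convolve(counts, kernel)       # each layer widens the support
    return counts


print(path_counts(3))  # [1 3 6 7 6 3 1]

counts = path_counts(10)
print((counts / counts.sum()).round(3))  # bell-shaped; edge weights are tiny
```

With 3 layers the theoretical receptive field is 7 pixels, yet the center pixel is reached by 7 paths and each edge pixel by only 1. As layers are added the profile approaches a Gaussian, which is one intuition for why the effective receptive field is smaller than the theoretical one.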

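In object detectors that use anchors as strong prior regions, such as Faster R-CNN and SSD, anchor sizes should be chosen with the receptive field in mind, especially the effective receptive field; anchors that are either too large or too small hurt performance (from [2]).

2. Relationship between the receptive field and anchors

Both classic object detection and recent object tracking methods use an RPN (region proposal network). Anchors are the basis of the RPN, and the receptive field is the basis of anchors.

In object detection, the sizes and aspect ratios of anchors should match the effective receptive field of the corresponding feature layer; anchors much larger or much smaller than the effective receptive field both work poorly.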

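Equal-proportion interval principle: the anchor scale is set to n (typically 4) times the stride of its layer, so that anchors of every scale are tiled on the image with the same density.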
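To make the n × stride rule concrete, the sketch below applies it to a few `vgg16_ssd` layers, assuming one square anchor per layer whose side is n = 4 times the accumulated stride. The strides and theoretical receptive fields are copied from the `vgg16_ssd` output in section V; `SSD_LAYERS` and `anchor_scales` are hypothetical names, and this is an illustration of the rule, not SSD's actual anchor configuration.

```python
# (layer name, accumulated stride, theoretical RF) from the vgg16_ssd output
SSD_LAYERS = [
    ("conv4_3", 8, 92),
    ("fc7", 16, 420),
    ("conv6_2", 32, 452),
    ("conv7_2", 64, 516),
]


def anchor_scales(layers, n=4):
    """Assign one square anchor per layer with side n * stride (assumed rule)."""
    return {name: n * stride for name, stride, _ in layers}


scales = anchor_scales(SSD_LAYERS)
for name, stride, rf in SSD_LAYERS:
    print(f"{name:<8} stride={stride:<3} anchor={scales[name]:<4} theoretical RF={rf}")
```

Because the anchors on a layer are tiled every `stride` pixels, an anchor of side 4 × stride overlaps its neighbors by the same proportion at every scale. Each resulting anchor (32, 64, 128, 256) is noticeably smaller than its layer's theoretical receptive field, which is consistent with matching anchors to the smaller effective receptive field.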

V. Code Implementation

```python
# -*- coding: utf-8 -*-
"""
@File    : compute_receptive_field.py
@Version : 1.0
"""

import numpy as np

# Each layer must specify [kernel_size, stride, pad, dilation, channel_num]
net_struct = {
    'vgg16': {'net': [[3, 1, 1, 1, 64], [3, 1, 1, 1, 64], [2, 2, 0, 1, 64], [3, 1, 1, 1, 128], [3, 1, 1, 1, 128],
                      [2, 2, 0, 1, 128],
                      [3, 1, 1, 1, 256], [3, 1, 1, 1, 256], [3, 1, 1, 1, 256], [2, 2, 0, 1, 256], [3, 1, 1, 1, 512],
                      [3, 1, 1, 1, 512],
                      [3, 1, 1, 1, 512], [2, 2, 0, 1, 512], [3, 1, 1, 1, 512], [3, 1, 1, 1, 512], [3, 1, 1, 1, 512],
                      [2, 2, 0, 1, 512]],
              'name': ['conv1_1', 'conv1_2', 'pool1', 'conv2_1', 'conv2_2', 'pool2', 'conv3_1', 'conv3_2', 'conv3_3',
                       'pool3', 'conv4_1', 'conv4_2', 'conv4_3', 'pool4', 'conv5_1', 'conv5_2', 'conv5_3', 'pool5']},
    'vgg16_ssd': {'net': [[3, 1, 1, 1, 64], [3, 1, 1, 1, 64], [2, 2, 0, 1, 64], [3, 1, 1, 1, 128], [3, 1, 1, 1, 128],
                          [2, 2, 0, 1, 128], [3, 1, 1, 1, 256], [3, 1, 1, 1, 256], [3, 1, 1, 1, 256], [2, 2, 0, 1, 256],
                          [3, 1, 1, 1, 512], [3, 1, 1, 1, 512], [3, 1, 1, 1, 512], [2, 2, 0, 1, 512], [3, 1, 1, 1, 512],
                          [3, 1, 1, 1, 512], [3, 1, 1, 1, 512], [3, 1, 1, 1, 512], [3, 1, 6, 6, 1024], [1, 1, 0, 1, 1024],
                          [1, 1, 0, 1, 256], [3, 2, 1, 1, 512], [1, 1, 0, 1, 128], [3, 2, 1, 1, 256], [1, 1, 0, 1, 128],
                          [3, 1, 0, 1, 256], [1, 1, 0, 1, 128], [3, 1, 0, 1, 256]],
                  'name': ['conv1_1', 'conv1_2', 'pool1', 'conv2_1', 'conv2_2', 'pool2', 'conv3_1', 'conv3_2', 'conv3_3',
                           'pool3', 'conv4_1', 'conv4_2', 'conv4_3', 'pool4', 'conv5_1', 'conv5_2', 'conv5_3', 'pool5',
                           'fc6', 'fc7', 'conv6_1', 'conv6_2', 'conv7_1', 'conv7_2', 'conv8_1', 'conv8_2', 'conv9_1',
                           'conv9_2']}
}


# Output feature-map size (bottom-up calculation)
def out_from_in(img_size_f, net_p, net_n, layer_len):
    total_stride = 1
    in_size = img_size_f
    for layer in range(layer_len):
        k_size, stride, pad, dilation, _ = net_p[layer]
        net_name = net_n[layer]
        # dilation * (k_size - 1) + 1 is the effective size of a dilated kernel
        if 'pool' in net_name:
            # Pooling layers round up (ceil mode)
            out_size = np.ceil(1.0 * (in_size + 2 * pad - dilation * (k_size - 1) - 1) / stride).astype(np.int32) + 1
        else:
            # Convolution layers round down
            out_size = np.floor(1.0 * (in_size + 2 * pad - dilation * (k_size - 1) - 1) / stride).astype(np.int32) + 1
        in_size = out_size
        total_stride = total_stride * stride
    return out_size, total_stride


# Receptive field of each pixel on the output feature map (top-down calculation)
def in_from_out(net_p, layer_len):
    RF = 1
    for layer in reversed(range(layer_len)):
        k_size, stride, pad, dilation, _ = net_p[layer]
        # Map a receptive field on this layer's output back onto its input
        RF = ((RF - 1) * stride) + dilation * (k_size - 1) + 1
    return RF


if __name__ == '__main__':
    img_size = 300
    print("layer output sizes given image = %dx%d" % (img_size, img_size))
    for net in net_struct.keys():
        print('************net structure name is %s**************' % net)
        for i in range(len(net_struct[net]['net'])):
            p = out_from_in(img_size, net_struct[net]['net'], net_struct[net]['name'], i + 1)
            rf = in_from_out(net_struct[net]['net'], i + 1)
            print(
                "layer_name = {:<8} output_size = {:<4} total_stride = {:<3} output_channel = {:<4} rf_size = {:<4}".format(
                    net_struct[net]['name'][i], p[0], p[1], net_struct[net]['net'][i][4], rf)
            )
```

Output for vgg16:

```text
layer output sizes given image = 300x300
************net structure name is vgg16**************
layer_name = conv1_1 output_size = 300 total_stride = 1 output_channel = 64 rf_size = 3
layer_name = conv1_2 output_size = 300 total_stride = 1 output_channel = 64 rf_size = 5
layer_name = pool1 output_size = 150 total_stride = 2 output_channel = 64 rf_size = 6
layer_name = conv2_1 output_size = 150 total_stride = 2 output_channel = 128 rf_size = 10
layer_name = conv2_2 output_size = 150 total_stride = 2 output_channel = 128 rf_size = 14
layer_name = pool2 output_size = 75 total_stride = 4 output_channel = 128 rf_size = 16
layer_name = conv3_1 output_size = 75 total_stride = 4 output_channel = 256 rf_size = 24
layer_name = conv3_2 output_size = 75 total_stride = 4 output_channel = 256 rf_size = 32
layer_name = conv3_3 output_size = 75 total_stride = 4 output_channel = 256 rf_size = 40
layer_name = pool3 output_size = 38 total_stride = 8 output_channel = 256 rf_size = 44
layer_name = conv4_1 output_size = 38 total_stride = 8 output_channel = 512 rf_size = 60
layer_name = conv4_2 output_size = 38 total_stride = 8 output_channel = 512 rf_size = 76
layer_name = conv4_3 output_size = 38 total_stride = 8 output_channel = 512 rf_size = 92
layer_name = pool4 output_size = 19 total_stride = 16 output_channel = 512 rf_size = 100
layer_name = conv5_1 output_size = 19 total_stride = 16 output_channel = 512 rf_size = 132
layer_name = conv5_2 output_size = 19 total_stride = 16 output_channel = 512 rf_size = 164
layer_name = conv5_3 output_size = 19 total_stride = 16 output_channel = 512 rf_size = 196
layer_name = pool5 output_size = 10 total_stride = 32 output_channel = 512 rf_size = 212
```

Output for vgg16_ssd:

```text
************net structure name is vgg16_ssd**************
layer_name = conv1_1 output_size = 300 total_stride = 1 output_channel = 64 rf_size = 3
layer_name = conv1_2 output_size = 300 total_stride = 1 output_channel = 64 rf_size = 5
layer_name = pool1 output_size = 150 total_stride = 2 output_channel = 64 rf_size = 6
layer_name = conv2_1 output_size = 150 total_stride = 2 output_channel = 128 rf_size = 10
layer_name = conv2_2 output_size = 150 total_stride = 2 output_channel = 128 rf_size = 14
layer_name = pool2 output_size = 75 total_stride = 4 output_channel = 128 rf_size = 16
layer_name = conv3_1 output_size = 75 total_stride = 4 output_channel = 256 rf_size = 24
layer_name = conv3_2 output_size = 75 total_stride = 4 output_channel = 256 rf_size = 32
layer_name = conv3_3 output_size = 75 total_stride = 4 output_channel = 256 rf_size = 40
layer_name = pool3 output_size = 38 total_stride = 8 output_channel = 256 rf_size = 44
layer_name = conv4_1 output_size = 38 total_stride = 8 output_channel = 512 rf_size = 60
layer_name = conv4_2 output_size = 38 total_stride = 8 output_channel = 512 rf_size = 76
layer_name = conv4_3 output_size = 38 total_stride = 8 output_channel = 512 rf_size = 92
layer_name = pool4 output_size = 19 total_stride = 16 output_channel = 512 rf_size = 100
layer_name = conv5_1 output_size = 19 total_stride = 16 output_channel = 512 rf_size = 132
layer_name = conv5_2 output_size = 19 total_stride = 16 output_channel = 512 rf_size = 164
layer_name = conv5_3 output_size = 19 total_stride = 16 output_channel = 512 rf_size = 196
layer_name = pool5 output_size = 19 total_stride = 16 output_channel = 512 rf_size = 228
layer_name = fc6 output_size = 19 total_stride = 16 output_channel = 1024 rf_size = 420
layer_name = fc7 output_size = 19 total_stride = 16 output_channel = 1024 rf_size = 420
layer_name = conv6_1 output_size = 19 total_stride = 16 output_channel = 256 rf_size = 420
layer_name = conv6_2 output_size = 10 total_stride = 32 output_channel = 512 rf_size = 452
layer_name = conv7_1 output_size = 10 total_stride = 32 output_channel = 128 rf_size = 452
layer_name = conv7_2 output_size = 5 total_stride = 64 output_channel = 256 rf_size = 516
layer_name = conv8_1 output_size = 5 total_stride = 64 output_channel = 128 rf_size = 516
layer_name = conv8_2 output_size = 3 total_stride = 64 output_channel = 256 rf_size = 644
layer_name = conv9_1 output_size = 3 total_stride = 64 output_channel = 128 rf_size = 644
layer_name = conv9_2 output_size = 1 total_stride = 64 output_channel = 256 rf_size = 772
```
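The jump in `rf_size` from 228 (`pool5`) to 420 (`fc6`) in the `vgg16_ssd` output comes from dilation. The code treats a dilated kernel as an ordinary kernel of effective size $k' = d(k-1)+1$; with $k = 3$, $d = 6$ and an accumulated stride of 16 (read from the output), the recursive formula from section II gives:

$$k' = 6 \times (3 - 1) + 1 = 13, \qquad RF_{\text{fc6}} = 228 + (13 - 1) \times 16 = 420$$

The 1×1 layers that follow (`fc7`, `conv6_1`) add $(1 - 1) \times \prod s_j = 0$, so their receptive field stays at 420.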

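References

目标检测和感受野的总结和想法 (Summary and thoughts on object detection and receptive fields), Zhihu (zhihu.com)

[1] 关于感受野的理解与计算 (Understanding and calculating the receptive field): https://www.jianshu.com/p/9997c6f5c01e

[2] 《深度学习之PyTorch物体检测实战》 (Deep Learning Object Detection in Practice with PyTorch), book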

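This article introduces the concept of the receptive field in convolutional neural networks, including its definition, how to compute it, and its role in tasks such as image classification, object detection, and semantic segmentation. The size of the receptive field affects a network's ability to capture global and local features: a larger receptive field corresponds to more global features, while a smaller one focuses on details. It also discusses the effective receptive field, which is usually smaller than the theoretical receptive field because input pixels are used with different frequencies. Finally, it works through an AlexNet example and provides code for computing the receptive fields of VGG16 and VGG16-SSD.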
