Docker's Network Modes

1. bridge

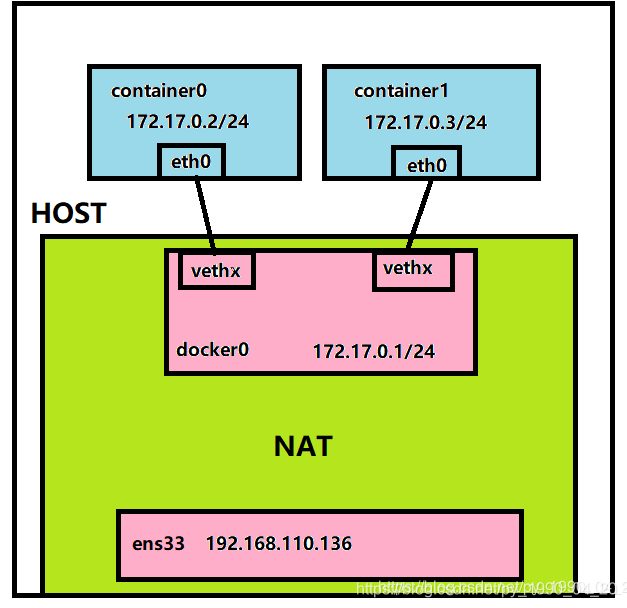

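When the Docker daemon starts, it creates a virtual bridge named docker0 on the host, and containers started on that host attach to this bridge by default. A virtual bridge works much like a physical switch, so all containers on the host end up on the same layer-2 network. Docker assigns each container an IP address from the docker0 subnet and sets docker0's IP address as the container's default gateway.

For each container, Docker creates a veth pair (a pair of linked virtual network interfaces) on the host. One end is placed inside the container and named eth0 (the container's network interface). The other end stays on the host with a name such as vethxxx and is added to the docker0 bridge. You can check this with the brctl show command.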

    [root@localhost /]# brctl show
    bridge name     bridge id               STP enabled     interfaces
    docker0         8000.02420fde8acb       no              veth10e3061
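
brctl comes from the bridge-utils package, which is not always installed on current distributions. As a hedged alternative, the sketch below reads the same bridge membership from sysfs. `bridge_members` is a hypothetical helper name, and the sketch assumes a Linux host where the kernel lists bridge ports under `/sys/class/net/<bridge>/brif`.

```shell
#!/bin/sh
# bridge_members: print the interfaces attached to a Linux bridge.
# Assumption: Linux host; the kernel exposes bridge ports under
# /sys/class/net/<bridge>/brif. The helper name is illustrative only.
bridge_members() {
    bridge="$1"
    dir="/sys/class/net/$bridge/brif"
    if [ ! -d "$dir" ]; then
        echo "not a bridge (or not present): $bridge" >&2
        return 1
    fi
    ls "$dir"
}

# Example: bridge_members docker0
# With iproute2 installed, `ip link show master docker0` gives a similar view.
```

On the host used in this article, `bridge_members docker0` would be expected to list the same veth name that brctl show reports.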

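bridge is Docker's default network mode: if you run docker run without --net (or --network), the container uses this mode. When you run docker run -d -p 80:80 nginx, Docker adds a DNAT rule in iptables to forward the port. You can view it with iptables -t nat -vnL. The figure in this section illustrates bridge mode.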
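
To make the port-forwarding step concrete, here is a minimal sketch that publishes a port and then prints the NAT rules. It assumes Docker's default iptables integration, root privileges for iptables, and that host port 8080 is free. The function name and the container name `web-dnat-demo` are made up for this example.

```shell
#!/bin/sh
# show_published_port: start nginx with a published port and print the
# NAT rules Docker created for it.
# Assumptions: Docker with its default iptables integration, root
# privileges for iptables, and host port 8080 being free. The container
# name "web-dnat-demo" is made up for this example.
show_published_port() {
    docker run -d --name web-dnat-demo -p 8080:80 nginx
    # Docker's own summary of the mapping (container port -> host address)
    docker port web-dnat-demo
    # Docker keeps its DNAT rules in the DOCKER chain of the nat table
    iptables -t nat -nL DOCKER
    # Clean up the demo container
    docker rm -f web-dnat-demo
}
```

Call `show_published_port` from a root shell. The DOCKER chain should contain a DNAT entry that points host port 8080 at the container's port 80.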

Demo:

First, create a user-defined bridge network:

docker network create -d bridge my-net

[root@localhost docker]# docker network create -d bridge my-net

ee2f3aa673263ba5439c2b9c4d339f6be43a5c665d06741f645c3cc8215baf15

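-d specifies the network driver. The two drivers relevant here are bridge and overlay; overlay is intended for Swarm mode.

Run a container attached to the new network: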

docker run -it --rm --name busybox1 --network my-net busybox sh

[root@localhost docker]# docker run -it --rm --name busybox1 --network my-net busybox sh

Unable to find image 'busybox:latest' locally

latest: Pulling from library/busybox

d9cbbca60e5f: Pull complete

Digest: sha256:836945da1f3afe2cfff376d379852bbb82e0237cb2925d53a13f53d6e8a8c48c

Status: Downloaded newer image for busybox:latest

/ #

Open another terminal and run a second container attached to the same network:

docker run -it --rm --name busybox2 --network my-net busybox sh

[root@localhost ~]# docker run -it --rm --name busybox2 --network my-net busybox sh

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:AC:12:00:03

inet addr:172.18.0.3 Bcast:172.18.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:8 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:656 (656.0 B) TX bytes:0 (0.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

/ # ping 172.18.0.2

PING 172.18.0.2 (172.18.0.2): 56 data bytes

64 bytes from 172.18.0.2: seq=0 ttl=64 time=0.059 ms

64 bytes from 172.18.0.2: seq=1 ttl=64 time=0.068 ms

64 bytes from 172.18.0.2: seq=2 ttl=64 time=0.064 ms

64 bytes from 172.18.0.2: seq=3 ttl=64 time=0.065 ms

64 bytes from 172.18.0.2: seq=4 ttl=64 time=0.078 ms

^C

--- 172.18.0.2 ping statistics ---

5 packets transmitted, 5 packets received, 0% packet loss

round-trip min/avg/max = 0.059/0.066/0.078 ms

/ #

From busybox1, the first container, ping busybox2:

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:AC:12:00:02

inet addr:172.18.0.2 Bcast:172.18.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:16 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:1312 (1.2 KiB) TX bytes:0 (0.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

/ # ping 172.18.0.3

PING 172.18.0.3 (172.18.0.3): 56 data bytes

64 bytes from 172.18.0.3: seq=0 ttl=64 time=0.202 ms

64 bytes from 172.18.0.3: seq=1 ttl=64 time=0.061 ms

64 bytes from 172.18.0.3: seq=2 ttl=64 time=0.315 ms

64 bytes from 172.18.0.3: seq=3 ttl=64 time=0.068 ms

^C

--- 172.18.0.3 ping statistics ---

4 packets transmitted, 4 packets received, 0% packet loss

round-trip min/avg/max = 0.061/0.161/0.315 ms

/ #
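
The pings above use IP addresses. On a user-defined bridge such as my-net, Docker also resolves container names through its embedded DNS server, which the default bridge network does not do. The sketch below shows one way to try this. It assumes my-net still exists, and the function name and the container name `peer-demo` are invented for the example.

```shell
#!/bin/sh
# ping_by_name: ping one container from another using its name.
# Assumptions: the my-net network from this walkthrough exists; the
# container name "peer-demo" is invented for this sketch.
ping_by_name() {
    # Long-running target container attached to my-net
    docker run -d --rm --name peer-demo --network my-net busybox sleep 300
    # A second container resolves "peer-demo" through Docker's embedded DNS
    docker run --rm --network my-net busybox ping -c 3 peer-demo
    # Stopping the target also removes it because of --rm
    docker stop peer-demo
}
```

Using names rather than addresses keeps the test independent of whichever IP Docker happens to assign.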

Check the virtual bridges on the host:

br-ee2f3aa67326 is the bridge that backs my-net.

[root@localhost ~]# ifconfig

br-ee2f3aa67326: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.18.0.1 netmask 255.255.0.0 broadcast 172.18.255.255

inet6 fe80::42:60ff:febf:82f2 prefixlen 64 scopeid 0x20<link>

ether 02:42:60:bf:82:f2 txqueuelen 0 (Ethernet)

RX packets 12 bytes 1008 (1008.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 28 bytes 2320 (2.2 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

docker0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 0.0.0.0

inet6 fe80::42:fff:fede:8acb prefixlen 64 scopeid 0x20<link>

ether 02:42:0f:de:8a:cb txqueuelen 0 (Ethernet)

RX packets 173 bytes 13867 (13.5 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 113 bytes 9658 (9.4 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.110.136 netmask 255.255.255.0 broadcast 192.168.110.255

inet6 fe80::2e65:af6f:2d5d:5acc prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:33:99:ac txqueuelen 1000 (Ethernet)

RX packets 1048441 bytes 907093889 (865.0 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 613943 bytes 67627865 (64.4 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 214 bytes 20449 (19.9 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 214 bytes 20449 (19.9 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

veth1843878: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet6 fe80::d876:fcff:fea7:5228 prefixlen 64 scopeid 0x20<link>

ether da:76:fc:a7:52:28 txqueuelen 0 (Ethernet)

RX packets 12 bytes 1008 (1008.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 28 bytes 2320 (2.2 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

veth10e3061: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet6 fe80::54d5:32ff:fece:c43d prefixlen 64 scopeid 0x20<link>

ether 56:d5:32:ce:c4:3d txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 8 bytes 656 (656.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

veth71126a7: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet6 fe80::5094:6fff:fe2e:5b20 prefixlen 64 scopeid 0x20<link>

ether 52:94:6f:2e:5b:20 txqueuelen 0 (Ethernet)

RX packets 12 bytes 1008 (1008.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 20 bytes 1664 (1.6 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@localhost ~]#

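The busybox addresses are in 172.18.x.x rather than 172.17.x.x because the nginx container uses the default bridge network (docker0, 172.17.0.0/16), while the new busybox containers use my-net, which has its own subnet.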
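
Rather than inferring the subnets from ifconfig, you can ask Docker for them. The helper below is a small sketch; `network_subnet` is a hypothetical name, and the Go template assumes the usual layout of `docker network inspect` output.

```shell
#!/bin/sh
# network_subnet: print "<subnet> <gateway>" for a Docker network.
# The helper name is illustrative; the template fields come from the
# IPAM section of `docker network inspect`.
network_subnet() {
    docker network inspect \
        -f '{{range .IPAM.Config}}{{.Subnet}} {{.Gateway}}{{end}}' "$1"
}

# Example:
#   network_subnet bridge
#   network_subnet my-net
# To pick the range yourself instead of taking Docker's choice
# (172.30.0.0/16 and the name my-net2 are arbitrary example values):
#   docker network create -d bridge --subnet 172.30.0.0/16 --gateway 172.30.0.1 my-net2
```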

docker network ls

    [root@localhost docker]# docker network ls
    NETWORK ID          NAME      DRIVER    SCOPE
    26a939b44652        bridge    bridge    local
    4c49d87c423a        host      host      local
    ee2f3aa67326        my-net    bridge    local
    38981edf292a        none      null      local

Inspect a container; here it is the nginx container:

docker inspect gracious_roentgen

    [root@localhost ~]# brctl show
    bridge name         bridge id            STP enabled   interfaces
    br-ee2f3aa67326     8000.024260bf82f2    no            veth1843878
                                                           veth71126a7
    docker0             8000.02420fde8acb    no            veth10e3061

    [root@localhost ~]# docker ps
    CONTAINER ID   IMAGE          COMMAND                  CREATED             STATUS             PORTS                NAMES
    498013203fe7   busybox        "sh"                     18 minutes ago      Up 18 minutes                           busybox2
    c24a3737ca3c   busybox        "sh"                     19 minutes ago      Up 19 minutes                           busybox1
    53450637c41a   nginx:latest   "nginx -g 'daemon of…"   About an hour ago   Up About an hour   0.0.0.0:80->80/tcp   gracious_roentgen

[root@localhost ~]# docker inspect gracious_roentgen

[

{

...

"NetworkSettings": {

...

"Networks": {

"bridge": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "26a939b44652765b61581ef9de0859c5861788c49bcf4fec4ece68215cd95073",

"EndpointID": "816bfdb505ca0bb720440d608a4c36acdb1731accf7bc1c8a98d6d52f69096ac",

"Gateway": "172.17.0.1",

"IPAddress": "172.17.0.2",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "02:42:ac:11:00:02",

"DriverOpts": null

}

}

}

}

]

[root@localhost ~]#
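
The full inspect output is long, so a format template can pull out just the address. The sketch below also rebuilds the MAC address from the IP, because in every transcript in this article the MAC is 02:42 followed by the four IPv4 bytes in hex. That second helper reflects an observation from these transcripts and is not a guarantee for every Docker version. Both helper names are hypothetical.

```shell
#!/bin/sh
# container_ip: print the IPv4 address of a container on each attached network.
container_ip() {
    docker inspect \
        -f '{{range .NetworkSettings.Networks}}{{.IPAddress}} {{end}}' "$1"
}

# ip_to_mac: rebuild the MAC seen in this article from an IPv4 address
# (02:42 followed by the four address bytes in hex). This mirrors the
# transcripts here; it is an observation, not a guarantee for every
# Docker version or for containers started with --mac-address.
ip_to_mac() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split "a.b.c.d" into $1..$4
    IFS=$old_ifs
    printf '02:42:%02x:%02x:%02x:%02x\n' "$1" "$2" "$3" "$4"
}

# Example: ip_to_mac 172.17.0.2   # prints 02:42:ac:11:00:02
```

For the nginx container above, `container_ip gracious_roentgen` should report the same 172.17.0.2 that appears under "IPAddress".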

Remove the user-defined bridge network:

docker network rm ee2f3aa67326

[root@localhost docker]# docker network rm ee2f3aa67326

ee2f3aa67326
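
docker network rm refuses to delete a network that still has containers attached. The removal above works once the busybox containers have exited. As a sketch of a more defensive cleanup, the hypothetical helper below disconnects any remaining containers first; it assumes that forcibly detaching them is acceptable.

```shell
#!/bin/sh
# remove_network: detach any remaining containers, then delete the network.
# Assumption: forcibly disconnecting the containers is acceptable.
remove_network() {
    net="$1"
    # .Containers maps container IDs to endpoint details, including .Name
    names=$(docker network inspect -f '{{range .Containers}}{{.Name}} {{end}}' "$net")
    for name in $names; do
        docker network disconnect -f "$net" "$name"
    done
    docker network rm "$net"
}

# Example: remove_network my-net
```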

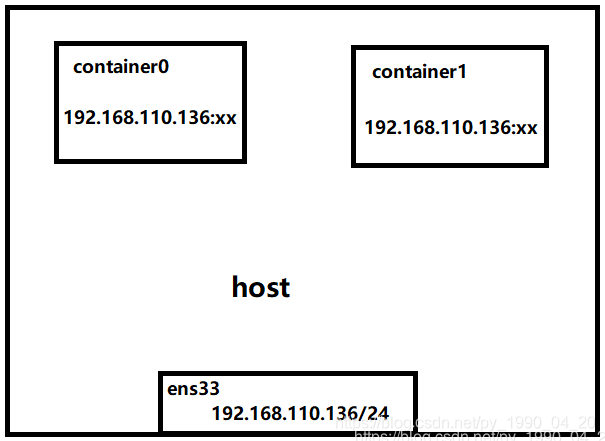

2. host

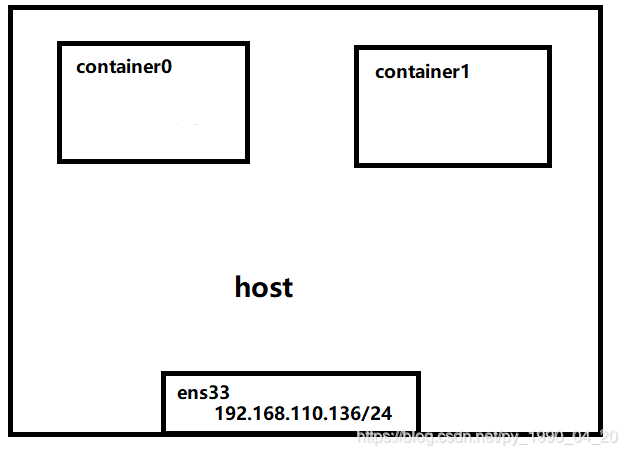

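If a container is started in host mode, it does not get its own Network Namespace; it shares the host's Network Namespace instead. The container does not create its own virtual network interface or configure its own IP address; it uses the host's IP address and ports directly. Other aspects of the container, such as the filesystem and process list, remain isolated from the host. The figure in this section illustrates host mode.

Run a container in host mode and you will see that its network view is identical to the host's: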

docker run -it --rm --name busybox1 --net=host busybox sh

[root@localhost ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

[root@localhost ~]# docker run -it --rm --name busybox1 --net=host busybox sh

/ # ifconfig

docker0 Link encap:Ethernet HWaddr 02:42:0F:DE:8A:CB

inet addr:172.17.0.1 Bcast:0.0.0.0 Mask:255.255.0.0

inet6 addr: fe80::42:fff:fede:8acb/64 Scope:Link

UP BROADCAST MULTICAST MTU:1500 Metric:1

RX packets:173 errors:0 dropped:0 overruns:0 frame:0

TX packets:113 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:13867 (13.5 KiB) TX bytes:9658 (9.4 KiB)

ens33 Link encap:Ethernet HWaddr 00:0C:29:33:99:AC

inet addr:192.168.110.136 Bcast:192.168.110.255 Mask:255.255.255.0

inet6 addr: fe80::2e65:af6f:2d5d:5acc/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:1060021 errors:0 dropped:0 overruns:0 frame:0

TX packets:630427 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:908344370 (866.2 MiB) TX bytes:69546957 (66.3 MiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:214 errors:0 dropped:0 overruns:0 frame:0

TX packets:214 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:20449 (19.9 KiB) TX bytes:20449 (19.9 KiB)

/ #
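
Matching ifconfig output is strong evidence, but the namespace identity can also be compared directly. The sketch below prints the network namespace link as seen by the host shell and by a --net=host container. It assumes a Linux host with /proc mounted and that the container is allowed to read its own /proc/self/ns/net link; `compare_netns` is a hypothetical helper name.

```shell
#!/bin/sh
# compare_netns: print the network namespace ID seen by the host shell and
# by a --net=host container.
# Assumptions: Linux host with /proc mounted; busybox image available.
compare_netns() {
    host_ns=$(readlink /proc/self/ns/net 2>/dev/null || echo unknown)
    ctr_ns=$(docker run --rm --net=host busybox readlink /proc/self/ns/net)
    echo "host:      $host_ns"
    echo "container: $ctr_ns"
}
```

In host mode the two lines should show the same identifier. Dropping --net=host from the docker run line should produce a different one, since a bridge-mode container gets its own Network Namespace.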

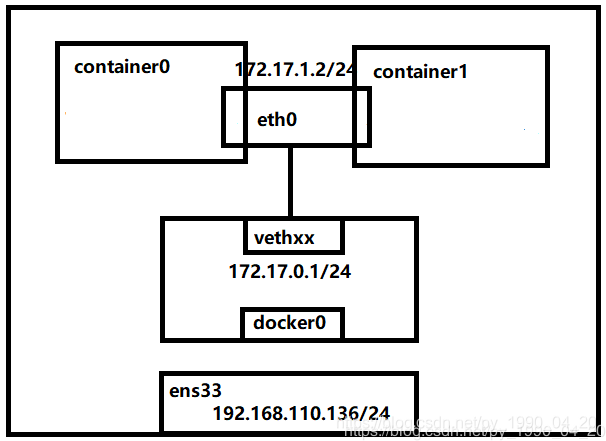

3. container

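This mode makes a new container share the Network Namespace of an existing container rather than the host's. The new container does not create its own network interface or configure its own IP address; it shares the IP address, port range, and so on with the specified container. As before, apart from networking, the two containers remain isolated in other respects such as the filesystem and process list. Processes in the two containers can communicate over the lo interface. Container mode diagram:

Run a container named busybox1: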

docker run -it --rm --name busybox1 busybox sh

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

[root@localhost ~]# docker run -it --rm --name busybox1 busybox sh

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:AC:11:00:02

inet addr:172.17.0.2 Bcast:172.17.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:8 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:656 (656.0 B) TX bytes:0 (0.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

/ #

Run busybox2 using busybox1's network:

docker run -it --rm --name busybox2 --net=container:busybox1 busybox sh

[root@localhost ~]# docker run -it --rm --name busybox2 --net=container:busybox1 busybox sh

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:AC:11:00:02

inet addr:172.17.0.2 Bcast:172.17.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:8 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:656 (656.0 B) TX bytes:0 (0.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

/ #

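While both containers are still running, check the host's network interfaces. Only one veth interface (veth0ebcc94) is present, because busybox2 reuses busybox1's interface instead of getting its own: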

[root@localhost ~]# ifconfig

docker0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 0.0.0.0

inet6 fe80::42:fff:fede:8acb prefixlen 64 scopeid 0x20<link>

ether 02:42:0f:de:8a:cb txqueuelen 0 (Ethernet)

RX packets 173 bytes 13867 (13.5 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 113 bytes 9658 (9.4 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.110.136 netmask 255.255.255.0 broadcast 192.168.110.255

inet6 fe80::2e65:af6f:2d5d:5acc prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:33:99:ac txqueuelen 1000 (Ethernet)

RX packets 1063057 bytes 908801394 (866.7 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 633428 bytes 69913689 (66.6 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 214 bytes 20449 (19.9 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 214 bytes 20449 (19.9 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

veth0ebcc94: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet6 fe80::b459:3aff:fe1d:f652 prefixlen 64 scopeid 0x20<link>

ether b6:59:3a:1d:f6:52 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 8 bytes 656 (656.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
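
To check the claim that processes in the two containers can talk over lo, the following sketch starts nginx in one container and fetches its default page from a second container through 127.0.0.1. It assumes the nginx and busybox images are available; the function name and the container name `web-shared-demo` are invented for the example.

```shell
#!/bin/sh
# fetch_over_lo: reach a server in one container from a second container
# through 127.0.0.1, using --net=container:<name>.
# Assumptions: nginx and busybox images are available; the name
# "web-shared-demo" is invented for this sketch.
fetch_over_lo() {
    docker run -d --rm --name web-shared-demo nginx
    # Give nginx a moment to start listening
    sleep 2
    # Same Network Namespace, so nginx's port 80 is on this container's lo too
    docker run --rm --net=container:web-shared-demo busybox \
        wget -q -O - http://127.0.0.1:80
    docker stop web-shared-demo
}
```

No port is published here, so the page is reachable only from inside the shared Network Namespace.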

4. none

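In none mode, a Docker container has its own Network Namespace, but Docker does not configure any networking for it. The container has no network interface other than lo, no IP address, and no routes; you have to add an interface and configure an IP address yourself. None mode diagram:

Run a container with the none network configuration: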

docker run -it --rm --name busybox --net=none busybox sh

[root@localhost ~]# docker run -it --rm --name busybox --net=none busybox sh

/ # ifconfig

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

/ #
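
Adding an interface by hand, as described above, could look roughly like the sketch below. It is one possible approach and not something Docker does for you: it creates a veth pair, moves one end into the container's Network Namespace, and assigns addresses to both ends. It assumes a root shell on a Linux host with iproute2 and nsenter. The function name, the interface names, and the 10.10.0.0/24 range are arbitrary choices for illustration.

```shell
#!/bin/sh
# wire_none_container: give a --net=none container an interface by hand.
# Assumptions: run as root on a Linux host with iproute2 and nsenter;
# the interface names and the 10.10.0.0/24 range are arbitrary examples.
wire_none_container() {
    ctr="$1"
    # PID of the container's main process, used to address its namespace
    pid=$(docker inspect -f '{{.State.Pid}}' "$ctr")
    # Create a veth pair on the host
    ip link add veth-host0 type veth peer name veth-ctr0
    # Move one end into the container's Network Namespace
    ip link set veth-ctr0 netns "$pid"
    # Configure the host end
    ip addr add 10.10.0.1/24 dev veth-host0
    ip link set veth-host0 up
    # Configure the container end from inside its namespace
    nsenter -t "$pid" -n ip addr add 10.10.0.2/24 dev veth-ctr0
    nsenter -t "$pid" -n ip link set veth-ctr0 up
}

# Example: wire_none_container busybox
```

After running it against the container started above, ifconfig inside the container should list veth-ctr0 next to lo, and the host end should answer on 10.10.0.1.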

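This article covered Docker's four network modes: bridge (the default, where containers communicate through a virtual bridge), host (the container shares the host's network namespace and uses the host's IP address and ports directly), container (the new container shares an existing container's Network Namespace), and none (no network configuration; it must be set up manually), with the working principle and a worked example for each.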
