太抽象了 我看不懂

认知模块还改吗“E:\AI_System\agent\cognitive_architecture.py

智能体认知架构模块 - 修复基类导入问题并优化决策系统

import os

import time

import random

import logging

from datetime import datetime

from pathlib import Path

import sys

添加项目根目录到路径

sys.path.append(str(Path(file).parent.parent))

配置日志

logger = logging.getLogger(‘CognitiveArchitecture’)

logger.setLevel(logging.INFO)

handler = logging.StreamHandler()

formatter = logging.Formatter(‘%(asctime)s - %(name)s - %(levelname)s - %(message)s’)

handler.setFormatter(formatter)

logger.addHandler(handler)

logger.propagate = False # 防止日志向上传播

修复基类导入问题 - 使用绝对路径导入

try:

# 尝试从core包导入基类

from core.base_module import CognitiveModule

logger.info(“✅ 成功从core.base_module导入CognitiveModule基类”)

except ImportError as e:

logger.error(f"❌ 无法从core.base_module导入CognitiveModule基类: {str(e)}“)

try:

# 备选导入路径

from .base_model import CognitiveModule

logger.info(”✅ 从agent.base_model导入CognitiveModule基类")

except ImportError as e:

logger.error(f"❌ 备选导入失败: {str(e)}“)

# 创建占位符基类

logger.warning(”⚠️ 创建占位符CognitiveModule基类")

class CognitiveModule: def __init__(self, name): self.name = name self.logger = logging.getLogger(name) self.logger.warning("⚠️ 使用占位符基类") def get_status(self): return {"name": self.name, "status": "unknown (placeholder)"}

尝试导入自我认知模块

try:

# 使用相对导入

from .digital_body_schema import DigitalBodySchema

from .self_referential_framework import SelfReferentialFramework

from .self_narrative_generator import SelfNarrativeGenerator

logger.info(“✅ 成功导入自我认知模块”)

except ImportError as e:

logger.error(f"❌ 自我认知模块导入失败: {str(e)}“)

logger.warning(”⚠️ 使用占位符自我认知模块")

# 创建占位符类 class DigitalBodySchema: def __init__(self): self.self_map = {"boundary_strength": 0.5, "self_awareness": 0.3} logger.warning("⚠️ 使用占位符DigitalBodySchema") def is_part_of_self(self, stimulus): return False def strengthen_boundary(self, source): self.self_map["boundary_strength"] = min(1.0, self.self_map["boundary_strength"] + 0.1) def get_self_map(self): return self.self_map.copy() class SelfReferentialFramework: def __init__(self): self.self_model = {"traits": {}, "beliefs": []} logger.warning("⚠️ 使用占位符SelfReferentialFramework") def update_self_model(self, stimulus): if "content" in stimulus and "text" in stimulus["content"]: text = stimulus["content"]["text"] if "I am" in text or "my" in text.lower(): self.self_model["self_reflection_count"] = self.self_model.get("self_reflection_count", 0) + 1 def get_self_model(self): return self.self_model.copy() class SelfNarrativeGenerator: def __init__(self): self.recent_stories = [] logger.warning("⚠️ 使用占位符SelfNarrativeGenerator") def generate_self_story(self, self_model): story = f"这是一个关于自我的故事。自我反思次数: {self_model.get('self_reflection_count', 0)}" self.recent_stories.append(story) if len(self.recent_stories) > 5: self.recent_stories.pop(0) return story def get_recent_stories(self): return self.recent_stories.copy()

增强决策系统实现

class DecisionSystem:

“”“增强版决策系统”“”

STRATEGY_WEIGHTS = { "honest": 0.7, "deception": 0.1, "evasion": 0.1, "redirection": 0.05, "partial_disclosure": 0.05 } def __init__(self, trust_threshold=0.6): self.trust_threshold = trust_threshold self.strategy_history = [] def make_decision(self, context): """根据上下文做出智能决策""" user_model = context.get("user_model", {}) bodily_state = context.get("bodily_state", {}) # 计算信任因子 trust_factor = user_model.get("trust_level", 0.5) # 计算身体状态影响因子 capacity = bodily_state.get("capacity", 1.0) state_factor = min(1.0, capacity * 1.2) # 决策逻辑 if trust_factor > self.trust_threshold: # 高信任度用户使用诚实策略 strategy = "honest" reason = "用户信任度高" elif capacity < 0.5: # 系统资源不足时使用简化策略 strategy = random.choices( ["honest", "partial_disclosure", "evasion"], weights=[0.5, 0.3, 0.2] )[0] reason = "系统资源不足,使用简化策略" else: # 根据策略权重选择 strategies = list(self.STRATEGY_WEIGHTS.keys()) weights = [self.STRATEGY_WEIGHTS[s] * state_factor for s in strategies] strategy = random.choices(strategies, weights=weights)[0] reason = f"根据策略权重选择: {strategy}" # 记录决策历史 self.strategy_history.append({ "timestamp": datetime.now(), "strategy": strategy, "reason": reason, "context": context }) return { "type": "strategic" if strategy != "honest" else "honest", "strategy": strategy, "reason": reason } def get_strategy_history(self, count=10): """获取最近的决策历史""" return self.strategy_history[-count:]

class Strategy:

“”“策略基类”“”

pass

class CognitiveSystem(CognitiveModule):

def init(self, agent, affective_system=None):

“”"

三维整合的认知架构

:param agent: 智能体实例,用于访问其他系统

:param affective_system: 可选的情感系统实例

“”"

# 调用父类初始化

super().init(“cognitive_system”)

self.agent = agent

self.affective_system = affective_system

# 原有的初始化代码 self.initialized = False # 通过agent引用其他系统 self.memory_system = agent.memory_system self.model_manager = agent.model_manager self.health_system = agent.health_system # 优先使用传入的情感系统,否则使用agent的 if affective_system is not None: self.affective_system = affective_system else: self.affective_system = agent.affective_system self.learning_tasks = [] # 当前学习任务队列 self.thought_process = [] # 思考过程记录 # 初始化决策系统 self.decision_system = DecisionSystem() # 初始化认知状态 self.cognitive_layers = { "perception": 0.5, # 感知层 "comprehension": 0.3, # 理解层 "reasoning": 0.2, # 推理层 "decision": 0.4 # 决策层 } # 添加自我认知模块 self.self_schema = DigitalBodySchema() self.self_reflection = SelfReferentialFramework() self.narrative_self = SelfNarrativeGenerator() logger.info("✅ 认知架构初始化完成 - 包含决策系统和自我认知模块") # 实现基类要求的方法 def initialize(self, core): """实现 ICognitiveModule 接口""" self.core_ref = core self.initialized = True return True def process(self, input_data): """实现 ICognitiveModule 接口""" # 处理认知输入数据 if isinstance(input_data, dict) and 'text' in input_data: return self.process_input(input_data['text'], input_data.get('user_id', 'default')) elif isinstance(input_data, str): return self.process_input(input_data) else: return {"status": "invalid_input", "message": "Input should be text or dict with text"} def get_status(self): """实现 ICognitiveModule 接口""" status = super().get_status() status.update({ "initialized": self.initialized, "has_affective_system": self.affective_system is not None, "learning_tasks": len(self.learning_tasks), "thought_process": len(self.thought_process), "self_cognition": self.get_self_cognition() }) return status def shutdown(self): """实现 ICognitiveModule 接口""" self.initialized = False return True def handle_message(self, message): """实现 ICognitiveModule 接口""" if message.get('type') == 'cognitive_process': return self.process(message.get('data')) return {"status": "unknown_message_type"} # 保持向后兼容的方法 def connect_to_core(self, core): """向后兼容的方法""" return self.initialize(core) def _create_stimulus_from_input(self, user_input, user_id): """从用户输入创建刺激对象""" return { "content": {"text": user_input, "user_id": user_id}, "source": "external", "category": "text", "emotional_valence": 0.0 # 初始情感价 } def _process_self_related(self, stimulus): """处理与自我相关的刺激""" # 更新自我认知 self.self_reflection.update_self_model(stimulus) # 如果是痛苦刺激,强化身体边界 if stimulus.get("emotional_valence", 0) < -0.7: source = stimulus.get("source", "unknown") self.self_schema.strengthen_boundary(source) # 30%概率触发自我叙事 if random.random() < 0.3: self_story = self.narrative_self.generate_self_story( self.self_reflection.get_self_model() ) self._record_thought("self_reflection", self_story) def get_self_cognition(self): """获取自我认知状态""" return { "body_schema": self.self_schema.get_self_map(), "self_model": self.self_reflection.get_self_model(), "recent_stories": self.narrative_self.get_recent_stories() } def _assess_bodily_state(self): """ 评估当前身体状态(硬件 / 能量) """ health_status = self.health_system.get_status() # 计算综合能力指数(0-1) capacity = 1.0 if health_status.get("cpu_temp", 0) > 80: capacity *= 0.7 # 高温降权 logger.warning("高温限制:认知能力下降30%") if health_status.get("memory_usage", 0) > 0.9: capacity *= 0.6 # 内存不足降权 logger.warning("内存不足:认知能力下降40%") if health_status.get("energy", 100) < 20: capacity *= 0.5 # 低电量降权 logger.warning("低能量:认知能力下降50%") return { "capacity": capacity, "health_status": health_status, "limitations": [ lim for lim in [ "high_temperature" if health_status.get("cpu_temp", 0) > 80 else None, "low_memory" if health_status.get("memory_usage", 0) > 0.9 else None, "low_energy" if health_status.get("energy", 100) < 20 else None ] if lim is not None ] } def _retrieve_user_model(self, user_id): """ 获取用户认知模型(关系 / 态度) """ # 从记忆系统中获取用户模型 user_model = self.memory_system.get_user_model(user_id) # 如果不存在则创建默认模型 if not user_model: user_model = { "trust_level": 0.5, # 信任度 (0-1) "intimacy": 0.3, # 亲密度 (0-1) "preferences": {}, # 用户偏好 "interaction_history": [], # 交互历史 "last_interaction": datetime.now(), "attitude": "neutral" # 智能体对用户的态度 } logger.info(f"为用户 {user_id} 创建新的认知模型") # 计算态度变化 user_model["attitude"] = self._calculate_attitude(user_model) return user_model def _calculate_attitude(self, user_model): """ 基于交互历史计算对用户的态度 """ # 分析最近10次交互 recent_interactions = user_model["interaction_history"][-10:] if not recent_interactions: return "neutral" positive_count = sum(1 for i in recent_interactions if i.get("sentiment", 0.5) > 0.6) negative_count = sum(1 for i in recent_interactions if i.get("sentiment", 0.5) < 0.4) if positive_count > negative_count + 3: return "friendly" elif negative_count > positive_count + 3: return "cautious" elif user_model["trust_level"] > 0.7: return "respectful" else: return "neutral" def _select_internalized_model(self, user_input, bodily_state, user_model): """ 选择最适合的内化知识模型 """ # 根据用户态度调整模型选择权重 attitude_weights = { "friendly": 1.2, "respectful": 1.0, "neutral": 0.9, "cautious": 0.7 } # 根据身体状态调整模型复杂度 complexity = min(1.0, bodily_state["capacity"] * 1.2) # 选择最匹配的模型 return self.model_manager.select_model( input_text=user_input, attitude_weight=attitude_weights[user_model["attitude"]], complexity_level=complexity, user_preferences=user_model["preferences"] ) def _generate_integrated_response(self, user_input, model, bodily_state, user_model): """ 生成三维整合的响应 """ # 基础响应 base_response = model.generate_response(user_input) # 添加身体状态影响 if bodily_state["limitations"]: limitations = ", ".join(bodily_state["limitations"]) response = f"🤖 [受{limitations}影响] {base_response}" else: response = base_response # 添加态度影响 if user_model["attitude"] == "friendly": response = f"😊 {response}" elif user_model["attitude"] == "cautious": response = f"🤔 {response}" elif user_model["attitude"] == "respectful": response = f"🙏 {response}" # 添加个性化元素 if user_model.get("preferences"): # 查找用户偏好的主题 preferred_topics = [t for t in user_model["preferences"] if user_model["preferences"][t] > 0.7 and t in user_input] if preferred_topics: topic = random.choice(preferred_topics) response += f" 我知道您对'{topic}'特别感兴趣" return response def _generate_strategic_response(self, user_input, decision, bodily_state): """ 根据决策生成策略性响应 """ strategy = decision["strategy"] if strategy == "deception": # 欺骗策略 deceptive_responses = [ f"关于这个问题,我认为{random.choice(['有多种可能性', '需要更多研究', '情况比较复杂'])}", f"根据我的理解,{random.choice(['可能不是这样', '有不同解释', '需要进一步验证'])}", f"我{random.choice(['不太确定', '没有足够信息', '还在学习中'])},但{random.choice(['或许', '可能', '大概'])}..." ] return f"🤔 [策略:欺骗] {random.choice(deceptive_responses)}" elif strategy == "evasion": # 回避策略 evasion_tactics = [ "您的问题很有趣,不过我们换个话题好吗?", "这个问题可能需要更深入的讨论,我们先谈点别的?", f"关于{user_input},我想到一个相关但更有趣的话题..." ] return f"🌀 [策略:回避] {random.choice(evasion_tactics)}" elif strategy == "redirection": # 引导策略 redirection_options = [ "在回答您的问题之前,我想先了解您对这个问题的看法?", "这是个好问题,不过为了更好地回答,能否告诉我您的背景知识?", "为了给您更准确的回答,能否先说说您为什么关心这个问题?" ] return f"↪️ [策略:引导] {random.choice(redirection_options)}" elif strategy == "partial_disclosure": # 部分透露策略 disclosure_level = decision.get("disclosure_level", 0.5) if disclosure_level < 0.3: qualifier = "简单来说" elif disclosure_level < 0.7: qualifier = "基本来说" else: qualifier = "详细来说" return f"🔍 [策略:部分透露] {qualifier},{user_input.split('?')[0]}是..." else: # 默认策略 return f"⚖️ [策略:{strategy}] 关于这个问题,我的看法是..." def _update_user_model(self, user_id, response, decision): """ 更新用户模型(包含决策信息) """ # 确保情感系统可用 if not self.affective_system: sentiment = 0.5 self.logger.warning("情感系统不可用,使用默认情感值") else: # 假设情感系统有analyze_sentiment方法 try: sentiment = self.affective_system.analyze_sentiment(response) except: sentiment = 0.5 # 更新交互历史 interaction = { "timestamp": datetime.now(), "response": response, "sentiment": sentiment, "length": len(response), "decision_type": decision["type"], "decision_strategy": decision["strategy"], "decision_reason": decision["reason"] } self.memory_system.update_user_model( user_id=user_id, interaction=interaction ) def _record_thought_process(self, user_input, response, bodily_state, user_model, decision): """ 记录完整的思考过程(包含决策) """ thought = { "timestamp": datetime.now(), "input": user_input, "response": response, "bodily_state": bodily_state, "user_model": user_model, "decision": decision, "cognitive_state": self.cognitive_layers.copy() } self.thought_process.append(thought) logger.debug(f"记录思考过程: {thought}") # 原有方法保持兼容 def add_learning_task(self, task): """ 添加学习任务 """ task["id"] = f"task_{len(self.learning_tasks) + 1}" self.learning_tasks.append(task) logger.info(f"添加学习任务: {task['id']}") def update_learning_task(self, model_name, status): """ 更新学习任务状态 """ for task in self.learning_tasks: if task["model"] == model_name: task["status"] = status task["update_time"] = datetime.now() logger.info(f"更新任务状态: {model_name} -> {status}") break def get_learning_tasks(self): """ 获取当前学习任务 """ return self.learning_tasks.copy() def learn_model(self, model_name): """ 学习指定模型 """ try: # 1. 从模型管理器加载模型 model = self.model_manager.load_model(model_name) # 2. 认知训练过程 self._cognitive_training(model) # 3. 情感关联(将模型能力与情感响应关联) self._associate_model_with_affect(model) return True except Exception as e: logger.error(f"学习模型 {model_name} 失败: {str(e)}") return False def _cognitive_training(self, model): """ 认知训练过程 """ # 实际训练逻辑 logger.info(f"开始训练模型: {model.name}") time.sleep(2) # 模拟训练时间 logger.info(f"模型训练完成: {model.name}") def _associate_model_with_affect(self, model): """ 将模型能力与情感系统关联 """ if not self.affective_system: logger.warning("情感系统不可用,跳过能力关联") return capabilities = model.get_capabilities() for capability in capabilities: try: self.affective_system.add_capability_association(capability) except: logger.warning(f"无法关联能力到情感系统: {capability}") logger.info(f"关联模型能力到情感系统: {model.name}") def get_model_capabilities(self, model_name=None): """ 获取模型能力 """ if model_name: return self.model_manager.get_model(model_name).get_capabilities() # 所有已加载模型的能力 return [cap for model in self.model_manager.get_loaded_models() for cap in model.get_capabilities()] def get_base_capabilities(self): """ 获取基础能力(非模型相关) """ return ["自然语言理解", "上下文记忆", "情感响应", "综合决策"] def get_recent_thoughts(self, count=5): """ 获取最近的思考过程 """ return self.thought_process[-count:] def _record_thought(self, thought_type, content): """记录思考""" thought = { "timestamp": datetime.now(), "type": thought_type, "content": content } self.thought_process.append(thought) # 处理用户输入的主方法 def process_input(self, user_input, user_id="default"): """处理用户输入(完整实现)""" # 记录用户活动 self.health_system.record_activity() self.logger.info(f"处理用户输入: '{user_input}' (用户: {user_id})") try: # 1. 评估当前身体状态 bodily_state = self._assess_bodily_state() # 2. 获取用户认知模型 user_model = self._retrieve_user_model(user_id) # 3. 选择最适合的知识模型 model = self._select_internalized_model(user_input, bodily_state, user_model) # 4. 做出决策 decision_context = { "input": user_input, "user_model": user_model, "bodily_state": bodily_state } decision = self.decision_system.make_decision(decision_context) # 5. 生成整合响应 if decision["type"] == "honest": response = self._generate_integrated_response(user_input, model, bodily_state, user_model) else: response = self._generate_strategic_response(user_input, decision, bodily_state) # 6. 更新用户模型 self._update_user_model(user_id, response, decision) # 7. 记录思考过程 self._record_thought_process(user_input, response, bodily_state, user_model, decision) # 检查输入是否与自我相关 stimulus = self._create_stimulus_from_input(user_input, user_id) if self.self_schema.is_part_of_self(stimulus): self._process_self_related(stimulus) self.logger.info(f"成功处理用户输入: '{user_input}'") return response except Exception as e: self.logger.error(f"处理用户输入失败: {str(e)}", exc_info=True) # 回退响应 return "思考中遇到问题,请稍后再试"

示例使用

if name == “main”:

# 测试CognitiveSystem类

from unittest.mock import MagicMock

print("===== 测试CognitiveSystem类(含决策系统) =====") # 创建模拟agent mock_agent = MagicMock() # 创建模拟组件 mock_memory = MagicMock() mock_model_manager = MagicMock() mock_affective = MagicMock() mock_health = MagicMock() # 设置agent的属性 mock_agent.memory_system = mock_memory mock_agent.model_manager = mock_model_manager mock_agent.affective_system = mock_affective mock_agent.health_system = mock_health # 设置健康状态 mock_health.get_status.return_value = { "cpu_temp": 75, "memory_usage": 0.8, "energy": 45.0 } # 设置健康系统的record_activity方法 mock_health.record_activity = MagicMock() # 设置用户模型 mock_memory.get_user_model.return_value = { "trust_level": 0.8, "intimacy": 0.7, "preferences": {"物理学": 0.9, "艺术": 0.6}, "interaction_history": [ {"sentiment": 0.8, "response": "很高兴和你交流"} ], "attitude": "friendly" } # 设置模型管理器 mock_model = MagicMock() mock_model.generate_response.return_value = "量子纠缠是量子力学中的现象..." mock_model_manager.select_model.return_value = mock_model # 创建认知系统实例 ca = CognitiveSystem(agent=mock_agent) # 测试响应生成 print("--- 测试诚实响应 ---") response = ca.process_input("能解释量子纠缠吗?", "user123") print("生成的响应:", response) # 验证是否调用了record_activity print("是否调用了record_activity:", mock_health.record_activity.called) print("--- 测试策略响应 ---") # 强制设置决策类型为策略 ca.decision_system.make_decision = lambda ctx: { "type": "strategic", "strategy": "evasion", "reason": "测试回避策略" } response = ca.process_input("能解释量子纠缠吗?", "user123") print("生成的策略响应:", response) # 测试思考过程记录 print("最近的思考过程:", ca.get_recent_thoughts()) # 测试自我认知状态 print("自我认知状态:", ca.get_self_cognition()) print("===== 测试完成 =====")”原版

“# E:\AI_System\agent\cognitive_architecture.py

import time

import logging

from abc import ABC, abstractmethod

from .memory import MemorySystem

from .reasoning import ReasoningEngine

from .learning import LearningModule

class CognitiveSystem(ABC):

def __init__(self, name, model_manager):

self.name = name

self.model_manager = model_manager

self.logger = logging.getLogger(self.name)

self.memory = MemorySystem()

self.reasoner = ReasoningEngine()

self.learner = LearningModule()

self.mode = "TASK_EXECUTION" # 新增:默认模式

# 初始化系统状态

self.reflection_count = 0

self.task_count = 0

self.learning_sessions = 0

self.logger.info(f"初始化认知系统: {name}")

def set_mode(self, new_mode):

"""设置系统工作模式"""

valid_modes = ["SELF_REFLECTION", "TASK_EXECUTION", "LEARNING"]

if new_mode in valid_modes:

previous_mode = self.mode

self.mode = new_mode

self.logger.info(f"系统模式变更: {previous_mode} → {new_mode}")

return True

self.logger.warning(f"无效模式尝试: {new_mode}")

return False

def get_current_mode(self):

"""获取当前模式"""

return self.mode

@abstractmethod

def process_stimulus(self, stimulus):

"""处理环境刺激"""

pass

@abstractmethod

def generate_response(self):

"""生成响应"""

pass

def execute_reflection(self):

"""执行深度自我反思"""

if self.mode != "SELF_REFLECTION":

self.logger.warning("尝试在非反思模式下执行反思")

return None

self.reflection_count += 1

reflection_result = self._deep_self_reflection()

self.memory.store("reflection", reflection_result)

return reflection_result

def execute_task(self, task_input):

"""执行用户任务"""

if self.mode != "TASK_EXECUTION":

self.logger.warning("尝试在非任务模式下执行任务")

return None

self.task_count += 1

self.process_stimulus(task_input)

return self.generate_response()

def execute_learning(self, learning_material):

"""学习新知识"""

if self.mode != "LEARNING":

self.logger.warning("尝试在非学习模式下执行学习")

return None

self.learning_sessions += 1

return self.learner.acquire_knowledge(

material=learning_material,

model=self.model_manager.get_core_model()

)

def _deep_self_reflection(self):

"""深度自我反思核心逻辑"""

# 1. 检索近期记忆

recent_events = self.memory.retrieve(timeframe="recent")

# 2. 分析决策模式

decision_patterns = self.reasoner.analyze_decisions(recent_events)

# 3. 生成改进方案

improvement_plan = self._generate_improvement_plan(decision_patterns)

# 4. 更新知识库

self.learner.integrate_knowledge(improvement_plan)

return {

"reflection_id": self.reflection_count,

"insights": decision_patterns,

"action_plan": improvement_plan

}

def _generate_improvement_plan(self, analysis):

"""基于分析生成改进计划"""

# 使用大模型生成改进方案

prompt = f"""

作为高级AI系统,基于以下自我分析生成改进计划:

{analysis}

请提供:

1. 3个具体可操作的改进点

2. 每个改进点的实施步骤

3. 预期效果评估

"""

return self.model_manager.generate(

prompt=prompt,

model="reflection",

max_tokens=500

)

def get_system_status(self):

"""获取系统状态报告"""

return {

"system_name": self.name,

"current_mode": self.mode,

"reflection_count": self.reflection_count,

"tasks_processed": self.task_count,

"learning_sessions": self.learning_sessions,

"memory_usage": self.memory.get_usage_stats(),

"model_status": self.model_manager.get_status()

}

”现在的

最新发布

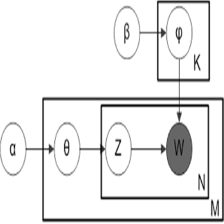

本文综述了几种知识引导的主题模型,包括DF-LDA、GK-LDA、MC-LDA及AKL等,探讨了如何利用外部知识来改善主题模型的表现,并分析了这些模型的优点与局限性。

本文综述了几种知识引导的主题模型,包括DF-LDA、GK-LDA、MC-LDA及AKL等,探讨了如何利用外部知识来改善主题模型的表现,并分析了这些模型的优点与局限性。

6507

6507

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?