Custom Interceptor

1) Case requirements

Use Flume to collect local server logs and, depending on the log type, send each kind of log to a different analysis system.

2) Requirements analysis

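In real-world development, a single server may produce many types of logs, and different types may need to go to different analysis systems. This calls for the Multiplexing structure among Flume's topologies. Multiplexing works by sending each event to a Channel chosen according to the value of a particular key in the event's Header. We therefore need a custom Interceptor that assigns a different value to that Header key for each type of event.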

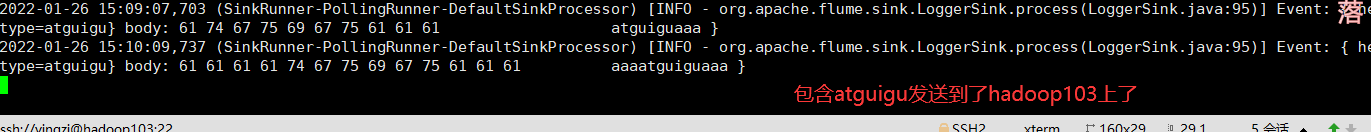

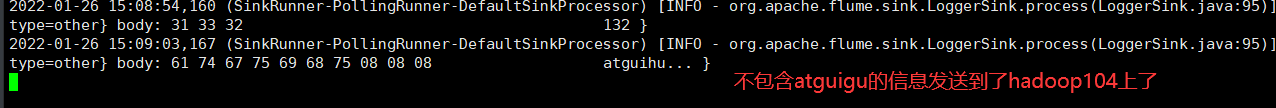
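The routing idea described above can be sketched in plain Java. This is a simplified model for illustration only, not Flume's actual selector implementation: the class `MultiplexingSketch` and its method `channelsFor` are hypothetical names, and the header key `type` and the channel names `c1` and `c2` are borrowed from this case's configuration.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, simplified model of multiplexing; NOT Flume's real classes.
final class MultiplexingSketch {
    private final String headerKey;                    // header key to inspect, e.g. "type"
    private final Map<String, List<String>> mapping;   // header value -> channel names
    private final List<String> defaultChannels;        // used when no mapping matches

    MultiplexingSketch(String headerKey,
                       Map<String, List<String>> mapping,
                       List<String> defaultChannels) {
        this.headerKey = headerKey;
        this.mapping = mapping;
        this.defaultChannels = defaultChannels;
    }

    // Returns the channels an event with the given headers would be routed to.
    List<String> channelsFor(Map<String, String> headers) {
        String value = headers.get(headerKey);
        List<String> channels = (value == null) ? null : mapping.get(value);
        return channels != null ? channels : defaultChannels;
    }

    public static void main(String[] args) {
        Map<String, List<String>> mapping = new HashMap<>();
        mapping.put("atguigu", List.of("c1"));
        mapping.put("other", List.of("c2"));
        MultiplexingSketch selector = new MultiplexingSketch("type", mapping, List.of());

        System.out.println(selector.channelsFor(Map.of("type", "atguigu"))); // [c1]
        System.out.println(selector.channelsFor(Map.of("type", "other")));   // [c2]
        System.out.println(selector.channelsFor(Map.of()));                  // []
    }
}
```

The point of the sketch is that the selector only looks at one header value, which is why the interceptor has to set that header before the event reaches the selector.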

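In this case, we simulate logs with data sent to a port, and simulate different log types by whether the data contains "atguigu". We need a custom interceptor that distinguishes whether the data contains "atguigu", so that the two kinds of events are sent to different analysis systems (Channels).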

![image 3](https://i-blog.csdnimg.cn/blog_migrate/7e89eb34f2a78e957ece0bb8fa2511b2.png)
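The classification rule for this case can be isolated as a small pure function, which makes it easy to check without starting Flume. This is a minimal sketch: the class `TypeRuleSketch` and the method `typeOf` are hypothetical names, the header values "atguigu" and "other" are the ones used in this case, and decoding the body as UTF-8 is an assumption.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical helper that mirrors the rule used in this case.
final class TypeRuleSketch {

    // Returns the value to store under the "type" header for a given event body.
    static String typeOf(byte[] body) {
        // Assumption: the body is UTF-8 encoded text.
        String text = new String(body, StandardCharsets.UTF_8);
        return text.contains("atguigu") ? "atguigu" : "other";
    }

    public static void main(String[] args) {
        System.out.println(typeOf("hello atguigu".getBytes(StandardCharsets.UTF_8))); // atguigu
        System.out.println(typeOf("hello flume".getBytes(StandardCharsets.UTF_8)));   // other
    }
}
```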

3) Implementation

Add the following dependency to pom.xml

<dependency>

<groupId>org.apache.flume</groupId>

<artifactId>flume-ng-core</artifactId>

<version>1.9.0</version>

</dependency>

Define the TypeInterceptor class and implement the Interceptor interface

package com.yingzi.interceptor;

import org.apache.flume.Context;

import org.apache.flume.Event;

import org.apache.flume.interceptor.Interceptor;

import java.util.ArrayList;

import java.util.List;

import java.util.Map;

/**

* @author 影子

* @create 2022-01-25-15:33

**/

public class TypeInterceptor implements Interceptor {

//Declare a list that holds the events after the interceptor has processed them

private List<Event> addHeaderEvents;

@Override

public void initialize() {

//Initialize the list that holds the processed events

addHeaderEvents = new ArrayList<>();

}

//Processes a single event

@Override

public Event intercept(Event event) {

//1. Get the headers and body

Map<String, String> headers = event.getHeaders();

String body = new String(event.getBody(), java.nio.charset.StandardCharsets.UTF_8);

//2. Set a different header value depending on whether the body contains "atguigu"

if (body.contains("atguigu")) {

headers.put("type", "atguigu");

} else {

headers.put("type", "other");

}

//Return the event

return event;

}

//Processes a batch of events

@Override

public List<Event> intercept(List<Event> list) {

//1. Clear the list

addHeaderEvents.clear();

//2. Iterate over the events

for (Event event : list) {

addHeaderEvents.add(intercept(event));

}

//3. Return the processed events

return addHeaderEvents;

}

@Override

public void close() {

}

public static class Builder implements Interceptor.Builder{

@Override

public Interceptor build() {

return new TypeInterceptor();

}

@Override

public void configure(Context context) {

}

}

}

Package the project as a jar and copy it into flume/lib (first check that no file with the same name already exists there).

4) Configuration files

(1) On hadoop102, create flume1.conf under flume/job/group4

Configure Flume1 on hadoop102 with one netcat source, two memory channels, and two avro sinks, together with the corresponding ChannelSelector and interceptor

# Name the components on this agent

a1.sources = r1

a1.sinks = k1 k2

a1.channels = c1 c2

# Describe/configure the source

a1.sources.r1.type = netcat

a1.sources.r1.bind = localhost

a1.sources.r1.port = 44444

a1.sources.r1.interceptors = i1

a1.sources.r1.interceptors.i1.type = com.yingzi.interceptor.TypeInterceptor$Builder

a1.sources.r1.selector.type = multiplexing

a1.sources.r1.selector.header = type

a1.sources.r1.selector.mapping.atguigu = c1

a1.sources.r1.selector.mapping.other = c2

# Describe the sink

a1.sinks.k1.type = avro

a1.sinks.k1.hostname = hadoop103

a1.sinks.k1.port = 4141

a1.sinks.k2.type = avro

a1.sinks.k2.hostname = hadoop104

a1.sinks.k2.port = 4242

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Use a channel which buffers events in memory

a1.channels.c2.type = memory

a1.channels.c2.capacity = 1000

a1.channels.c2.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1 c2

a1.sinks.k1.channel = c1

a1.sinks.k2.channel = c2
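The interceptor type in the configuration above ends in `$Builder` because the JVM's binary name for a nested class joins the outer and inner class names with `$`. Flume is given the name of the Builder rather than of the interceptor itself and, as far as the configuration suggests, loads it by name. The sketch below only demonstrates the naming and reflective loading in plain Java; `BuilderNameSketch` and `instantiate` are hypothetical names and do not reproduce Flume's loading code.

```java
// Hypothetical stand-in showing how a nested Builder class is named and loaded.
final class BuilderNameSketch {

    // Plays the role of an interceptor's nested static Builder class.
    static class Builder {
        String build() {
            return "built";
        }
    }

    // Loads a class by its binary name and creates an instance via its no-arg constructor.
    static Object instantiate(String binaryName) {
        try {
            Class<?> clazz = Class.forName(binaryName);
            return clazz.getDeclaredConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot instantiate " + binaryName, e);
        }
    }

    public static void main(String[] args) {
        // The binary name uses '$' between the outer and the nested class name.
        String name = Builder.class.getName();
        System.out.println(name);

        Object builder = instantiate(name);
        System.out.println(((Builder) builder).build()); // built
    }
}
```

Writing the type with a `.` instead of `$` before `Builder` would name a class that does not exist, so the `$` has to be kept exactly as shown in the configuration.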

(2) On hadoop103, create flume2.conf under flume/job/group4

a1.sources = r1

a1.sinks = k1

a1.channels = c1

a1.sources.r1.type = avro

a1.sources.r1.bind = hadoop103

a1.sources.r1.port = 4141

a1.sinks.k1.type = logger

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

a1.sinks.k1.channel = c1

a1.sources.r1.channels = c1

(3) On hadoop104, create flume3.conf under flume/job/group4

a1.sources = r1

a1.sinks = k1

a1.channels = c1

a1.sources.r1.type = avro

a1.sources.r1.bind = hadoop104

a1.sources.r1.port = 4242

a1.sinks.k1.type = logger

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

a1.sinks.k1.channel = c1

a1.sources.r1.channels = c1

5) Start commands

Start the agents in the following order:

hadoop102

bin/flume-ng agent -c conf/ -f job/group4/flume1.conf -n a1

hadoop103

bin/flume-ng agent -c conf/ -f job/group4/flume2.conf -n a1 -Dflume.root.logger=INFO,console

hadoop104

bin/flume-ng agent -c conf/ -f job/group4/flume3.conf -n a1 -Dflume.root.logger=INFO,console

6) Verification

On hadoop102, run:

nc localhost 44444

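Send messages that contain atguigu and messages that do not, then check the logs printed on hadoop103 and hadoop104. Per the configuration above, messages containing atguigu should appear on hadoop103 and all others on hadoop104.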
