目录

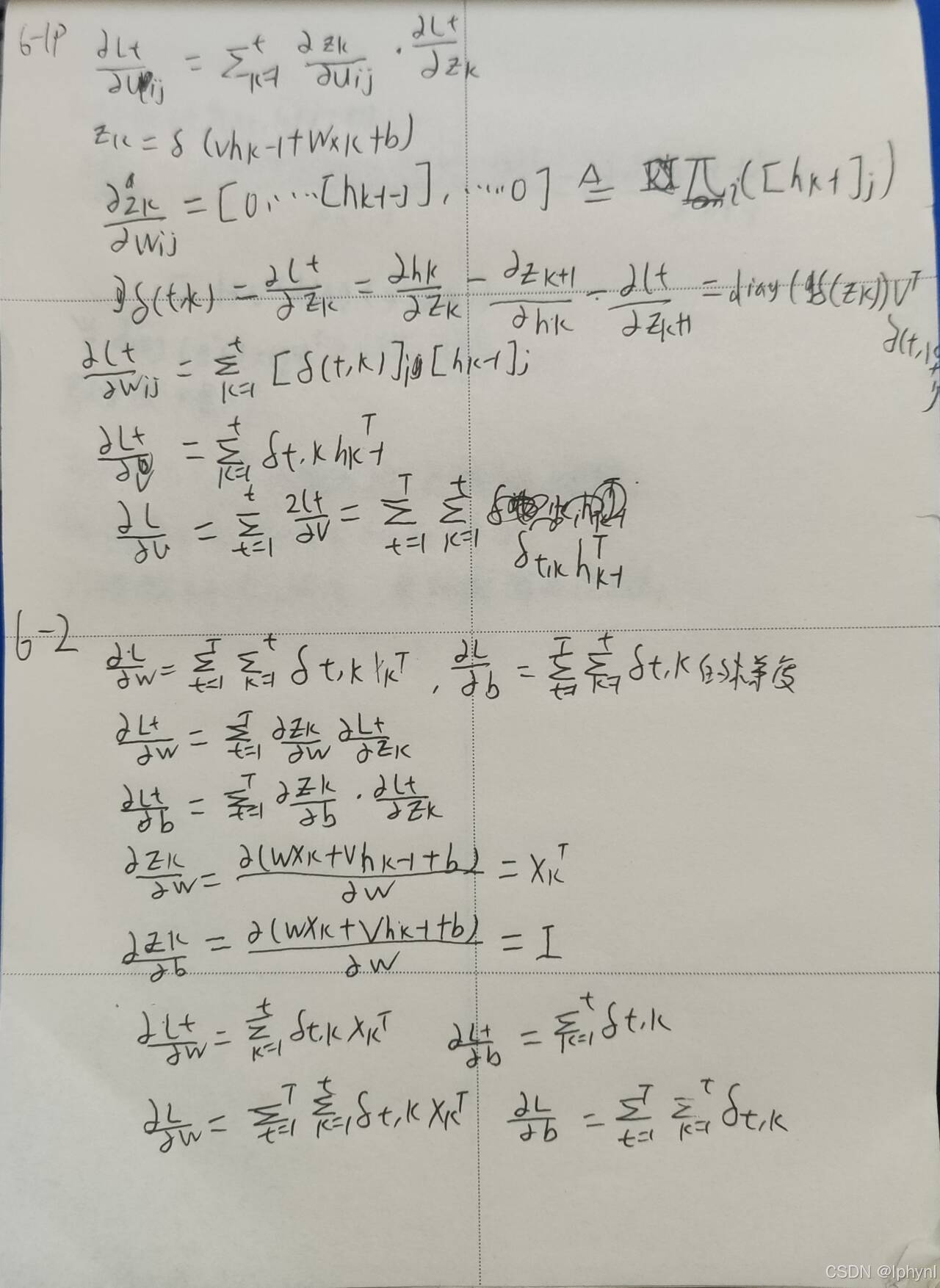

习题6-2 推导公式(6.40)和公式(6.41)中的梯度.

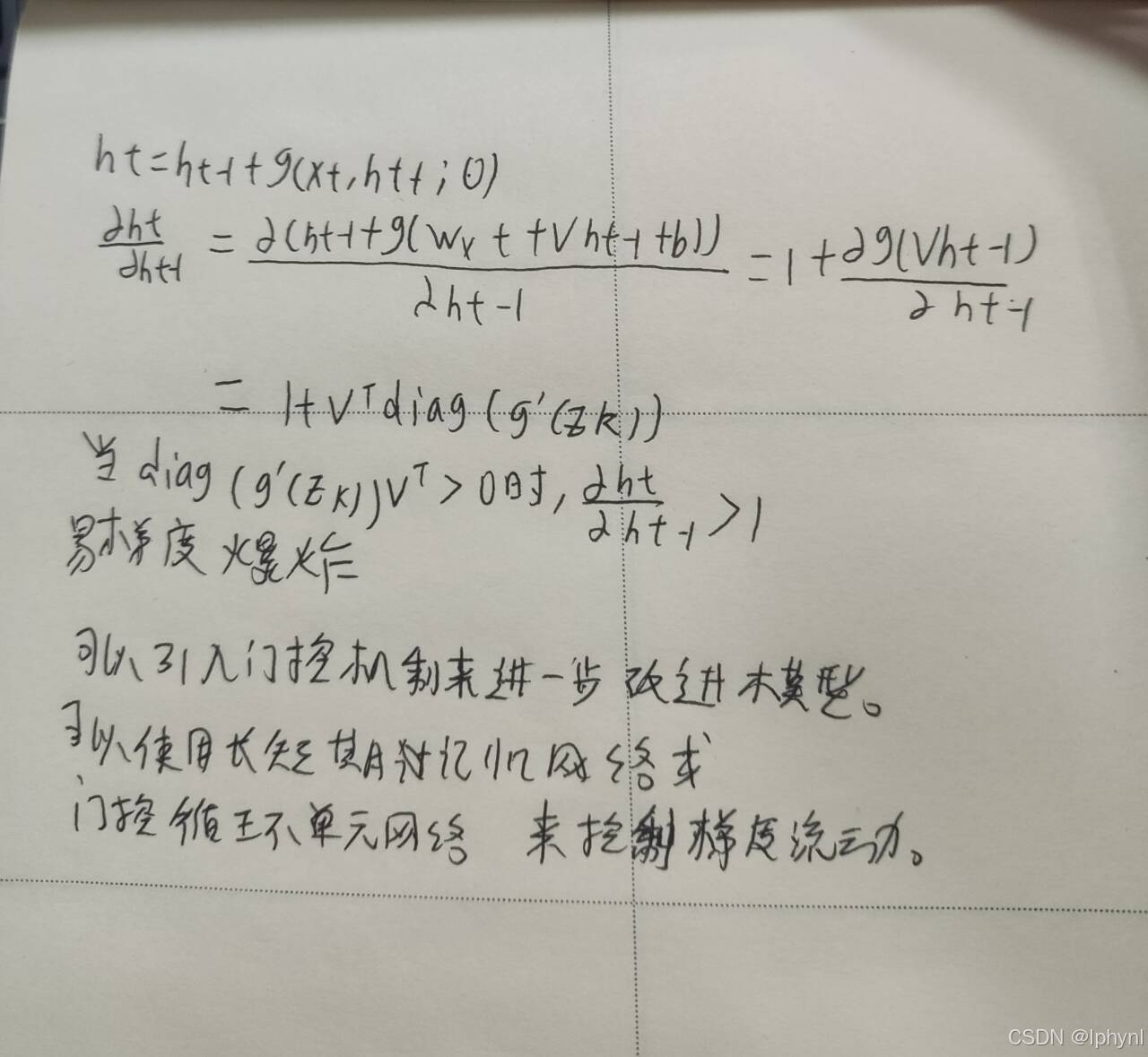

习题6-3 当使用公式(6.50)作为循环神经网络的状态更新公式时, 分析其可能存在梯度爆炸的原因并给出解决方法.

习题6-2P 设计简单RNN模型,分别用Numpy、Pytorch实现反向传播算子,并代入数值测试.

习题6-1P 推导RNN反向传播算法BPTT.

习题6-2 推导公式(6.40)和公式(6.41)中的梯度.

习题6-3 当使用公式(6.50)作为循环神经网络的状态更新公式时, 分析其可能存在梯度爆炸的原因并给出解决方法.

习题6-2P 设计简单RNN模型,分别用Numpy、Pytorch实现反向传播算子,并代入数值测试.

代码:

import torch

import torch.nn as nn

import numpy as np

class RNNCell(nn.Module):

def __init__(self, input_size, hidden_size):

super(RNNCell, self).__init__()

self.weight_ih = nn.Parameter(torch.randn(hidden_size, input_size))

self.weight_hh = nn.Parameter(torch.randn(hidden_size, hidden_size))

self.bias_ih = nn.Parameter(torch.randn(hidden_size, 1))

self.bias_hh = nn.Parameter(torch.randn(hidden_size, 1))

def forward(self, x, prev_hidden):

next_h = torch.tanh(

torch.mm(self.weight_ih, x.T) +

torch.mm(self.weight_hh, prev_hidden.T) +

self.bias_ih +

self.bias_hh

).T

return next_h

if __name__ == '__main__':

torch.manual_seed(123)

torch.set_printoptions(precision=6, sci_mode=False)

# 参数设置

input_size = 4

hidden_size = 5

batch_size = 3

nums = 3

# 初始化 RNNCell

rnn_pytorch = RNNCell(input_size, hidden_size)

# 生成随机输入和隐藏状态,并转换为 float32

x3_pytorch = torch.tensor(np.random.random((nums, batch_size, input_size)).astype(np.float32), requires_grad=True)

h3_pytorch = torch.tensor(np.random.random((1, batch_size, hidden_size)).astype(np.float32), requires_grad=True)

dh_pytorch = torch.tensor(np.random.random((nums, batch_size, hidden_size)).astype(np.float32))

# 前向传播

h_pytorch_list = []

h_pytorch = h3_pytorch[0]

for i in range(nums):

h_pytorch = rnn_pytorch(x3_pytorch[i], h_pytorch)

h_pytorch_list.append(h_pytorch)

# 反向传播

loss = torch.sum(torch.stack(h_pytorch_list) * dh_pytorch)

loss.backward()

# 打印结果

print("PyTorch Hidden States:")

for i, h in enumerate(h_pytorch_list):

print(f"Time Step {i + 1}:\n{h.detach().numpy()}\n")

print("Numpy Hidden States:")

for i, h in enumerate(h_pytorch_list):

print(f"Time Step {i + 1}:\n{h.detach().cpu().numpy()}\n")

print("PyTorch Gradients:")

print("dx:\n", x3_pytorch.grad.detach().numpy())

print("dw_ih:\n", rnn_pytorch.weight_ih.grad.detach().numpy())

print("dw_hh:\n", rnn_pytorch.weight_hh.grad.detach().numpy())

print("db_ih:\n", rnn_pytorch.bias_ih.grad.detach().numpy().T)

print("db_hh:\n", rnn_pytorch.bias_hh.grad.detach().numpy().T)

print("Numpy Gradients:")

print("dx:\n", x3_pytorch.grad.detach().cpu().numpy())

print("dw_ih:\n", rnn_pytorch.weight_ih.grad.detach().cpu().numpy())

print("dw_hh:\n", rnn_pytorch.weight_hh.grad.detach().cpu().numpy())

print("db_ih:\n", rnn_pytorch.bias_ih.grad.detach().cpu().numpy().T)

print("db_hh:\n", rnn_pytorch.bias_hh.grad.detach().cpu().numpy().T)运行结果:

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?