Hive job killed for running out of memory (OOM), and how to fix it:

2022-11-22 11:10:47,797 Stage-3 map = 100%, reduce = 100%, Cumulative CPU 8290.44 sec

MapReduce Total cumulative CPU time: 0 days 2 hours 18 minutes 10 seconds 440 msec

Ended Job = job_1667594872527_3123 with errors

Error during job, obtaining debugging information...

Examining task ID: task_1667594872527_3123_m_000008 (and more) from job job_1667594872527_3123

Examining task ID: task_1667594872527_3123_m_000087 (and more) from job job_1667594872527_3123

...............

Task with the most failures(10):

-----

Task ID:

task_1667594872527_3123_r_000058

URL:

http://0.0.0.0:8088/taskdetails.jsp?jobid=job_1667594872527_3123&tipid=task_1667594872527_3123_r_000058

-----

Diagnostic Messages for this Task:

[2022-11-22 11:10:47.546]Container [pid=10961,containerID=container_e17_1667594872527_3123_01_001180] is running 6098944B beyond the 'PHYSICAL' memory limit. Current usage: 1.0 GB of 1 GB physical memory used; 2.6 GB of 2.1 GB virtual memory used. Killing container.

Dump of the process-tree for container_e17_1667594872527_3123_01_001180 :

|- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE

|- 10991 10961 10961 10961 (java) 5279 206 2787180544 263347 /usr/java/jdk1.8.0_341/bin/java -Djava.net.preferIPv4Stack=true -Dhadoop.metrics.log.level=WARN -Djava.net.preferIPv4Stack=true -Xmx820m -Djava.io.tmpdir=/yarn/nm/usercache/root/appcache/application_1667594872527_3123/container_e17_1667594872527_3123_01_001180/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/yarn/container-logs/application_1667594872527_3123/container_e17_1667594872527_3123_01_001180 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog -Dyarn.app.mapreduce.shuffle.logger=INFO,shuffleCLA -Dyarn.app.mapreduce.shuffle.logfile=syslog.shuffle -Dyarn.app.mapreduce.shuffle.log.filesize=0 -Dyarn.app.mapreduce.shuffle.log.backups=0 org.apache.hadoop.mapred.YarnChild 10.110.17.34 43972 attempt_1667594872527_3123_r_000058_9 18691697673372

|- 10961 10959 10961 10961 (bash) 0 0 9793536 286 /bin/bash -c /usr/java/jdk1.8.0_341/bin/java -Djava.net.preferIPv4Stack=true -Dhadoop.metrics.log.level=WARN -Djava.net.preferIPv4Stack=true -Xmx820m -Djava.io.tmpdir=/yarn/nm/usercache/root/appcache/application_1667594872527_3123/container_e17_1667594872527_3123_01_001180/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/yarn/container-logs/application_1667594872527_3123/container_e17_1667594872527_3123_01_001180 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog -Dyarn.app.mapreduce.shuffle.logger=INFO,shuffleCLA -Dyarn.app.mapreduce.shuffle.logfile=syslog.shuffle -Dyarn.app.mapreduce.shuffle.log.filesize=0 -Dyarn.app.mapreduce.shuffle.log.backups=0 org.apache.hadoop.mapred.YarnChild 10.110.17.34 43972 attempt_1667594872527_3123_r_000058_9 18691697673372 1>/yarn/container-logs/application_1667594872527_3123/container_e17_1667594872527_3123_01_001180/stdout 2>/yarn/container-logs/application_1667594872527_3123/container_e17_1667594872527_3123_01_001180/stderr

[2022-11-22 11:10:47.556]Container killed on request. Exit code is 143

[2022-11-22 11:10:47.562]Container exited with a non-zero exit code 143.

FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask

MapReduce Jobs Launched:

Stage-Stage-1: Map: 190 Reduce: 31 Cumulative CPU: 2001.3 sec HDFS Read: 2075677112 HDFS Write: 9637583714 HDFS EC Read: 0 SUCCESS

Stage-Stage-14: Map: 930 Cumulative CPU: 4420.6 sec HDFS Read: 9767999402 HDFS Write: 11084131787 HDFS EC Read: 0 SUCCESS

Stage-Stage-3: Map: 930 Reduce: 166 Cumulative CPU: 8290.44 sec HDFS Read: 11193940829 HDFS Write: 8041237546 HDFS EC Read: 0 FAIL

Total MapReduce CPU Time Spent: 0 days 4 hours 5 minutes 12 seconds 340 msec

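Cause: the reduce task ran out of container memory. The diagnostic message shows the container for task_1667594872527_3123_r_000058 running 6098944 B beyond its 'PHYSICAL' memory limit (1.0 GB of 1 GB used), with virtual memory also over its limit (2.6 GB of 2.1 GB), so YARN killed the container with exit code 143.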
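The numbers in the diagnostic message can be checked against the process-tree dump in the log. The sketch below is only a sanity check, not part of the fix. It assumes a 4096-byte memory page and the YARN default of 2.1 for yarn.nodemanager.vmem-pmem-ratio; neither value is stated in the log, although both reproduce the reported figures.

```python
# Figures copied from the process-tree dump in the log.
PAGE_SIZE = 4096           # assumption: 4 KiB pages (not stated in the log)
CONTAINER_LIMIT_MB = 1024  # "1 GB physical memory" in the diagnostic message
VMEM_PMEM_RATIO = 2.1      # assumption: default yarn.nodemanager.vmem-pmem-ratio

rss_pages = {"java": 263347, "bash": 286}            # RSSMEM_USAGE(PAGES)
vmem_bytes = {"java": 2787180544, "bash": 9793536}   # VMEM_USAGE(BYTES)


def physical_overage_bytes(pages, limit_mb, page_size=PAGE_SIZE):
    """Bytes of resident memory used beyond the container's physical limit."""
    used = sum(pages.values()) * page_size
    return used - limit_mb * 1024 * 1024


overage = physical_overage_bytes(rss_pages, CONTAINER_LIMIT_MB)
vmem_used_gib = sum(vmem_bytes.values()) / 2**30
vmem_limit_gib = CONTAINER_LIMIT_MB * VMEM_PMEM_RATIO / 1024

print(overage)                  # 6098944, matching "running 6098944B beyond"
print(round(vmem_used_gib, 1))  # 2.6
print(round(vmem_limit_gib, 1)) # 2.1
```

The resident memory of the java and bash processes together exceeds the 1 GB limit by exactly the amount YARN reports, which confirms that the container was killed on the physical limit.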

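Solution:

Set the following parameters in the Hive session before running the query. The two memory.mb settings are the ones that address the error: they raise the reduce container from the 1 GB shown in the log to 3 GB and set the map container to 1.5 GB.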

hive> set hive.exec.max.dynamic.partitions.pernode=500;

hive> set mapreduce.reduce.memory.mb=3072;

hive> set mapreduce.map.memory.mb=1536;

hive> set mapred.reduce.max.attempts = 10;

hive> set mapred.map.max.attempts = 10;

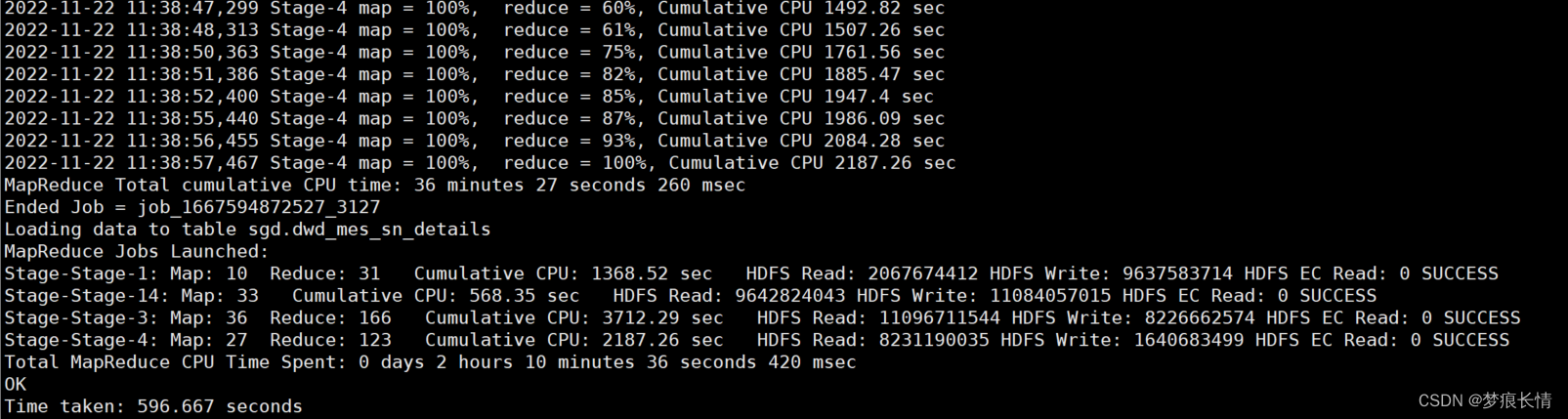
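The JVM in the failed container ran with -Xmx820m inside a 1024 MB container, which suggests the heap size is derived as 80% of the container size, rounded up. Assuming that same ratio applies after the change and no explicit -Xmx is set in mapreduce.map.java.opts or mapreduce.reduce.java.opts (both are assumptions; the log does not show the cluster configuration), the new heap sizes can be estimated as follows. The function name is illustrative only.

```python
HEAP_RATIO_PERCENT = 80  # assumption: inferred from -Xmx820m on a 1024 MB container


def derived_heap_mb(container_mb, ratio_percent=HEAP_RATIO_PERCENT):
    """Estimate the JVM heap (MB) as a rounded-up percentage of the container size."""
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-container_mb * ratio_percent // 100)


settings = {
    "old container (from the log)": 1024,
    "mapreduce.map.memory.mb": 1536,
    "mapreduce.reduce.memory.mb": 3072,
}

for name, container_mb in settings.items():
    print(f"{name}: container {container_mb} MB, estimated heap {derived_heap_mb(container_mb)} MB")
```

If the cluster sets -Xmx explicitly, the heap does not grow with the container, and the java.opts values would need to be raised alongside the memory.mb settings.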

Run the query again and the job completes successfully.
