Installing PHG on Ubuntu


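PHG (Parallel Hierarchical Grid) is a parallel program development platform designed specifically for three-dimensional adaptive finite element computation, under development at the State Key Laboratory of Scientific and Engineering Computing. Its core is a distributed hierarchical grid structure. At present, PHG handles conforming tetrahedral meshes in three dimensions. PHG is written in C and uses MPI message passing for parallelism.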

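Through object-oriented data structures and user interfaces, PHG implements parallel mesh partitioning, dynamic load balancing, and local adaptive mesh refinement and coarsening. It hides the parallel details while leaving enough flexibility for developing parallel adaptive finite element programs. PHG's flexible degree-of-freedom management module makes finite element discretization and stiffness matrix assembly straightforward, and its linear solver and eigenvalue solver interfaces make it convenient to solve linear systems and eigenvalue problems. PHG can write results in VTK or OpenDX format for visualization.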

What follows is a brief walkthrough of installing PHG on Ubuntu.

Preparation

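Install Ubuntu, then uninstall everything MPI-related that ships with the system, such as openmpi and the lib*mpi* packages, and make sure nothing is left behind. Commands of the following form will do this: sudo apt remove '*openmpi*' and sudo apt autoremove '*mpi*'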

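Why remove everything MPI-related? Because later I configure PHG with the default configure, and if leftovers remain, linking against the MPI components may go wrong. And why not pass the dependency locations to configure explicitly? Because that is error-prone: even with correct syntax, problems such as the MPI libraries not being linked can still occur.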
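Before removing anything, it can help to review which installed packages would be affected, since a broad pattern such as *mpi* may also match packages that have nothing to do with MPI. The following is a minimal sketch, not a definitive procedure: filter_mpi_packages is a hypothetical helper name, and the name fragments it looks for (openmpi, mpich, libmpi, mvapich) are an assumption that may need adjusting for your system.

```shell
#!/bin/sh
# Hypothetical helper: keep only package names that look MPI-related.
# Reads one package name per line on stdin.
filter_mpi_packages() {
    grep -E 'openmpi|mpich|libmpi|mvapich' || true
}

# On a Debian/Ubuntu system, list the installed candidates for review.
# Nothing is removed here.
if command -v dpkg-query >/dev/null 2>&1; then
    dpkg-query -W -f='${Package}\n' | filter_mpi_packages
fi
```

Once the list looks right, apt-get -s remove followed by the package names simulates the removal without changing the system, which gives one more chance to check before running the real command.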

Installing MPICH

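MPICH can be installed by following the guide linked here. The one difference is that we keep configure at its defaults, that is, we do not pass an install location to configure.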

Remember: do not give configure an install location; keep the defaults.
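After a default install, the MPI compiler wrappers and launchers should be reachable through PATH (autoconf-style configure scripts typically default to the /usr/local prefix). A quick way to confirm that is sketched below; report_mpi_tools is a hypothetical helper name, and the list of tools it checks is an assumption about what your MPICH build installed.

```shell
#!/bin/sh
# Print where each MPI tool resolves on PATH, or "missing" if it does not.
report_mpi_tools() {
    for tool in mpicc mpicxx mpirun mpiexec; do
        path=$(command -v "$tool" 2>/dev/null) || path="missing"
        printf '%s: %s\n' "$tool" "$path"
    done
}

report_mpi_tools
```

If a tool resolves to an unexpected location, an older MPI installation is probably still on the system and worth removing before PHG is configured.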

Installing LAPACK (which bundles BLAS)

After downloading, unpack the archive, enter the INSTALL folder, and copy one file to the parent folder under a new name: cp make.inc.gfortran ../make.inc

Edit the Makefile as follows:

lib: lapacklib tmglib

#lib: blaslib variants lapacklib tmglib

change this to

#lib: lapacklib tmglib

lib: blaslib variants lapacklib tmglib

Then simply run make.
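The Makefile edit above can also be expressed as a small sed filter. This is a sketch under the assumption that the two lib: lines appear in the Makefile exactly as quoted, with no trailing whitespace; enable_blas_target is a hypothetical helper name, and it prints the edited file to standard output rather than modifying it in place.

```shell
#!/bin/sh
# Hypothetical helper: print the given Makefile with the two "lib:" lines
# swapped, so that the variant which also builds BLAS becomes the active one.
enable_blas_target() {
    sed -e 's/^lib: lapacklib tmglib$/#lib: lapacklib tmglib/' \
        -e 's/^#lib: blaslib variants lapacklib tmglib$/lib: blaslib variants lapacklib tmglib/' \
        "$1"
}

# Demonstration on a throwaway file holding just the two relevant lines.
demo=$(mktemp)
printf 'lib: lapacklib tmglib\n#lib: blaslib variants lapacklib tmglib\n' > "$demo"
enable_blas_target "$demo"
rm -f "$demo"
```

On the real file, redirect the output to a new name, compare it with the original using diff, and only then replace the Makefile.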

If you see an error like this:

Makefile:463: recipe for target 'znep.out' failed

it is most likely a failure in the test stage; the build itself is essentially complete.

In that case, run one of the following commands to raise the stack size limit, and the build will then succeed.

ulimit -s unlimited

or

ulimit -s 100000
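A stack limit set with ulimit applies to the shell in which it is run and to the processes that shell starts, so make has to be rerun from that same shell. The sketch below only inspects the limit and tries the change inside a subshell, leaving the calling shell untouched; whether the limit can be raised depends on the hard limit configured on your system.

```shell
#!/bin/sh
# Show the current soft stack limit (in kilobytes, or "unlimited").
echo "stack limit before: $(ulimit -s)"

# Try to raise it inside a subshell so the calling shell is left untouched.
# The attempt can fail if the hard limit is lower, hence the fallback message.
(
    if ulimit -s unlimited 2>/dev/null; then
        echo "stack limit inside subshell: $(ulimit -s)"
    else
        echo "could not raise the stack limit"
    fi
)
```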

Finally, copy the three libraries liblapack.a, librefblas.a, and libtmglib.a from this folder to /usr/lib:

sudo cp liblapack.a librefblas.a libtmglib.a /usr/lib
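Because the build may stop in the test stage, it is worth confirming that all three static libraries were actually produced before copying them. A minimal sketch follows; check_lapack_libs is a hypothetical helper name, and the directory argument is wherever you ran make.

```shell
#!/bin/sh
# Hypothetical helper: report which of the three static libraries are absent
# from the given directory. Returns 0 only if all three are present.
check_lapack_libs() {
    dir=$1
    missing=0
    for lib in liblapack.a librefblas.a libtmglib.a; do
        if [ ! -f "$dir/$lib" ]; then
            echo "missing: $dir/$lib"
            missing=1
        fi
    done
    return "$missing"
}

# Check the current directory; the copy should only follow a clean result.
check_lapack_libs . || echo "build incomplete, not copying"
```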

With LAPACK installed, BLAS, its dependency, is installed as well. BLAS can also be installed separately from the BLAS download page.

Installing PHG

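- Download the package from the download page and unpack it.

- Copy files. The refine.o and coarsen.o files that a direct build produces in src may not support parallel computation, and the example programs may report exactly that when run. Depending on your hardware and the MPI version you installed, pick the matching refine.o and coarsen.o from the obj subdirectory (see ReadMe.txt in PHG's obj subdirectory for details) and copy them into src, overwriting the two existing files. That is, from the top-level directory run cp obj/mpich-x86_64/double_int/*.o src/. and then delete a few of the original files under src with rm -f src/{refine,coarsen}.c src/libphg.*

- cd into the directory and run configure. When it finishes, run make and make install; make install can be left for later. Here configure may be given an install location.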

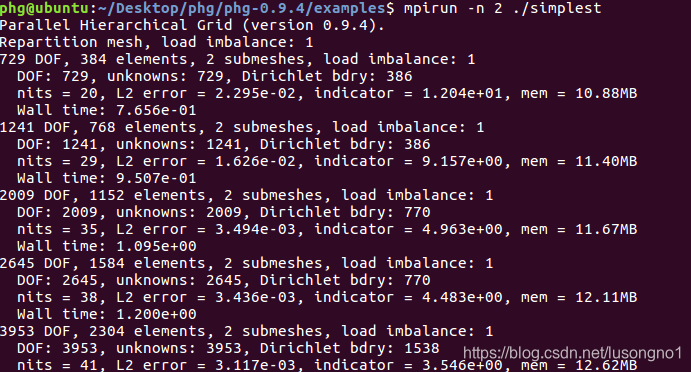

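- Test: run make in the example directory to produce the executables (shown in green), then try running them with mpirun or mpiexec. The test results are as follows: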
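The copy, cleanup, configure, and make steps from the list can be collected into one script. The sketch below defaults to a dry run that only prints each command, so it is safe to try first; run and phg_build_steps are hypothetical helper names, and the obj/mpich-x86_64/double_int path is the one used in this post, which may not match your hardware or MPI version.

```shell
#!/bin/sh
# DRY_RUN=1 (the default here) only prints each command; set DRY_RUN=0 to execute.
DRY_RUN=${DRY_RUN:-1}

run() {
    if [ "$DRY_RUN" = 1 ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# The steps from the list, to be run from the top-level PHG directory.
phg_build_steps() {
    run cp obj/mpich-x86_64/double_int/refine.o \
           obj/mpich-x86_64/double_int/coarsen.o src/
    run rm -f src/refine.c src/coarsen.c
    run ./configure
    run make
}

phg_build_steps
```

Running it with DRY_RUN=0 executes the same commands for real. The removal of src/libphg.* and the make install step are left out here and can be done by hand once the dry-run output looks right.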

Occam's razor is a good guide for getting this done.

Some other tips

1. If configure was not done properly, you may see an error like the following when testing:

error while loading shared libraries: libmpi.so.12: cannot open shared object file: No such file or directory

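This means the library file libmpi.so.12 cannot be found. If you search for a package providing it with apt-file search libmpi.so.12, the result is libopenmpi1.10, but installing that does not help; it treats the symptom, not the cause. Running locate libmpi.so.12 shows that the library is already present; PHG's configure simply failed to link against it. If you look carefully at the original configure output, you may find that MPI linking did not succeed, like this: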

checking whether we can include MPI headers ... no

checking whether we can link with MPI libraries ... no

configure: *** MPI support disabled

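If you do not want to keep the defaults and insist on linking MPI through configure, pass parameters to configure along the following lines. Even then, and even if the options themselves are correct, the MPI libraries and headers may still end up not being picked up by PHG.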

./configure --prefix=/usr/local/phg-0.9.4 --with-mpi-libdir=/usr/local/mpich-3.2.1/lib --with-mpi-incdir=/usr/local/mpich-3.2.1/include --with-mpi-lib="-lpmpich -lmpich -lpmpich -lmpich -lpthread -lrt" --enable-mpi

If you are not sure how to configure, try ./configure --help | grep mpi to list the MPI-related configure options.

2. When you want to reconfigure and simply rerunning configure does not work, unpack the package again and then run configure. Alternatively, run gmake confclean before running configure again.

3. You can use a command of the form ldd simplest to see which shared libraries an executable depends on.
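When the list printed by ldd is long, filtering it for unresolved entries points straight at the problem library. A minimal sketch follows; missing_shared_libs is a hypothetical helper name, and the sample input imitates the usual ldd line format rather than coming from a real run.

```shell
#!/bin/sh
# Hypothetical helper: read ldd output on stdin and print the names of
# shared libraries that the dynamic loader could not resolve.
missing_shared_libs() {
    awk '/=> not found/ { print $1 }'
}

# Sample ldd-style input; real use would be: ldd ./simplest | missing_shared_libs
printf '\tlibmpi.so.12 => not found\n\tlibm.so.6 => /lib/x86_64-linux-gnu/libm.so.6 (0x00007f0000000000)\n' \
    | missing_shared_libs
```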

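4. On using locate: when a file is missing, locate may still list it while ls does not. This is because locate is a file-path indexing tool whose database is usually updated once a day. You can also update it manually with updatedb.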

5. If you do not want to keep configure's defaults and prefer to set the paths yourself, use locate libmpi.so.12 to find your MPICH library files and locate mpi.h to find your MPI header files.
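The paths reported by locate map directly onto PHG's --with-mpi-incdir and --with-mpi-libdir options: each option takes the directory that contains the file. A small sketch of that mapping is shown here; mpi_configure_flags is a hypothetical helper name, and the example paths are assumptions to be replaced by what locate prints on your machine.

```shell
#!/bin/sh
# Hypothetical helper: turn the full paths of mpi.h and an MPI library into
# the corresponding --with-mpi-incdir / --with-mpi-libdir options.
mpi_configure_flags() {
    header=$1
    library=$2
    printf '%s %s\n' \
        "--with-mpi-incdir=$(dirname "$header")" \
        "--with-mpi-libdir=$(dirname "$library")"
}

# Example paths are assumptions; substitute what locate reports on your system.
mpi_configure_flags /usr/local/include/mpi.h /usr/local/lib/libmpi.so.12
```

With these example paths the helper prints --with-mpi-incdir=/usr/local/include --with-mpi-libdir=/usr/local/lib, which can be pasted onto the ./configure command line.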

6. Options like the following can be appended to configure to control how PHG is built:

--host=HOST cross-compile to build programs to run on HOST [BUILD]

--target=TARGET configure for building compilers for TARGET [HOST]

--with-rpath-flag=FLAG compiler flag for rpath (e.g., "-Wl,-rpath,")

--with-omp-flags=FLAGS compiler flags for OpenMP

--enable-mpi enable MPI support (default)

--disable-mpi disable MPI support

--with-mpi-libdir=DIR path for MPI libraries

--with-mpi-incdir=DIR path for MPI header files

--with-mpi-lib=SPEC MPI libraries (default '-lmpi' or '-lmpich')

--enable-mpiio enable MPI I/O

--disable-mpiio disable MPI I/O (default)

7. make uninstall reverses what make install did.

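Key points: remove every other pre-existing MPI component (lay down the butcher's knife), and keep everything as close to the defaults as possible (pick up Occam's razor).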

Appendix: Using PHG on a cluster

MPICH and PHG configuration

Copy the following into the .bashrc file in your home directory:

# .bashrc

# Source global definitions

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

# Uncomment the following line if you don't like systemctl's auto-paging feature:

# export SYSTEMD_PAGER=

# User specific aliases and functions

alias vi='vim'

alias rm='rm -i'

alias cp='cp -i'

alias mv='mv -i'

# MPI and compilers

#export MKLROOT="/share/soft/intel_2017/mkl"

export MV2_ENABLE_AFFINITY=0 # for MVAPICH2+OpenMP

#export OMP_PLACES=cores

#export OMP_PROC_BIND=spread

#----------- Intel compilers

. /share/soft/intel_2018_update1/bin/compilervars.sh intel64

. /share/soft/intel_2017/bin/compilervars.sh intel64

#----------- MPI

module purge

module load mvapich2

#module load openmpi

export PATH="/soft/Scripts:$PATH"

# phg

# export PHG_MAKEFILE_INC=/soft/phg/phg-0.9.3-mvapich2-20171209/share/phg/Makefile.inc

# export PHG_LIB_DIR=/share/soft/phg/phg-0.9.3-mvapich2-20171209/lib

export PHG_MAKEFILE_INC=/soft/phg/phg-0.9.4-mvapich2-20200413/share/phg/Makefile.inc

export PHG_LIB_DIR=/share/soft/phg/phg-0.9.4-mvapich2-20200413/lib

export PATH=${PATH}:/share/soft/phg/phg-0.9.4-mvapich2-20200413/bin

#myphg

if [ -f "/share/soft/intel_2018/bin/compilervars.sh" ]; then

. /share/soft/intel_2018/bin/compilervars.sh intel64

fi

The .bashrc file in your home directory is executed every time you log in or open a new shell window.

This .bashrc is the one I use myself to call the PHG installed on the system. The key parts are:

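- Load the required MPI module (MVAPICH2 here).

module load mvapich2

- Define environment variables as aliases for the inc file and the library path of the PHG version you want. The aliases let you write the variable instead of a long path in a Makefile, and when you switch PHG versions this is the only place you need to change.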

export PHG_MAKEFILE_INC=/soft/phg/phg-0.9.4-mvapich2-20200413/share/phg/Makefile.inc

export PHG_LIB_DIR=/share/soft/phg/phg-0.9.4-mvapich2-20200413/lib
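Since every later step depends on these two variables, a quick sanity check after logging in can save a confusing make error. This is a minimal sketch; check_phg_env is a hypothetical helper name, and it only tests that the variables are set and point at an existing file and directory.

```shell
#!/bin/sh
# Hypothetical helper: verify that the two PHG variables point at real paths.
check_phg_env() {
    status=0
    if [ -z "${PHG_MAKEFILE_INC:-}" ] || [ ! -f "$PHG_MAKEFILE_INC" ]; then
        echo "PHG_MAKEFILE_INC is unset or not a regular file"
        status=1
    fi
    if [ -z "${PHG_LIB_DIR:-}" ] || [ ! -d "$PHG_LIB_DIR" ]; then
        echo "PHG_LIB_DIR is unset or not a directory"
        status=1
    fi
    return "$status"
}

if check_phg_env; then
    echo "PHG environment looks usable"
fi
```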

You may wonder what this inc file is for, and why no alias is defined for the header path, for example the location of phg.h.

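Organizing PHG builds with make is convenient. Makefile.inc, as the name suggests, is the file you include when writing a Makefile. It contains the compile options, including the header search paths (for phg.h and others) and make's implicit rules. It looks roughly like this: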

CPP = /share/soft/mvapich2-2.3.3_icc/bin/mpicc -E

CPPFLAGS = -I/share/soft/phg/phg-0.9.4-mvapich2-20200413/include -I/share/soft/phg/mvapich2/include -I/share/soft/phg/mvapich2/hypre-2.11.2/include -I/share/soft/phg/mvapich2/include/superlu_dist -D__PHG__

BUILD_CPPFLAGS = -DMPI_NO_CPPBIND -DVTK_DIR="\"\"" -DGZIP_PROG="\"/usr/bin/gzip\"" -DBZIP2_PROG="\"/usr/bin/bzip2\""

CC = /share/soft/mvapich2-2.3.3_icc/bin/mpicc

CFLAGS = -g -O2 -w -fopenmp

BUILD_CFLAGS = -fPIC

USER_CFLAGS =

CXX = /share/soft/mvapich2-2.3.3_icc/bin/mpicxx

CXXFLAGS = -g -O2 -w -DMPICH_IGNORE_CXX_SEEK -DMPICH_SKIP_MPICXX -fopenmp

BUILD_CXXFLAGS = -fPIC

USER_CXXFLAGS =

FC = /share/soft/mvapich2-2.3.3_icc/bin/mpif90

FCFLAGS = -g

USER_FCFLAGS =

F77 = /share/soft/mvapich2-2.3.3_icc/bin/mpif77

FFLAGS = -g

USER_FFLAGS =

LINKER = /share/soft/mvapich2-2.3.3_icc/bin/mpicxx

LDFLAGS = -L/share/soft/phg/phg-0.9.4-mvapich2-20200413/lib -L/share/soft/phg/mvapich2/lib -Wl,-rpath,/share/soft/phg/mvapich2/lib -Wl,-rpath,/soft/phg/mpich/petsc-3.8.2/lib -fopenmp -L/share/soft/phg/mvapich2/hypre-2.11.2/lib

USER_LDFLAGS =

LIBS = -Wl,-rpath,/share/soft/phg/phg-0.9.4-mvapich2-20200413/lib -lphg -lmpfr -lgmp -lBLOPEX -lparpack -lminres -lsmumps -ldmumps -lmumps_common -lpord -lptesmumps -lptscotch -lptscotcherrexit -lesmumps -lscotch -lscotcherrexit -lmkl_scalapack_lp64 -lmkl_blacs_intelmpi_lp64 -lsuperlu_dist -Wl,-rpath,/share/soft/phg/mvapich2/hypre-2.11.2/lib -lHYPRE -lparmetis -lmetis -lGKlib -lmkl_lapack95_lp64 -lmkl_core -lmkl_intel_lp64 -lmkl_intel_thread -liomp5 -lm -L/share/soft/phg/mvapich2/lib -L/soft/mvapich2-2.3.3_icc/lib -L/share/soft/intel_2018_update1/compilers_and_libraries_2018.1.163/linux/ipp/lib/intel64 -L/share/soft/intel_2018_update1/compilers_and_libraries_2018.1.163/linux/compiler/lib/intel64_lin -L/share/soft/intel_2018_update1/compilers_and_libraries_2018.1.163/linux/mkl/lib/intel64_lin -L/share/soft/intel_2018_update1/compilers_and_libraries_2018.1.163/linux/tbb/lib/intel64/gcc4.7 -L/share/soft/intel_2018_update1/compilers_and_libraries_2018.1.163/linux/daal/lib/intel64_lin -L/share/soft/intel_2018_update1/compilers_and_libraries_2018.1.163/linux/daal/../tbb/lib/intel64_lin/gcc4.4 -L/soft/intel_2018_update1/compilers_and_libraries_2018.1.163/linux/compiler/lib/intel64_lin -L/usr/lib/gcc/x86_64-redhat-linux/4.8.5/ -L/usr/lib/gcc/x86_64-redhat-linux/4.8.5/../../../../lib64 -L/usr/lib/gcc/x86_64-redhat-linux/4.8.5/../../../../lib64/ -L/lib/../lib64 -L/lib/../lib64/ -L/usr/lib/../lib64 -L/usr/lib/../lib64/ -L/share/soft/intel_2018_update1/compilers_and_libraries_2018.1.163/linux/ipp/lib/intel64/ -L/share/soft/intel_2018_update1/compilers_and_libraries_2018.1.163/linux/compiler/lib/intel64_lin/ -L/share/soft/intel_2018_update1/compilers_and_libraries_2018.1.163/linux/mkl/lib/intel64_lin/ -L/share/soft/intel_2018_update1/compilers_and_libraries_2018.1.163/linux/tbb/lib/intel64/gcc4.7/ -L/share/soft/intel_2018_update1/compilers_and_libraries_2018.1.163/linux/daal/lib/intel64_lin/ 
-L/share/soft/intel_2018_update1/compilers_and_libraries_2018.1.163/linux/daal/../tbb/lib/intel64_lin/gcc4.4/ -L/usr/lib/gcc/x86_64-redhat-linux/4.8.5/../../../ -L/lib64 -L/lib/ -L/usr/lib64 -L/usr/lib -liomp5 -limf -lm -lmpifort -lmpi -lifport -lifcoremt -lsvml -lipgo -lirc -lpthread -lirc_s -ldl -liomp5 -limf -lm -lmpifort -lmpi -lifport -lifcoremt -lsvml -lipgo -lirc -lpthread -lirc_s -ldl -Wl,-rpath,/usr/local/lib -Wl,-rpath,/usr/local/lib64

USER_LIBS =

AR = /usr/bin/ar

RANLIB = /usr/bin/ranlib

BUILD_SHARED = gcc -shared

BUILD_SHARED_LIBS =

LIB_SUFFIX = .so

.c.o:

${CC} ${CFLAGS} ${CPPFLAGS} ${USER_CFLAGS} -c $*.c

.cxx.o:

${CXX} ${CXXFLAGS} ${CPPFLAGS} ${USER_CXXFLAGS} -c $*.cxx

.f.o:

${FC} ${FCFLAGS} ${USER_FCFLAGS} -c $*.f

% : %.o

${LINKER} ${USER_LDFLAGS} ${LDFLAGS} -o $@ $*.o ${USER_LIBS} ${LIBS}

% : %.c

${CC} ${CFLAGS} ${CPPFLAGS} ${USER_CFLAGS} -c $*.c

${LINKER} ${USER_LDFLAGS} ${LDFLAGS} -o $@ $*.o ${USER_LIBS} ${LIBS}

% : %.cxx

${CXX} ${CXXFLAGS} ${CPPFLAGS} ${USER_CXXFLAGS} -c $*.cxx

${CXX} ${USER_LDFLAGS} ${LDFLAGS} -o $@ $*.o ${USER_LIBS} ${LIBS}

% : %.f

${FC} ${FCFLAGS} ${USER_FCFLAGS} -c $*.f

${LINKER} ${USER_LDFLAGS} ${LDFLAGS} -o $@ $*.o ${USER_LIBS} ${LIBS}

CPPFLAGS:

@echo $(CPPFLAGS)

CFLAGS:

@echo $(CFLAGS)

CXXFLAGS:

@echo $(CXXFLAGS)

LDFLAGS:

@echo $(LDFLAGS)

LIBS:

@echo $(LIBS)

Example program

To save effort, copy an example program from the installation package, for example simplest.c.

/* Parallel Hierarchical Grid -- an adaptive finite element library.

*

* Copyright (C) 2005-2010 State Key Laboratory of Scientific and

* Engineering Computing, Chinese Academy of Sciences. */

/* This library is free software; you can redistribute it and/or

* modify it under the terms of the GNU Lesser General Public

* License as published by the Free Software Foundation; either

* version 2.1 of the License, or (at your option) any later version.

*

* This library is distributed in the hope that it will be useful,

* but WITHOUT ANY WARRANTY; without even the implied warranty of

* MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU

* Lesser General Public License for more details.

*

* You should have received a copy of the GNU Lesser General Public

* License along with this library; if not, write to the Free Software

* Foundation, Inc., 51 Franklin Street, Fifth Floor, Boston,

* MA 02110-1301 USA */

/* $Id: simplest.c,v 1.116 2014/06/16 03:12:52 zlb Exp $

*

* This sample code solves the Helmholtz equation:

* -\Delta u + a u = f

* with Dirichlet or periodic boundary conditions */

#include "phg.h"

#if (PHG_VERSION_MAJOR <= 0 && PHG_VERSION_MINOR < 9)

# undef ELEMENT

typedef SIMPLEX ELEMENT;

#endif

#include <string.h>

#include <math.h>

static FLOAT a = 1.0;

static void

func_u(FLOAT x, FLOAT y, FLOAT z, FLOAT *value)

{

*value = Cos(2. * M_PI * x) * Cos(2. * M_PI * y) * Cos(2. * M_PI * z);

}

static void

func_f(FLOAT x, FLOAT y, FLOAT z, FLOAT *value)

{

func_u(x, y, z, value);

*value = 12. * M_PI * M_PI * *value + a * *value;

}

static void

build_linear_system(SOLVER *solver, DOF *u_h, DOF *f_h)

{

int i, j;

GRID *g = u_h->g;

ELEMENT *e;

assert(u_h->dim == 1);

ForAllElements(g, e) {

int N = DofGetNBas(u_h, e); /* number of bases in the element */

FLOAT A[N][N], rhs[N], buffer[N];

INT I[N];

/* compute \int \grad\phi_j \cdot \grad\phi_i making use of symmetry */

for (i = 0; i < N; i++) {

I[i] = phgSolverMapE2L(solver, 0, e, i);

for (j = 0; j <= i; j++) {

A[j][i] = A[i][j] =

/* stiffness */

phgQuadGradBasDotGradBas(e, u_h, j, u_h, i, QUAD_DEFAULT) +

/* mass */

a * phgQuadBasDotBas(e, u_h, j, u_h, i, QUAD_DEFAULT);

}

}

/* loop on basis functions */

for (i = 0; i < N; i++) {

if (phgDofDirichletBC(u_h, e, i, func_u, buffer, rhs+i,

DOF_PROJ_NONE)) {

phgSolverAddMatrixEntries(solver, 1, I + i, N, I, buffer);

}

else { /* interior node */

/* right hand side = \int f * phi_i */

phgQuadDofTimesBas(e, f_h, u_h, i, QUAD_DEFAULT, rhs + i);

phgSolverAddMatrixEntries(solver, 1, I + i, N, I, A[i]);

}

}

phgSolverAddRHSEntries(solver, N, I, rhs);

}

}

static void

estimate_error(DOF *u_h, DOF *f_h, DOF *grad_u, DOF *error)

/* compute H1 error indicator */

{

GRID *g = u_h->g;

ELEMENT *e;

DOF *jump, *residual, *tmp;

jump = phgQuadFaceJump(grad_u, DOF_PROJ_DOT, NULL, QUAD_DEFAULT);

tmp = phgDofDivergence(grad_u, NULL, NULL, NULL);

residual = phgDofGetSameOrderDG(u_h, -1, NULL);

phgDofCopy(f_h, &residual, NULL, NULL);

phgDofAXPY(-a, u_h, &residual);

phgDofAXPY(1.0, tmp, &residual);

phgDofFree(&tmp);

ForAllElements(g, e) {

int i;

FLOAT eta, h;

FLOAT diam = phgGeomGetDiameter(g, e);

eta = 0.0;

/* for each face F compute [grad_u \cdot n] */

for (i = 0; i < NFace; i++) {

if (e->bound_type[i] & (DIRICHLET | NEUMANN))

continue; /* boundary face */

h = phgGeomGetFaceDiameter(g, e, i);

eta += *DofFaceData(jump, e->faces[i]) * h;

}

eta = eta * .5 + diam * diam * phgQuadDofDotDof(e, residual, residual,

QUAD_DEFAULT);

*DofElementData(error, e->index) = eta;

}

phgDofFree(&jump);

phgDofFree(&residual);

return;

}

int

main(int argc, char *argv[])

{

INT periodicity = 0 /* X_MASK | Y_MASK | Z_MASK */;

INT mem_max = 400;

FLOAT tol = 5e-1;

char *fn = "../test/cube4.dat";

GRID *g;

ELEMENT *e;

DOF *u_h, *f_h, *grad_u, *error, *u;

SOLVER *solver;

FLOAT L2error, indicator;

size_t mem_peak;

phgOptionsRegisterFloat("a", "Coefficient", &a);

phgOptionsRegisterFloat("tol", "Tolerance", &tol);

phgOptionsRegisterInt("mem_max", "Maximum memory (MB)", &mem_max);

phgOptionsRegisterInt("periodicity", "Set periodicity", &periodicity);

phgInit(&argc, &argv);

g = phgNewGrid(-1);

phgSetPeriodicity(g, periodicity);

if (!phgImport(g, fn, FALSE))

phgError(1, "can't read file \"%s\".\n", fn);

/* The discrete solution */

if (FALSE) {

/* u_h is h-p type */

HP_TYPE *hp = phgHPNew(g, HP_HB);

ForAllElements(g, e)

e->hp_order = DOF_DEFAULT->order + GlobalElement(g, e->index) % 3;

phgHPSetup(hp, FALSE);

u_h = phgHPDofNew(g, hp, 1, "u_h", DofInterpolation);

phgHPFree(&hp);

}

else {

/* u_h is non h-p type */

u_h = phgDofNew(g, DOF_DEFAULT, 1, "u_h", DofInterpolation);

}

phgDofSetDataByValue(u_h, 0.0);

/* RHS function */

f_h = phgDofNew(g, DOF_DEFAULT, 1, "f_h", func_f);

/* DOF for storing a posteriori error estimates */

error = phgDofNew(g, DOF_P0, 1, "error indicator", DofNoAction);

/* The analytic solution */

u = phgDofNew(g, DOF_ANALYTIC, 1, "u", func_u);

while (TRUE) {

double t0 = phgGetTime(NULL);

if (phgBalanceGrid(g, 1.2, 1, NULL, 0.))

phgPrintf("Repartition mesh, load imbalance: %lg\n",

(double)g->lif);

phgPrintf("%"dFMT" DOF, %"dFMT" elements, %"dFMT

" submeshes, load imbalance: %lg\n",

DofGetDataCountGlobal(u_h), g->nleaf_global, g->nprocs,

(double)g->lif);

solver = phgSolverCreate(SOLVER_DEFAULT, u_h, NULL);

phgPrintf(" DOF: %"dFMT", unknowns: %"dFMT

", Dirichlet bdry: %"dFMT"\n",

DofGetDataCountGlobal(u_h), solver->rhs->map->nglobal,

solver->rhs->map->bdry_nglobal);

build_linear_system(solver, u_h, f_h);

phgSolverSolve(solver, TRUE, u_h, NULL);

phgPrintf(" nits = %d, ", solver->nits);

phgSolverDestroy(&solver);

grad_u = phgDofGradient(u_h, NULL, NULL, NULL);

estimate_error(u_h, f_h, grad_u, error);

indicator = Sqrt(phgDofNormL1Vec(error));

phgDofFree(&grad_u);

grad_u = phgDofCopy(u_h, NULL, NULL, NULL);

phgDofAXPY(-1.0, u, &grad_u);

L2error = phgDofNormL2(grad_u);

phgDofFree(&grad_u);

phgMemoryUsage(g, &mem_peak);

phgPrintf("L2 error = %0.3le, indicator = %0.3le, mem = %0.2lfMB\n",

(double)L2error, (double)indicator,

(double)mem_peak / (1024.0 * 1024.0));

phgPrintf(" Wall time: %0.3le\n", phgGetTime(NULL) - t0);

if (indicator < tol || mem_peak >= 1024 * (size_t)mem_max * 1024)

break;

phgMarkRefine(MARK_DEFAULT, error, Pow(0.8,2), NULL, 0., 1,

Pow(tol, 2) / g->nleaf_global);

phgRefineMarkedElements(g);

}

#if 0

phgPrintf("Final mesh written to \"%s\".\n",

phgExportDX(g, "simplest.dx", u_h, error, NULL));

#elif 0

phgPrintf("Final mesh written to \"%s\".\n",

phgExportVTK(g, "simplest.vtk", u_h, error, NULL));

#endif

phgDofFree(&u);

phgDofFree(&u_h);

phgDofFree(&f_h);

phgDofFree(&error);

phgFreeGrid(&g);

phgFinalize();

return 0;

}

Writing the Makefile

Write a minimal Makefile to compile the example program.

include ${PHG_MAKEFILE_INC}

simplest.o: ${PHG_LIB_DIR}/libphg${LIB_SUFFIX} simplest.c

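Here include ${PHG_MAKEFILE_INC} pulls in Makefile.inc, which supplies the header directories and the rules for compiling and linking the executable. The rule then names PHG's shared library libphg.so, located through the library-path environment variable, together with the source file as prerequisites, so that the executable can be built.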
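One detail worth guarding against: the Makefile.inc listed earlier defines explicit targets of its own (CPPFLAGS, CFLAGS, and so on), so with the include on the first line, plain make may pick one of those as its default goal instead of building simplest. The sketch below writes a variant of the Makefile that declares a default target before the include. The default: simplest line is an addition that is not in the original Makefile, and write_simplest_makefile is a hypothetical helper name; running make simplest with the original two-line Makefile is the alternative.

```shell
#!/bin/sh
# Hypothetical helper: write a small Makefile for simplest.c into the given directory.
write_simplest_makefile() {
    cat > "$1/Makefile" <<'EOF'
# Declared before the include so that plain "make" builds the executable.
default: simplest

include ${PHG_MAKEFILE_INC}

simplest.o: ${PHG_LIB_DIR}/libphg${LIB_SUFFIX} simplest.c
EOF
}

# Demonstration in a scratch directory; nothing is compiled here.
scratch=$(mktemp -d)
write_simplest_makefile "$scratch"
cat "$scratch/Makefile"
rm -rf "$scratch"
```

The here-document is quoted, so the ${...} references reach the Makefile unchanged and are expanded by make from the environment, not by the shell.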

Running

mpirun -n 2 ./simplest

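The example program does not seem to be included in the cluster's installation, but it can be downloaded from the PHG website. Note that if you copy the whole archive to the cluster, there is no need to build it (configure and make): the cluster's existing installation already contains the built files. If you build it anyway and then use the Makefile that ships with the archive, you will be using the files produced from your downloaded package, not the cluster's PHG.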

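This article describes in detail the steps and caveats for installing PHG, the parallel program development platform developed by the State Key Laboratory of Scientific and Engineering Computing, on Ubuntu, including removing existing MPI components, installing MPICH and LAPACK, and configuring, building, and testing PHG.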
