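Overview: this article walks through setting up a basic Hive environment and then uses it to count word frequencies in Samuel Ullman's "Youth".

1. Download a Hive release that matches your HDFS (Hadoop) version and extract it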

http://archive.cloudera.com/cdh5/cdh/5/hadoop-2.6.0-cdh5.7.0.tar.gz

http://archive.cloudera.com/cdh5/cdh/5/hive-1.1.0-cdh5.7.0.tar.gz

# Note: the trailing CDH version suffix of the two packages should match
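The suffix rule above can be checked mechanically before extracting anything. The following is a minimal sketch, assuming the tarballs keep their original file names; `cdh_suffix` is a hypothetical helper name, not part of Hadoop or Hive.

```shell
# Hypothetical helper: print the trailing CDH suffix (e.g. cdh5.7.0) of a tarball name.
cdh_suffix() {
    name=$(basename "$1" .tar.gz)   # strip directory and .tar.gz
    printf '%s\n' "${name##*-}"     # keep everything after the last '-'
}

# File names taken from the two download URLs above.
hadoop_pkg="hadoop-2.6.0-cdh5.7.0.tar.gz"
hive_pkg="hive-1.1.0-cdh5.7.0.tar.gz"

if [ "$(cdh_suffix "$hadoop_pkg")" = "$(cdh_suffix "$hive_pkg")" ]; then
    echo "suffixes match: $(cdh_suffix "$hive_pkg")"
else
    echo "suffix mismatch: $(cdh_suffix "$hadoop_pkg") vs $(cdh_suffix "$hive_pkg")" >&2
fi
```

Running the same comparison against the file names actually present under software/ is a cheap way to catch a mismatched download early.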

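2. Install MySQL

The steps are: download and extract MySQL, rename the directory, create the user and group, create the configuration file, initialize the database, and change the username and password.

3. Install the JDK and Hive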

tar -zxvf software/jdk-8u201-linux-x64.tar.gz -C app/

tar -zxvf software/hive-1.1.0-cdh5.10.0.tar.gz -C app/

4. Configure environment variables

vi .bash_profile

# All variables are listed below

export PATH

export HIVE_HOME=/root/app/hive-1.1.0-cdh5.10.0

export PATH=$HIVE_HOME/bin:$PATH

export JAVA_HOME=/root/app/jdk1.8.0_201

export PATH=$JAVA_HOME/bin:$PATH

export HADOOP_HOME=/root/app/hadoop-2.6.0-cdh5.7.0

export PATH=$HADOOP_HOME/bin:$PATH

# Apply the environment variables to the current shell

source .bash_profile

# Check the environment variables

echo $HIVE_HOME
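Echoing a single variable only confirms that it is set. A slightly fuller check, sketched below, also confirms that each of the three home variables points to an existing directory; `check_home` is a hypothetical helper name introduced here for illustration.

```shell
# Hypothetical helper: succeed only if the named variable is set and is a directory.
check_home() {
    eval "dir=\${$1:-}"             # indirect lookup of the variable named in $1
    if [ -z "$dir" ]; then
        echo "$1 is not set" >&2
        return 1
    fi
    if [ ! -d "$dir" ]; then
        echo "$1=$dir is not a directory" >&2
        return 1
    fi
    echo "$1=$dir"
}

# Report on all three variables without aborting on the first failure.
for var in JAVA_HOME HADOOP_HOME HIVE_HOME; do
    check_home "$var" || true
done
```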

5. Configure Hive

# Copy the MySQL driver JAR into Hive's lib directory

cp software/mysql-connector-java-5.1.47-bin.jar app/hive-1.1.0-cdh5.10.0/lib

cd app/hive-1.1.0-cdh5.10.0/conf/

cp hive-env.sh.template hive-env.sh

vi hive-env.sh

# Add the following line

HADOOP_HOME=/root/app/hadoop-2.6.0-cdh5.7.0

Create the file hive-site.xml with the following content:

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/sparksql?createDatabaseIfNotExist=true</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

<description>username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>root</value>

<description>password to use against metastore database</description>

</property>

</configuration>
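A typo in a property name is easy to miss in hand-written XML. As a rough sanity check, the sketch below greps the file for the three metastore connection properties used above; `check_hive_site` is a hypothetical helper name, and the file path is assumed from the earlier steps.

```shell
# Hypothetical helper: report which metastore connection properties the file defines.
# Returns non-zero if any of the three is missing.
check_hive_site() {
    missing=0
    for prop in ConnectionURL ConnectionUserName ConnectionPassword; do
        if grep -q "<name>javax.jdo.option.$prop</name>" "$1"; then
            echo "found:   $prop"
        else
            echo "missing: $prop"
            missing=1
        fi
    done
    return "$missing"
}

# Path assumed from the steps above; adjust it if Hive lives elsewhere.
site=/root/app/hive-1.1.0-cdh5.10.0/conf/hive-site.xml
if [ -f "$site" ]; then
    check_hive_site "$site" || true
fi
```

This only checks that the property names are present; it does not validate the XML or the values.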

6. Start Hive

cd /root/app/hive-1.1.0-cdh5.10.0/bin

./hive

# Check that the metastore database and its tables were created

mysql -uroot -proot

show databases;

use sparksql;

show tables;

7. Basic Hive usage

(1) Create a table

#hive

create table hive_wordcount(context string);

# mysql: view the table and columns just created

SELECT * FROM TBLS;

SELECT * FROM COLUMNS_V2;

(2) Load data into the Hive table

#hive

LOAD DATA LOCAL INPATH '/root/app/hadoop-2.6.0-cdh5.7.0/bin/Youth.txt' INTO TABLE hive_wordcount;

SELECT * FROM hive_wordcount;

(3) Word frequency count

SELECT word,count(1) from hive_wordcount lateral view explode(split(context,' ')) wc as word group by word;

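# Here, split(context,' ') breaks each row into an array of words on spaces, and lateral view explode turns that array into one row per word, which group by then counts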
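To cross-check the query's output locally, the same split-and-count logic can be sketched in shell. This only mimics the logic of the query and is not how Hive executes it; `word_count` is a hypothetical helper name. Like split(context,' '), it splits on every single space, so consecutive spaces and blank lines produce an empty word.

```shell
# Hypothetical helper: count words in a text file, one "word<TAB>count" line per word.
word_count() {
    # Put each space-separated token on its own line (the "explode" step),
    # then count identical tokens (the "group by word" step).
    tr ' ' '\n' < "$1" |
        awk '{ count[$0]++ } END { for (w in count) printf "%s\t%d\n", w, count[w] }' |
        sort
}

# Same input file as the LOAD DATA statement above; skipped if it is not present.
youth=/root/app/hadoop-2.6.0-cdh5.7.0/bin/Youth.txt
if [ -f "$youth" ]; then
    word_count "$youth"
fi
```

The output is sorted by word, so it may need reordering before comparing it with Hive's result.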

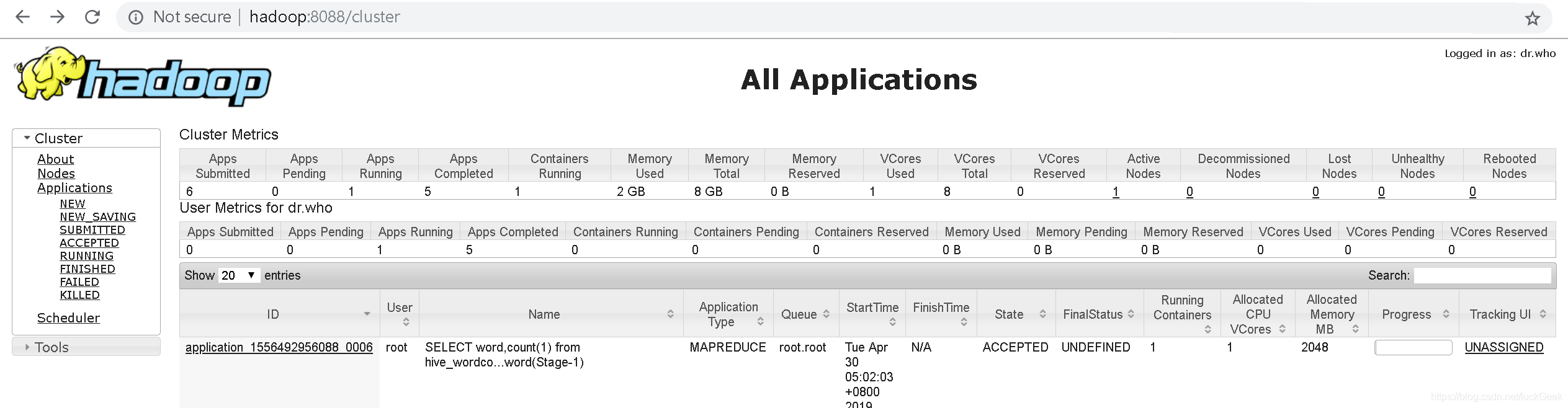

Job submission status:

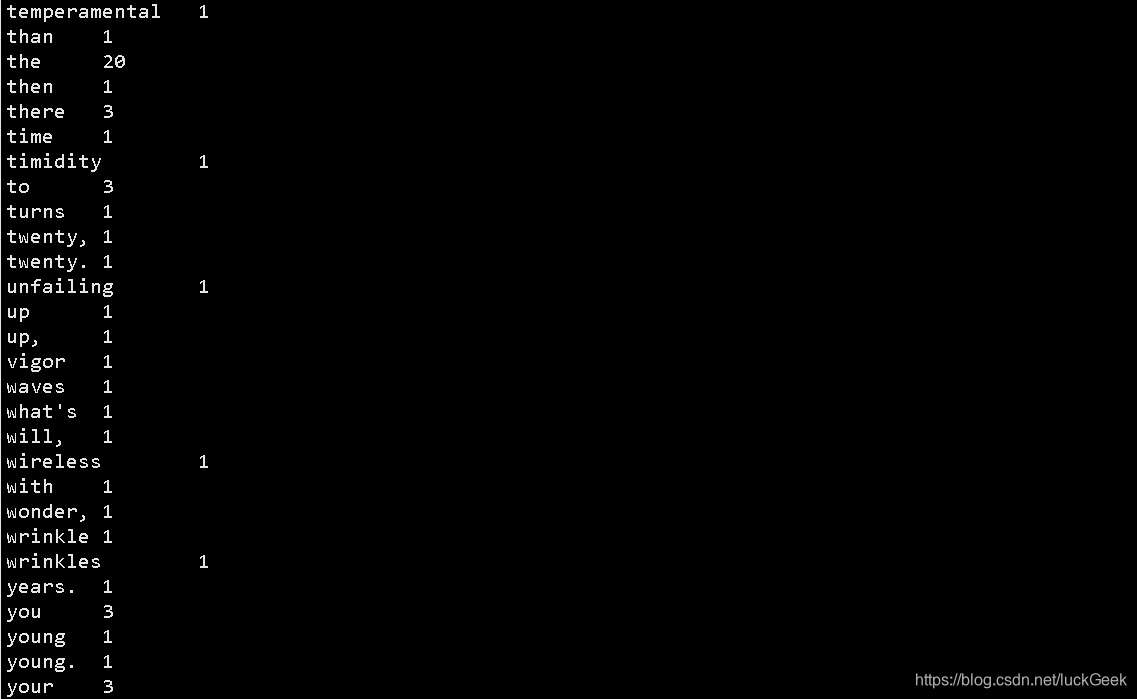

Partial results:
