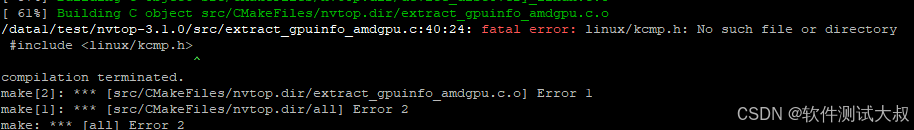

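While installing nvtop, running make failed with the error shown above.

My machine has an NVIDIA graphics card, so AMD GPU support is not needed.

The fix was to disable the AMD-related option when configuring the build:

cmake .. -DNVIDIA_SUPPORT=ON -DAMDGPU_SUPPORT=OFF -DINTEL_SUPPORT=ON

Running make again after that succeeds.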
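The reconfigure-and-rebuild steps can be wrapped in a small script. This is only a sketch: the helper names `nvtop_cmake_flags` and `rebuild_nvtop`, the `build` directory name, and the step that deletes the old `CMakeCache.txt` are assumptions for illustration, not part of the original fix. The three `-D` flags are the ones used above.

```shell
# Flags from the post: keep NVIDIA and Intel support, turn AMDGPU support off.
nvtop_cmake_flags() {
    printf '%s\n' "-DNVIDIA_SUPPORT=ON -DAMDGPU_SUPPORT=OFF -DINTEL_SUPPORT=ON"
}

# Hypothetical helper: reconfigure and rebuild nvtop.
# $1 is the path to an already downloaded nvtop source tree (assumed).
rebuild_nvtop() (
    # The body runs in a subshell, so the caller's working directory is unchanged.
    [ -n "$1" ] || { echo "usage: rebuild_nvtop <nvtop-source-dir>" >&2; exit 1; }
    build_dir="$1/build"
    mkdir -p "$build_dir"
    cd "$build_dir" || exit 1
    # Assumption: drop the cached configuration so the new options take effect cleanly.
    rm -f CMakeCache.txt
    # The flags are left unquoted on purpose so they split into separate arguments.
    cmake .. $(nvtop_cmake_flags) && make
)
```

Calling `rebuild_nvtop ~/nvtop` (the path is an example) would then configure and build in `~/nvtop/build`.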

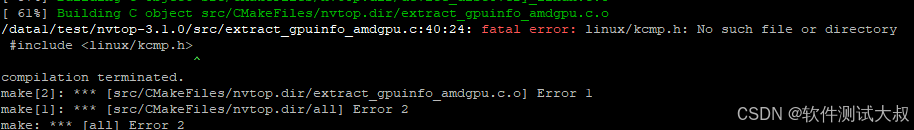
