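storm-redis provides the most basic Bolt implementations: RedisLookupBolt for looking up data, RedisStoreBolt for storing data, and RedisFilterBolt for filtering tuples based on a Redis query.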
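The Redis example below uses the HASH data type. For that type, the three bolts map onto Redis hash commands roughly as follows. This is based on my reading of the storm-redis sources, so treat it as an assumption:

- RedisStoreBolt writes with `HSET <hashKey> <field> <value>`.
- RedisLookupBolt reads with `HGET`.
- RedisFilterBolt keeps a tuple only if `HEXISTS` returns true.

The stdlib-only sketch models those three operations with an in-memory map. `RedisHashModel` is a hypothetical illustration class, not part of storm-redis.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical in-memory stand-in for Redis hashes, for illustration only.
// Each method mirrors the command a storm-redis bolt is assumed to issue for HASH.
class RedisHashModel {
    private final Map<String, Map<String, String>> hashes = new HashMap<>();

    // RedisStoreBolt: HSET <hashKey> <field> <value> (overwrites an existing field)
    void hset(String hashKey, String field, String value) {
        hashes.computeIfAbsent(hashKey, k -> new HashMap<>()).put(field, value);
    }

    // RedisLookupBolt: HGET <hashKey> <field>, null when the field is absent
    String hget(String hashKey, String field) {
        Map<String, String> hash = hashes.get(hashKey);
        return hash == null ? null : hash.get(field);
    }

    // RedisFilterBolt: a tuple passes only if HEXISTS <hashKey> <field> is true
    boolean hexists(String hashKey, String field) {
        Map<String, String> hash = hashes.get(hashKey);
        return hash != null && hash.containsKey(field);
    }

    public static void main(String[] args) {
        RedisHashModel redis = new RedisHashModel();
        redis.hset("wc", "apple", "1");
        redis.hset("wc", "apple", "2");                      // a later running total replaces the earlier one
        System.out.println(redis.hget("wc", "apple"));       // prints 2
        System.out.println(redis.hexists("wc", "banana"));   // prints false
    }
}
```

Because `HSET` overwrites, a hash fed with running totals always holds the latest count for each word.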

```xml
<dependency>
    <groupId>org.apache.storm</groupId>
    <artifactId>storm-redis</artifactId>
    <version>${storm.version}</version>
</dependency>
```

package com.ldz.bigdata.intergration.redis;

import org.apache.commons.io.FileUtils;

import org.apache.storm.Config;

import org.apache.storm.LocalCluster;

import org.apache.storm.redis.bolt.RedisStoreBolt;

import org.apache.storm.redis.common.config.JedisPoolConfig;

import org.apache.storm.redis.common.mapper.RedisDataTypeDescription;

import org.apache.storm.redis.common.mapper.RedisStoreMapper;

import org.apache.storm.spout.SpoutOutputCollector;

import org.apache.storm.task.OutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.TopologyBuilder;

import org.apache.storm.topology.base.BaseRichBolt;

import org.apache.storm.topology.base.BaseRichSpout;

import org.apache.storm.tuple.Fields;

import org.apache.storm.tuple.ITuple;

import org.apache.storm.tuple.Tuple;

import org.apache.storm.tuple.Values;

import org.apache.storm.utils.Utils;

import java.io.File;

import java.io.IOException;

import java.util.*;

/**

* @Author: Dazhou Li

* @Description:使用Storm完成词频统计功能

* @CreateDate: 2019/2/8 0008 12:07

*/

public class LocalWordCountRedisStormTopology {

public static class DataSourceSpout extends BaseRichSpout {

private SpoutOutputCollector collector;

public static final String[] words = new String[]{"apple", "oranage", "banana", "pineapple", "water"};

@Override

public void open(Map map, TopologyContext topologyContext, SpoutOutputCollector collector) {

this.collector = collector;

}

@Override

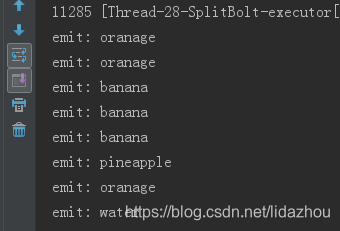

public void nextTuple() {

Random random = new Random();

String word = words[random.nextInt(words.length)];

this.collector.emit(new Values(word));

System.out.println("emit: " + word);

Utils.sleep(1000);

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("line"));

}

}

/**

* 对数据进行分割

*/

public static class SplitBolt extends BaseRichBolt {

private OutputCollector collector;

@Override

public void prepare(Map map, TopologyContext topologyContext, OutputCollector collector) {

this.collector = collector;

}

@Override

public void execute(Tuple input) {

String word = input.getStringByField("line");

this.collector.emit(new Values(word));

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("word"));

}

}

/**

* 词频汇总Bolt

*/

public static class CountBolt extends BaseRichBolt {

private OutputCollector collector;

@Override

public void prepare(Map map, TopologyContext topologyContext, OutputCollector collector) {

this.collector = collector;

}

Map<String, Integer> map = new HashMap<>();

/**

* 业务逻辑:

* 1)获取每个单词

* 2)对所有单词进行汇总

* 3)输出

*/

@Override

public void execute(Tuple tuple) {

//1)获取每个单词

String word = tuple.getStringByField("word");

Integer count = map.get(word);

if (count == null) {

count = 0;

}

//2)对所有单词进行汇总

count++;

map.put(word, count);

//3)输出

this.collector.emit(new Values(word, map.get(word)));

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("word", "count"));

}

}

public static class WordCountStoreMapper implements RedisStoreMapper {

private RedisDataTypeDescription description;

private final String hashKey = "wc";

public WordCountStoreMapper() {

description = new RedisDataTypeDescription(

RedisDataTypeDescription.RedisDataType.HASH, hashKey);

}

@Override

public RedisDataTypeDescription getDataTypeDescription() {

return description;

}

@Override

public String getKeyFromTuple(ITuple tuple) {

return tuple.getIntegerByField("word") + "";

}

@Override

public String getValueFromTuple(ITuple iTuple) {

return null;

}

}

public static void main(String[] args) {

// 通过TopologyBuilder根据Spout和Bolt构建Topology

TopologyBuilder builder = new TopologyBuilder();

builder.setSpout("DataSourceSpout", new DataSourceSpout());

builder.setBolt("SplitBolt", new SplitBolt()).shuffleGrouping("DataSourceSpout");

builder.setBolt("CountBolt", new CountBolt()).shuffleGrouping("SplitBolt");

JedisPoolConfig poolConfig=new JedisPoolConfig.Builder()

.setHost("172.20.21.100").setPort(6379).build();

RedisStoreMapper storeMapper = new WordCountStoreMapper();

RedisStoreBolt storeBolt = new RedisStoreBolt(poolConfig, storeMapper);

builder.setBolt("RedisStoreBolt",storeBolt).shuffleGrouping("CountBolt");

// 创建本地集群

LocalCluster cluster = new LocalCluster();

cluster.submitTopology("LocalWordCountRedisStormTopology", new Config(), builder.createTopology());

}

}
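A note on grouping: CountBolt keeps its totals in a HashMap that belongs to a single task. With the default parallelism of 1, any grouping gives correct counts. Once CountBolt runs as several tasks, `shuffleGrouping` would scatter one word's tuples across tasks, and each task would hold only a partial count. `fieldsGrouping("SplitBolt", new Fields("word"))` instead routes every tuple with the same word to the same task.

The stdlib-only sketch below illustrates that property with a hypothetical `FieldsGroupingModel` class. Its `hashCode`-modulo routing is a simplification, not Storm's exact implementation.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, simplified model of fields grouping: the target task is derived
// from a hash of the grouping field. Storm's real hash differs; only the
// "same word -> same task" property matters here.
class FieldsGroupingModel {

    // Pick the task index for a word
    static int chooseTask(String word, int numTasks) {
        return Math.floorMod(word.hashCode(), numTasks);
    }

    // Each task owns a private HashMap, like CountBolt's per-task map
    static List<Map<String, Integer>> countPerTask(String[] words, int numTasks) {
        List<Map<String, Integer>> tasks = new ArrayList<>();
        for (int i = 0; i < numTasks; i++) {
            tasks.add(new HashMap<>());
        }
        for (String word : words) {
            tasks.get(chooseTask(word, numTasks)).merge(word, 1, Integer::sum);
        }
        return tasks;
    }

    public static void main(String[] args) {
        String[] stream = {"apple", "banana", "apple", "water", "apple"};
        List<Map<String, Integer>> tasks = countPerTask(stream, 3);
        for (int i = 0; i < tasks.size(); i++) {
            System.out.println("task " + i + ": " + tasks.get(i));
        }
    }
}
```

Whatever task "apple" lands on, all three of its tuples land there, so that task's map holds the full count.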

```xml
<dependency>
    <groupId>org.apache.storm</groupId>
    <artifactId>storm-jdbc</artifactId>
    <version>${storm.version}</version>
</dependency>

<dependency>
    <groupId>mysql</groupId>
    <artifactId>mysql-connector-java</artifactId>
    <version>5.1.31</version>
</dependency>
```

package com.ldz.bigdata.intergration.jdbc;

import com.google.common.collect.Maps;

import org.apache.storm.Config;

import org.apache.storm.LocalCluster;

import org.apache.storm.jdbc.bolt.JdbcInsertBolt;

import org.apache.storm.jdbc.common.ConnectionProvider;

import org.apache.storm.jdbc.common.HikariCPConnectionProvider;

import org.apache.storm.jdbc.mapper.JdbcMapper;

import org.apache.storm.jdbc.mapper.SimpleJdbcMapper;

import org.apache.storm.redis.bolt.RedisStoreBolt;

import org.apache.storm.redis.common.config.JedisPoolConfig;

import org.apache.storm.redis.common.mapper.RedisDataTypeDescription;

import org.apache.storm.redis.common.mapper.RedisStoreMapper;

import org.apache.storm.spout.SpoutOutputCollector;

import org.apache.storm.task.OutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.TopologyBuilder;

import org.apache.storm.topology.base.BaseRichBolt;

import org.apache.storm.topology.base.BaseRichSpout;

import org.apache.storm.tuple.Fields;

import org.apache.storm.tuple.ITuple;

import org.apache.storm.tuple.Tuple;

import org.apache.storm.tuple.Values;

import org.apache.storm.utils.Utils;

import java.util.HashMap;

import java.util.Map;

import java.util.Random;

/**

* @Author: Dazhou Li

* @Description:使用Storm完成词频统计功能

* @CreateDate: 2019/2/8 0008 12:07

*/

public class LocalWordCountJDBCStormTopology {

public static class DataSourceSpout extends BaseRichSpout {

private SpoutOutputCollector collector;

public static final String[] words = new String[]{"apple", "oranage", "banana", "pineapple", "water"};

@Override

public void open(Map map, TopologyContext topologyContext, SpoutOutputCollector collector) {

this.collector = collector;

}

@Override

public void nextTuple() {

Random random = new Random();

String word = words[random.nextInt(words.length)];

this.collector.emit(new Values(word));

System.out.println("emit: " + word);

Utils.sleep(1000);

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("line"));

}

}

/**

* 对数据进行分割

*/

public static class SplitBolt extends BaseRichBolt {

private OutputCollector collector;

@Override

public void prepare(Map map, TopologyContext topologyContext, OutputCollector collector) {

this.collector = collector;

}

@Override

public void execute(Tuple input) {

String word = input.getStringByField("line");

this.collector.emit(new Values(word));

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("word"));

}

}

/**

* 词频汇总Bolt

*/

public static class CountBolt extends BaseRichBolt {

private OutputCollector collector;

@Override

public void prepare(Map map, TopologyContext topologyContext, OutputCollector collector) {

this.collector = collector;

}

Map<String, Integer> map = new HashMap<>();

/**

* 业务逻辑:

* 1)获取每个单词

* 2)对所有单词进行汇总

* 3)输出

*/

@Override

public void execute(Tuple tuple) {

//1)获取每个单词

String word = tuple.getStringByField("word");

Integer count = map.get(word);

if (count == null) {

count = 0;

}

//2)对所有单词进行汇总

count++;

map.put(word, count);

//3)输出

this.collector.emit(new Values(word, map.get(word)));

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("word", "word_count"));

}

}

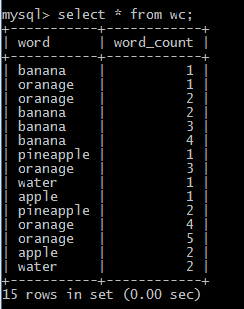

public static void main(String[] args) {

// 通过TopologyBuilder根据Spout和Bolt构建Topology

TopologyBuilder builder = new TopologyBuilder();

builder.setSpout("DataSourceSpout", new DataSourceSpout());

builder.setBolt("SplitBolt", new SplitBolt()).shuffleGrouping("DataSourceSpout");

builder.setBolt("CountBolt", new CountBolt()).shuffleGrouping("SplitBolt");

Map hikariConfigMap = Maps.newHashMap();

hikariConfigMap.put("dataSourceClassName", "com.mysql.jdbc.jdbc2.optional.MysqlDataSource");

hikariConfigMap.put("dataSource.url", "jdbc:mysql://localhost/mysql"); //数据库

hikariConfigMap.put("dataSource.user", "root");

hikariConfigMap.put("dataSource.password", "123456");

ConnectionProvider connectionProvider = new HikariCPConnectionProvider(hikariConfigMap);

String tableName = "wc";

JdbcMapper simpleJdbcMapper = new SimpleJdbcMapper(tableName, connectionProvider);

JdbcInsertBolt userPersistanceBolt = new JdbcInsertBolt(connectionProvider, simpleJdbcMapper)

.withTableName(tableName)

.withQueryTimeoutSecs(30);

builder.setBolt("JdbcInsertBolt", userPersistanceBolt).shuffleGrouping("CountBolt");

// 创建本地集群

LocalCluster cluster = new LocalCluster();

cluster.submitTopology("LocalWordCountJDBCStormTopology", new Config(), builder.createTopology());

}

}
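JdbcInsertBolt needs no handwritten SQL here. SimpleJdbcMapper reads the column list of table `wc` from the database metadata and fetches each tuple value by column name. That is why CountBolt in this version declares its second field as `word_count` instead of `count`.

The sketch below approximates the parameterized INSERT this produces and the field/column check, assuming table `wc` has exactly the columns `word` and `word_count`. `InsertSqlSketch` and its methods are hypothetical. The exact SQL text storm-jdbc generates, such as its keyword casing, may differ.

```java
import java.util.Arrays;
import java.util.List;
import java.util.StringJoiner;

// Hypothetical helpers approximating what JdbcInsertBolt + SimpleJdbcMapper do:
// one "?" placeholder per table column, values taken from same-named tuple fields.
class InsertSqlSketch {

    static String buildInsertSql(String table, List<String> columns) {
        StringJoiner cols = new StringJoiner(",");
        StringJoiner marks = new StringJoiner(",");
        for (String column : columns) {
            cols.add(column);
            marks.add("?");
        }
        return "INSERT INTO " + table + " (" + cols + ") VALUES (" + marks + ")";
    }

    // Every table column must be a declared output field of the upstream bolt
    static boolean fieldsCoverColumns(List<String> tupleFields, List<String> columns) {
        return tupleFields.containsAll(columns);
    }

    public static void main(String[] args) {
        List<String> columns = Arrays.asList("word", "word_count"); // assumed columns of table wc
        System.out.println(buildInsertSql("wc", columns));
        // prints INSERT INTO wc (word,word_count) VALUES (?,?)
        System.out.println(fieldsCoverColumns(Arrays.asList("word", "count"), columns)); // prints false
    }
}
```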

```xml
<dependency>
    <groupId>org.apache.storm</groupId>
    <artifactId>storm-hdfs</artifactId>
    <version>${storm.version}</version>
    <!-- exclude these Hadoop artifacts to avoid jar conflicts -->
    <exclusions>
        <exclusion>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
        </exclusion>
        <exclusion>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-auth</artifactId>
        </exclusion>
    </exclusions>
</dependency>
```

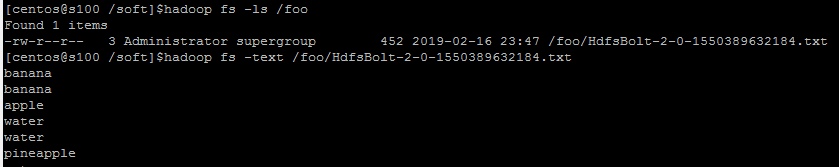

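```shell
# Change the permissions of the directory you created, so the topology can write into it
$ hadoop fs -chmod 777 /foo
# View the contents of a file written by HdfsBolt
$ hadoop fs -text /foo/HdfsBolt-2-0-1550389632184.txt
```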

```java
package com.ldz.bigdata.intergration.hdfs;

import org.apache.storm.Config;
import org.apache.storm.LocalCluster;
import org.apache.storm.hdfs.bolt.HdfsBolt;
import org.apache.storm.hdfs.bolt.format.DefaultFileNameFormat;
import org.apache.storm.hdfs.bolt.format.DelimitedRecordFormat;
import org.apache.storm.hdfs.bolt.format.FileNameFormat;
import org.apache.storm.hdfs.bolt.format.RecordFormat;
import org.apache.storm.hdfs.bolt.rotation.FileRotationPolicy;
import org.apache.storm.hdfs.bolt.rotation.FileSizeRotationPolicy;
import org.apache.storm.hdfs.bolt.sync.CountSyncPolicy;
import org.apache.storm.hdfs.bolt.sync.SyncPolicy;
import org.apache.storm.spout.SpoutOutputCollector;
import org.apache.storm.task.OutputCollector;
import org.apache.storm.task.TopologyContext;
import org.apache.storm.topology.OutputFieldsDeclarer;
import org.apache.storm.topology.TopologyBuilder;
import org.apache.storm.topology.base.BaseRichBolt;
import org.apache.storm.topology.base.BaseRichSpout;
import org.apache.storm.tuple.Fields;
import org.apache.storm.tuple.Tuple;
import org.apache.storm.tuple.Values;
import org.apache.storm.utils.Utils;

import java.util.Map;
import java.util.Random;

/**
 * @Author: Dazhou Li
 * @Description: Word frequency count with Storm
 * @CreateDate: 2019/2/8 12:07
 */
public class LocalWordCountHdfsStormTopology {

    public static class DataSourceSpout extends BaseRichSpout {

        public static final String[] words = new String[]{"apple", "orange", "banana", "pineapple", "water"};

        private SpoutOutputCollector collector;
        private Random random;

        @Override
        public void open(Map conf, TopologyContext topologyContext, SpoutOutputCollector collector) {
            this.collector = collector;
            this.random = new Random();
        }

        /**
         * Emits one randomly chosen word per second as a "line".
         */
        @Override
        public void nextTuple() {
            String word = words[random.nextInt(words.length)];
            this.collector.emit(new Values(word));
            System.out.println("emit: " + word);
            Utils.sleep(1000);
        }

        @Override
        public void declareOutputFields(OutputFieldsDeclarer declarer) {
            declarer.declare(new Fields("line"));
        }
    }

    /**
     * Splits the data. Each "line" here is already a single word, so it is forwarded as-is.
     */
    public static class SplitBolt extends BaseRichBolt {

        private OutputCollector collector;

        @Override
        public void prepare(Map conf, TopologyContext topologyContext, OutputCollector collector) {
            this.collector = collector;
        }

        @Override
        public void execute(Tuple input) {
            String word = input.getStringByField("line");
            this.collector.emit(new Values(word));
        }

        @Override
        public void declareOutputFields(OutputFieldsDeclarer declarer) {
            declarer.declare(new Fields("word"));
        }
    }

    public static void main(String[] args) {
        // Build the topology from the spout and bolts via TopologyBuilder
        TopologyBuilder builder = new TopologyBuilder();
        builder.setSpout("DataSourceSpout", new DataSourceSpout());
        builder.setBolt("SplitBolt", new SplitBolt()).shuffleGrouping("DataSourceSpout");

        // use "|" instead of "," for field delimiter
        RecordFormat format = new DelimitedRecordFormat()
                .withFieldDelimiter("|");

        // sync the filesystem after every 100 tuples
        SyncPolicy syncPolicy = new CountSyncPolicy(100);

        // rotate files when they reach 5MB
        FileRotationPolicy rotationPolicy = new FileSizeRotationPolicy(5.0f, FileSizeRotationPolicy.Units.MB);

        // write the files into the HDFS directory /foo/
        FileNameFormat fileNameFormat = new DefaultFileNameFormat()
                .withPath("/foo/");

        HdfsBolt bolt = new HdfsBolt()
                .withFsUrl("hdfs://172.20.21.100:8020")
                .withFileNameFormat(fileNameFormat)
                .withRecordFormat(format)
                .withRotationPolicy(rotationPolicy)
                .withSyncPolicy(syncPolicy);
        builder.setBolt("HdfsBolt", bolt).shuffleGrouping("SplitBolt");

        // Create a local cluster
        LocalCluster cluster = new LocalCluster();
        cluster.submitTopology("LocalWordCountHdfsStormTopology", new Config(), builder.createTopology());
    }
}
```
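The HdfsBolt settings determine the output layout. As far as I understand storm-hdfs, three rules apply:

- DefaultFileNameFormat names files `<prefix><componentId>-<taskId>-<rotation>-<timestamp><extension>`, with an empty prefix and `.txt` extension by default. This matches the file `/foo/HdfsBolt-2-0-1550389632184.txt` read with `hadoop fs -text`.
- DelimitedRecordFormat joins a tuple's fields with the field delimiter and ends each record with a newline.
- FileSizeRotationPolicy starts a new file once 5 × 2^20 bytes have been written.

The sketch mirrors those rules in plain Java. `HdfsOutputSketch` is hypothetical, not a storm-hdfs class. The task id 2 and rotation 0 are taken from that file name.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical stdlib-only mirror of the storm-hdfs naming, record and rotation rules
// as configured above; not the real DefaultFileNameFormat/DelimitedRecordFormat classes.
class HdfsOutputSketch {

    // DefaultFileNameFormat: path + prefix + componentId-taskId-rotation-timestamp + extension
    static String fileName(String path, String prefix, String componentId,
                           int taskId, long rotation, long timestamp, String extension) {
        return path + prefix + componentId + "-" + taskId + "-" + rotation + "-" + timestamp + extension;
    }

    // DelimitedRecordFormat with withFieldDelimiter("|"): one record per line
    static String record(List<?> values, String fieldDelimiter) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < values.size(); i++) {
            if (i > 0) {
                sb.append(fieldDelimiter);
            }
            sb.append(values.get(i));
        }
        return sb.append('\n').toString();
    }

    // FileSizeRotationPolicy(5.0f, Units.MB): rotate once 5 * 2^20 bytes are written
    static boolean shouldRotate(long bytesWritten) {
        long maxBytes = (long) (5.0f * 1024 * 1024);
        return bytesWritten >= maxBytes;
    }

    public static void main(String[] args) {
        System.out.println(fileName("/foo/", "", "HdfsBolt", 2, 0, 1550389632184L, ".txt"));
        // prints /foo/HdfsBolt-2-0-1550389632184.txt
        System.out.print(record(Arrays.asList("apple"), "|"));  // prints apple
        System.out.println(shouldRotate(5L * 1024 * 1024));     // prints true
    }
}
```

Because SplitBolt emits a single `word` field, each line in the HDFS file is just one word; the `|` delimiter only shows up for tuples with several fields.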

```xml
<dependency>
    <groupId>com.google.guava</groupId>
    <artifactId>guava</artifactId>
    <version>16.0.1</version>
</dependency>

<dependency>
    <groupId>org.apache.storm</groupId>
    <artifactId>storm-hbase</artifactId>
    <version>${storm.version}</version>
    <exclusions>
        <exclusion>
            <groupId>com.google.guava</groupId>
            <artifactId>guava</artifactId>
        </exclusion>
    </exclusions>
</dependency>

<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-client</artifactId>
    <version>${hadoop.version}</version>
    <exclusions>
        <exclusion>
            <groupId>com.google.guava</groupId>
            <artifactId>guava</artifactId>
        </exclusion>
    </exclusions>
</dependency>
```

```java
package com.ldz.bigdata.intergration.hbase;

import org.apache.storm.Config;
import org.apache.storm.LocalCluster;
import org.apache.storm.hbase.bolt.HBaseBolt;
import org.apache.storm.hbase.bolt.mapper.SimpleHBaseMapper;
import org.apache.storm.spout.SpoutOutputCollector;
import org.apache.storm.task.OutputCollector;
import org.apache.storm.task.TopologyContext;
import org.apache.storm.topology.OutputFieldsDeclarer;
import org.apache.storm.topology.TopologyBuilder;
import org.apache.storm.topology.base.BaseRichBolt;
import org.apache.storm.topology.base.BaseRichSpout;
import org.apache.storm.tuple.Fields;
import org.apache.storm.tuple.Tuple;
import org.apache.storm.tuple.Values;
import org.apache.storm.utils.Utils;

import java.util.HashMap;
import java.util.Map;
import java.util.Random;

/**
 * @Author: Dazhou Li
 * @Description: Word frequency count with Storm
 * @CreateDate: 2019/2/8 12:07
 */
public class LocalWordCountHbaseStormTopology {

    public static class DataSourceSpout extends BaseRichSpout {

        public static final String[] words = new String[]{"apple", "orange", "banana", "pineapple", "water"};

        private SpoutOutputCollector collector;
        private Random random;

        @Override
        public void open(Map conf, TopologyContext topologyContext, SpoutOutputCollector collector) {
            this.collector = collector;
            this.random = new Random();
        }

        /**
         * Emits one randomly chosen word per second as a "line".
         */
        @Override
        public void nextTuple() {
            String word = words[random.nextInt(words.length)];
            this.collector.emit(new Values(word));
            System.out.println("emit: " + word);
            Utils.sleep(1000);
        }

        @Override
        public void declareOutputFields(OutputFieldsDeclarer declarer) {
            declarer.declare(new Fields("line"));
        }
    }

    /**
     * Splits the data. Each "line" here is already a single word, so it is forwarded as-is.
     */
    public static class SplitBolt extends BaseRichBolt {

        private OutputCollector collector;

        @Override
        public void prepare(Map conf, TopologyContext topologyContext, OutputCollector collector) {
            this.collector = collector;
        }

        @Override
        public void execute(Tuple input) {
            String word = input.getStringByField("line");
            this.collector.emit(new Values(word));
        }

        @Override
        public void declareOutputFields(OutputFieldsDeclarer declarer) {
            declarer.declare(new Fields("word"));
        }
    }

    /**
     * Word-frequency aggregation bolt.
     */
    public static class CountBolt extends BaseRichBolt {

        private OutputCollector collector;
        // Running totals kept by this task: word -> count
        private final Map<String, Integer> map = new HashMap<>();

        @Override
        public void prepare(Map conf, TopologyContext topologyContext, OutputCollector collector) {
            this.collector = collector;
        }

        /**
         * Business logic:
         * 1) get each word
         * 2) aggregate the counts of all words
         * 3) emit (word, running count)
         */
        @Override
        public void execute(Tuple tuple) {
            // 1) get each word
            String word = tuple.getStringByField("word");

            // 2) aggregate the counts of all words
            Integer count = map.get(word);
            if (count == null) {
                count = 0;
            }
            count++;
            map.put(word, count);

            // 3) emit
            this.collector.emit(new Values(word, count));
        }

        @Override
        public void declareOutputFields(OutputFieldsDeclarer declarer) {
            declarer.declare(new Fields("word", "count"));
        }
    }

    public static void main(String[] args) {
        // HBase connection settings, handed to HBaseBolt through the "hbase.conf" key
        Config config = new Config();
        Map<String, Object> hbaseConf = new HashMap<>();
        hbaseConf.put("hbase.rootdir", "hdfs://172.20.21.100:8020/hbase");
        hbaseConf.put("hbase.zookeeper.quorum", "172.20.21.100:2181");
        config.put("hbase.conf", hbaseConf);

        // Build the topology from the spout and bolts via TopologyBuilder
        TopologyBuilder builder = new TopologyBuilder();
        builder.setSpout("DataSourceSpout", new DataSourceSpout());
        builder.setBolt("SplitBolt", new SplitBolt()).shuffleGrouping("DataSourceSpout");
        // Group by "word" so that each word is always counted by the same CountBolt task
        builder.setBolt("CountBolt", new CountBolt()).fieldsGrouping("SplitBolt", new Fields("word"));

        // CountBolt emits running totals, so "count" is stored as a regular column (overwritten each time);
        // withCounterFields(new Fields("count")) would instead ADD every running total to the cell
        SimpleHBaseMapper mapper = new SimpleHBaseMapper()
                .withRowKeyField("word")                       // row key; must match a field emitted upstream
                .withColumnFields(new Fields("word", "count")) // columns
                .withColumnFamily("cf");                       // column family

        // "WordCount" is the table name; the table and its column family cf must already exist
        HBaseBolt hBaseBolt = new HBaseBolt("WordCount", mapper)
                .withConfigKey("hbase.conf");
        builder.setBolt("HBaseBolt", hBaseBolt).shuffleGrouping("CountBolt");

        // Create a local cluster
        LocalCluster cluster = new LocalCluster();
        cluster.submitTopology("LocalWordCountHbaseStormTopology",
                config, builder.createTopology());
    }
}
```
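CountBolt emits a running total for every word, which matters for how HBaseBolt writes the `count` field:

- If `count` is mapped with `withCounterFields`, SimpleHBaseMapper marks it as a counter. HBaseBolt then sends an HBase Increment, which adds the tuple's value to the cell, so the stored number becomes the sum of all running totals rather than the word's frequency.
- If `count` is mapped with `withColumnFields`, HBaseBolt sends a Put, which simply replaces the cell with the latest total.

The stdlib-only sketch contrasts the two semantics. `HBaseCounterSketch` is a hypothetical model, not storm-hbase or HBase client code.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical model of the two ways a "count" cell can be written:
// Increment (counter field) adds, Put (regular column) overwrites.
class HBaseCounterSketch {
    final Map<String, Long> counterCells = new HashMap<>();
    final Map<String, Long> putCells = new HashMap<>();

    void write(String rowKey, long count) {
        counterCells.merge(rowKey, count, Long::sum); // Increment semantics
        putCells.put(rowKey, count);                  // Put semantics
    }

    public static void main(String[] args) {
        HBaseCounterSketch table = new HBaseCounterSketch();
        // "apple" arrives three times, so CountBolt emits running totals 1, 2, 3
        for (long runningTotal = 1; runningTotal <= 3; runningTotal++) {
            table.write("apple", runningTotal);
        }
        System.out.println(table.counterCells.get("apple")); // prints 6
        System.out.println(table.putCells.get("apple"));     // prints 3
    }
}
```

A counter column is the natural choice only when each tuple carries an increment (for example, 1 per occurrence) instead of a running total.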

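This article shows how to integrate Apache Storm with several data stores: Redis, a relational database (MySQL) via JDBC, HDFS, and HBase. Each example walks through the configuration and usage of the corresponding Storm component for persisting data.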
