dump生产环境hiveserver堆快照,并使用mat工具打开,进行内存泄露检查

可以看到AuditBatchQueue持有的对象引用高达1G多

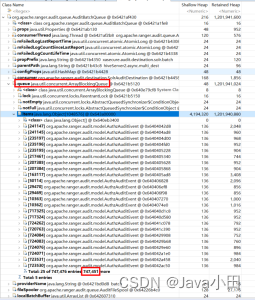

进一步点进去看:

可以看到AuditBatchQueue中有个queue对象,queue里面的元素数量高达74万。

queue里面的对象元素为AuthzAuditEvent,使用jmap histo:live ${pid} | grep AuthzAuditEvent命令,可以看到AuthzAuditEvent的实例个数和这里的数量基本是一样的,并且这个数量在不断升高

目前已经升高到80万+:

AuditBatchQueue这个类的代码并不在hiveserver中,而是在ranger中,ranger的代码是以plugin的形式被hiveserver加载执行的。

这个类的主要功能是用于记录审计日志,使用了生产者-消费者的模式。生产者将事件写到内存队列中,消费者消费数据并将审计日志写入hdfs、solr等。

通过查看线程栈,也可以看到消费者线程一直卡在Thread.sleep()这里

查看AuditBatchQueue.runLogAudit()代码,分析为啥线程会一直卡在sleep这里

分析得知主要是fileSpooler.isPending()这个方法,一直返回true导致。

进一步跟进AuditFileSpool这个类的代码,结合代码和日志平台,找到一些比较关键的日志:

org.apache.solr.common.SolrException: java.util.concurrent.TimeoutException: Could not connect to ZooKeeper hdfs-prod02.yingzi.com:2181,hdfs-prod03.yingzi.com:2181,hdfs-prod04.yingzi.com:2181/infra-solr within 10000 ms

at org.apache.solr.common.cloud.SolrZkClient.<init>(SolrZkClient.java:183) ~[?:?]

at org.apache.solr.common.cloud.SolrZkClient.<init>(SolrZkClient.java:117) ~[?:?]

at org.apache.solr.common.cloud.SolrZkClient.<init>(SolrZkClient.java:107) ~[?:?]

at org.apache.solr.common.cloud.ZkStateReader.<init>(ZkStateReader.java:226) ~[?:?]

at org.apache.solr.client.solrj.impl.ZkClientClusterStateProvider.connect(ZkClientClusterStateProvider.java:131) ~[?:?]

at org.apache.solr.client.solrj.impl.CloudSolrClient.connect(CloudSolrClient.java:631) ~[?:?]

at org.apache.solr.client.solrj.impl.CloudSolrClient.requestWithRetryOnStaleState(CloudSolrClient.java:1084) ~[?:?]

at org.apache.solr.client.solrj.impl.CloudSolrClient.request(CloudSolrClient.java:1073) ~[?:?]

at org.apache.solr.client.solrj.SolrRequest.process(SolrRequest.java:160) ~[?:?]

at org.apache.solr.client.solrj.SolrClient.add(SolrClient.java:106) ~[?:?]

at org.apache.solr.client.solrj.SolrClient.add(SolrClient.java:71) ~[?:?]

at org.apache.solr.client.solrj.SolrClient.add(SolrClient.java:85) ~[?:?]

at org.apache.ranger.audit.utils.SolrAppUtil$1.run(SolrAppUtil.java:35) ~[?:?]

at org.apache.ranger.audit.utils.SolrAppUtil$1.run(SolrAppUtil.java:32) ~[?:?]

at java.security.AccessController.doPrivileged(Native Method) ~[?:1.8.0_181]

at javax.security.auth.Subject.doAs(Subject.java:422) ~[?:1.8.0_181]

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1730) ~[hadoop-common-3.1.1.3.0.1.0-187.jar:?]

at org.apache.ranger.audit.provider.MiscUtil.executePrivilegedAction(MiscUtil.java:523) ~[?:?]

at org.apache.ranger.audit.utils.SolrAppUtil.addDocsToSolr(SolrAppUtil.java:32) ~[?:?]

at org.apache.ranger.audit.destination.SolrAuditDestination.log(SolrAuditDestination.java:225) ~[?:?]

at org.apache.ranger.audit.provider.BaseAuditHandler.logJSON(BaseAuditHandler.java:172) ~[?:?]

at org.apache.ranger.audit.queue.AuditFileSpool.sendEvent(AuditFileSpool.java:879) ~[?:?]

at org.apache.ranger.audit.queue.AuditFileSpool.runLogAudit(AuditFileSpool.java:827) ~[?:?]

at org.apache.ranger.audit.queue.AuditFileSpool.run(AuditFileSpool.java:757) ~[?:?]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_181]

Caused by: java.util.concurrent.TimeoutException: Could not connect to ZooKeeper hdfs-prod02.yingzi.com:2181,hdfs-prod03.yingzi.com:2181,hdfs-prod04.yingzi.com:2181/infra-solr within 10000 ms

at org.apache.solr.common.cloud.ConnectionManager.waitForConnected(ConnectionManager.java:233) ~[?:?]

at org.apache.solr.common.cloud.SolrZkClient.<init>(SolrZkClient.java:175) ~[?:?]

... 24 more

2022-06-06T10:34:14,080 WARN [hiveServer2.async.multi_dest.batch_hiveServer2.async.multi_dest.batch.solr_destWriter]: provider.BaseAuditHandler (:()) - Log failure count: 1 in past 01:10.518 minutes; 52160 during process lifetime

2022-06-06T10:34:14,080 ERROR [hiveServer2.async.multi_dest.batch_hiveServer2.async.multi_dest.batch.solr_destWriter]: queue.AuditFileSpool(:()) - Error sending logs to consumer. provider=hiveServer2.async.multi_dest.batch, consumer=hiveServer2.async.multi_dest.batch.solr

2022-06-06T10:34:14,081 INFO [hiveServer2.async.multi_dest.batch_hiveServer2.async.multi_dest.batch.solr_destWriter]: queue.AuditFileSpool(:()) - Destination is down. sleeping for 30000 milli seconds. indexQueue=31, queueName=hiveServer2.async.multi_dest.batch, consumer=hiveServer2.async.multi_dest.batch.solr

可以很明显看出,是由于连接到zk超时导致审计日志写solor失败。

而之所以连接zk超时,是因为我们之前对zk做了迁移,但是hive审计日志这里的zk配置忘记改了。

把xasecure.audit.destination.solr.zookeepers这个配置改为正确的zk地址即可解决问题。

不过hive的审计日志其实并没有什么用,干脆直接禁用审计日志更省事

直接把xasecure.audit.is.enabled这里的勾选去掉即可,这个相当于是一个总开关。

保险一点把Audit to HDFS和Audit to SOLR的选项也一并去掉:

博客内容讲述了在Hive Server中出现内存泄露问题,通过MAT工具分析发现AuditBatchQueue持有大量AuthzAuditEvent对象,进一步调查发现是由于Solr审计日志目的地无法连接到ZooKeeper,原因是ZooKeeper配置错误。解决办法是更新审计配置中的ZooKeeper地址,或者直接禁用审计日志功能。

博客内容讲述了在Hive Server中出现内存泄露问题,通过MAT工具分析发现AuditBatchQueue持有大量AuthzAuditEvent对象,进一步调查发现是由于Solr审计日志目的地无法连接到ZooKeeper,原因是ZooKeeper配置错误。解决办法是更新审计配置中的ZooKeeper地址,或者直接禁用审计日志功能。

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?