|

package com.jym.hadoop.mr.rjoin;

import java.io.IOException;

import java.lang.reflect.InvocationTargetException;

import java.util.ArrayList;

import org.apache.commons.beanutils.BeanUtils;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.FileSplit;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class RJoin {

static class RJoinMapper extends Mapper<LongWritable, Text, Text, InfoBean>

{

InfoBean bean = new InfoBean();

Text k = new Text();

@Override

protected void map(LongWritable key, Text value,Context context) throws IOException, InterruptedException

{

String line = value.toString();

FileSplit inputSplit = (FileSplit) context.getInputSplit();

String name = inputSplit.getPath().getName();

String pid = "";

// Use the input file name to tell which kind of record this is, then split the line accordingly

if (name.startsWith("order"))

{

String[] fields = line.split(",");

pid = fields[2];

//id date pid amount

bean.set(Integer.parseInt(fields[0]),fields[1], fields[2], Integer.parseInt(fields[3]), "", 0, 0, "0");

}else

{

String[] fields = line.split(",");

pid = fields[0];

bean.set(0,"",pid,0,fields[1],Integer.parseInt(fields[2]),Float.parseFloat(fields[3]),"1");

}

k.set(pid);

context.write(k, bean);

}

}

static class RJoinReducer extends Reducer<Text, InfoBean, InfoBean, NullWritable>

{

InfoBean pdBean = new InfoBean();

ArrayList<InfoBean> orderBeans = new ArrayList<>();

@Override

protected void reduce(Text pid, Iterable<InfoBean> beans,Context context) throws IOException, InterruptedException

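{

// The same reducer instance handles every key, so discard the orders collected for the previous pid

orderBeans.clear();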

for(InfoBean bean : beans)

{

if ("1".equals(bean.getFlag()))

{

try

{

BeanUtils.copyProperties(pdBean,bean);

} catch (IllegalAccessException e) {

e.printStackTrace();

} catch (InvocationTargetException e) {

e.printStackTrace();

}

}else

{

InfoBean odBean = new InfoBean();

try

{

BeanUtils.copyProperties(odBean,bean);

orderBeans.add(odBean);

} catch (IllegalAccessException e)

{

e.printStackTrace();

} catch (InvocationTargetException e)

{

e.printStackTrace();

}

}

}

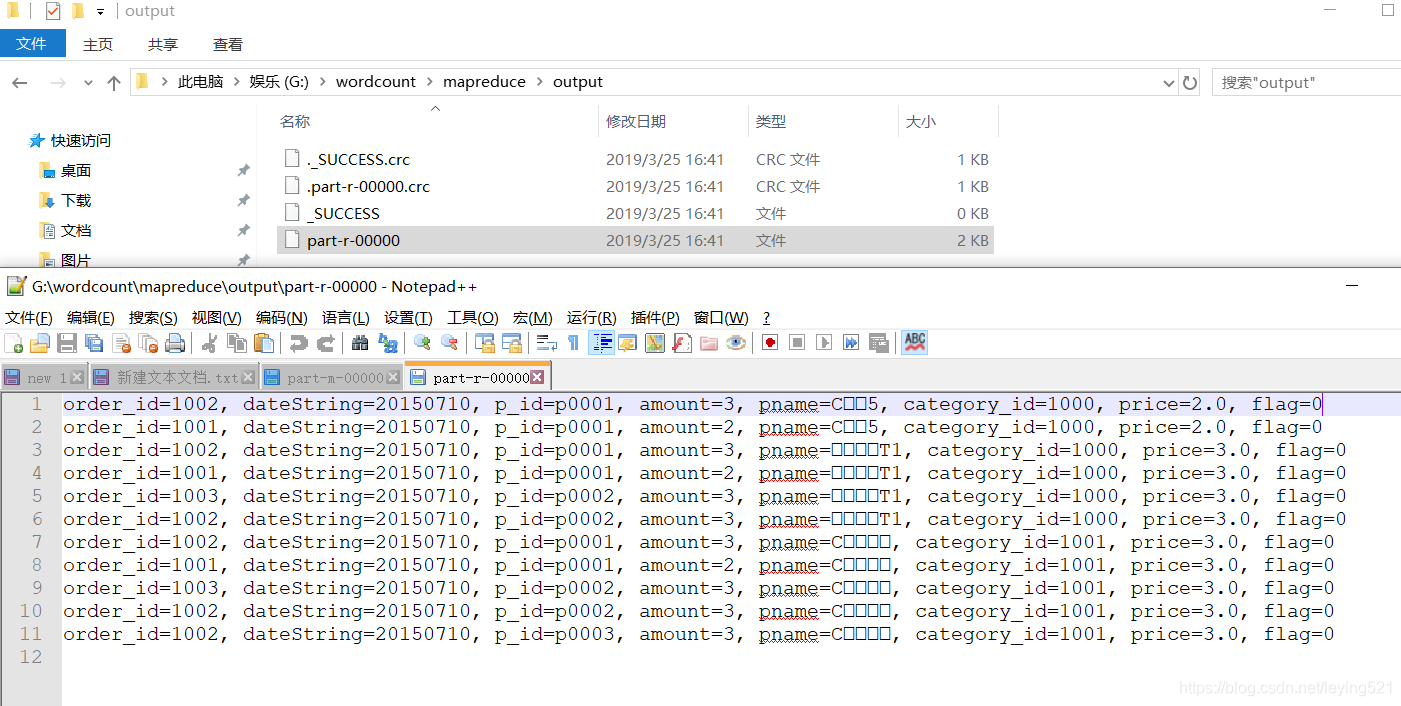

// Join the two kinds of records to produce the final result

for(InfoBean bean:orderBeans)

{

bean.setPname(pdBean.getPname());

bean.setCategory_id(pdBean.getCategory_id());

bean.setPrice(pdBean.getPrice());

context.write(bean, NullWritable.get());

}

}

}

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException

{

Configuration conf=new Configuration();

Job job = Job.getInstance(conf);

// Specify the local path of this program's jar (located through the driver class)

job.setJarByClass(RJoin.class);

// Specify the mapper/reducer classes this job uses

job.setMapperClass(RJoinMapper.class);

job.setReducerClass(RJoinReducer.class);

// Specify the key/value types of the mapper output

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(InfoBean.class);

// Specify the key/value types of the final output

job.setOutputKeyClass(InfoBean.class);

job.setOutputValueClass(NullWritable.class);

// Specify the directory that holds the job's raw input files

//FileInputFormat.setInputPaths(job, new Path(args[0])); // alternative: take the input path from a command-line argument

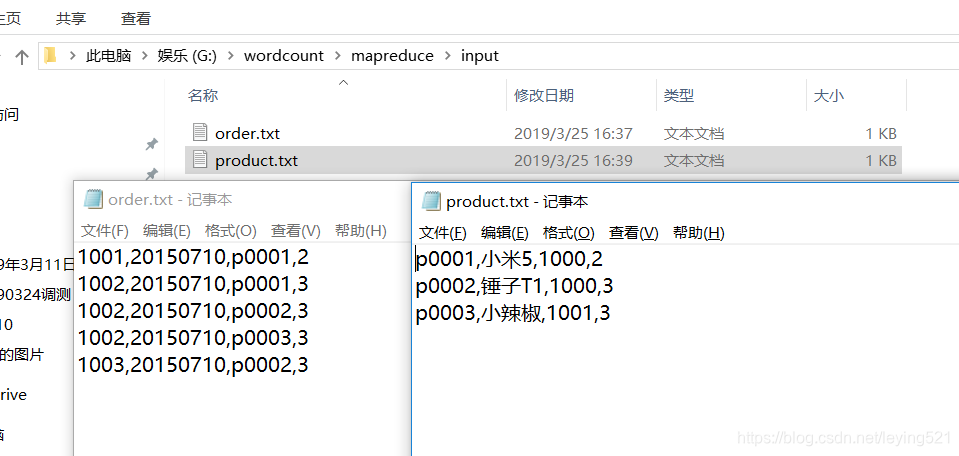

FileInputFormat.setInputPaths(job, new Path("G:/wordcount/mapreduce/input")); // hard-coded input path

// Specify the directory for the job's output

//FileOutputFormat.setOutputPath(job, new Path(args[1])); // alternative: take the output path from a command-line argument

FileOutputFormat.setOutputPath(job, new Path("G:/wordcount/mapreduce/output")); // hard-coded output path

// Submit the job's configuration, together with the jar that contains its Java classes, to YARN for execution

boolean res = job.waitForCompletion(true);

System.exit(res?0:1);

}

}

|
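
The listing above depends on an `InfoBean` class that is not shown. Below is a minimal sketch of what it might look like, inferred only from the calls the job makes (`set(...)` with eight arguments, `getFlag()`, `getPname()`, `setCategory_id(...)` and so on). The property names `order_id`, `dateString`, `p_id` and `amount` are assumptions, as is the `InfoBeanDemo` helper and its sample values. To keep the sketch runnable without Hadoop on the classpath it does not declare `implements org.apache.hadoop.io.Writable`; in the real job the class would need to, and it would normally be a public class in its own file so that `BeanUtils.copyProperties` can reach the accessors.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

// Hypothetical InfoBean: one record type that holds either an order row or a product row.
class InfoBean {
    private int order_id;       // assumed name
    private String dateString;  // assumed name
    private String p_id;        // assumed name
    private int amount;         // assumed name
    private String pname;
    private int category_id;
    private float price;
    private String flag;        // "0" = order record, "1" = product record

    public InfoBean() {}        // no-arg constructor so the bean can be created reflectively

    public void set(int order_id, String dateString, String p_id, int amount,
                    String pname, int category_id, float price, String flag) {
        this.order_id = order_id; this.dateString = dateString; this.p_id = p_id;
        this.amount = amount; this.pname = pname; this.category_id = category_id;
        this.price = price; this.flag = flag;
    }

    // Same shape as Writable.write: fields are written in a fixed order.
    public void write(DataOutput out) throws IOException {
        out.writeInt(order_id); out.writeUTF(dateString); out.writeUTF(p_id);
        out.writeInt(amount); out.writeUTF(pname); out.writeInt(category_id);
        out.writeFloat(price); out.writeUTF(flag);
    }

    // Same shape as Writable.readFields: fields are read back in exactly the write order.
    public void readFields(DataInput in) throws IOException {
        order_id = in.readInt(); dateString = in.readUTF(); p_id = in.readUTF();
        amount = in.readInt(); pname = in.readUTF(); category_id = in.readInt();
        price = in.readFloat(); flag = in.readUTF();
    }

    public int getOrder_id() { return order_id; }
    public void setOrder_id(int v) { order_id = v; }
    public String getDateString() { return dateString; }
    public void setDateString(String v) { dateString = v; }
    public String getP_id() { return p_id; }
    public void setP_id(String v) { p_id = v; }
    public int getAmount() { return amount; }
    public void setAmount(int v) { amount = v; }
    public String getPname() { return pname; }
    public void setPname(String v) { pname = v; }
    public int getCategory_id() { return category_id; }
    public void setCategory_id(int v) { category_id = v; }
    public float getPrice() { return price; }
    public void setPrice(float v) { price = v; }
    public String getFlag() { return flag; }
    public void setFlag(String v) { flag = v; }

    // The reducer writes the bean as the output key, so toString defines the output line.
    @Override
    public String toString() {
        return order_id + "," + dateString + "," + p_id + "," + amount + ","
                + pname + "," + category_id + "," + price;
    }
}

class InfoBeanDemo {
    // Serializes a bean to bytes and reads it back, much as happens between map and reduce.
    static InfoBean roundTrip(InfoBean src) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            src.write(new DataOutputStream(bytes));
            InfoBean copy = new InfoBean();
            copy.readFields(new DataInputStream(new ByteArrayInputStream(bytes.toByteArray())));
            return copy;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        InfoBean product = new InfoBean();
        product.set(0, "", "P0001", 0, "phoneA", 1000, 2000f, "1"); // made-up sample values
        System.out.println(roundTrip(product));
    }
}
```

The mapper always calls `set(...)` with empty strings rather than `null` for unused fields, which matters here because `writeUTF` does not accept `null`.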

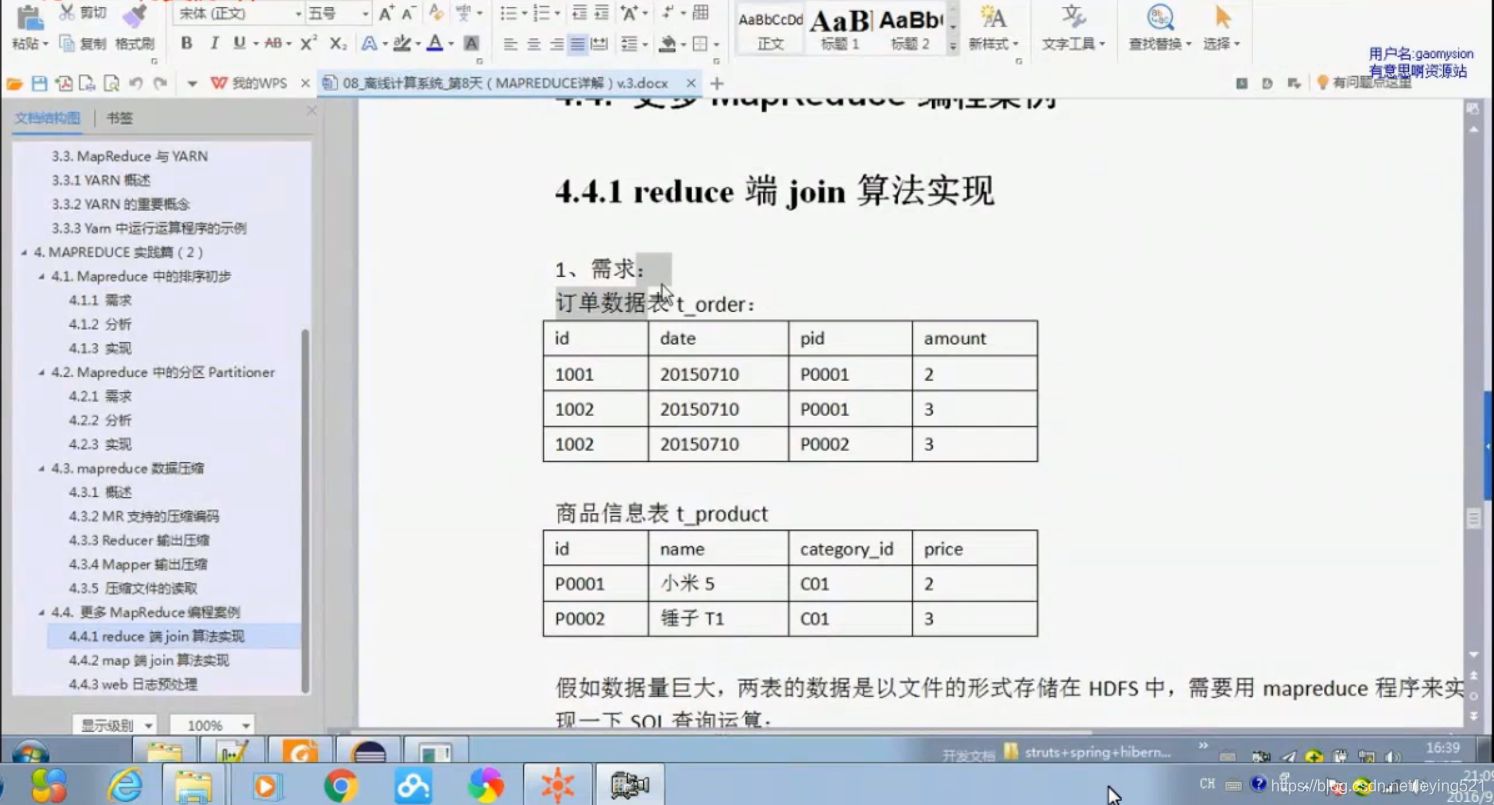

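This article presents a reduce-side join (RJoin) implemented with Hadoop MapReduce, which merges the records of two tables. A custom InfoBean class wraps the data of both the order table and the product table. The map phase groups the records by product ID (pid), and the reduce phase combines them.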
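
To make the data flow concrete, here is a plain-Java sketch of the same idea with no Hadoop involved: records from both tables are tagged and grouped by pid (standing in for map and shuffle), then each group is joined (standing in for reduce). The class name `RJoinSketch`, the `Tagged` helper and the sample rows are all made up for illustration. The field layouts (`id,date,pid,amount` for orders and `pid,pname,category_id,price` for products) follow the mapper above. One deliberate difference is that this sketch drops orders whose pid has no product row, a choice made here rather than something the original job does.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class RJoinSketch {
    // A tagged record: flag "0" = order row, "1" = product row (same convention as the job).
    static final class Tagged {
        final String flag;
        final String[] fields;
        Tagged(String flag, String[] fields) { this.flag = flag; this.fields = fields; }
    }

    static List<String> join(List<String> orderLines, List<String> productLines) {
        // "Map" + "shuffle": tag every record and group it under its pid.
        Map<String, List<Tagged>> groups = new TreeMap<>();
        for (String line : orderLines) {
            String[] f = line.split(",");   // id,date,pid,amount
            groups.computeIfAbsent(f[2], k -> new ArrayList<>()).add(new Tagged("0", f));
        }
        for (String line : productLines) {
            String[] f = line.split(",");   // pid,pname,category_id,price
            groups.computeIfAbsent(f[0], k -> new ArrayList<>()).add(new Tagged("1", f));
        }

        // "Reduce": for each pid, attach the product fields to every order of that pid.
        List<String> out = new ArrayList<>();
        for (List<Tagged> group : groups.values()) {
            String[] product = null;                    // per-key state, reset for each pid
            List<String[]> orders = new ArrayList<>();  // per-key state, reset for each pid
            for (Tagged t : group) {
                if ("1".equals(t.flag)) {
                    product = t.fields;
                } else {
                    orders.add(t.fields);
                }
            }
            if (product == null) {
                continue; // inner-join behavior chosen for this sketch
            }
            for (String[] o : orders) {
                out.add(String.join(",", o[0], o[1], o[2], o[3],
                        product[1], product[2], product[3]));
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // Made-up sample rows, for illustration only.
        List<String> orders = Arrays.asList(
                "1001,20150710,P0001,2",
                "1002,20150710,P0002,3",
                "1003,20150711,P0001,1");
        List<String> products = Arrays.asList(
                "P0001,phoneA,1000,2000",
                "P0002,phoneB,1000,3000");
        for (String row : join(orders, products)) {
            System.out.println(row);
        }
    }
}
```

Keeping `product` and `orders` as local variables inside the per-key loop is what guarantees that nothing leaks from one pid to the next. In a real reducer the equivalent is to reset any instance fields at the start of each `reduce` call.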
