引言:批处理的现代价值

在大数据时代,批处理(Batch Processing) 作为数据处理的核心范式,正经历着复兴。尽管实时流处理备受关注,但批处理在数据仓库构建、历史数据分析、报表生成等场景中仍不可替代。Python凭借其丰富的数据处理库和简洁的语法,已成为批处理任务的首选工具之一。

本文将深入探讨Python批处理的核心技术、架构设计、性能优化和实战应用,通过6000+字的系统解析和原创代码示例,帮助您构建高效可靠的大规模数据处理系统。

第一部分:批处理基础与架构

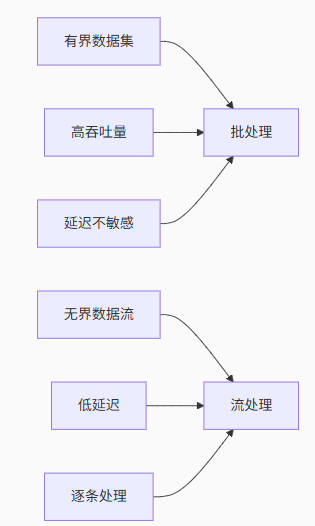

1.1 批处理的核心特征

批处理区别于流处理的三大特性:

典型应用场景:

-

夜间ETL作业

-

月度财务报表生成

-

用户行为历史分析

-

机器学习模型训练

1.2 批处理系统架构

现代Python批处理系统的典型架构:

数据源 --> 提取 --> 处理引擎 --> 存储

↑ ↓

调度器 <-- 监控系统

1.2.1 分层架构实现

class BatchProcessingSystem:

"""Python批处理系统基础架构"""

def __init__(self):

self.extractors = []

self.transformers = []

self.loaders = []

self.scheduler = None

self.monitor = BatchMonitor()

def add_extractor(self, extractor):

"""添加数据提取器"""

self.extractors.append(extractor)

def add_transformer(self, transformer):

"""添加数据转换器"""

self.transformers.append(transformer)

def add_loader(self, loader):

"""添加数据加载器"""

self.loaders.append(loader)

def run_pipeline(self):

"""执行批处理管道"""

try:

# 阶段1: 数据提取

raw_data = []

for extractor in self.extractors:

self.monitor.log(f"开始提取: {extractor.name}")

data = extractor.extract()

raw_data.append(data)

self.monitor.log(f"提取完成: {len(data)} 条记录")

# 阶段2: 数据处理

processed_data = raw_data

for transformer in self.transformers:

self.monitor.log(f"开始转换: {transformer.name}")

processed_data = transformer.transform(processed_data)

self.monitor.log(f"转换完成")

# 阶段3: 数据加载

for loader, data in zip(self.loaders, processed_data):

self.monitor.log(f"开始加载: {loader.name}")

loader.load(data)

self.monitor.log(f"加载完成: {len(data)} 条记录")

self.monitor.report_success()

except Exception as e:

self.monitor.report_failure(str(e))

raise

第二部分:核心处理技术

2.1 内存批处理:Pandas

Pandas是中小规模数据批处理的首选工具:

import pandas as pd

import numpy as np

class PandasBatchProcessor:

"""基于Pandas的批处理器"""

def __init__(self, chunk_size=10000):

self.chunk_size = chunk_size

def process_large_csv(self, input_path, output_path):

"""处理大型CSV文件"""

# 分块读取

chunks = pd.read_csv(input_path, chunksize=self.chunk_size)

processed_chunks = []

for i, chunk in enumerate(chunks):

print(f"处理分块 #{i+1}")

# 执行转换操作

chunk = self._clean_data(chunk)

chunk = self._transform_data(chunk)

chunk = self._calculate_metrics(chunk)

processed_chunks.append(chunk)

# 合并结果

result = pd.concat(processed_chunks)

# 保存结果

result.to_parquet(output_path, index=False)

print(f"处理完成,总记录数: {len(result)}")

def _clean_data(self, df):

"""数据清洗"""

# 删除空值

df = df.dropna(subset=['important_column'])

# 处理异常值

df = df[(df['value'] >= 0) & (df['value'] <= 1000)]

return df

def _transform_data(self, df):

"""数据转换"""

# 类型转换

df['date'] = pd.to_datetime(df['timestamp'], unit='s')

# 特征工程

df['value_category'] = pd.cut(df['value'], bins=[0, 50, 100, 200, np.inf])

return df

def _calculate_metrics(self, df):

"""指标计算"""

# 分组聚合

agg_df = df.groupby('category').agg({

'value': ['sum', 'mean', 'count']

})

agg_df.columns = ['total', 'average', 'count']

return agg_df.reset_index()

2.2 分布式批处理:Dask

Dask用于处理超出内存限制的大型数据集:

import dask.dataframe as dd

from dask.distributed import Client

class DaskBatchProcessor:

"""基于Dask的分布式批处理器"""

def __init__(self, cluster_address=None):

# 连接Dask集群

self.client = Client(cluster_address) if cluster_address else Client()

print(f"连接到Dask集群: {self.client.dashboard_link}")

def process_distributed_data(self, input_paths, output_path):

"""处理分布式数据"""

# 创建Dask DataFrame

ddf = dd.read_parquet(input_paths)

# 数据转换

ddf = ddf[ddf['value'] > 0] # 过滤

ddf['value_normalized'] = ddf['value'] / ddf.groupby('group')['value'].transform('max')

# 复杂计算

ddf['category'] = dd.map_partitions(

self._categorize,

ddf,

meta=('category', 'str')

)

# 聚合操作

result = ddf.groupby('category').agg({

'value': ['sum', 'mean', 'count'],

'value_normalized': 'mean'

}).compute()

# 保存结果

result.to_parquet(output_path)

# 关闭客户端

self.client.close()

def _categorize(self, partition):

"""自定义分类函数(在每个分区执行)"""

# 复杂分类逻辑

conditions = [

(partition['value'] < 10),

(partition['value'] < 50) & (partition['value'] >= 10),

(partition['value'] >= 50)

]

choices = ['low', 'medium', 'high']

partition['category'] = np.select(conditions, choices, default='unknown')

return partition

2.3 云原生批处理:PySpark

PySpark适合在Hadoop集群或云平台上处理超大规模数据:

from pyspark.sql import SparkSession

from pyspark.sql import functions as F

class SparkBatchProcessor:

"""基于PySpark的批处理器"""

def __init__(self):

self.spark = SparkSession.builder \

.appName("LargeScaleBatchProcessing") \

.config("spark.sql.shuffle.partitions", "200") \

.getOrCreate()

def process_huge_dataset(self, input_path, output_path):

"""处理超大规模数据集"""

# 读取数据

df = self.spark.read.parquet(input_path)

print(f"初始记录数: {df.count()}")

# 数据清洗

df = df.filter(F.col("value").isNotNull()) \

.filter(F.col("value") > 0)

# 数据转换

df = df.withColumn("date", F.to_date(F.from_unixtime("timestamp"))) \

.withColumn("value_category", self._categorize_udf(F.col("value")))

# 聚合操作

result = df.groupBy("date", "value_category") \

.agg(

F.sum("value").alias("total_value"),

F.avg("value").alias("avg_value"),

F.count("*").alias("record_count")

)

# 保存结果

result.write.parquet(output_path, mode="overwrite")

print(f"处理完成,结果保存至: {output_path}")

# 停止Spark会话

self.spark.stop()

@staticmethod

def _categorize_udf():

"""定义分类UDF"""

def categorize(value):

if value < 10: return "low"

elif value < 50: return "medium"

else: return "high"

return F.udf(categorize, StringType())

第三部分:性能优化策略

3.1 并行处理技术

from concurrent.futures import ThreadPoolExecutor, as_completed

import multiprocessing

class ParallelProcessor:

"""并行批处理执行器"""

def __init__(self, max_workers=None):

self.max_workers = max_workers or multiprocessing.cpu_count() * 2

def process_in_parallel(self, task_list, task_function):

"""并行处理任务列表"""

results = []

with ThreadPoolExecutor(max_workers=self.max_workers) as executor:

# 提交所有任务

future_to_task = {

executor.submit(task_function, task): task

for task in task_list

}

# 收集结果

for future in as_completed(future_to_task):

task = future_to_task[future]

try:

result = future.result()

results.append(result)

except Exception as e:

print(f"任务 {task} 失败: {str(e)}")

return results

# 使用示例

def process_file(file_path):

"""单个文件处理函数"""

print(f"处理文件: {file_path}")

# 实际处理逻辑

return f"{file_path}_processed"

if __name__ == "__main__":

files = [f"data/file_{i}.csv" for i in range(100)]

processor = ParallelProcessor(max_workers=8)

results = processor.process_in_parallel(files, process_file)

print(f"处理完成 {len(results)} 个文件")

3.2 内存优化技巧

class MemoryOptimizedProcessor:

"""内存优化的批处理器"""

def __init__(self, max_memory_mb=1024):

self.max_memory = max_memory_mb * 1024 * 1024 # 转换为字节

def process_large_data(self, data_generator):

"""处理大型数据集(使用生成器)"""

batch = []

current_size = 0

for item in data_generator:

item_size = self._estimate_size(item)

# 检查批次内存

if current_size + item_size > self.max_memory:

# 处理当前批次

self._process_batch(batch)

# 重置批次

batch = []

current_size = 0

batch.append(item)

current_size += item_size

# 处理剩余批次

if batch:

self._process_batch(batch)

def _process_batch(self, batch):

"""处理单个批次"""

print(f"处理批次: {len(batch)} 条记录")

# 实际处理逻辑

# ...

def _estimate_size(self, item):

"""估算对象内存占用(简化版)"""

return len(str(item)) * 8 # 近似估算

3.3 磁盘辅助处理

import sqlite3

import os

import pickle

class DiskBackedProcessor:

"""磁盘辅助的批处理器"""

def __init__(self, temp_dir="temp"):

self.temp_dir = temp_dir

os.makedirs(temp_dir, exist_ok=True)

def process_very_large_data(self, data_generator):

"""处理超大数据集(使用磁盘辅助)"""

# 步骤1: 分块写入磁盘

chunk_files = []

chunk_size = 100000 # 每块记录数

current_chunk = []

for i, item in enumerate(data_generator):

current_chunk.append(item)

if len(current_chunk) >= chunk_size:

chunk_file = self._save_chunk(current_chunk, i // chunk_size)

chunk_files.append(chunk_file)

current_chunk = []

if current_chunk:

chunk_file = self._save_chunk(current_chunk, len(chunk_files))

chunk_files.append(chunk_file)

# 步骤2: 并行处理分块

results = []

with multiprocessing.Pool() as pool:

results = pool.map(self._process_chunk_file, chunk_files)

# 步骤3: 合并结果

final_result = self._combine_results(results)

# 步骤4: 清理临时文件

for file in chunk_files:

os.remove(file)

return final_result

def _save_chunk(self, chunk, index):

"""保存分块到磁盘"""

file_path = os.path.join(self.temp_dir, f"chunk_{index}.pkl")

with open(file_path, 'wb') as f:

pickle.dump(chunk, f)

return file_path

def _process_chunk_file(self, file_path):

"""处理单个分块文件"""

with open(file_path, 'rb') as f:

chunk = pickle.load(f)

# 实际处理逻辑

return len(chunk) # 示例返回结果

def _combine_results(self, results):

"""合并处理结果"""

return sum(results)

第四部分:错误处理与容错机制

4.1 健壮的批处理框架

class RobustBatchProcessor:

"""带错误处理和重试的批处理器"""

def __init__(self, max_retries=3, retry_delay=10):

self.max_retries = max_retries

self.retry_delay = retry_delay

def safe_process(self, processing_func, data):

"""安全执行处理函数"""

retries = 0

while retries <= self.max_retries:

try:

result = processing_func(data)

return result

except TransientError as e: # 可重试错误

print(f"可重试错误: {str(e)}. 重试 {retries}/{self.max_retries}")

retries += 1

time.sleep(self.retry_delay * retries)

except CriticalError as e: # 不可恢复错误

print(f"不可恢复错误: {str(e)}")

raise

except Exception as e: # 其他未知错误

print(f"未知错误: {str(e)}")

raise

raise MaxRetriesExceeded(f"超过最大重试次数 {self.max_retries}")

# 自定义异常

class TransientError(Exception):

"""临时性错误(可重试)"""

pass

class CriticalError(Exception):

"""关键性错误(不可恢复)"""

pass

class MaxRetriesExceeded(Exception):

"""超过最大重试次数"""

pass

4.2 状态检查点机制

import json

from abc import ABC, abstractmethod

class StatefulBatchProcessor(ABC):

"""支持检查点的状态化批处理器"""

def __init__(self, state_file="batch_state.json"):

self.state_file = state_file

self.state = self._load_state()

def process(self, data_source):

"""执行带状态检查点的处理"""

# 恢复上次状态

current_position = self.state.get("last_position", 0)

try:

for i, item in enumerate(data_source):

if i < current_position:

continue # 跳过已处理项

# 处理当前项

self.process_item(item)

# 更新状态

self.state["last_position"] = i + 1

# 定期保存状态

if (i + 1) % 1000 == 0:

self._save_state()

# 处理完成

self.state["completed"] = True

self._save_state()

except Exception as e:

print(f"处理在位置 {self.state['last_position']} 失败: {str(e)}")

self._save_state()

raise

@abstractmethod

def process_item(self, item):

"""处理单个数据项(由子类实现)"""

pass

def _load_state(self):

"""加载处理状态"""

try:

if os.path.exists(self.state_file):

with open(self.state_file, 'r') as f:

return json.load(f)

except:

pass

return {"last_position": 0, "completed": False}

def _save_state(self):

"""保存处理状态"""

with open(self.state_file, 'w') as f:

json.dump(self.state, f)

第五部分:批处理系统实战案例

5.1 电商数据分析系统

class EcommerceAnalyzer:

"""电商批处理分析系统"""

def __init__(self, data_path, output_path):

self.data_path = data_path

self.output_path = output_path

self.report_date = datetime.now().strftime("%Y-%m-%d")

def generate_daily_report(self):

"""生成每日分析报告"""

# 1. 加载数据

orders = self._load_orders()

users = self._load_users()

products = self._load_products()

# 2. 数据清洗

orders = self._clean_orders(orders)

# 3. 数据合并

merged = orders.merge(users, on='user_id', how='left') \

.merge(products, on='product_id', how='left')

# 4. 关键指标计算

report = {

"report_date": self.report_date,

"total_orders": len(orders),

"total_revenue": orders['amount'].sum(),

"top_products": self._top_products(merged),

"user_metrics": self._user_metrics(merged),

"category_analysis": self._category_analysis(merged)

}

# 5. 保存报告

self._save_report(report)

def _load_orders(self):

"""加载订单数据"""

return pd.read_parquet(f"{self.data_path}/orders")

def _load_users(self):

"""加载用户数据"""

return pd.read_parquet(f"{self.data_path}/users")

def _load_products(self):

"""加载产品数据"""

return pd.read_parquet(f"{self.data_path}/products")

def _clean_orders(self, orders):

"""清洗订单数据"""

# 过滤无效订单

orders = orders[orders['status'] == 'completed']

# 转换日期

orders['order_date'] = pd.to_datetime(orders['order_timestamp'], unit='s')

return orders

def _top_products(self, data):

"""计算热销商品"""

top = data.groupby('product_name')['amount'] \

.sum() \

.sort_values(ascending=False) \

.head(10)

return top.to_dict()

def _user_metrics(self, data):

"""用户指标分析"""

# 新用户数

new_users = data[data['user_type'] == 'new']['user_id'].nunique()

# 平均订单价值

avg_order_value = data.groupby('order_id')['amount'].sum().mean()

return {

"new_users": new_users,

"avg_order_value": round(avg_order_value, 2)

}

def _save_report(self, report):

"""保存分析报告"""

report_path = f"{self.output_path}/daily_report_{self.report_date}.json"

with open(report_path, 'w') as f:

json.dump(report, f, indent=2)

print(f"报告已保存至: {report_path}")

5.2 机器学习特征工程流水线

from sklearn.pipeline import Pipeline

from sklearn.preprocessing import StandardScaler, OneHotEncoder

from sklearn.compose import ColumnTransformer

from sklearn.impute import SimpleImputer

class FeatureEngineeringPipeline:

"""批处理特征工程流水线"""

def __init__(self, config):

self.config = config

self.pipeline = self._build_pipeline()

def _build_pipeline(self):

"""构建特征工程流水线"""

# 数值特征处理

numeric_transformer = Pipeline(steps=[

('imputer', SimpleImputer(strategy='median')),

('scaler', StandardScaler())

])

# 分类特征处理

categorical_transformer = Pipeline(steps=[

('imputer', SimpleImputer(strategy='constant', fill_value='missing')),

('onehot', OneHotEncoder(handle_unknown='ignore'))

])

# 组合处理

preprocessor = ColumnTransformer(

transformers=[

('num', numeric_transformer, self.config['numeric_features']),

('cat', categorical_transformer, self.config['categorical_features'])

])

return preprocessor

def process_batch(self, data):

"""处理数据批次"""

return self.pipeline.fit_transform(data)

def save_pipeline(self, file_path):

"""保存训练好的流水线"""

joblib.dump(self.pipeline, file_path)

print(f"流水线已保存至: {file_path}")

def load_pipeline(self, file_path):

"""加载预训练的流水线"""

self.pipeline = joblib.load(file_path)

return self

def transform_batch(self, data):

"""使用预训练流水线转换数据"""

return self.pipeline.transform(data)

# 使用示例

if __name__ == "__main__":

config = {

'numeric_features': ['age', 'income', 'credit_score'],

'categorical_features': ['gender', 'education', 'occupation']

}

# 加载数据

data = pd.read_csv("user_data.csv")

# 创建并运行特征工程

fe_pipeline = FeatureEngineeringPipeline(config)

processed_data = fe_pipeline.process_batch(data)

# 保存处理后的数据和流水线

pd.DataFrame(processed_data).to_parquet("processed_data.parquet")

fe_pipeline.save_pipeline("feature_pipeline.joblib")

第六部分:调度与监控系统

6.1 基于APScheduler的调度系统

from apscheduler.schedulers.background import BackgroundScheduler

from apscheduler.triggers.cron import CronTrigger

class BatchScheduler:

"""批处理作业调度器"""

def __init__(self):

self.scheduler = BackgroundScheduler()

self.jobs = {}

def add_daily_job(self, job_id, func, hour=3, minute=0):

"""添加每日任务"""

trigger = CronTrigger(hour=hour, minute=minute)

job = self.scheduler.add_job(func, trigger, id=job_id)

self.jobs[job_id] = job

print(f"已安排每日任务 {job_id} 在 {hour}:{minute} 执行")

def add_interval_job(self, job_id, func, hours=12):

"""添加间隔任务"""

job = self.scheduler.add_job(func, 'interval', hours=hours, id=job_id)

self.jobs[job_id] = job

print(f"已安排间隔任务 {job_id} 每 {hours} 小时执行")

def start(self):

"""启动调度器"""

self.scheduler.start()

print("调度器已启动")

def shutdown(self):

"""关闭调度器"""

self.scheduler.shutdown()

print("调度器已关闭")

# 使用示例

if __name__ == "__main__":

def generate_reports():

print("开始生成报告...")

# 实际报告生成逻辑

print("报告生成完成")

scheduler = BatchScheduler()

scheduler.add_daily_job("daily_report", generate_reports, hour=2, minute=30)

scheduler.start()

try:

# 保持主线程运行

while True:

time.sleep(1)

except KeyboardInterrupt:

scheduler.shutdown()

6.2 批处理监控系统

import logging

from logging.handlers import RotatingFileHandler

import socket

class BatchMonitor:

"""批处理作业监控系统"""

def __init__(self, log_file="batch_monitor.log"):

self.logger = self._setup_logger(log_file)

self.hostname = socket.gethostname()

self.start_time = datetime.now()

self.metrics = {

"processed_items": 0,

"errors": 0,

"last_error": None

}

def _setup_logger(self, log_file):

"""配置日志记录器"""

logger = logging.getLogger("BatchMonitor")

logger.setLevel(logging.INFO)

# 文件处理器

file_handler = RotatingFileHandler(

log_file, maxBytes=10*1024*1024, backupCount=5

)

file_formatter = logging.Formatter(

'%(asctime)s - %(levelname)s - %(message)s'

)

file_handler.setFormatter(file_formatter)

# 控制台处理器

console_handler = logging.StreamHandler()

console_handler.setFormatter(file_formatter)

logger.addHandler(file_handler)

logger.addHandler(console_handler)

return logger

def log(self, message, level="info"):

"""记录消息"""

log_method = getattr(self.logger, level.lower(), self.logger.info)

log_method(message)

def increment_counter(self, counter_name, amount=1):

"""增加计数器"""

if counter_name in self.metrics:

self.metrics[counter_name] += amount

def record_error(self, error_message):

"""记录错误"""

self.metrics["errors"] += 1

self.metrics["last_error"] = error_message

self.log(f"错误: {error_message}", "error")

def report_start(self, job_name):

"""报告作业开始"""

self.start_time = datetime.now()

self.log(f"作业 {job_name} 在 {self.hostname} 开始执行")

def report_success(self, job_name):

"""报告作业成功"""

duration = datetime.now() - self.start_time

self.log(

f"作业 {job_name} 成功完成! "

f"处理时长: {duration.total_seconds():.2f}秒, "

f"处理项: {self.metrics['processed_items']}"

)

self._reset_counters()

def report_failure(self, job_name, error_message):

"""报告作业失败"""

duration = datetime.now() - self.start_time

self.record_error(error_message)

self.log(

f"作业 {job_name} 失败! "

f"运行时长: {duration.total_seconds():.2f}秒, "

f"错误: {error_message}",

"error"

)

def _reset_counters(self):

"""重置计数器"""

self.metrics = {k: 0 for k in self.metrics}

self.metrics["last_error"] = None

第七部分:最佳实践与未来趋势

7.1 Python批处理最佳实践

-

数据分块处理:始终将大数据集分解为可管理的块

-

资源监控:实时跟踪内存、CPU和I/O使用情况

-

幂等设计:确保作业可安全重试而不会产生副作用

-

增量处理:使用状态检查点处理新增数据

-

测试策略:

-

单元测试:针对每个处理函数

-

集成测试:完整管道测试

-

负载测试:模拟生产数据量

-

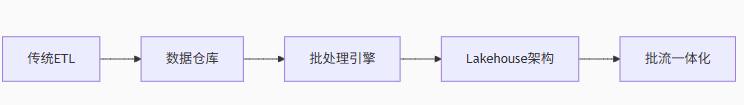

7.2 批处理架构演进

7.3 云原生批处理技术栈

| 组件类型 | AWS生态系统 | Azure生态系统 | GCP生态系统 |

|---|---|---|---|

| 存储 | S3, EFS | Blob Storage, ADLS | Cloud Storage |

| 计算引擎 | AWS Batch, EMR | Azure Batch, HDInsight | Dataproc, Dataflow |

| 编排调度 | Step Functions, MWAA | Data Factory | Cloud Composer |

| 监控 | CloudWatch | Monitor | Cloud Monitoring |

结语:批处理的未来之路

Python批处理技术正朝着更智能、更高效的方向发展:

-

AI增强处理:集成机器学习优化处理逻辑

-

自动优化:基于数据特征的运行时优化

-

无服务器批处理:按需使用的云原生架构

-

批流融合:统一批处理和流处理的编程模型

"批处理不是过时的技术,而是数据生态的基石。掌握批处理的艺术,就是掌握数据的过去、现在和未来。" —— 数据工程箴言

通过本文的系统探索,您已掌握Python批处理的核心技术和实践方法。无论您处理的是GB级还是PB级数据,这些知识和工具都能帮助您构建健壮、高效的批处理系统。在实际应用中,建议根据数据规模和处理需求灵活选择技术方案,并持续优化您的处理流水线。

771

771

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?