by Xiaoxiao Wen, Yijie Zhang, Zhenyu Gao, Weitao Luo

1 Introduction and Motivation

In this project, we study n-step bootstrapping in actor-critic methods [1]. More specifically, we investigate how different values of $n$ affect the performance of advantage actor-critic (A2C) with n-step bootstrapping, measured by metrics such as convergence speed and stability (variance).

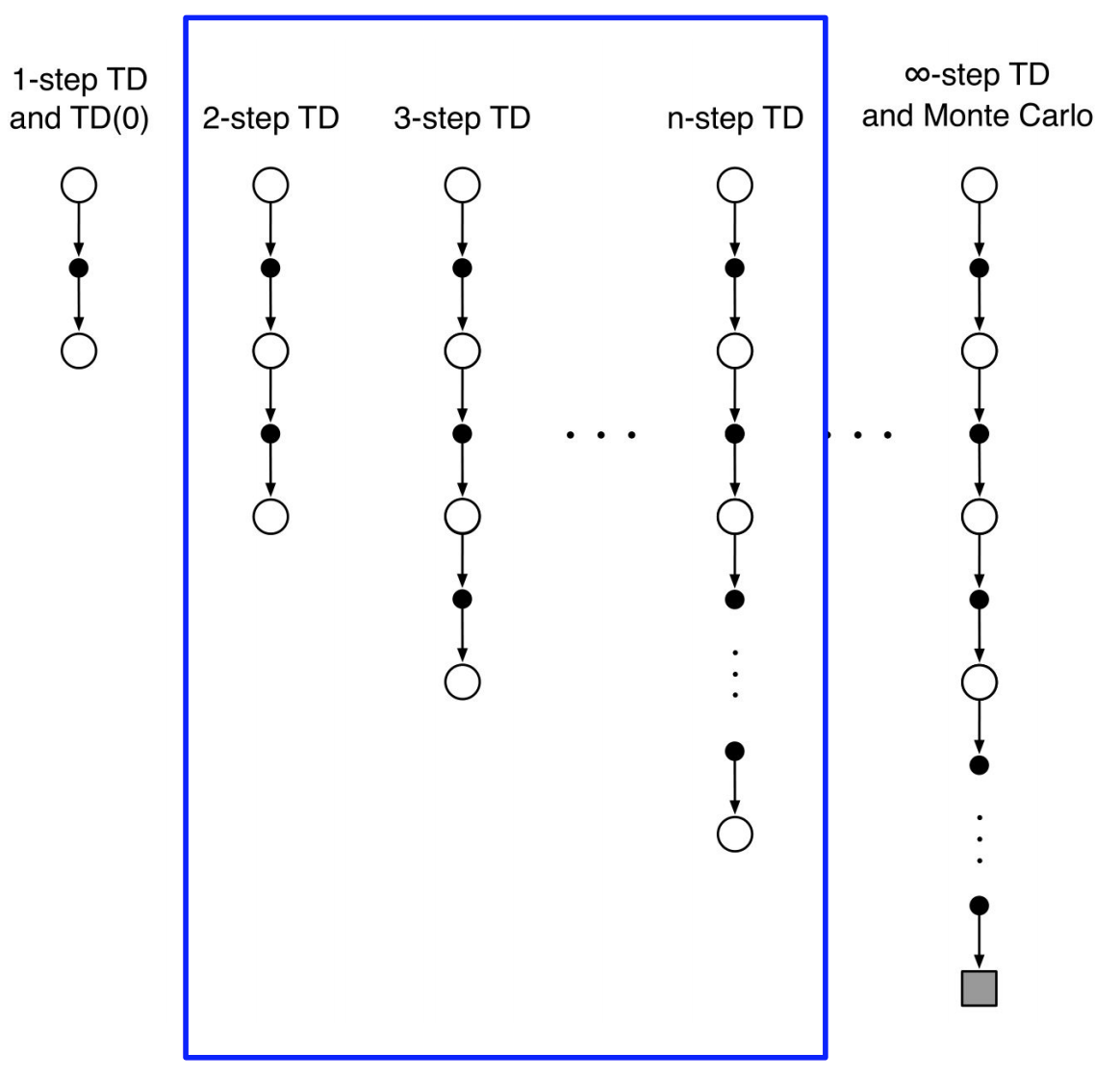

1.1 N-step bootstrapping

N-step bootstrapping [1], also called n-step TD, is an important technique in Reinforcement Learning that performs updates based on an intermediate number of rewards. In this view, n-step bootstrapping unifies and generalizes Monte Carlo (MC) methods and Temporal Difference (TD) methods. At one extreme, $N=1$ is equivalent to 1-step TD; at the other extreme, $N=\infty$, i.e., taking as many steps as possible until the end of the episode, gives MC. As a result, n-step bootstrapping also combines the advantages of Monte Carlo and 1-step TD. Compared to 1-step TD, n-step bootstrapping tends to converge faster because its targets contain more real rewards and it is freed from the “tyranny of the time step”. Compared to MC, the updates do not have to wait until the end of the episode, which makes learning more efficient, and the targets have lower variance. In general, with a suitable $N$ for the problem at hand, we can often achieve faster and more stable learning.
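To make the two extremes concrete, here is a minimal sketch of the n-step return in plain Python. The function name `n_step_return`, the toy rewards and the value estimates are assumptions chosen for illustration; they are not taken from the project code.

```python
def n_step_return(rewards, values, t, n, gamma=0.99):
    """n-step return starting at time t, in the sense of Sutton and Barto [1].

    rewards[k] is the reward received after acting in state k and
    values[k] is the current estimate V(s_k). The episode ends after
    len(rewards) steps, so the terminal state has value 0.
    """
    T = len(rewards)
    end = min(t + n, T)  # truncate at the end of the episode
    g = sum(gamma ** (k - t) * rewards[k] for k in range(t, end))
    if end < T:  # bootstrap only if the episode has not ended yet
        g += gamma ** (end - t) * values[end]
    return g


rewards = [1.0, 1.0, 1.0, 1.0]  # toy episode of length 4 (assumed)
values = [0.5, 0.5, 0.5, 0.5]   # arbitrary value estimates (assumed)

# n = 1 uses one real reward plus a bootstrapped value (1-step TD target).
td_target = n_step_return(rewards, values, t=0, n=1, gamma=0.9)
# n >= episode length uses only real rewards (Monte Carlo return).
mc_target = n_step_return(rewards, values, t=0, n=len(rewards), gamma=0.9)
```

Intermediate values of `n` interpolate between the two targets: the larger `n` is, the more real rewards enter the target and the less weight the bootstrapped value receives.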

1.2 Advantage Actor Critic (A2C)

Actor-critic algorithms are a powerful family of learning algorithms within the policy-based framework in Reinforcement Learning. They consist of an actor, the policy that makes decisions, and a critic, the value function that evaluates whether a decision is good. With assistance from the critic, the actor can usually achieve better performance, for example because the gradient variance is lower than in vanilla policy gradients. The GAE paper [2] by Schulman et al. unifies the framework for advantage estimation. Among the GAE variants, we picked A2C because of the strong performance of A3C, of which A2C is a simplified version with equivalent performance.

In the following sections, we first explain the method of n-step Bootstrapping for A2C, which also includes 1-step and Monte-Carlo as mentioned above, and then briefly introduce the neural network architecture. Subsequently, we introduce the conducted experiments with their corresponding settings and finally we discuss the results and draw some conclusions.

2 Methods

2.1 n-step Bootstrapping for A2C

n-step A2C is an online algorithm that uses roll-outs of size $n+1$ of the current policy to perform a policy improvement step. In order to train the policy-head, an approximation of the policy-gradient is computed for each state of the roll-out $\left(x_{t+i}, a_{t+i} \sim \pi\left(\cdot | x_{t+i} ; \theta_{\pi}\right), r_{t+i}\right)_{i=0}^{n}$, expressed as

$$
\nabla_{\theta_{\pi}} \log \left(\pi\left(a_{t+i} | x_{t+i} ; \theta_{\pi}\right)\right)\left[\hat{Q}_{i}-V\left(x_{t+i} ; \theta_{V}\right)\right]
$$

where $\hat{Q}_{i}$ is an estimate of the return,

$$
\hat{Q}_{i}=\sum_{j=i}^{n-1} \gamma^{j-i} r_{t+j}+\gamma^{n-i} V\left(x_{t+n} ; \theta_{V}\right)
$$

The gradients are then added to obtain the cumulative gradient of the roll-out as

$$
\sum_{i=0}^{n} \nabla_{\theta_{\pi}} \log \left(\pi\left(a_{t+i} | x_{t+i} ; \theta_{\pi}\right)\right)\left[\hat{Q}_{i}-V\left(x_{t+i} ; \theta_{V}\right)\right]
$$
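The return estimates for a whole roll-out do not have to be computed with a separate sum per state: the definition of $\hat{Q}_{i}$ implies $\hat{Q}_{n}=V(x_{t+n};\theta_{V})$ and $\hat{Q}_{i}=r_{t+i}+\gamma \hat{Q}_{i+1}$. The sketch below uses this backward recursion. The function name `rollout_targets` is hypothetical, and it assumes the caller passes 0 as the last value if the episode terminated at $x_{t+n}$, a case the formulas above do not spell out.

```python
def rollout_targets(rewards, values, gamma=0.99):
    """Return (q_hat, advantages) for a roll-out of n + 1 states.

    rewards: [r_t, ..., r_{t+n-1}]       (the n rewards used by Q_hat)
    values:  [V(x_t), ..., V(x_{t+n})]   (n + 1 value estimates)
    """
    n = len(rewards)
    if len(values) != n + 1:
        raise ValueError("expected one more value than rewards")
    q_hat = [0.0] * (n + 1)
    q_hat[n] = values[n]  # Q_hat_n is the bootstrapped value itself
    for i in reversed(range(n)):
        # Q_hat_i = r_{t+i} + gamma * Q_hat_{i+1}
        q_hat[i] = rewards[i] + gamma * q_hat[i + 1]
    # Advantage estimate used to weight the log-probability gradient.
    advantages = [q - v for q, v in zip(q_hat, values)]
    return q_hat, advantages


q_hat, advantages = rollout_targets(
    rewards=[1.0, 1.0, 1.0],
    values=[0.5, 0.5, 0.5, 2.0],
    gamma=0.9,
)
```

Note that the advantage of the last state is always zero under this definition, so that state contributes nothing to the cumulative policy gradient.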

A2C trains the value-head by minimising the error between the estimated return and the value as

$$
\sum_{i=0}^{n}\left(\hat{Q}_{i}-V\left(x_{t+i} ; \theta_{V}\right)\right)^{2}
$$

Therefore, the network parameters $\left(\theta_{\pi}, \theta_{V}\right)$ are updated after each roll-out as follows:

$$
\begin{aligned}
\theta_{\pi} &\leftarrow \theta_{\pi}+\alpha_{\pi} \sum_{i=0}^{n} \nabla_{\theta_{\pi}} \log \left(\pi\left(a_{t+i} | x_{t+i} ; \theta_{\pi}\right)\right)\left[\hat{Q}_{i}-V\left(x_{t+i} ; \theta_{V}\right)\right] \\
\theta_{V} &\leftarrow \theta_{V}-\alpha_{V} \sum_{i=0}^{n} \nabla_{\theta_{V}}\left[\hat{Q}_{i}-V\left(x_{t+i} ; \theta_{V}\right)\right]^{2}
\end{aligned}
$$

where $\left(\alpha_{\pi}, \alpha_{V}\right)$ are the learning rates of the policy-head and the value-head.
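As an illustration of the two update rules, the sketch below applies them to a linear softmax policy and a linear value function instead of the neural networks used in this project. The function name `a2c_update`, the linear parameterization and the default learning rates are assumptions made for the example. It also assumes that $\hat{Q}_{i}$ is treated as a fixed target when differentiating the value loss.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def a2c_update(theta_pi, theta_v, states, actions, q_hat,
               alpha_pi=0.01, alpha_v=0.01):
    """One roll-out update for a linear actor and critic (illustrative only).

    theta_pi[b] holds the weights of the logit of action b, and
    V(x) = theta_v . x. q_hat is treated as a constant target (assumption).
    """
    grad_pi = [[0.0] * len(row) for row in theta_pi]
    grad_v = [0.0] * len(theta_v)
    for x, a, q in zip(states, actions, q_hat):
        v = sum(w * xi for w, xi in zip(theta_v, x))
        adv = q - v  # Q_hat_i - V(x_{t+i})
        logits = [sum(w * xi for w, xi in zip(row, x)) for row in theta_pi]
        probs = softmax(logits)
        for b, row in enumerate(grad_pi):
            # d log pi(a|x) / d theta_pi[b] = (1[a == b] - pi(b|x)) * x
            coeff = ((1.0 if b == a else 0.0) - probs[b]) * adv
            for k, xi in enumerate(x):
                row[k] += coeff * xi
        for k, xi in enumerate(x):
            # d (q - v)^2 / d theta_v = -2 * (q - v) * x
            grad_v[k] += -2.0 * adv * xi
    # Gradient ascent for the policy, gradient descent for the value loss.
    new_pi = [[w + alpha_pi * g for w, g in zip(row, g_row)]
              for row, g_row in zip(theta_pi, grad_pi)]
    new_v = [w - alpha_v * g for w, g in zip(theta_v, grad_v)]
    return new_pi, new_v


new_pi, new_v = a2c_update(
    theta_pi=[[0.0, 0.0], [0.0, 0.0]],
    theta_v=[0.0, 0.0],
    states=[[1.0, 0.0]],
    actions=[1],
    q_hat=[1.0],
)
```

In this toy call the advantage is positive, so the update raises the probability of the chosen action and moves the value estimate of the visited state toward its target.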

2.2 Network Architecture

Similar to the [Actor-critic example from pytorch](https://github.com/pytorch/examples/blob/master/reinforcement_learning/actor_critic.py) and [Floodsung's repo](https://github.com/floodsung/a2c_cartpole_pytorch/blob/master/a2c_cartpole.py), we use simple and straightforward networks for both the actor function and the critic function.

2.2.1 Critic Network

The critic network consists of two fully connected layers which extract features from the input observations. To introduce non-linearity, ReLU activations [3] are applied to these two layers. The output is another fully connected layer which summarizes the features into a one-dimensional continuous value.

2.2.2 Actor Network

The actor network shares a similar architecture with the critic network, with the same fully connected layers and ReLU activations. However, the final output differs depending on whether the environment has a discrete or a continuous action space. For a discrete action space, the $\log$ Softmax is used to compute the distribution over the available discrete actions. For a continuous action space, we instead output a mean and a standard deviation of a univariate Gaussian distribution, from which we later sample continuous actions.
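The two kinds of policy output described above can be sketched without any deep learning library. The snippet below only illustrates how actions and their log-probabilities could be obtained from the final-layer outputs. The function names are hypothetical, and the real implementation presumably relies on PyTorch rather than on the standard library.

```python
import math
import random


def log_softmax(logits):
    """Numerically stable log-softmax over a list of action logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_z for z in logits]


def sample_discrete(logits, rng=random):
    """Sample an action index and return it with its log-probability."""
    log_probs = log_softmax(logits)
    probs = [math.exp(lp) for lp in log_probs]
    action = rng.choices(range(len(logits)), weights=probs, k=1)[0]
    return action, log_probs[action]


def sample_gaussian(mean, std, rng=random):
    """Sample a continuous action from N(mean, std^2) with its log-density."""
    action = rng.gauss(mean, std)
    log_prob = (-0.5 * ((action - mean) / std) ** 2
                - math.log(std) - 0.5 * math.log(2.0 * math.pi))
    return action, log_prob


rng = random.Random(0)  # fixed seed so the example is reproducible
discrete_action, discrete_logp = sample_discrete([0.1, 0.9], rng)
continuous_action, continuous_logp = sample_gaussian(0.0, 1.0, rng)
```

The returned log-probability is the quantity whose gradient appears in the policy update, which is why the discrete head works with log-Softmax outputs directly.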

3 Experiments

All our code can be seen on GitHub.

3.1 Environments

For this project, our main goal is to compare the performance of the n-step bootstrapping variation of A2C with its Monte-Carlo and 1-step variations in a straightforward manner. Therefore, we do not seek to deliver an ultimate agent that can solve some complicated fancy games or park your car.

The experiments are designed around classical control problems, e.g. InvertedPendulum, CartPole, Acrobot and MountainCar. For convenience of implementation, we use the off-the-shelf environments provided by [OpenAI's Gym library](https://gym.openai.com/). From the category *Classical Control*, we picked two environments: the discrete **CartPole-v0** and the continuous **Pendulum-v0**.

The **CartPole-v0** environment contains a pole attached by an un-actuated joint to a cart in a 2D plane. The cart moves left or right along a frictionless track. The goal is to keep the pole balanced upright by indirectly influencing the velocity of the cart. The environment has 4 continuous observations: the *Cart Position*, *Cart Velocity*, *Pole Angle* and *Pole Velocity At Tip*, each within its corresponding range. The available actions are the two discrete actions *Push Left* and *Push Right*, which change the *Cart Velocity* by an unknown amount in the corresponding direction. In the starting state, all observations are initialized with a small uniform random value. An episode terminates when the pole angle or the cart position exceeds a certain threshold, or when the episode length exceeds 200 steps. The reward is 1 for every step taken in an episode, so the return indicates how long the agent keeps the pole stable. The environment is shown in Figure 2 (from “Using Bayesian Optimization for Reinforcement Learning”).

The **Pendulum-v0** environment contains a pendulum, in other words a link connected to an actuated joint in a 2D plane. The pendulum can spin without friction. The goal is to keep the pendulum upright by applying a continuous torque to the joint. The environment has 3 continuous observations: the $\cos$ and $\sin$ values of the *Pendulum Angle* and the *Angular Velocity* of the pendulum. The only available action is the continuous action *Apply Torque*. In the starting state, the pendulum is initialized at a random angle with a random angular velocity. An episode terminates when its length exceeds 200 steps. The reward is a function of the angle, the angular velocity and the torque, and ranges from about $-16$ to $0$. The goal can therefore be described as staying at zero angle with the least angular velocity and the least torque, so the return reflects the speed and quality of the stabilization by the agent. The environment is shown in Figure 3 (from “Solving Reinforcement Learning Classic Control Problems | OpenAIGym”).
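For reference, the reward range quoted above can be reproduced with a few lines of Python. The formula and the limits used here (maximum angular speed 8, maximum torque 2) follow our understanding of the Gym Pendulum source and should be read as assumptions, not as a statement about the exact Gym version used in the experiments.

```python
import math


def angle_normalize(theta):
    """Wrap an angle to the interval [-pi, pi)."""
    return ((theta + math.pi) % (2.0 * math.pi)) - math.pi


def pendulum_reward(theta, theta_dot, torque):
    """Negative quadratic cost in angle, angular velocity and torque."""
    cost = (angle_normalize(theta) ** 2
            + 0.1 * theta_dot ** 2
            + 0.001 * torque ** 2)
    return -cost


# Upright, at rest, with no torque applied: the best possible reward.
best = pendulum_reward(0.0, 0.0, 0.0)
# Hanging down at the assumed maximum speed and torque: the worst reward.
worst = pendulum_reward(math.pi, 8.0, 2.0)
```

Under these assumptions the worst case evaluates to roughly $-16.27$, which matches the range of about $-16$ to $0$ stated in the text.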

3.2 Settings

The hyperparameter settings for the actor and critic networks are simple. In the aforementioned fully connected layers, we use a hidden size of 64 nodes, which we chose empirically. Furthermore, the discount rate $\gamma$ is set to 0.99.

To evaluate how different values of $n$ affect performance, we conduct experiments and investigate the model behavior at different learning stages.

To make the comparison objective and accurate, instead of fixing the number of training episodes, we fix the total number of training steps at $10000$. More specifically, during training, each agent interacts with and learns in the environment for $100$ steps at a time, strictly following its update scheme (n-step, TD or MC). For example, if in a training cycle the MC agent starts at time-step 14 of an episode and the episode is still running after 86 more steps (100 steps in total), the MC agent receives no update in that cycle. Then, for evaluation, we run the agent for 50 episodes in the same environment as in training and store the total reward of each episode. With this data, the learning behavior and performance of each agent can be compared in terms of the mean and standard deviation per evaluation step: the mean indicates the effectiveness of learning and the variance its stability.

To fully cover MC, TD and n-step bootstrapping, we chose and tested 6 different values of $n$, given the termination conditions of the two environments, ranging from 1-step to max-step (equivalent to Monte Carlo). Finally, to reduce the effect of randomness, three different random seeds are tested. The experiment settings are shown in Table 1.

- Training: each agent gains experience in the environment for $100$ steps at a time and learns (updates its networks) according to its scheme.

- Evaluation: after every $100$ training steps, each agent is tested in the same environment for $50$ episodes. No learning takes place in this stage, and the rewards are stored.

Table 1: Experiment settings.

| Environment | Train Steps | Eval Interval (steps) | Eval Episodes | Random Seeds | Roll-out Steps ($n$) |
|---|---|---|---|---|---|
| CartPole-v0 | 10000 | 100 | 50 | [42, 36, 15] | [1, 10, 40, 80, 150, 200] |
| Pendulum-v0 | 10000 | 100 | 50 | [42, 36, 15] | [1, 10, 40, 80, 150, 200] |
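The alternation between training and evaluation described in this section can be summarized by the following sketch. The callables `train_fn` and `eval_episode_fn` are hypothetical placeholders for the agent code, and the default arguments mirror the settings listed above.

```python
import statistics


def run_schedule(train_fn, eval_episode_fn, total_steps=10000,
                 eval_interval=100, eval_episodes=50):
    """Alternate training chunks and evaluation phases.

    train_fn(num_steps): the agent interacts and learns for num_steps steps.
    eval_episode_fn(): runs one episode without learning and returns its
    total reward. Both are placeholders for the real agent code.
    """
    history = []
    for step in range(eval_interval, total_steps + 1, eval_interval):
        train_fn(eval_interval)
        returns = [eval_episode_fn() for _ in range(eval_episodes)]
        # Mean tracks learning progress, standard deviation tracks stability.
        history.append(
            (step, statistics.mean(returns), statistics.pstdev(returns))
        )
    return history


# Stub usage: a "trainer" that only counts steps and a constant evaluator.
trained_steps = []
history = run_schedule(trained_steps.append, lambda: 1.0)
```

With the default settings this yields 100 evaluation points per run, one after every 100 training steps, which is the granularity at which the learning curves are compared.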

4 Results

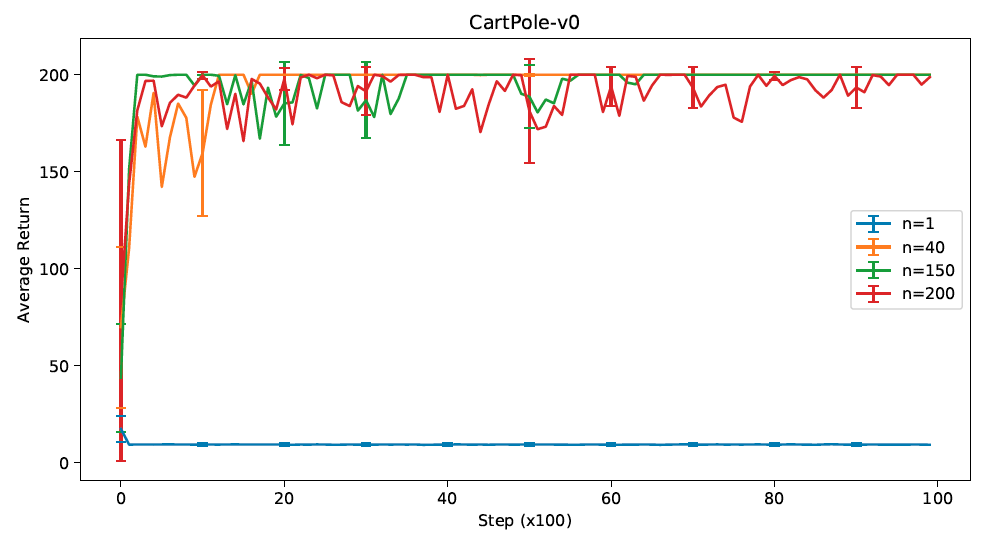

The results are shown in Figures 4 to 7, where, for better visualization and distinction, only two n-step variations are selected.

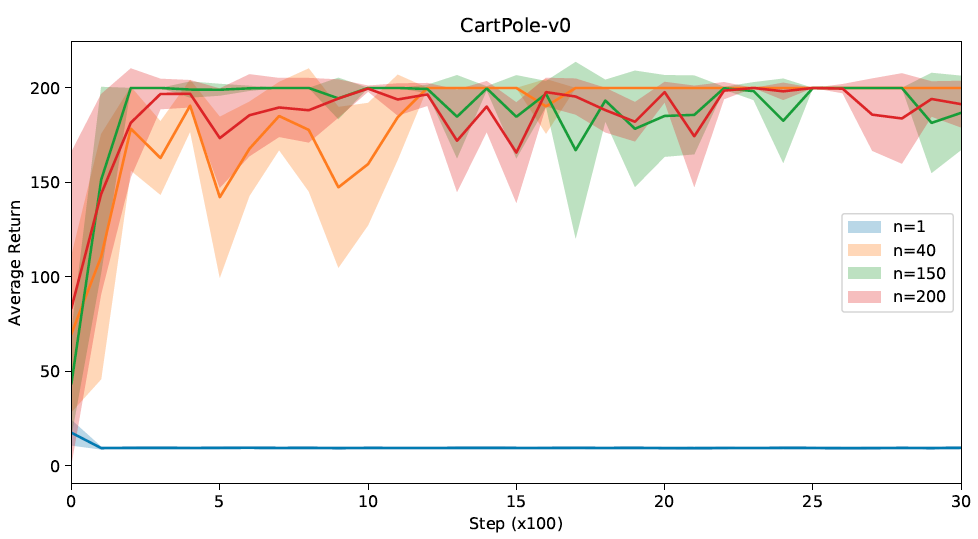

4.1 CartPole

As shown in Figure 4, all variations except 1-step TD eventually converge to the expected average return of $200$. The 1-step TD agent fails to learn and achieves an average reward of around 10, possibly because, given the complexity of the environment, the bias in 1-step learning is too large and the learning diverges. It can also be observed that the variance increases with increasing $n$, which agrees with the fact that Monte-Carlo methods have low bias but high variance compared with 1-step TD methods, and vice versa.

Furthermore, in the results truncated to 3000 steps in Figure 5, we can see that convergence is significantly slower for the smaller $n$ (40), because of its relatively higher bias, whereas for $n=150$ and $n=200$ convergence is fast.

To summarize, taking into consideration both convergence stability and convergence speed, a relatively good performance is observed for $n=40$.

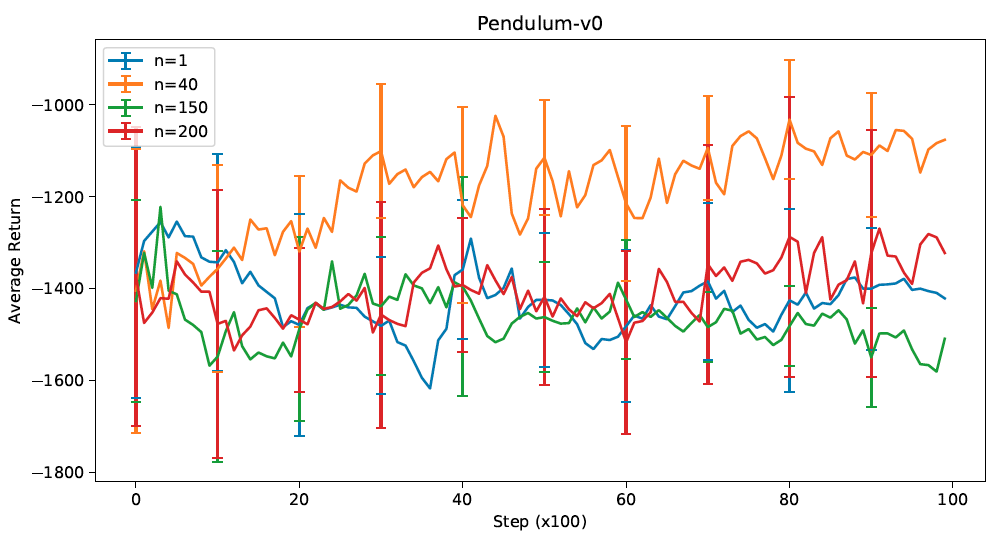

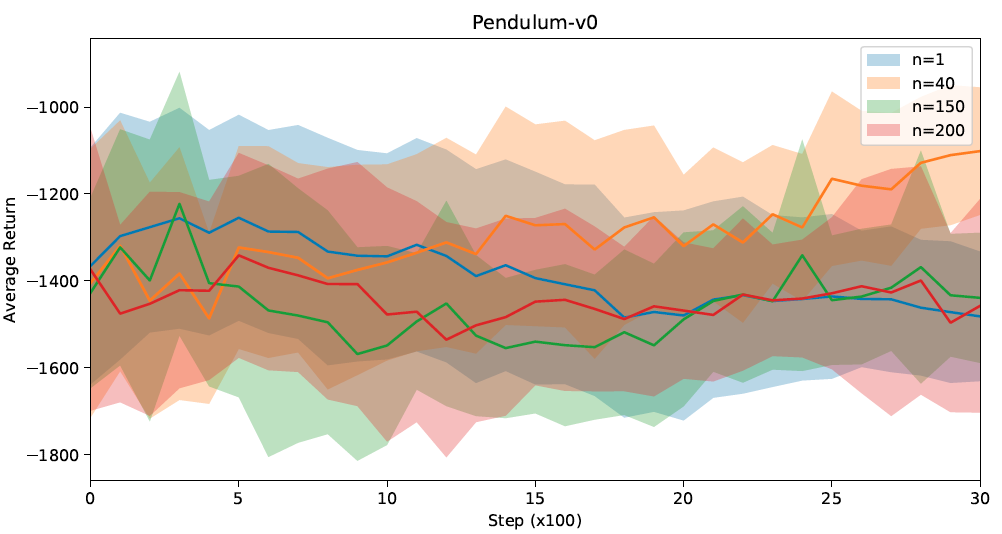

4.2 Pendulum

As shown in Figure 6, the performance is in general not as good as in the CartPole case, and convergence is hardly achieved. For $n=40$, the learning curve clearly tends to increase and converge, but for the other curves, given the high variance, no similar conclusion can be drawn. Nonetheless, the bias-variance trade-off pattern with respect to $n$ can still be observed, as the standard deviations for smaller $n$ (1, 40) are relatively higher than those for larger $n$ (150, 200).

On the other hand, the truncated results in Figure 7 show that $n=40$ tends to converge faster than the others.

To summarize, $n=40$ would be the preferable choice for this environment.

5 Conclusion

In this project, we investigate how different values of $n$ for n-step bootstrapping affect the learning behavior of the A2C agent. We show that with $n=1$, i.e., the 1-step TD method, the A2C agents are seldom capable of learning in either the CartPole or the Pendulum environment. With MC, the A2C agents in the CartPole environment learn with faster convergence but show more volatile and unstable behavior. For the Pendulum environment, however, convergence is not observed and further investigation is needed. Overall, our experiments show that n-step bootstrapping achieves relatively better performance in terms of convergence stability and convergence speed than 1-step TD and MC by trading off bias and variance. Hence, choosing an appropriate intermediate $n$ could be vital for different applications or problems in Reinforcement Learning.

References

[1] Sutton, Richard S., and Andrew G. Barto. Reinforcement learning: An introduction. MIT press, 2018.

[2] Schulman, John, et al. “High-dimensional continuous control using generalized advantage estimation.” arXiv preprint arXiv:1506.02438 (2015).

[3] Agarap, Abien Fred. “Deep learning using rectified linear units (relu).” arXiv preprint arXiv:1803.08375 (2018).

