The system is as follows:

[root@master ~]# lsb_release -a

LSB Version: :base-4.0-amd64:base-4.0-noarch:core-4.0-amd64:core-4.0-noarch:graphics-4.0-amd64:graphics-4.0-noarch:printing-4.0-amd64:printing-4.0-noarch

Distributor ID: CentOS

Description: CentOS release 6.4 (Final)

Release: 6.4

Codename: Final

Java version and path:

[root@master ~]# java -version

java version "1.8.0_181"

Java(TM) SE Runtime Environment (build 1.8.0_181-b13)

Java HotSpot(TM) 64-Bit Server VM (build 25.181-b13, mixed mode)

[root@master ~]# which java

/usr/java/jdk1.8.0_181/bin/java

IP address of the Linux host: 10.1.108.64

The Hadoop version is 2.8.0. Download address: http://www.apache.org/dyn/closer.cgi/hadoop/common/hadoop-2.8.0/hadoop-2.8.0.tar.gz

1. Install the JDK.

2. After downloading Hadoop, extract it to /root/hadoop/hadoop-2.8.0.

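Edit /root/.bash_profile, add the following lines, and run source /root/.bash_profile so that they take effect in the current shell (running the file with bash would only set the variables in a child process):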

export JAVA_HOME=/usr/java/jdk1.8.0_181

export HADOOP_HOME=/root/hadoop/hadoop-2.8.0

export PATH=$JAVA_HOME/bin:$PATH:$HOME/bin:$HADOOP_HOME/bin

Edit /etc/profile, add the following lines, and run source /etc/profile to make them take effect:

export JAVA_HOME=/usr/java/jdk1.8.0_181

export HADOOP_HOME=/root/hadoop/hadoop-2.8.0

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib:$HADOOP_COMMON_LIB_NATIVE_DIR"
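Before moving on, it can help to confirm that the two variables point at real installations. The following is a minimal sketch; check_home is a hypothetical helper written for this post, not something shipped with Hadoop or the JDK.

```shell
#!/usr/bin/env bash
# Sketch: verify that a *_HOME variable points at a usable install.
# check_home is a hypothetical helper, not part of Hadoop or the JDK.
# Arguments: variable name (for messages), its value, expected binary in bin/.
check_home() {
    local name="$1" dir="$2" binary="$3"
    if [ -z "$dir" ]; then
        echo "$name is not set"
        return 1
    fi
    if [ ! -x "$dir/bin/$binary" ]; then
        echo "$name=$dir has no executable bin/$binary"
        return 1
    fi
    echo "$name=$dir looks fine"
}

# Usage after sourcing the profile:
#   check_home JAVA_HOME "$JAVA_HOME" java
#   check_home HADOOP_HOME "$HADOOP_HOME" hadoop
```

If either call reports a problem, the export lines above are the first thing to recheck.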

3. Confirm the hostname:

[root@master hadoop]# hostname

master

Edit /etc/hosts as follows:

[root@master hadoop]# cat /etc/hosts

127.0.0.1 localhost master localhost4 localhost4.localdomain4

::1 localhost master localhost6 localhost6.localdomain6

Edit /etc/sysconfig/network as follows:

[root@master hadoop]# cat /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=master

GATEWAY=10.1.108.1

Reboot so that the change takes effect.
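With the /etc/hosts file shown in this step, master resolves to 127.0.0.1. A daemon that is told to bind to master:PORT then listens on the loopback interface only, so other machines cannot reach it. The sketch below looks up a name in a hosts-format file and reports whether the address is a loopback one. Both function names are hypothetical helpers, and the lookup only reads the file; it does not consult DNS.

```shell
#!/usr/bin/env bash
# resolve_in_hosts FILE NAME: print the first address that FILE maps NAME to.
# Hypothetical helper; it reads a hosts-format file and ignores DNS.
resolve_in_hosts() {
    local file="$1" name="$2"
    awk -v name="$name" '
        { sub(/#.*/, "") }                  # drop comments
        {
            for (i = 2; i <= NF; i++) {     # field 1 is the address
                if ($i == name) { print $1; exit }
            }
        }
    ' "$file"
}

# is_loopback ADDRESS: succeed if ADDRESS is an IPv4 or IPv6 loopback address.
is_loopback() {
    case "$1" in
        127.*|::1) return 0 ;;
        *) return 1 ;;
    esac
}

# Usage:
#   addr=$(resolve_in_hosts /etc/hosts master)
#   is_loopback "$addr" && echo "master resolves to loopback ($addr)"
```

If remote access by hostname is wanted, mapping master to 10.1.108.64 in /etc/hosts is the usual alternative; whether that suits this setup is a judgment call the original post does not discuss.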

4. Configure the Hadoop files core-site.xml, hdfs-site.xml, mapred-site.xml and yarn-site.xml (located in /root/hadoop/hadoop-2.8.0/etc/hadoop) as follows:

core-site.xml: create a tmp directory under /root/hadoop/. Change the hostname to your own; the rest can be copied as is.

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/root/hadoop/tmp</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.groups</name>

<value>*</value>

</property>

</configuration>

hdfs-site.xml: create an hdfs directory under /root/hadoop, then create name and data directories under hdfs.

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/root/hadoop/hdfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/root/hadoop/hdfs/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>master:9001</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

</configuration>
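The directories referenced by hadoop.tmp.dir, dfs.namenode.name.dir and dfs.datanode.data.dir can be created in one go. A small sketch follows; make_hadoop_dirs is a hypothetical helper name, and the base path is passed in so the function can be tried on a scratch directory first.

```shell
#!/usr/bin/env bash
# make_hadoop_dirs BASE: create BASE/tmp, BASE/hdfs/name and BASE/hdfs/data.
# Hypothetical helper; mkdir -p makes it safe to run more than once.
make_hadoop_dirs() {
    local base="$1"
    mkdir -p "$base/tmp" "$base/hdfs/name" "$base/hdfs/data"
}

# Usage for the layout in this post:
#   make_hadoop_dirs /root/hadoop
```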

mapred-site.xml (Hadoop 2.8.0 ships only mapred-site.xml.template; copy it to mapred-site.xml first)

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>master:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>master:19888</value>

</property>

</configuration>

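yarn-site.xml: note that yarn.resourcemanager.webapp.address must not use master; it has to use 10.1.108.64, otherwise the Windows machine cannot resolve the name and the browser cannot open the page.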

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>master:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>master:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>master:8031</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>master:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>10.1.108.64:8088</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>6078</value>

</property>

</configuration>
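After editing four files by hand, it is easy to leave a typo in a property. The sketch below reads a single value back out of a Hadoop-style XML file with awk. get_prop is a hypothetical helper and not a real XML parser: it assumes each name and value tag sits on one line, as in the listings above. On a machine where the Hadoop commands are on the PATH, hdfs getconf -confKey fs.defaultFS is the built-in way to see the value Hadoop actually loads.

```shell
#!/usr/bin/env bash
# get_prop FILE KEY: print the <value> that follows <name>KEY</name> in FILE.
# Hypothetical helper; assumes each <name> and <value> element sits on one line.
get_prop() {
    local file="$1" key="$2"
    awk -v key="$key" '
        /<name>/ {
            n = $0
            sub(/.*<name>/, "", n)
            sub(/<\/name>.*/, "", n)
            found = (n == key)          # remember whether this is our property
        }
        /<value>/ && found {
            v = $0
            sub(/.*<value>/, "", v)
            sub(/<\/value>.*/, "", v)
            print v
            exit
        }
    ' "$file"
}

# Usage:
#   get_prop /root/hadoop/hadoop-2.8.0/etc/hadoop/core-site.xml fs.defaultFS
```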

5. Edit the script yarn-env.sh: find JAVA_HOME and change it to export JAVA_HOME=/usr/java/jdk1.8.0_181, and set JAVA_HEAP_MAX=-Xmx1024m. Then edit the script hadoop-env.sh and change JAVA_HOME to export JAVA_HOME=/usr/java/jdk1.8.0_181.
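The same JAVA_HOME edit has to be made in two scripts, so it can be scripted. This is a sketch under a few assumptions: set_java_home is a hypothetical helper, it relies on GNU sed's -i option (as found on CentOS), and it assumes the JDK path contains no | or & characters.

```shell
#!/usr/bin/env bash
# set_java_home FILE JAVA_HOME_DIR
# Hypothetical helper. Rewrites every existing "export JAVA_HOME=" line in
# FILE (commented out or not), or appends one if the file has none.
# Assumes GNU sed (-i edits the file in place).
set_java_home() {
    local file="$1" java_home="$2"
    local pattern='^[[:space:]]*#\{0,1\}[[:space:]]*export JAVA_HOME='
    if grep -q "$pattern" "$file"; then
        sed -i "s|${pattern}.*|export JAVA_HOME=${java_home}|" "$file"
    else
        printf 'export JAVA_HOME=%s\n' "$java_home" >> "$file"
    fi
}

# Usage, from /root/hadoop/hadoop-2.8.0/etc/hadoop:
#   set_java_home yarn-env.sh   /usr/java/jdk1.8.0_181
#   set_java_home hadoop-env.sh /usr/java/jdk1.8.0_181
```

JAVA_HEAP_MAX is left untouched by this helper and still has to be edited separately.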

6. Edit the node list file slaves. There is currently only one node, master, so enter master:

[root@master hadoop]# cat slaves

master

[root@master hadoop]#

7. Before the first start, initialize the NameNode on the master node with the following command:

hdfs namenode -format

Output ending like the following indicates success:

18/09/18 16:24:21 INFO util.ExitUtil: Exiting with status 0

18/09/18 16:24:21 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at localhost/127.0.0.1

************************************************************/

8. Start Hadoop. Here HDFS and MapReduce (YARN) are started together:

[root@master hadoop-2.8.0]# pwd

/root/hadoop/hadoop-2.8.0

[root@master hadoop-2.8.0]# ./sbin/start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [master]

master: starting namenode, logging to /root/hadoop/hadoop-2.8.0/logs/hadoop-root-namenode-master.out

master: starting datanode, logging to /root/hadoop/hadoop-2.8.0/logs/hadoop-root-datanode-master.out

Starting secondary namenodes [master]

master: starting secondarynamenode, logging to /root/hadoop/hadoop-2.8.0/logs/hadoop-root-secondarynamenode-master.out

18/09/18 16:28:00 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

starting yarn daemons

starting resourcemanager, logging to /root/hadoop/hadoop-2.8.0/logs/yarn-root-resourcemanager-master.out

master: starting nodemanager, logging to /root/hadoop/hadoop-2.8.0/logs/yarn-root-nodemanager-master.out

[root@master hadoop-2.8.0]#

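Check the nodes; at this point the DataNode should be reported. Note that the report captured below shows zero capacity, which normally means no DataNode had registered with the NameNode when the command was run.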

[root@master hadoop-2.8.0]# ./bin/hdfs dfsadmin -report

Configured Capacity: 0 (0 B)

Present Capacity: 0 (0 B)

DFS Remaining: 0 (0 B)

DFS Used: 0 (0 B)

DFS Used%: NaN%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

Missing blocks (with replication factor 1): 0

Pending deletion blocks: 0

-------------------------------------------------

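Run jps; output like the following indicates that the daemons are running. The listing below has no NameNode entry; on a working single-node setup jps normally lists NameNode as well.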

[root@master hadoop-2.8.0]# jps

18674 ResourceManager

18499 SecondaryNameNode

19108 Jps

18777 NodeManager

18351 DataNode
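Reading a jps listing by eye makes it easy to miss an absent daemon. The sketch below prints whichever of the five daemons expected on this single-node setup is missing. missing_daemons is a hypothetical helper; it reads jps output on standard input and matches the second column exactly, so SecondaryNameNode does not count as NameNode.

```shell
#!/usr/bin/env bash
# missing_daemons: read `jps` output on stdin and print every expected Hadoop
# daemon that is absent. Exit status is 0 only when nothing is missing.
# Hypothetical helper; the expected list assumes a single-node setup.
missing_daemons() {
    awk '
        BEGIN {
            n = split("NameNode DataNode SecondaryNameNode ResourceManager NodeManager", want, " ")
        }
        { seen[$2] = 1 }                 # jps prints "<pid> <name>"
        END {
            missing = 0
            for (i = 1; i <= n; i++) {
                if (!(want[i] in seen)) { print want[i]; missing = 1 }
            }
            exit missing
        }
    '
}

# Usage:
#   jps | missing_daemons
```

Fed the jps listing shown above, this prints NameNode. In that case the NameNode log under /root/hadoop/hadoop-2.8.0/logs is the place to look.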

9. Turn off the Linux firewall with service iptables stop, then check that its status is stopped:

[root@master hadoop-2.8.0]# service iptables status

iptables: Firewall is not running.

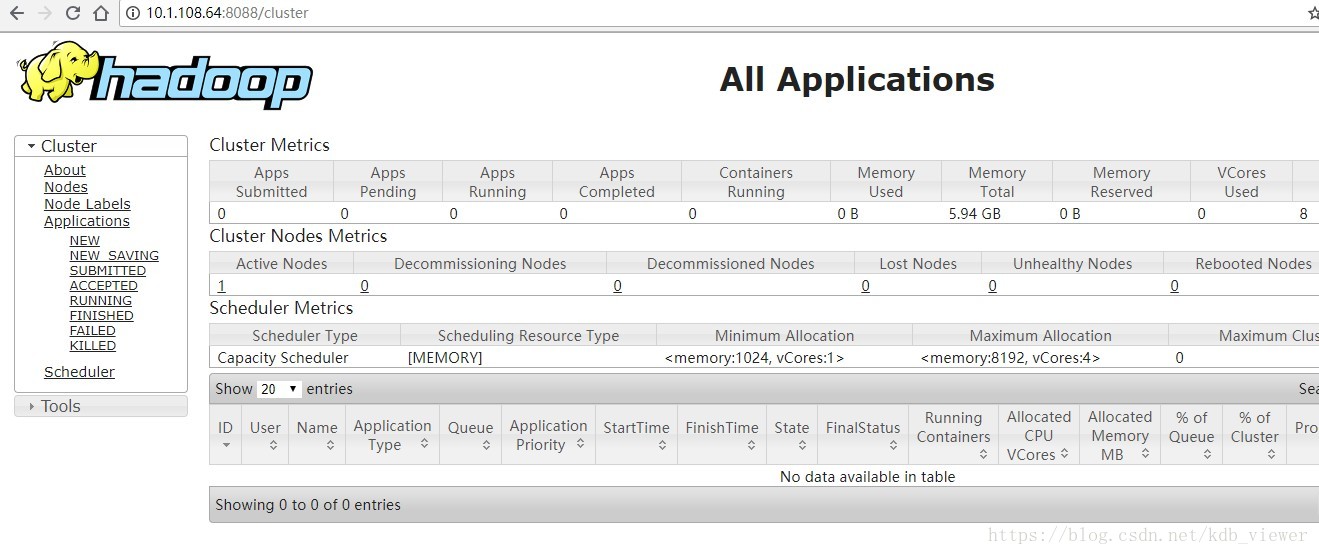

10. Check port 8088; it is now being listened on:

[root@master hadoop-2.8.0]# netstat -lntp | grep 8088

tcp 0 0 ::ffff:10.1.108.64:8088 :::* LISTEN 21702/java
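What matters in the netstat line is the local address: a 127.0.0.1 address would mean the web UI is reachable only from the host itself, while 10.1.108.64 (as above) is reachable from other machines. The sketch below pulls the listening addresses for a port out of netstat output. listen_addresses is a hypothetical helper and assumes the column layout shown above, with the local address in column 4 and the state in column 6.

```shell
#!/usr/bin/env bash
# listen_addresses PORT: read `netstat -lnt` (or -lntp) output on stdin and
# print each local address that is in LISTEN state on PORT.
# Hypothetical helper; assumes local address in column 4, state in column 6.
listen_addresses() {
    awk -v port="$1" '
        $6 == "LISTEN" && $4 ~ (":" port "$") { print $4 }
    '
}

# Usage:
#   netstat -lnt | listen_addresses 8088
#   netstat -lnt | listen_addresses 50070
```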

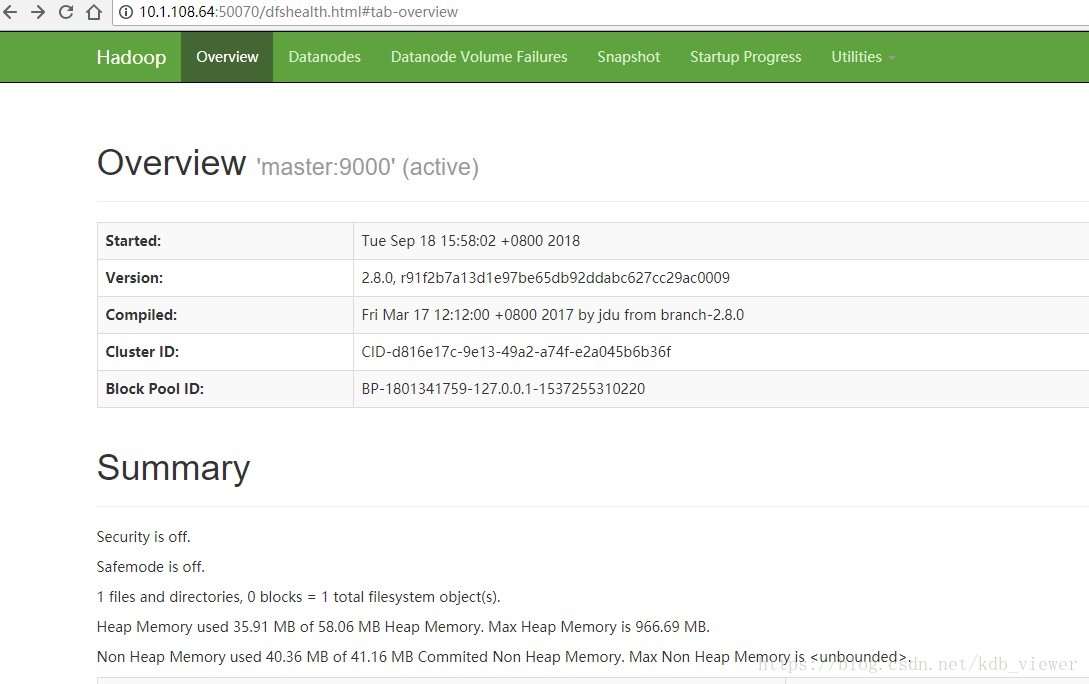

Open http://10.1.108.64:8088 and http://10.1.108.64:50070 in a browser to manage Hadoop. Note that in order to access port 50070 ...
