TITLE: Be Your Own Prada: Fashion Synthesis with Structural Coherence

AUTHOR: Shizhan Zhu, Sanja Fidler, Raquel Urtasun, Dahua Lin, Chen Change Loy

ASSOCIATION: The Chinese University of Hong Kong, University of Toronto, Vector Institute, Uber Advanced Technologies Group

FROM: ICCV2017

CONTRIBUTION

A method is developed that generates new outfits onto existing photos so that it can

- retain the body shape and pose of the wearer,

- produce regions and the associated textures that conform to the language description,

- enforce coherent visibility of body parts.

METHOD

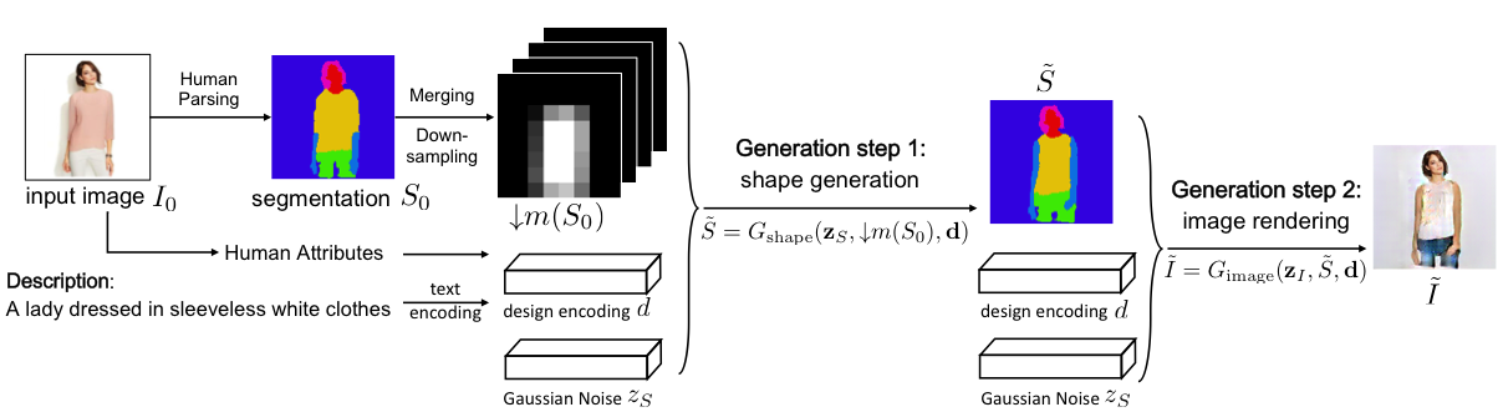

The workflow is shown in the figure above. Given an input photograph of a person and a sentence describing the desired new outfit, the model first generates a segmentation map $\tilde{S}$ using the generator of the first GAN. A second GAN then renders the new image, guided by the segmentation map generated in the previous step. At test time, the final rendered image is obtained with a single forward pass through the two GANs.
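The two-stage data flow described above can be sketched as plain function composition. This is only a toy illustration, not the authors' implementation: `synthesize`, `toy_g_shape`, and `toy_g_image` are hypothetical names, the photograph is represented by a small grid of region labels, the sentence is matched by keyword instead of a learned encoding, and the noise inputs are omitted.

```python
from typing import Callable, List

SegMap = List[List[int]]   # toy stand-in: H x W grid of region labels
Image = List[List[str]]    # toy stand-in: H x W grid of texture names


def synthesize(
    photo_seg: SegMap,
    description: str,
    g_shape: Callable[[SegMap, str], SegMap],
    g_image: Callable[[SegMap, str], Image],
) -> Image:
    """Run the two stages in sequence, as in one test-time forward pass."""
    new_seg = g_shape(photo_seg, description)   # stage 1: where each region goes
    return g_image(new_seg, description)        # stage 2: what each region looks like


# Toy stand-ins (not trained networks): label 0 = background, 1 = garment.
def toy_g_shape(seg: SegMap, description: str) -> SegMap:
    out = [row[:] for row in seg]
    if "long" not in description:
        return out
    # Pretend a "long" outfit extends the garment region down by one row.
    for r in range(1, len(seg)):
        for c in range(len(seg[r])):
            if seg[r - 1][c] == 1:
                out[r][c] = 1
    return out


def toy_g_image(seg: SegMap, description: str) -> Image:
    texture = "red" if "red" in description else "plain"
    return [[texture if v == 1 else "bg" for v in row] for row in seg]


result = synthesize([[1, 1], [0, 0]], "long red dress", toy_g_shape, toy_g_image)
```

The point of the split is that the second stage never sees the original layout directly: it only fills in the regions that the first stage produced, which is why the segmentation map acts as guidance for rendering.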

The first generator $G_{shape}$ aims to generate the desired semantic segmentation map $\tilde{S}$ by conditioning on the spatial constraint $\downarrow m(S_0)$, the design coding $d$, and the Gaussian noise $z_S$. Here $S_0$ is the original pixel-wise one-hot segmentation map of the input image, with height $m$ and width …

The second generator $G_{image}$ renders the final image $\tilde{I}$ based on the generated segmentation map $\tilde{S}$, the design coding $d$, and the Gaussian noise $z_I$.
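To make the "pixel-wise one-hot segmentation map" concrete, here is a minimal sketch of how a grid of per-pixel label indices can be turned into a one-hot map. The function name `one_hot_segmentation`, the parameter `num_labels`, and the three example labels are assumptions made for illustration; the note does not specify the label set used in the paper.

```python
from typing import List


def one_hot_segmentation(
    labels: List[List[int]], num_labels: int
) -> List[List[List[int]]]:
    """Turn an H x W grid of label indices into an H x W x num_labels one-hot map."""
    one_hot = []
    for row in labels:
        one_hot_row = []
        for label in row:
            if not 0 <= label < num_labels:
                raise ValueError(f"label {label} outside [0, {num_labels})")
            pixel = [0] * num_labels
            pixel[label] = 1          # exactly one channel is active per pixel
            one_hot_row.append(pixel)
        one_hot.append(one_hot_row)
    return one_hot


# Hypothetical labels: 0 = background, 1 = upper clothes, 2 = skin.
labels = [
    [0, 1, 1],
    [0, 2, 1],
]
s0 = one_hot_segmentation(labels, num_labels=3)
```

Each pixel carries one channel per label with a single 1 in it, so the map records which region a pixel belongs to without implying any ordering between labels.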

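Summary: the paper proposes a new method that synthesizes a described outfit onto the person in an existing photo while preserving the wearer's body shape and pose, ensuring that the clothing regions and textures match the description, and keeping the visibility of body parts coherent.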
