The Buddy System

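Once the kernel has finished initializing, the buddy system takes over physical memory management: it handles allocating and freeing physical memory in every zone. Its basic principles are as follows: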

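- Memory is grouped, in units of pages, into a number of orders. The number of orders is MAX_ORDER, usually 11 (orders 0 through 10). A block of order n consists of 2^n contiguous pages; we call such a block a memory area.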

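- When a process requests memory, the allocation always comes from the smallest order that fits. For example, a 7 KB request (the nearest fit is 8 KB = 4 KB * 2^1) is served with a memory area from order 1.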

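- When every order-k memory area has been handed out and none is left to allocate, one order-(k+1) area is split into two order-k areas. For example, if order 4 is exhausted, an order-5 area is split into two order-4 areas.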

- When both areas that were split from a higher-order area have been freed, the two buddies are merged back into the higher-order area.
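The order selection in the 7 KB example can be sketched in a few lines of standalone C. This is only an illustration under stated assumptions: a 4 KB page size and MAX_ORDER of 11, with a hypothetical helper name `size_to_order`. The kernel has its own helper for this job (`get_order`); the sketch does not reproduce it.

```c
#include <assert.h>
#include <stddef.h>

#define PAGE_SIZE_BYTES 4096u   /* assumption: 4 KB pages, as in the 7 KB example */
#define MAX_ORDER 11            /* assumption: orders 0..10 */

/*
 * Return the smallest order whose block (PAGE_SIZE_BYTES << order) can hold
 * `size` bytes, or -1 if size is 0 or exceeds the largest block.
 */
int size_to_order(size_t size)
{
    if (size == 0)
        return -1;
    for (int order = 0; order < MAX_ORDER; order++) {
        if (((size_t)PAGE_SIZE_BYTES << order) >= size)
            return order;
    }
    return -1;
}
```

Calling `size_to_order(7 * 1024)` yields order 1, that is, one 8 KB block, which matches the example in the list above.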

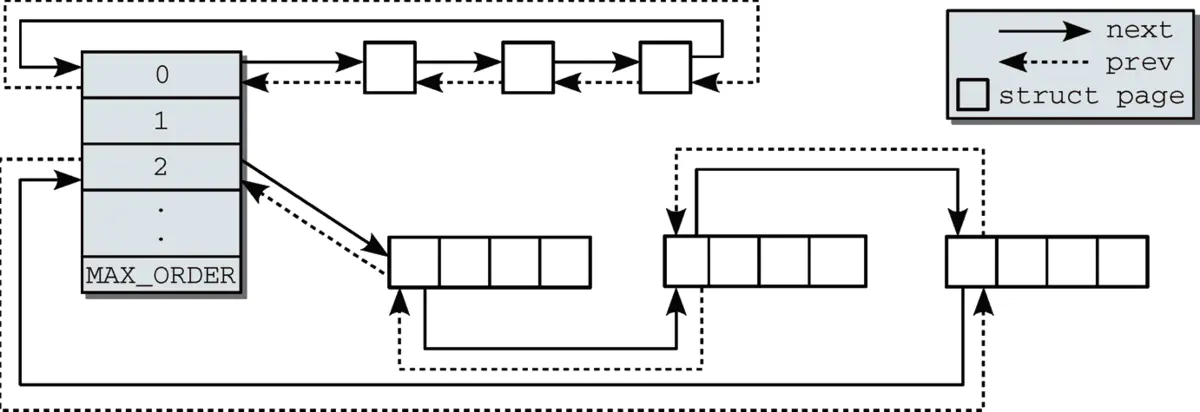

Structure of the buddy system

struct free_area {

struct list_head free_list[MIGRATE_TYPES]; /* one free list per migrate type (unmovable, reclaimable, movable, ...); mainly used to limit external fragmentation */

unsigned long nr_free; /* number of free blocks of this order, over all migrate types */

};

nr_free counts the free blocks of this order (summed over all migrate types); it is a block count, not a page count.

Memory areas in the buddy system

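Memory areas of the same order need not be adjacent to one another, but each area is internally one contiguous range of memory. When an area is split in half, the half that remains free is linked into the free list of the next lower order.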

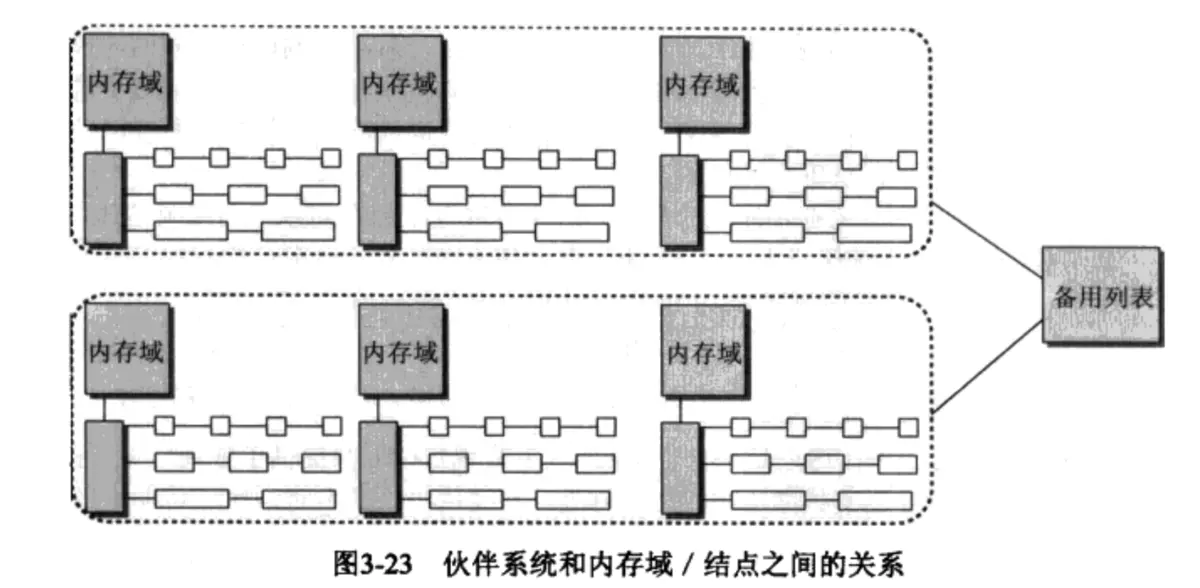

Relationship between the buddy system and zones/nodes

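As mentioned earlier, on a NUMA system every memory node has its own zones plus a fallback list. The fallback list orders the available nodes by priority according to distance and load, and within each node the zones are ordered from cheapest to most expensive. The figure above shows the structure.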

Fragmentation avoidance

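Earlier it was said that external fragmentation only concerns the kernel, because only the kernel maps (most of) its virtual addresses directly onto physical addresses, while user space is assembled page by page from non-contiguous physical pages behind a virtual address space. That is only partly true: many CPUs now support huge pages, so user space has to deal with fragmentation as well. The biggest problem with direct mapping is that it produces a lot of fragmentation. After memory has been allocated and freed repeatedly, the highest-order free lists run empty, and large contiguous requests can no longer be satisfied even though plenty of memory is still free in total. The kernel's anti-fragmentation approach is to avoid creating fragments in the first place.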

Physical memory fragmentation

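In storage systems, fragmentation is usually fixed by moving blocks around to compact them, but some memory cannot be moved. The kernel therefore uses an "anti-fragmentation" technique that tries not to create fragments at all: it divides pages into three classes (unmovable, reclaimable, and movable) and allocates from the class that matches how the memory will be used.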

Unmovable pages: fixed in memory and cannot be relocated. They are mainly used by the core kernel.

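Reclaimable pages: cannot be moved directly but can be dropped, because their contents can be regenerated from some source, for example data mapped from a file. kswapd periodically frees such pages based on how often they are accessed.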

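Movable pages: can be moved freely, which lets memory be compacted to open up large contiguous free ranges. Pages belonging to user space are of this type (although user-space pages rarely need to be moved, except for the huge-page allocations discussed above).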

Even with pages divided into types, the kernel still cannot fully solve its own fragmentation problem. How does the kernel allocate by type?

And what happens when the requested type has run out of space?

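When the kernel allocates physical memory and the requested type has nothing free, it falls back to the next type in a fixed order. For an unmovable request the basic order is unmovable > reclaimable > movable, followed by the reserve type in kernels that still have one.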
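A toy model of such a fallback table may make the idea concrete. The names below (`enum mt`, `fallback_order`, `pick_type`) are hypothetical. The row contents follow my recollection of the fallback table in `mm/page_alloc.c` for kernels of the era quoted in this article and should be checked against the kernel version you actually use. The real allocator also decides whether to take over whole pageblocks, which this sketch ignores.

```c
#include <assert.h>

/* Hypothetical, simplified migrate types for illustration only. */
enum mt { MT_UNMOVABLE, MT_MOVABLE, MT_RECLAIMABLE, MT_NR };

/* Fallback order per requested type; MT_NR terminates each row. */
static const enum mt fallback_order[MT_NR][3] = {
    [MT_UNMOVABLE]   = { MT_RECLAIMABLE, MT_MOVABLE,   MT_NR },
    [MT_MOVABLE]     = { MT_RECLAIMABLE, MT_UNMOVABLE, MT_NR },
    [MT_RECLAIMABLE] = { MT_UNMOVABLE,   MT_MOVABLE,   MT_NR },
};

/*
 * free_blocks[t] is an assumed count of free blocks of type t.
 * Return the type to allocate from, or MT_NR if every list is empty.
 */
enum mt pick_type(enum mt wanted, const unsigned long free_blocks[MT_NR])
{
    assert(wanted < MT_NR);
    if (free_blocks[wanted] > 0)
        return wanted;
    for (int i = 0; fallback_order[wanted][i] != MT_NR; i++) {
        enum mt t = fallback_order[wanted][i];
        if (free_blocks[t] > 0)
            return t;
    }
    return MT_NR;
}
```

With no unmovable blocks left but reclaimable ones available, `pick_type(MT_UNMOVABLE, ...)` returns `MT_RECLAIMABLE`; only when that list is also empty does it move on to `MT_MOVABLE`.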

Global variables and helper functions

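Grouping pages by mobility is only enabled when the system has enough memory. How does the kernel define "enough"? Through two global variables, pageblock_order and pageblock_nr_pages: the first is an allocation order the kernel considers "large", and the second is the number of pages in a block of that order. If the architecture provides huge pages, pageblock_order is usually set to the huge-page order. The check itself is made in build_all_zonelists: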

enum migratetype {

MIGRATE_UNMOVABLE,

MIGRATE_MOVABLE,

MIGRATE_RECLAIMABLE,

MIGRATE_PCPTYPES, /* the number of types on the pcp lists */

MIGRATE_HIGHATOMIC = MIGRATE_PCPTYPES,

#ifdef CONFIG_CMA

/*

* MIGRATE_CMA migration type is designed to mimic the way

* ZONE_MOVABLE works. Only movable pages can be allocated

* from MIGRATE_CMA pageblocks and page allocator never

* implicitly change migration type of MIGRATE_CMA pageblock.

*

* The way to use it is to change migratetype of a range of

* pageblocks to MIGRATE_CMA which can be done by

* __free_pageblock_cma() function. What is important though

* is that a range of pageblocks must be aligned to

* MAX_ORDER_NR_PAGES should biggest page be bigger then

* a single pageblock.

*/

MIGRATE_CMA,

#endif

#ifdef CONFIG_MEMORY_ISOLATION

MIGRATE_ISOLATE, /* can't allocate from here */

#endif

MIGRATE_TYPES

};

void __ref build_all_zonelists(pg_data_t *pgdat)

{

...

vm_total_pages = nr_free_pagecache_pages();

if (vm_total_pages < (pageblock_nr_pages * MIGRATE_TYPES))

page_group_by_mobility_disabled = 1;

else

page_group_by_mobility_disabled = 0;

...

}

unsigned long nr_free_pagecache_pages(void)

{

return nr_free_zone_pages(gfp_zone(GFP_HIGHUSER_MOVABLE)); /* free pages in the zones that can hold reclaimable and movable pages */

}
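The threshold test in build_all_zonelists is simple arithmetic, sketched below as a standalone function. The function name and its parameters are illustrative, not kernel API; the caller supplies the page count, the pageblock order, and the number of migrate types.

```c
#include <assert.h>
#include <stdbool.h>

/*
 * Return true when grouping by mobility would be switched off, i.e. when
 * there are fewer pages than one pageblock per migrate type.
 * All three inputs are supplied by the caller (assumed values).
 */
bool mobility_grouping_disabled(unsigned long total_pages,
                                unsigned int pageblock_order,
                                unsigned int nr_migrate_types)
{
    unsigned long pageblock_nr_pages = 1UL << pageblock_order;

    return total_pages < pageblock_nr_pages * nr_migrate_types;
}
```

For example, with pageblock_order 9 (512 pages per block) and six migrate types, the threshold is 512 * 6 = 3072 pages, which is 12 MiB with 4 KB pages. Only very small systems fall below it.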

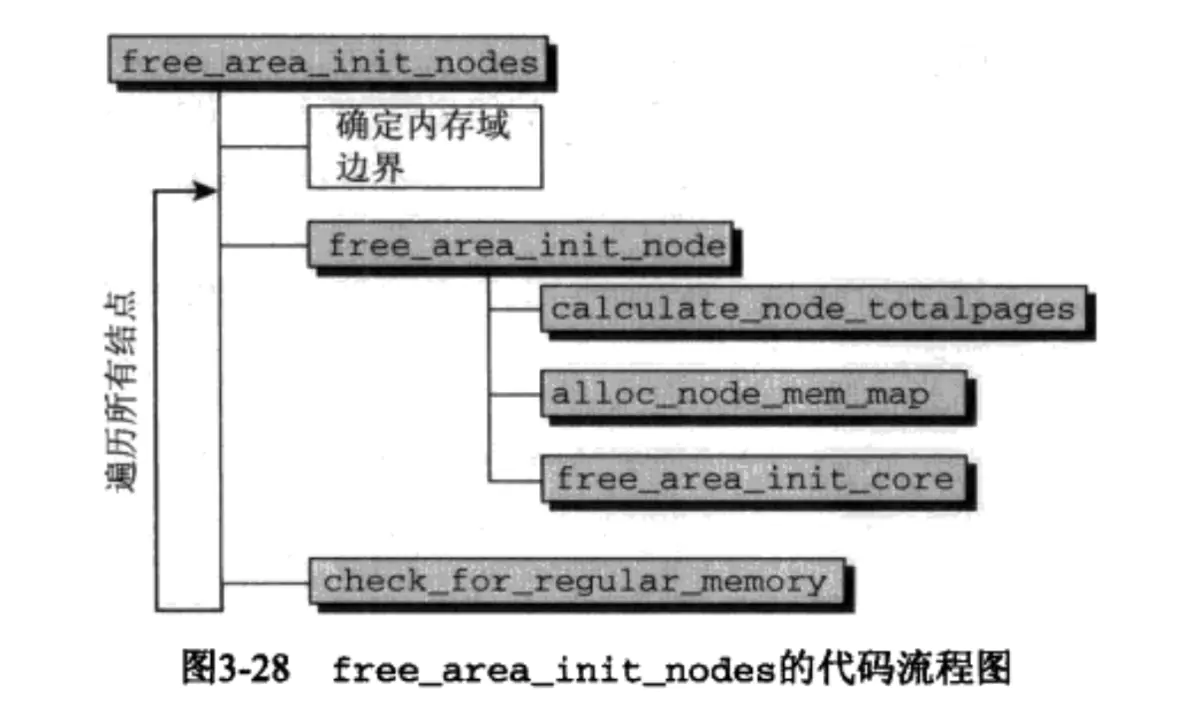

Zone initialization

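The previous article, "How the system loads the kernel's memory management module", noted that the last step of kernel memory initialization is free_area_init_nodes. It in turn calls free_area_init_node to initialize each node's zones and the buddy system.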

free_area_init_nodes code flow

The code in detail:

/*

* pfn: page frame number

* max_zone_pfn records the upper bound of each zone

*/

void __init free_area_init_nodes(unsigned long *max_zone_pfn)

{

unsigned long start_pfn, end_pfn;

int i, nid;

/* record the lowest and highest possible page frame number of each zone */

memset(arch_zone_lowest_possible_pfn, 0,

sizeof(arch_zone_lowest_possible_pfn));

memset(arch_zone_highest_possible_pfn, 0,

sizeof(arch_zone_highest_possible_pfn));

start_pfn = find_min_pfn_with_active_regions(); /* first page frame of the first zone */

for (i = 0; i < MAX_NR_ZONES; i++) {

if (i == ZONE_MOVABLE) /* ZONE_MOVABLE is computed separately */

continue;

end_pfn = max(max_zone_pfn[i], start_pfn);

arch_zone_lowest_possible_pfn[i] = start_pfn;

arch_zone_highest_possible_pfn[i] = end_pfn;

start_pfn = end_pfn;

}

/* Find the PFNs that ZONE_MOVABLE begins at in each node */

memset(zone_movable_pfn, 0, sizeof(zone_movable_pfn));

find_zone_movable_pfns_for_nodes();

... /* print some state*/

/* Initialise every node */

mminit_verify_pageflags_layout();

setup_nr_node_ids(); /* set nr_node_ids from the highest possible node ID */

zero_resv_unavail(); /* zero the struct pages of reserved but unavailable memory */

for_each_online_node(nid) {

pg_data_t *pgdat = NODE_DATA(nid);

free_area_init_node(nid, NULL,

find_min_pfn_for_node(nid), NULL);

/* Any memory on that node */

if (pgdat->node_present_pages)

node_set_state(nid, N_MEMORY);

check_for_memory(pgdat, nid);

}

}

void __init free_area_init_node(int nid, unsigned long *zones_size,

unsigned long node_start_pfn,

unsigned long *zholes_size)

{

pg_data_t *pgdat = NODE_DATA(nid);

unsigned long start_pfn = 0;

unsigned long end_pfn = 0;

/* initialize the memory node pgdat */

pgdat->node_id = nid;

pgdat->node_start_pfn = node_start_pfn;

pgdat->per_cpu_nodestats = NULL;

...

calculate_node_totalpages(pgdat, start_pfn, end_pfn,

zones_size, zholes_size); /* compute the page counts: spanned_pages, present_pages */

alloc_node_mem_map(pgdat);

pgdat_set_deferred_range(pgdat);

free_area_init_core(pgdat);

}

// Allocate the node's node_mem_map (the struct page array) without initializing its entries; they are filled in later from free_area_init_core.

static void __ref alloc_node_mem_map(struct pglist_data *pgdat)

{

unsigned long __maybe_unused start = 0;

unsigned long __maybe_unused offset = 0;

/* Skip empty nodes */

if (!pgdat->node_spanned_pages)

return;

start = pgdat->node_start_pfn & ~(MAX_ORDER_NR_PAGES - 1); /* round down to the start of the enclosing highest-order block */

offset = pgdat->node_start_pfn - start; /* offset of the node's first page frame within that block */

/* ia64 gets its own node_mem_map, before this, without bootmem */

if (!pgdat->node_mem_map) {

unsigned long size, end;

struct page *map;

end = pgdat_end_pfn(pgdat); /* end page frame of the node */

end = ALIGN(end, MAX_ORDER_NR_PAGES); /* round the end up to a highest-order block boundary */

size = (end - start) * sizeof(struct page);

map = memblock_alloc_node_nopanic(size, pgdat->node_id);

pgdat->node_mem_map = map + offset;

}

#ifndef CONFIG_NEED_MULTIPLE_NODES

/*

* With no DISCONTIG, the global mem_map is just set as node 0's

*/

if (pgdat == NODE_DATA(0)) {

mem_map = NODE_DATA(0)->node_mem_map;

#if defined(CONFIG_HAVE_MEMBLOCK_NODE_MAP) || defined(CONFIG_FLATMEM)

if (page_to_pfn(mem_map) != pgdat->node_start_pfn)

mem_map -= offset;

#endif /* CONFIG_HAVE_MEMBLOCK_NODE_MAP */

}

#endif

}
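The alignment arithmetic in alloc_node_mem_map can be tried out in user space. The sketch below assumes MAX_ORDER is 11 and that a highest-order block is 1 << (MAX_ORDER - 1) = 1024 pages; `struct memmap_span` and `memmap_span_for` are hypothetical names introduced only for this illustration.

```c
#include <assert.h>

#define MAX_ORDER 11                                   /* assumption */
#define MAX_ORDER_NR_PAGES (1UL << (MAX_ORDER - 1))    /* 1024 pages */

struct memmap_span {
    unsigned long start;   /* node start rounded down to a block boundary */
    unsigned long offset;  /* node_start_pfn - start */
    unsigned long end;     /* node end rounded up to a block boundary */
    unsigned long nr;      /* struct page entries to allocate: end - start */
};

struct memmap_span memmap_span_for(unsigned long node_start_pfn,
                                   unsigned long node_spanned_pages)
{
    struct memmap_span s;
    unsigned long mask = MAX_ORDER_NR_PAGES - 1;
    unsigned long end_pfn = node_start_pfn + node_spanned_pages;

    s.start  = node_start_pfn & ~mask;
    s.offset = node_start_pfn - s.start;
    s.end    = (end_pfn + mask) & ~mask;   /* power-of-two round-up */
    s.nr     = s.end - s.start;
    return s;
}
```

For a node that starts at pfn 1500 and spans 3000 pages, this gives start 1024, offset 476, end 5120, and 4096 struct page entries. The map is deliberately a little larger than the node so that every highest-order block it touches is fully described.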

/*

* Set up the zone data structures:

* - mark all pages reserved

* - mark all memory queues empty

* - clear the memory bitmaps

* NOTE: pgdat should get zeroed by caller.

* NOTE: this function is only called during early init.

*/

static void __init free_area_init_core(struct pglist_data *pgdat)

{

enum zone_type j;

int nid = pgdat->node_id;

pgdat_init_internals(pgdat);

pgdat->per_cpu_nodestats = &boot_nodestats;

for (j = 0; j < MAX_NR_ZONES; j++) {

struct zone *zone = pgdat->node_zones + j;

unsigned long size, freesize, memmap_pages;

unsigned long zone_start_pfn = zone->zone_start_pfn;

size = zone->spanned_pages;

freesize = zone->present_pages;

memmap_pages = calc_memmap_size(size, freesize); /* pages needed for the struct page array (metadata) that describes this zone */

...

// nr_kernel_pages counts the pages in directly mapped (non-highmem) zones; nr_all_pages also includes highmem pages

if (!is_highmem_idx(j))

nr_kernel_pages += freesize;

/* Charge for highmem memmap if there are enough kernel pages */

else if (nr_kernel_pages > memmap_pages * 2)

nr_kernel_pages -= memmap_pages;

nr_all_pages += freesize;

/*

* Initialize the zone's metadata and its per-CPU page cache.

*/

zone_init_internals(zone, j, nid, freesize);

if (!size)

continue;

set_pageblock_order(); /* set pageblock_order */

setup_usemap(pgdat, zone, zone_start_pfn, size); /* allocate the pageblock flags bitmap, which records each pageblock's migrate type */

init_currently_empty_zone(zone, zone_start_pfn, size); /* record the zone's start pfn and initialize its free_area lists through zone_init_free_lists */

memmap_init(size, nid, j, zone_start_pfn); /* initialize the zone's struct pages */

}

}

static void __meminit zone_init_free_lists(struct zone *zone)

{

unsigned int order, t;

for_each_migratetype_order(order, t) { /* walk the buddy free list of every order and migrate type */

INIT_LIST_HEAD(&zone->free_area[order].free_list[t]); /* point the list head's next and prev at itself */

zone->free_area[order].nr_free = 0;

}

}

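/* Key function of this step: it initializes every struct page and marks each pageblock as movable; the free lists themselves stay empty */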

void __meminit memmap_init_zone(unsigned long size, int nid, unsigned long zone,

unsigned long start_pfn, enum memmap_context context,

struct vmem_altmap *altmap)

{

unsigned long pfn, end_pfn = start_pfn + size;

struct page *page;

if (highest_memmap_pfn < end_pfn - 1)

highest_memmap_pfn = end_pfn - 1;

#ifdef CONFIG_ZONE_DEVICE

/* ZONE_DEVICE zones are not discussed here */

#endif

for (pfn = start_pfn; pfn < end_pfn; pfn++) {

...

page = pfn_to_page(pfn);

__init_single_page(page, pfn, zone, nid);

if (context == MEMMAP_HOTPLUG)

__SetPageReserved(page);

/*

* Mark the block movable so that blocks are reserved for

* movable at startup. This will force kernel allocations

* to reserve their blocks rather than leaking throughout

* the address space during boot when many long-lived

* kernel allocations are made.

* bitmap is created for zone's valid pfn range. but memmap

* can be created for invalid pages (for alignment)

* check here not to call set_pageblock_migratetype() against

* pfn out of zone.

*/

if (!(pfn & (pageblock_nr_pages - 1))) {

set_pageblock_migratetype(page, MIGRATE_MOVABLE);

cond_resched();

}

}

}
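The test `!(pfn & (pageblock_nr_pages - 1))` in the loop above is true exactly for the first page frame of each pageblock, so set_pageblock_migratetype runs once per block rather than once per page. A minimal user-space sketch of that check (the function name is hypothetical, and pageblock_order is passed in rather than taken from the kernel):

```c
#include <assert.h>

/*
 * Count the page frames in [start_pfn, end_pfn) that begin a pageblock,
 * using the same mask test as the loop in memmap_init_zone.
 */
unsigned long count_pageblock_starts(unsigned long start_pfn,
                                     unsigned long end_pfn,
                                     unsigned int pageblock_order)
{
    unsigned long pageblock_nr_pages = 1UL << pageblock_order;
    unsigned long n = 0;

    assert(start_pfn <= end_pfn);
    for (unsigned long pfn = start_pfn; pfn < end_pfn; pfn++) {
        if (!(pfn & (pageblock_nr_pages - 1)))
            n++;    /* pfn is a multiple of pageblock_nr_pages */
    }
    return n;
}
```

With pageblock_order 9, the range [0, 2048) contains four block starts (0, 512, 1024, 1536), while [513, 1024) contains none.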

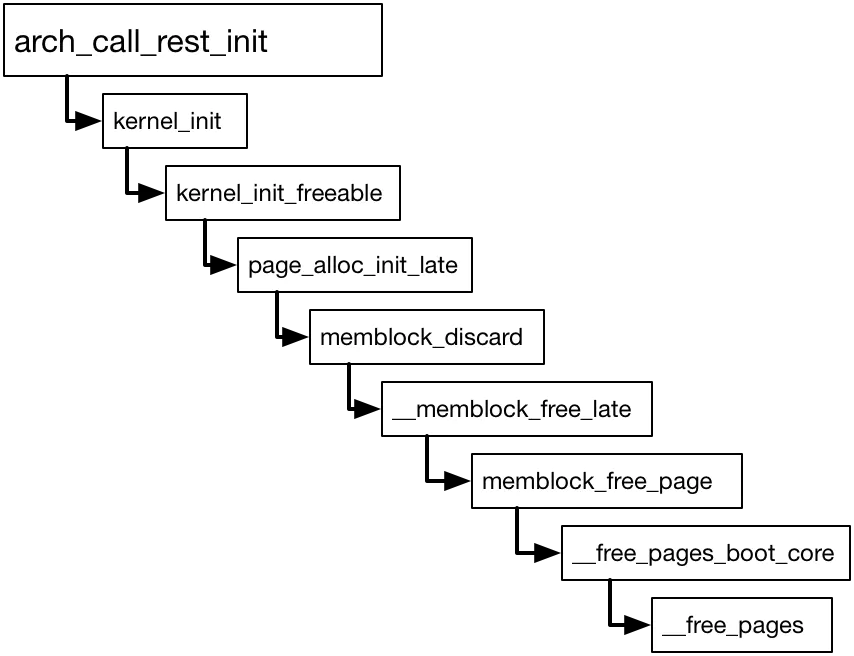

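At this point every struct page has been initialized and the buddy system's data structures are set up. As the code above shows, however, free_area->free_list[] is still an array of empty lists, so the buddy system is not yet fully initialized. As the previous article mentioned, the free lists are actually populated from arch_call_rest_init; the call graph is shown below: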

Buddy system free_list initialization

The key work of populating free_list is done by __free_pages, in other words by freeing pages. How it works is described in detail in the section on freeing memory.

Sample output showing the free blocks of each order for each migrate type in the buddy system:

[root@node1 vagrant]# cat /proc/pagetypeinfo

Page block order: 9

Pages per block: 512

Free pages count per migrate type at order 0 1 2 3 4 5 6 7 8 9 10

Node 0, zone DMA, type Unmovable 1 4 3 0 0 1 0 0 1 0 0

Node 0, zone DMA, type Reclaimable 0 0 0 1 0 1 1 1 1 1 0

Node 0, zone DMA, type Movable 5 2 0 3 1 0 1 1 1 0 1

Node 0, zone DMA, type Reserve 0 0 0 0 0 0 0 0 0 1 0

Node 0, zone DMA, type CMA 0 0 0 0 0 0 0 0 0 0 0

Node 0, zone DMA, type Isolate 0 0 0 0 0 0 0 0 0 0 0

Node 0, zone DMA32, type Unmovable 0 1 8 2 1 1 1 1 5 0 2

Node 0, zone DMA32, type Reclaimable 1 0 1 0 1 0 1 1 1 1 0

Node 0, zone DMA32, type Movable 0 0 0 0 1 33 20 7 1 0 290

Node 0, zone DMA32, type Reserve 0 0 0 0 0 0 0 0 0 0 1

Node 0, zone DMA32, type CMA 0 0 0 0 0 0 0 0 0 0 0

Node 0, zone DMA32, type Isolate 0 0 0 0 0 0 0 0 0 0 0

Number of blocks type Unmovable Reclaimable Movable Reserve CMA Isolate

Node 0, zone DMA 1 2 4 1 0 0

Node 0, zone DMA32 32 10 716 2 0 0

Memory allocation

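When allocating memory, the buddy system picks a zone to allocate from. If that zone has no memory available, it tries the next zone in the fallback zonelist.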

[root@node1 vagrant]# cat /proc/buddyinfo # number of free blocks of each order in each zone

Node 0, zone DMA 4 7 1 4 1 2 2 2 3 2 1

Node 0, zone DMA32 153 38 16 1 2 24 22 8 7 1 293
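Each column in this output is a count of free blocks, not pages, so the number of free pages in a zone is the sum of count << order over all orders. A short sketch of that calculation, with a hypothetical helper name:

```c
#include <assert.h>

/*
 * Sum the free pages described by one /proc/buddyinfo row.
 * nr_free[order] is the number of free blocks of that order.
 */
unsigned long free_pages_from_counts(const unsigned long *nr_free,
                                     unsigned int nr_orders)
{
    unsigned long pages = 0;

    assert(nr_free != 0);
    for (unsigned int order = 0; order < nr_orders; order++)
        pages += nr_free[order] << order;   /* each block holds 2^order pages */
    return pages;
}
```

Applied to the DMA row above (4 7 1 4 1 2 2 2 3 2 1), the sum is 3334 pages, roughly 13 MiB with 4 KB pages. The DMA32 row works out to 305869 pages, almost all of it in the 293 order-10 blocks.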

Call flow:

alloc_pages => alloc_pages_node => __alloc_pages_node => __alloc_pages => __alloc_pages_nodemask => (get_page_from_freelist or __alloc_pages_slowpath /* reclaims memory first, then retries the allocation */ )

static struct page *

get_page_from_freelist(gfp_t gfp_mask, unsigned int order, int alloc_flags,

const struct alloc_context *ac)

{

struct zoneref *z; /* cursor into the fallback zonelist */

...

for_next_zone_zonelist_nodemask(zone, z, ac->zonelist, ac->high_zoneidx, ac->nodemask) { /* walk the fallback zonelist until a suitable block of memory is found */

struct page *page;

unsigned long mark;

if (cpusets_enabled() &&

(alloc_flags & ALLOC_CPUSET) &&

!__cpuset_zone_allowed(zone, gfp_mask))

continue;

try_this_zone:

/* rmqueue does the main work of taking a suitable memory area */

page = rmqueue(ac->preferred_zoneref->zone, zone, order,

gfp_mask, alloc_flags, ac->migratetype);

...

}

/*

* It's possible on a UMA machine to get through all zones that are

* fragmented. If avoiding fragmentation, reset and try again.

*/

...

return NULL;

}

static inline

struct page *rmqueue(struct zone *preferred_zone,

struct zone *zone, unsigned int order,

gfp_t gfp_flags, unsigned int alloc_flags,

int migratetype)

{

unsigned long flags;

struct page *page;

if (likely(order == 0)) {

/* take the page from the per-CPU page cache */

page = rmqueue_pcplist(preferred_zone, zone, order,

gfp_flags, migratetype, alloc_flags);

goto out;

}

/*

* We most definitely don't want callers attempting to

* allocate greater than order-1 page units with __GFP_NOFAIL.

*/

WARN_ON_ONCE((gfp_flags & __GFP_NOFAIL) && (order > 1));

spin_lock_irqsave(&zone->lock, flags);

do {

page = NULL;

if (alloc_flags & ALLOC_HARDER) { /* relaxed limits: try the MIGRATE_HIGHATOMIC reserve first */

page = __rmqueue_smallest(zone, order, MIGRATE_HIGHATOMIC);

if (page)

trace_mm_page_alloc_zone_locked(page, order, migratetype);

}

if (!page)

page = __rmqueue(zone, order, migratetype, alloc_flags);

} while (page && check_new_pages(page, order));

spin_unlock(&zone->lock);

if (!page)

goto failed;

...

}

static __always_inline struct page *

__rmqueue(struct zone *zone, unsigned int order, int migratetype,

unsigned int alloc_flags)

{

struct page *page;

retry:

page = __rmqueue_smallest(zone, order, migratetype);

if (unlikely(!page)) {

if (migratetype == MIGRATE_MOVABLE) /* fall back to the CMA free lists */

page = __rmqueue_cma_fallback(zone, order);

if (!page && __rmqueue_fallback(zone, order, migratetype,

alloc_flags))

goto retry;

}

trace_mm_page_alloc_zone_locked(page, order, migratetype);

return page;

}

/*

* Go through the free lists for the given migratetype and remove

* the smallest available page from the freelists

*/

static __always_inline

struct page *__rmqueue_smallest(struct zone *zone, unsigned int order,

int migratetype)

{

unsigned int current_order;

struct free_area *area;

struct page *page;

/* Find a page of the appropriate size in the preferred list */

for (current_order = order; current_order < MAX_ORDER; ++current_order) {

area = &(zone->free_area[current_order]);

page = list_first_entry_or_null(&area->free_list[migratetype],

struct page, lru);

if (!page)

continue;

list_del(&page->lru);

rmv_page_order(page);

area->nr_free--;

expand(zone, page, order, current_order, area, migratetype); /* if the block found is of a higher order than requested (current_order > order), split it down */

set_pcppage_migratetype(page, migratetype);

return page;

}

return NULL;

}

static inline void expand(struct zone *zone, struct page *page,

int low, int high, struct free_area *area,

int migratetype)

{

unsigned long size = 1 << high;

while (high > low) {

area--;

high--;

size >>= 1;

VM_BUG_ON_PAGE(bad_range(zone, &page[size]), &page[size]);

/*

* Mark as guard pages (or page), that will allow to

* merge back to allocator when buddy will be freed.

* Corresponding page table entries will not be touched,

* pages will stay not present in virtual address space

*/

if (set_page_guard(zone, &page[size], high, migratetype)) /* with debug guard pages enabled, mark the buddy as a guard page of order high instead of adding it to the free list */

continue;

list_add(&page[size].lru, &area->free_list[migratetype]);

area->nr_free++;

set_page_order(&page[size], high);

}

}
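The splitting loop in expand is easier to follow with concrete numbers. The sketch below is a user-space model, not kernel code: it drops the zone, the free lists and the guard-page case, and only records which page offset inside the original block is handed back at each order. `expand_sketch` and `leftover_offset` are hypothetical names.

```c
#include <assert.h>

#define MAX_ORDER 11   /* assumption: orders 0..10 */

/*
 * Split a free block of order `high` (starting at page offset 0) down to
 * order `low`. For each order o with low <= o < high, leftover_offset[o]
 * receives the page offset of the upper half that goes back on the
 * order-o free list. Returns the number of halves handed back.
 */
int expand_sketch(int low, int high, unsigned long leftover_offset[MAX_ORDER])
{
    unsigned long size = 1UL << high;
    int handed_back = 0;

    assert(low >= 0 && low <= high && high < MAX_ORDER);
    while (high > low) {
        high--;
        size >>= 1;
        leftover_offset[high] = size;   /* same role as &page[size] in expand */
        handed_back++;
    }
    return handed_back;
}
```

Serving an order-0 request from an order-3 block (8 pages) hands back three halves: pages 4..7 as an order-2 block, pages 2..3 as an order-1 block, and page 1 as an order-0 block. Page 0 is what the caller receives.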

Freeing memory

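There are many entry points for freeing memory, such as free_pcppages_bulk, which returns a given number of pages from the per-CPU (hot page) lists to the buddy system; free_one_page, which frees one page; free_one_pages, which frees several; and so on. All of them end up calling __free_one_page to do the actual freeing. The code is as follows: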

/*

* Freeing function for a buddy system allocator.

* Author's summary: the buddy system tracks free blocks per order. A free

* block of order k is 2^k contiguous pages, and its order is stored in

* page_private(page), i.e. page->private. Allocation splits a higher-order

* block when the requested order is empty; freeing merges a block with its

* buddy when the buddy is also free. The original kernel comment follows.

* The concept of a buddy system is to maintain direct-mapped table

* (containing bit values) for memory blocks of various "orders".

* The bottom level table contains the map for the smallest allocatable

* units of memory (here, pages), and each level above it describes

* pairs of units from the levels below, hence, "buddies".

* At a high level, all that happens here is marking the table entry

* at the bottom level available, and propagating the changes upward

* as necessary, plus some accounting needed to play nicely with other

* parts of the VM system.

* At each level, we keep a list of pages, which are heads of continuous

* free pages of length of (1 << order) and marked with PageBuddy.

* Page's order is recorded in page_private(page) field.

* So when we are allocating or freeing one, we can derive the state of the

* other. That is, if we allocate a small block, and both were

* free, the remainder of the region must be split into blocks.

* If a block is freed, and its buddy is also free, then this

* triggers coalescing into a block of larger size.

*

* -- nyc

*/

static inline void __free_one_page(struct page *page,

unsigned long pfn,

struct zone *zone, unsigned int order,

int migratetype)

{

unsigned long combined_pfn;

unsigned long uninitialized_var(buddy_pfn);

struct page *buddy;

unsigned int max_order;

max_order = min_t(unsigned int, MAX_ORDER, pageblock_order + 1);

continue_merging:

while (order < max_order - 1) {

buddy_pfn = __find_buddy_pfn(pfn, order); /* pfn of the first page of the buddy block */

buddy = page + (buddy_pfn - pfn); /* struct page of the buddy */

if (!pfn_valid_within(buddy_pfn))

goto done_merging;

if (!page_is_buddy(page, buddy, order))

goto done_merging;

/*

* Our buddy is free or it is CONFIG_DEBUG_PAGEALLOC guard page,

* merge with it and move up one order.

*/

if (page_is_guard(buddy)) {

clear_page_guard(zone, buddy, order, migratetype); /* clear the CONFIG_DEBUG_PAGEALLOC guard mark */

} else {

list_del(&buddy->lru);

zone->free_area[order].nr_free--;

rmv_page_order(buddy); /* clear PageBuddy and reset the buddy's order to 0 */

}

combined_pfn = buddy_pfn & pfn;

page = page + (combined_pfn - pfn);

pfn = combined_pfn;

order++;

}

if (max_order < MAX_ORDER) {

/* If we are here, it means order is >= pageblock_order.

* We want to prevent merge between freepages on isolate

* pageblock and normal pageblock. Without this, pageblock

* isolation could cause incorrect freepage or CMA accounting.

* We don't want to hit this code for the more frequent

* low-order merging.

*/

if (unlikely(has_isolate_pageblock(zone))) {

int buddy_mt;

buddy_pfn = __find_buddy_pfn(pfn, order); /*page_pfn ^ (1 << order);*/

buddy = page + (buddy_pfn - pfn);

buddy_mt = get_pageblock_migratetype(buddy);

if (migratetype != buddy_mt

&& (is_migrate_isolate(migratetype) ||

is_migrate_isolate(buddy_mt)))

goto done_merging;

}

max_order++;

goto continue_merging;

}

done_merging:

set_page_order(page, order);

/*

* If this is not the largest possible page, check if the buddy

* of the next-highest order is free. If it is, it's possible

* that pages are being freed that will coalesce soon. In case,

* that is happening, add the free page to the tail of the list

* so it's less likely to be used soon and more likely to be merged

* as a higher order page

*/

if ((order < MAX_ORDER-2) && pfn_valid_within(buddy_pfn)) {

struct page *higher_page, *higher_buddy;

combined_pfn = buddy_pfn & pfn;

higher_page = page + (combined_pfn - pfn);

buddy_pfn = __find_buddy_pfn(combined_pfn, order + 1);

higher_buddy = higher_page + (buddy_pfn - combined_pfn);

if (pfn_valid_within(buddy_pfn) &&

page_is_buddy(higher_page, higher_buddy, order + 1)) {

list_add_tail(&page->lru,

&zone->free_area[order].free_list[migratetype]);

goto out;

}

}

list_add(&page->lru, &zone->free_area[order].free_list[migratetype]);

out:

zone->free_area[order].nr_free++;

}

/*

* This function checks whether a page is free && is the buddy

* we can coalesce a page and its buddy if

* (a) the buddy is not in a hole (check before calling!) &&

* (b) the buddy is in the buddy system &&

* (c) a page and its buddy have the same order &&

* (d) a page and its buddy are in the same zone.

* For recording whether a page is in the buddy system, we set PageBuddy.

* Setting, clearing, and testing PageBuddy is serialized by zone->lock.

* For recording page's order, we use page_private(page).

*/

static inline int page_is_buddy(struct page *page, struct page *buddy,

unsigned int order)

{

if (page_is_guard(buddy) && page_order(buddy) == order) {

if (page_zone_id(page) != page_zone_id(buddy))

return 0;

return 1;

}

if (PageBuddy(buddy) && page_order(buddy) == order) {

if (page_zone_id(page) != page_zone_id(buddy))

return 0;

return 1;

}

return 0;

}

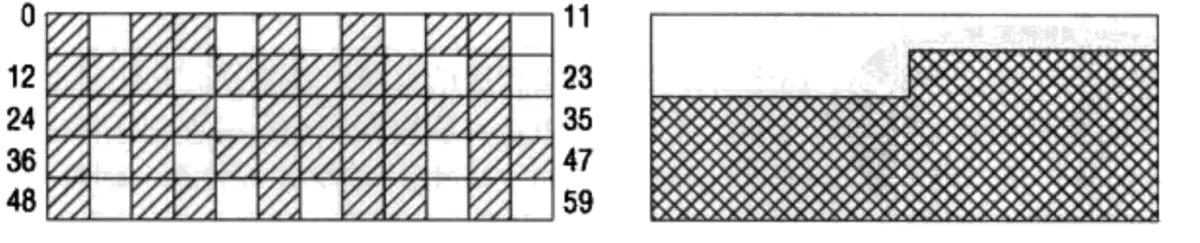

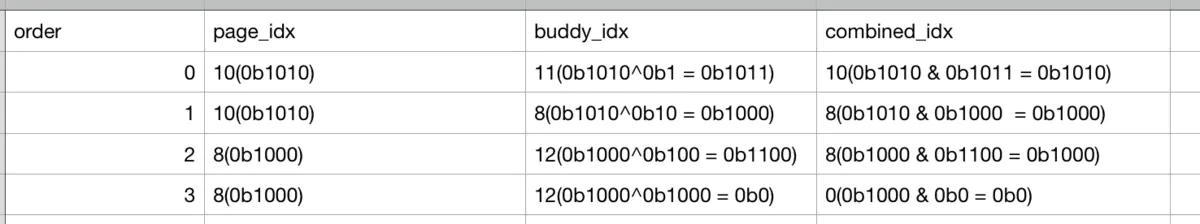

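The buddy calculation here is a little hard to follow: buddy = page_pfn ^ (1 << order); combined = page_pfn & buddy. Professional Linux Kernel Architecture (深入Linux内核架构) has a nice example: suppose an order-0 block is freed whose page index is 10, with MAX_ORDER equal to 4. The table below shows the calculations involved: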

Buddy calculation

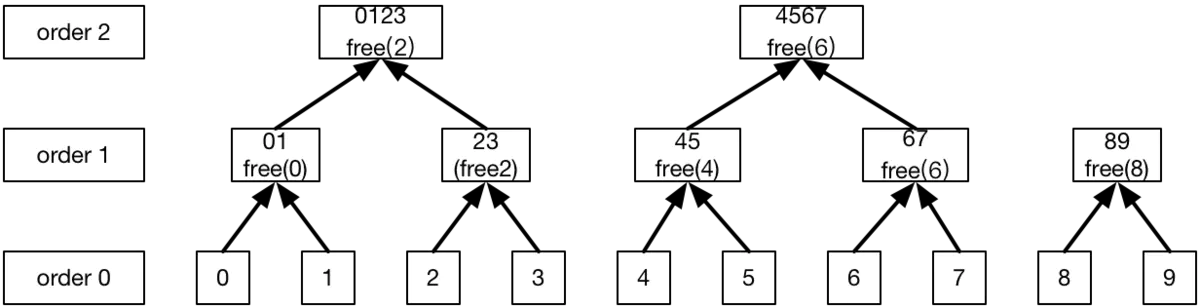
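The two formulas can be checked directly with a few lines of C. The function names below are illustrative and simply restate the XOR and AND from the text; they are not the kernel's own helpers.

```c
#include <assert.h>

/* Buddy of the block starting at pfn: flip bit `order` of the pfn. */
unsigned long buddy_of(unsigned long pfn, unsigned int order)
{
    assert(order < 8 * sizeof(unsigned long));
    return pfn ^ (1UL << order);
}

/* Start of the merged block: the lower of the two start pfns. */
unsigned long merged_start(unsigned long pfn, unsigned int order)
{
    return pfn & buddy_of(pfn, order);
}
```

Starting from page 10 and merging upward: at order 0 the buddy is 11 and the merged block starts at 10; at order 1 the buddy of 10 is 8 and the merged block starts at 8; at order 2 the buddy of 8 is 12 and the merged block still starts at 8. Because the buddy differs from the block only in bit `order`, the AND clears that bit and always yields the lower of the two start addresses.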

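Having read the code above, recall from the previous article that the kernel populates free_area->free_list through __free_pages_boot_core and ultimately __free_pages. Following the calls shows that __free_pages eventually reaches __free_one_page. But how can __free_one_page on its own build the entire free_list? It also relies on page->private throughout, yet nothing we have seen initializes that field. You may well be wondering the same thing.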

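The key to this question is that page->private starts out as 0. Before __free_pages_boot_core runs, every page's private field is 0, so every page counts as a lowest-order page. When a page is freed through __free_one_page and its buddy is already free with the same order (PageBuddy is set and page_order(buddy) == order), the two pages are merged into an order-1 block. Repeating this merges pages upward step by step until all of them sit in blocks of the highest order, MAX_ORDER - 1 (apart from the leftover pages). See the following code: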

void __init __memblock_free_late(phys_addr_t base, phys_addr_t size)

{

phys_addr_t cursor, end;

end = base + size - 1;

memblock_dbg("%s: [%pa-%pa] %pF\n",

__func__, &base, &end, (void *)_RET_IP_);

kmemleak_free_part_phys(base, size);

cursor = PFN_UP(base);

end = PFN_DOWN(base + size);

for (; cursor < end; cursor++) { /* free every page in the range */

memblock_free_pages(pfn_to_page(cursor), cursor, 0);

totalram_pages_inc();

}

}

Let us draw the merging for an example with 10 pages of memory and MAX_ORDER equal to 3:

Buddy system initialization diagram
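The same 10-page, MAX_ORDER 3 example can be simulated in user space. This is a toy model under stated assumptions: two small arrays stand in for PageBuddy and page->private, a bounds check stands in for pfn_valid_within, and pages are freed one at a time in ascending order. All names are hypothetical.

```c
#include <assert.h>
#include <string.h>

#define TOY_MAX_ORDER 3    /* orders 0..2, as in the example */
#define TOY_NR_PAGES  10

struct toy_zone {
    unsigned char buddy[TOY_NR_PAGES];    /* 1 if pfn heads a free block */
    unsigned char order[TOY_NR_PAGES];    /* order of that block */
    unsigned int nr_free[TOY_MAX_ORDER];  /* free blocks per order */
};

/* Free one order-0 page and merge upward while the buddy is free. */
void toy_free_one_page(struct toy_zone *z, unsigned long pfn)
{
    unsigned int order = 0;

    assert(pfn < TOY_NR_PAGES);
    while (order < TOY_MAX_ORDER - 1) {
        unsigned long buddy_pfn = pfn ^ (1UL << order);

        if (buddy_pfn >= TOY_NR_PAGES)      /* buddy lies outside memory */
            break;
        if (!z->buddy[buddy_pfn] || z->order[buddy_pfn] != order)
            break;                          /* buddy busy or of another order */
        z->buddy[buddy_pfn] = 0;            /* take the buddy off its list */
        z->order[buddy_pfn] = 0;
        z->nr_free[order]--;
        pfn &= buddy_pfn;                   /* start of the merged block */
        order++;
    }
    z->buddy[pfn] = 1;
    z->order[pfn] = (unsigned char)order;
    z->nr_free[order]++;
}

/* Start from all-zero state (every private == 0) and free every page. */
void toy_boot_free_all(struct toy_zone *z)
{
    memset(z, 0, sizeof(*z));
    for (unsigned long pfn = 0; pfn < TOY_NR_PAGES; pfn++)
        toy_free_one_page(z, pfn);
}
```

After `toy_boot_free_all`, the model holds two order-2 blocks (pages 0..3 and 4..7) and one order-1 block (pages 8..9), with nothing left at order 0. Pages 8 and 9 are the "leftover" pages: their order-1 block has no valid buddy, so it cannot be promoted further.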

With that, we have a fairly deep understanding of the buddy system.

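Author: 淡泊宁静_3652

Link: https://www.jianshu.com/p/af0ed7de9f04

Source: 简书 (Jianshu)

Copyright belongs to the author. For commercial reprints, contact the author for authorization; for non-commercial reprints, cite the source.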

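This article takes an in-depth look at the buddy system in the Linux kernel: its role in physical memory management, the basic principles of allocating and freeing memory, and how fragmentation is avoided. It covers how memory areas are divided and managed, how the different page types limit fragmentation, and how the kernel falls back between types when allocating.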
