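Requirements

Visits to the hands-on courses from the start of today up to now

Visits to the hands-on courses referred from search engines, from the start of today up to now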

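Overview of internet access logs

Why record user access logs

1) Page views of the website

2) Stickiness of the website

3) Recommendations

Contents of user behavior logs

Why user behavior log analysis matters

The eyes of the website

The nerves of the website

The brain of the website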

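Developing the Python log generator: generating access URLs and IP information

Use a Python script to generate data in real time

Developing the real-time Python log generator

Create generate_log.py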

```python
# coding=UTF-8
import random

# URL paths of the course pages to sample from
url_paths = [
    "class/112.html",
    "class/128.html",
    "class/145.html",
    "class/146.html",
    "class/131.html",
    "class/130.html",
    "learn/821",
    "course/list",
]

# Numbers used later to assemble random IP addresses
ip_slices = [132, 156, 124, 10, 29, 167, 143, 187, 30, 46, 55, 63, 72, 87, 98, 168]


def sample_url():
    # Pick one URL path at random
    return random.sample(url_paths, 1)[0]


def generate_log(count=10):
    # Print `count` lines, one sampled URL per line
    while count >= 1:
        query_log = "{url}".format(url=sample_url())
        print(query_log)
        count = count - 1


if __name__ == '__main__':
    generate_log()
```

Feature development and running locally

[hadoop@hadoop000 logs]$ tail -200f access.log

```python
query_log = "{ip}\t{local_time}\t{url}\t{status_code}\t{referer}".format(
    url=sample_url(), ip=sample_ip(), referer=sample_referer(),
    status_code=sample_status_code(), local_time=time_str)
```
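The format line relies on sample_ip, sample_referer, sample_status_code and time_str, whose bodies these notes do not show. The sketch below fills them in under stated assumptions, modeled on the cleaned sample output further down: an IP is four random picks from ip_slices, status codes are 200/404/500, about 20% of hits carry a so.com or sogou.com referer (the rest use "-"), and time_str uses the "yyyy-MM-dd HH:mm:ss" layout. The optional path argument (also an assumption) appends to a file such as access.log so that tail -F can pick up new lines.

```python
# coding=UTF-8
import random
import time

url_paths = [
    "class/112.html", "class/128.html", "class/145.html", "class/146.html",
    "class/131.html", "class/130.html", "learn/821", "course/list",
]
ip_slices = [132, 156, 124, 10, 29, 167, 143, 187, 30, 46, 55, 63, 72, 87, 98, 168]

# Assumed referer templates, keywords and status codes (modeled on the sample output)
http_referers = [
    "https://www.so.com/s?q={query}",
    "https://www.sogou.com/web?query={query}",
]
search_keywords = ["spark streaming实战"]
status_codes = ["200", "404", "500"]


def sample_url():
    return random.sample(url_paths, 1)[0]


def sample_ip():
    # Assumed: four distinct picks from ip_slices joined with dots
    return ".".join(str(n) for n in random.sample(ip_slices, 4))


def sample_referer():
    # Assumed: roughly 80% of hits have no referer, written as "-"
    if random.uniform(0, 1) > 0.2:
        return "-"
    return random.choice(http_referers).format(query=random.choice(search_keywords))


def sample_status_code():
    return random.choice(status_codes)


def generate_log(count=10, path=None):
    # Assumed timestamp layout; one timestamp is shared by the whole run
    time_str = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime())
    lines = []
    for _ in range(count):
        query_log = "{ip}\t{local_time}\t{url}\t{status_code}\t{referer}".format(
            url=sample_url(), ip=sample_ip(), referer=sample_referer(),
            status_code=sample_status_code(), local_time=time_str)
        lines.append(query_log)
    if path is None:
        print("\n".join(lines))
    else:
        # Append so that a tail -F reader sees only the new lines
        with open(path, "a", encoding="utf-8") as f:
            f.write("\n".join(lines) + "\n")
    return lines


if __name__ == "__main__":
    generate_log()
```

Each run stamps all lines with the same time, which matches the sample output below where one batch shares a single timestamp.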

Linux crontab

Website for building crontab expressions: http://tool.lu/crontab

Crontab expression for running once every minute: */1 * * * *

vi log_generator.sh

crontab -e

*/1 * * * * /home/hadoop/data/project/log_generator.sh

[hadoop@hadoop000 ~]$ sudo find / -name 'mysql-libs-5.1.73-8.el6*'

/var/cache/yum/x86_64/6/base/packages/mysql-libs-5.1.73-8.el6_8.x86_64.rpm

Error: installing crontab conflicts with the MySQL already installed on this machine

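Connecting the Flume & Kafka & Spark Streaming pipeline

Feeding the logs written by the Python log generator into Flume

streaming_project.conf

Component choice: access.log ==> console output

exec (source)

memory (channel)

logger (sink)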

```properties
# Name the components of agent exec-memory-logger
exec-memory-logger.sources = exec-source
exec-memory-logger.sinks = logger-sink
exec-memory-logger.channels = memory-channel

# Exec source: follow access.log
exec-memory-logger.sources.exec-source.type = exec
exec-memory-logger.sources.exec-source.command = tail -F /home/hadoop/data/project/logs/access.log
exec-memory-logger.sources.exec-source.shell = /bin/sh -c

exec-memory-logger.channels.memory-channel.type = memory

# Logger sink: print events to the console
exec-memory-logger.sinks.logger-sink.type = logger

# Bind source and sink to the channel
exec-memory-logger.sources.exec-source.channels = memory-channel
exec-memory-logger.sinks.logger-sink.channel = memory-channel
```

Start:

```shell
flume-ng agent \
--name exec-memory-logger \
--conf $FLUME_HOME/conf \
--conf-file /home/hadoop/data/project/streaming_project.conf \
-Dflume.root.logger=INFO,console
```

Logs ==> Flume ==> Kafka

Start ZooKeeper: ./zkServer.sh start

Start the Kafka server: [hadoop@hadoop000 bin]$ ./kafka-server-start.sh -daemon /home/hadoop/app/kafka_2.11-0.9.0.0/config/server.properties

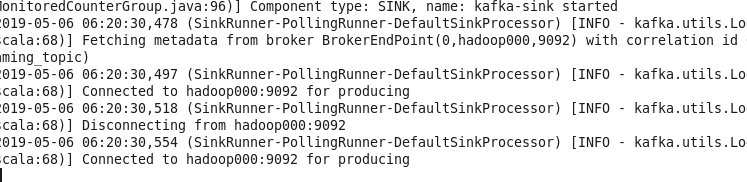

Modify the Flume configuration file so that Flume sinks to Kafka

streaming_project2.conf

```properties
exec-memory-kafka.sources = exec-source
exec-memory-kafka.sinks = kafka-sink
exec-memory-kafka.channels = memory-channel

exec-memory-kafka.sources.exec-source.type = exec
exec-memory-kafka.sources.exec-source.command = tail -F /home/hadoop/data/project/logs/access.log
exec-memory-kafka.sources.exec-source.shell = /bin/sh -c

exec-memory-kafka.channels.memory-channel.type = memory

# Kafka sink: the class name is case-sensitive (KafkaSink)
exec-memory-kafka.sinks.kafka-sink.type = org.apache.flume.sink.kafka.KafkaSink
exec-memory-kafka.sinks.kafka-sink.brokerList = hadoop000:9092
exec-memory-kafka.sinks.kafka-sink.topic = streaming_topic
exec-memory-kafka.sinks.kafka-sink.batchSize = 5
exec-memory-kafka.sinks.kafka-sink.requiredAcks = 1

exec-memory-kafka.sources.exec-source.channels = memory-channel
exec-memory-kafka.sinks.kafka-sink.channel = memory-channel
```

Start:

```shell
flume-ng agent \
--name exec-memory-kafka \
--conf $FLUME_HOME/conf \
--conf-file /home/hadoop/data/project/streaming_project2.conf \
-Dflume.root.logger=INFO,console
```

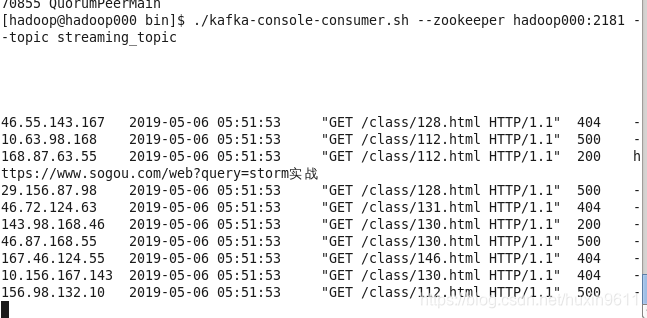

./kafka-console-consumer.sh --zookeeper hadoop000:2181 --topic streaming_topic

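Connecting the Flume & Kafka & Spark Streaming pipeline

Receive the data in the Spark application and count the records

Data cleaning

Clean the data produced in real time according to the requirements

Data cleaning: simply extract the fields we need from the raw log lines

The cleaned result looks like this: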

```text
ClickLog(72.143.168.30,20190506083207,130,404,-)
ClickLog(143.124.29.87,20190506083207,128,404,-)
ClickLog(143.10.63.55,20190506083207,130,200,-)
ClickLog(124.167.63.187,20190506083207,131,200,-)
ClickLog(143.124.98.168,20190506083207,130,404,-)
ClickLog(10.46.87.63,20190506083207,131,404,-)
ClickLog(30.10.98.124,20190506083207,112,500,-)
ClickLog(46.10.98.187,20190506083207,145,200,-)
ClickLog(55.167.143.168,20190506083207,112,404,https://www.so.com/s?q=spark streaming实战)
ClickLog(63.30.10.87,20190506083207,128,404,https://www.sogou.com/web?query=spark streaming实战)
```

After cleaning, the log contains only records for the hands-on courses.
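The project performs this step in Scala inside Spark Streaming; the Python sketch below only shows the per-line logic. It assumes the five tab-separated fields of the generator's format line, a raw time like "2019-05-06 08:32:07", and that hands-on course pages match class/<id>.html (so learn/821 and course/list are dropped). ClickLog is modeled as a namedtuple with the same field order as the sample output; the field names are illustrative.

```python
import re
from collections import namedtuple
from datetime import datetime

# Fields kept after cleaning, in the order of the sample ClickLog output
ClickLog = namedtuple("ClickLog", ["ip", "time", "course_id", "status_code", "referer"])

# Assumption: hands-on course pages look like "class/<id>.html"
COURSE_PATTERN = re.compile(r"class/(\d+)\.html")


def parse_time(raw):
    # Assumed raw layout "yyyy-MM-dd HH:mm:ss" -> "yyyyMMddHHmmss"
    return datetime.strptime(raw, "%Y-%m-%d %H:%M:%S").strftime("%Y%m%d%H%M%S")


def clean(line):
    """Return a ClickLog for a hands-on course hit, or None to drop the line."""
    fields = line.rstrip("\n").split("\t")
    if len(fields) != 5:
        return None  # malformed line
    ip, local_time, url, status_code, referer = fields
    match = COURSE_PATTERN.search(url)
    if match is None:
        return None  # not a hands-on course page
    return ClickLog(ip, parse_time(local_time), int(match.group(1)),
                    int(status_code), referer)
```

For example, clean("72.143.168.30\t2019-05-06 08:32:07\tclass/130.html\t404\t-") gives ClickLog(ip='72.143.168.30', time='20190506083207', course_id=130, status_code=404, referer='-'). Using search rather than a full match also tolerates a url field written as "GET /class/130.html HTTP/1.1".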

A side note: your machine should not be too under-powered, since it has to run Hadoop/zk/HBase/Spark Streaming/Flume/Kafka.

hadoop000: 8 cores, 8 GB of memory

**Feature 1: visits to the hands-on courses from the start of today up to now**

yyyyMMdd courseid

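Use a database to store the statistics

Spark Streaming writes the statistics into the database

The visualization front end looks up the statistics in the database by yyyyMMdd courseid and displays them

Which database should store the statistics?

RDBMS: MySQL, Oracle…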

| day | course_id | click_count |
|---|---|---|
| 20171111 | 1 | 10 |
| 20171111 | 1 | 10 |

When the next batch of data arrives:

20171111 + 1 ==> stored click_count + the next batch's count ==> write back to the database
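With a relational database the application has to read the stored count, add the new batch's count, and write the sum back. A minimal sketch of that read-modify-write cycle, using Python's built-in sqlite3 as a stand-in for MySQL; the table name course_clickcount and the helper names are hypothetical.

```python
import sqlite3


def create_table(conn):
    # One row per (day, course_id), as in the table above
    conn.execute(
        "CREATE TABLE IF NOT EXISTS course_clickcount ("
        " day TEXT NOT NULL, course_id INTEGER NOT NULL,"
        " click_count INTEGER NOT NULL, PRIMARY KEY (day, course_id))"
    )


def add_batch(conn, day, course_id, batch_count):
    """Read the stored count, add this batch's count, write the sum back."""
    with conn:  # commit on success, roll back on error
        row = conn.execute(
            "SELECT click_count FROM course_clickcount WHERE day = ? AND course_id = ?",
            (day, course_id),
        ).fetchone()
        if row is None:
            conn.execute(
                "INSERT INTO course_clickcount (day, course_id, click_count) VALUES (?, ?, ?)",
                (day, course_id, batch_count),
            )
        else:
            conn.execute(
                "UPDATE course_clickcount SET click_count = ? WHERE day = ? AND course_id = ?",
                (row[0] + batch_count, day, course_id),
            )
```

Calling add_batch(conn, "20171111", 1, 10) and then add_batch(conn, "20171111", 1, 5) leaves click_count at 15 for that row. Every batch costs a read plus a write per key, which is the overhead the notes contrast with HBase.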

NoSQL: HBase, Redis…

HBase: a single API call (a counter increment) does the job, which is very convenient

20171111 + 1 ==> click_count + the next batch's count
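With HBase the caller does not read the old value first: one increment call adds the batch count to the stored counter on the server (in the Java client, Table.incrementColumnValue(row, family, qualifier, amount) is such a call). The class below is a hypothetical in-memory stand-in that only mimics the shape of that call; it is not an HBase client, and the real atomic increment happens inside HBase.

```python
from collections import defaultdict


class CounterTable:
    """Hypothetical in-memory stand-in for an HBase table used as counters."""

    def __init__(self):
        # rowkey -> {"family:qualifier": value}
        self._rows = defaultdict(lambda: defaultdict(int))

    def increment(self, rowkey, column, amount):
        # One call, no separate read by the caller; returns the new value
        self._rows[rowkey][column] += amount
        return self._rows[rowkey][column]

    def get(self, rowkey, column):
        # Missing rows or columns read as 0 without being created
        return self._rows.get(rowkey, {}).get(column, 0)
```

For example, increment("20171111_1", "info:click_count", 10) returns 10, and a following increment with 5 returns 15.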

This is one of the reasons this course chose HBase. Prerequisites: HDFS, ZooKeeper, HBase

Start ZooKeeper: [hadoop@hadoop000 ~]$ ./zkServer.sh start


Start Hadoop (HDFS): [hadoop@hadoop000 sbin]$ ./start-dfs.sh

Start HBase: [hadoop@hadoop000 bin]$ ./start-hbase.sh

```text
[hadoop@hadoop000 bin]$ jps
78561 NameNode
78659 DataNode
70901 Kafka
70855 QuorumPeerMain
77913 SecondaryNameNode
79658 Jps
79100 HMaster
79244 HRegionServer
```

[hadoop@hadoop000 bin]$ ./hbase shell

Prerequisites: HDFS, ZooKeeper, HBase

HBase table design:

hbase(main):002:0> create 'imooc_course_clickcount', 'info'


hbase(main):005:0> describe 'imooc_course_clickcount'

Rowkey design:

day_courseid
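A small sketch of building the day_courseid rowkey from a cleaned record, assuming the cleaned time is yyyyMMddHHmmss as in the sample output and "_" as the separator. Because HBase stores rows sorted by rowkey, putting the day first keeps all courses of one day next to each other, so a prefix scan on "yyyyMMdd_" finds them; the list filter here only mimics the result of such a scan. Both function names are hypothetical.

```python
def course_rowkey(time_str, course_id):
    # time_str is the cleaned yyyyMMddHHmmss value; keep only yyyyMMdd
    return "{}_{}".format(time_str[:8], course_id)


def keys_for_day(sorted_rowkeys, day):
    # Mimics the result of a prefix scan on "yyyyMMdd_" over sorted rowkeys
    prefix = day + "_"
    return [k for k in sorted_rowkeys if k.startswith(prefix)]
```

For example, course_rowkey("20190506083207", 130) returns "20190506_130".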

How to operate HBase from Scala

Java + Scala interop: call the HBase Java client API from Scala code

Feature 1: write the Spark Streaming results into HBase
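Before writing to HBase, each micro-batch is reduced to one count per rowkey, which in Spark Streaming corresponds to mapping each record to (rowkey, 1) and then reduceByKey(_ + _). A plain-Python sketch of that aggregation over one batch, assuming records arrive as (time_str, course_id) pairs; each resulting (rowkey, count) is what would then be added to a counter column in the info family (the qualifier name is up to you).

```python
from collections import Counter


def batch_counts(click_logs):
    """Count one micro-batch per day_courseid rowkey.

    click_logs: iterable of (time_str, course_id), time_str as yyyyMMddHHmmss.
    Equivalent to map(rowkey -> 1) followed by reduceByKey(_ + _).
    """
    counts = Counter()
    for time_str, course_id in click_logs:
        counts["{}_{}".format(time_str[:8], course_id)] += 1
    return dict(counts)
```

Counting per batch first means HBase receives one increment per rowkey per batch instead of one per log line.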

Start Flume:

```shell
flume-ng agent \
--name exec-memory-kafka \
--conf $FLUME_HOME/conf \
--conf-file /home/hadoop/data/project/streaming_project2.conf \
-Dflume.root.logger=INFO,console
```

Start Kafka

Feature 2: visits to the hands-on courses referred from search engines, from the start of today up to now

HBase table design

create 'imooc_course_search_clickcount'

hbase(main):015:0> create 'imooc_course_search_clickcount', 'info'

0 row(s) in 3.2210 seconds

=> Hbase::Table - imooc_course_search_clickcount

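Rowkey design: also driven by the business requirements

20171111 + search engine + course ID

Fields needed: domain, course ID, time

Filter out records whose host is empty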
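A sketch of the search-engine rowkey, assuming the referer is either "-" or a full URL as in the cleaned samples, and "_" as the separator (a hypothetical choice, mirroring day_courseid). The host is taken with urllib.parse.urlparse; a "-" referer parses to an empty host, and such records are filtered out.

```python
from urllib.parse import urlparse


def search_rowkey(time_str, referer, course_id):
    """Return day_host_courseid, or None when the referer has no host."""
    host = urlparse(referer).netloc  # "-" (no referer) yields an empty host
    if not host:
        return None  # filter out records whose host is empty
    return "{}_{}_{}".format(time_str[:8], host, course_id)
```

For the sample record with referer https://www.so.com/s?q=spark streaming实战 and course 112, this gives "20190506_www.so.com_112".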

Clear the table data: hbase(main):019:0> truncate 'imooc_course_search_clickcount'

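Running in production

Package and build

Comment out setMaster, since in production the master is passed in through spark-submit:

```scala
val sparkConf = new SparkConf().setAppName("ImoocStatStreamingApp").setMaster("local[2]")
```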

mvn clean package -DskipTests

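This article describes how to process internet access logs with Spark Streaming: developing a Python log generator, passing the logs through Flume and Kafka, and using HBase to store and count visits. In practice it implements real-time counting of visits to the hands-on courses and discusses HBase table design and rowkey strategy.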
