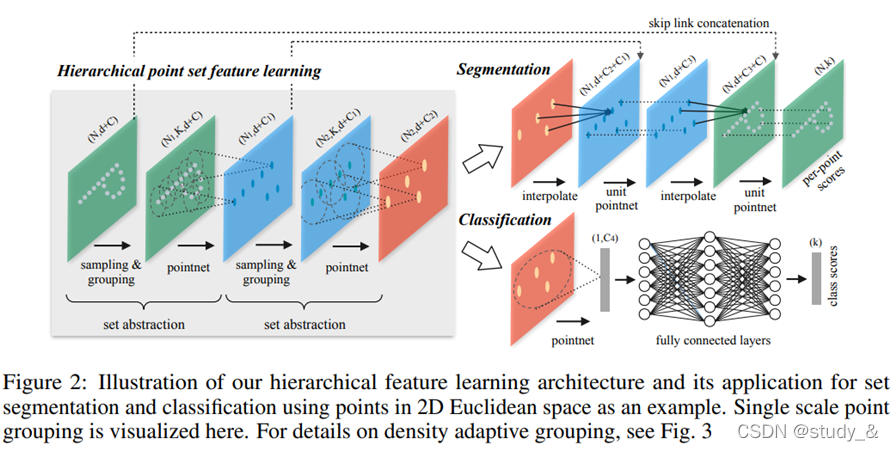

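1. PointNet++ segmentation task

Segmentation task

The segmentation network consists of three modules: Set Abstraction (SA) layers for feature extraction, Feature Propagation (FP) layers for propagating features back to denser point sets, and fully connected (FC) layers. The overall structure resembles a U-Net. The code for the whole segmentation network is as follows: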

    # Set Abstraction layers
    l1_xyz, l1_points, l1_indices = pointnet_sa_module(l0_xyz, l0_points, npoint=512, radius=0.2, nsample=64, mlp=[64,64,128], mlp2=None, group_all=False, is_training=is_training, bn_decay=bn_decay, scope='layer1')
    l2_xyz, l2_points, l2_indices = pointnet_sa_module(l1_xyz, l1_points, npoint=128, radius=0.4, nsample=64, mlp=[128,128,256], mlp2=None, group_all=False, is_training=is_training, bn_decay=bn_decay, scope='layer2')
    l3_xyz, l3_points, l3_indices = pointnet_sa_module(l2_xyz, l2_points, npoint=None, radius=None, nsample=None, mlp=[256,512,1024], mlp2=None, group_all=True, is_training=is_training, bn_decay=bn_decay, scope='layer3')

    # Feature Propagation layers
    l2_points = pointnet_fp_module(l2_xyz, l3_xyz, l2_points, l3_points, [256,256], is_training, bn_decay, scope='fa_layer1')
    l1_points = pointnet_fp_module(l1_xyz, l2_xyz, l1_points, l2_points, [256,128], is_training, bn_decay, scope='fa_layer2')
    l0_points = pointnet_fp_module(l0_xyz, l1_xyz, tf.concat([l0_xyz,l0_points],axis=-1), l1_points, [128,128,128], is_training, bn_decay, scope='fa_layer3')

    # FC layers
    net = tf_util.conv1d(l0_points, 128, 1, padding='VALID', bn=True, is_training=is_training, scope='fc1', bn_decay=bn_decay)
    end_points['feats'] = net
    net = tf_util.dropout(net, keep_prob=0.5, is_training=is_training, scope='dp1')
    net = tf_util.conv1d(net, 50, 1, padding='VALID', activation_fn=None, scope='fc2')
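To make the U-Net-like data flow concrete, the sketch below traces the number of points and the channel width through the layers above in plain Python. It is bookkeeping only, with no TensorFlow involved. The input size `N = 2048` and the 3 extra channels in `l0_points` are assumptions chosen for illustration, and the helpers `sa` and `fp` are hypothetical names, not part of the PointNet++ code.

```python
# Shape bookkeeping only: no TensorFlow, no real features.
# ASSUMPTIONS: N = 2048 input points, l0_points carries 3 channels.
N, C0 = 2048, 3

def sa(npoint, mlp):
    """Set Abstraction: keeps npoint centers, each with mlp[-1] channels."""
    return (npoint, mlp[-1])

def fp(dense, sparse, mlp):
    """Feature Propagation: interpolate sparse features onto the dense points,
    concatenate them with the dense skip features, then apply the MLP.
    Returns the output shape and the channel count right after the concat."""
    n_dense, c_dense = dense
    _, c_sparse = sparse
    return (n_dense, mlp[-1]), c_dense + c_sparse

# Encoder (downsampling)
l1 = sa(512, [64, 64, 128])
l2 = sa(128, [128, 128, 256])
l3 = sa(1, [256, 512, 1024])   # group_all=True -> a single global point

# Decoder (upsampling with skip connections)
up2, cat2 = fp(l2, l3, [256, 256])
up1, cat1 = fp(l1, up2, [256, 128])
# The last skip input is concat([l0_xyz, l0_points]) -> 3 + C0 channels
up0, cat0 = fp((N, 3 + C0), up1, [128, 128, 128])

for name, shape in [("l1", l1), ("l2", l2), ("l3", l3),
                    ("up2", up2), ("up1", up1), ("up0", up0)]:
    print(name, shape)
print("channels after concat:", cat2, cat1, cat0)
```

Under these assumptions the point count goes 2048, 512, 128, 1 on the way down and 128, 512, 2048 on the way back up, so the final `conv1d` layers produce one 50-way score vector per input point.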

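- SA module (feature extraction): downsampling. The input is (N, D), meaning N points with D-dimensional features; the output is (N', D'), meaning N' downsampled points with D'-dimensional features. Farthest point sampling selects the N' center points, and a PointNet applied to the neighborhood of each center produces its D'-dimensional feature. This was covered with the classification network earlier, so it is not repeated here.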
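The farthest point sampling step mentioned above can be sketched in a few lines of pure Python. This is a minimal illustration of the greedy idea, not the implementation used by `pointnet_sa_module`; the function name `farthest_point_sampling` and the fixed start index are assumptions made for the example.

```python
import math

def farthest_point_sampling(points, npoint, start=0):
    """Greedily pick npoint indices so that each new point is the one
    farthest from all points picked so far."""
    n = len(points)
    if not 0 < npoint <= n:
        raise ValueError("npoint must be between 1 and len(points)")
    chosen = [start]  # ASSUMPTION: start from a fixed index
    # min_dist[i] = distance from point i to its nearest chosen center
    min_dist = [math.dist(p, points[start]) for p in points]
    while len(chosen) < npoint:
        nxt = max(range(n), key=min_dist.__getitem__)
        chosen.append(nxt)
        # Refresh nearest-center distances with the newly added center
        for i, p in enumerate(points):
            d = math.dist(p, points[nxt])
            if d < min_dist[i]:
                min_dist[i] = d
    return chosen

pts = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (1.0, 0.0, 0.0),
       (0.0, 2.0, 0.0), (0.5, 0.5, 0.0)]
centers = farthest_point_sampling(pts, 3)
print(centers)  # [0, 3, 2]
```

The point at index 1 sits almost on top of index 0, so it is never chosen: the sampler spreads the centers over the extent of the cloud instead of following point density.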

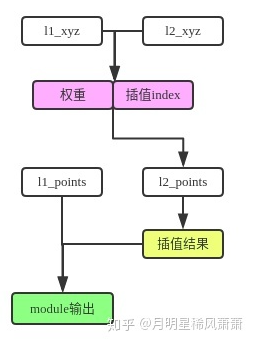

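- FP module (feature propagation): upsampling.

It uses inverse-distance-weighted interpolation, where the reciprocal of the distance serves as the weight. The interpolation takes (N, D) as input and outputs (N', D), so the feature dimension stays unchanged.

White marks the input and green marks the output.

The code for the FP module is as follows: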

    def pointnet_fp_module(xyz1, xyz2, points1, points2, mlp, is_training, bn_decay, scope, bn=True):
        ''' PointNet Feature Propagation (FP) Module
            Input:
                xyz1: (batch_size, ndataset1, 3) TF tensor
                xyz2: (batch_size, ndataset2, 3) TF tensor, sparser than xyz1
                points1: (batch_size, ndataset1, nchannel1) TF tensor
                points2: (batch_size, ndataset2, nchannel2) TF tensor
                mlp: list of int32 -- output size for MLP on each point
            Return:
                new_points: (batch_size, ndataset1, mlp[-1]) TF tensor
        '''
        with tf.variable_scope(scope) as sc:
            # For every point in xyz1, find its three nearest neighbors in xyz2
            dist, idx = three_nn(xyz1, xyz2)
            # Clamp to avoid division by zero when points coincide
            dist = tf.maximum(dist, 1e-10)
            # Normalize the inverse distances so the three weights sum to 1
            norm = tf.reduce_sum((1.0/dist),axis=2,keep_dims=True)
            norm = tf.tile(norm,[1,1,3])
            weight = (1.0/dist) / norm # weight is the normalized inverse of distance
            # interpolate
            interpolated_points = three_interpolate(points2, idx, weight)

            # Skip connection: concatenate with the features of the dense level
            if points1 is not None:
                new_points1 = tf.concat(axis=2, values=[interpolated_points, points1]) # B,ndataset1,nchannel1+nchannel2
            else:
                new_points1 = interpolated_points
            # Shared per-point MLP implemented as 1x1 convolutions
            new_points1 = tf.expand_dims(new_points1, 2)
            for i, num_out_channel in enumerate(mlp):
                new_points1 = tf_util.conv2d(new_points1, num_out_channel, [1,1],
                                             padding='VALID', stride=[1,1],
                                             bn=bn, is_training=is_training,
                                             scope='conv_%d'%(i), bn_decay=bn_decay)
            new_points1 = tf.squeeze(new_points1, [2]) # B,ndataset1,mlp[-1]
            return new_points1
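The interpolation at the start of `pointnet_fp_module` can be reproduced without TensorFlow to check the weighting by hand. The sketch below mirrors the clamp, the normalized inverse-distance weights, and the three-nearest-neighbor lookup in pure Python for a single (unbatched) point cloud. The function name `three_nn_interpolate` is hypothetical, and using plain Euclidean distance is an assumption; the compiled `three_nn` op may define `dist` differently.

```python
import math

def three_nn_interpolate(xyz1, xyz2, feats2, eps=1e-10):
    """Interpolate features from the sparse set (xyz2, feats2) onto the dense
    points xyz1, using inverse-distance weights over the (up to) three
    nearest sparse points."""
    dim = len(feats2[0])
    out = []
    for p in xyz1:
        # (clamped distance, index) of the three nearest sparse points
        # ASSUMPTION: Euclidean distance
        nearest = sorted((max(math.dist(p, q), eps), j)
                         for j, q in enumerate(xyz2))[:3]
        inv = [1.0 / d for d, _ in nearest]
        norm = sum(inv)
        weights = [w / norm for w in inv]  # weights sum to 1
        out.append([sum(w * feats2[j][c] for w, (_, j) in zip(weights, nearest))
                    for c in range(dim)])
    return out

# Three sparse points with 2-D features, four dense query points
xyz2 = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
feats2 = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
xyz1 = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (0.5, 0.5, 0.0), (1.0, 1.0, 0.0)]

up = three_nn_interpolate(xyz1, xyz2, feats2)
for p, f in zip(xyz1, up):
    print(p, [round(v, 3) for v in f])
```

A query point that coincides with a sparse point recovers that point's feature almost exactly, because the clamped distance makes its weight dominate. A query point equidistant from all three neighbors, such as (0.5, 0.5, 0), gets their plain average. The number of points changes from 3 to 4 while the feature dimension stays at 2.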

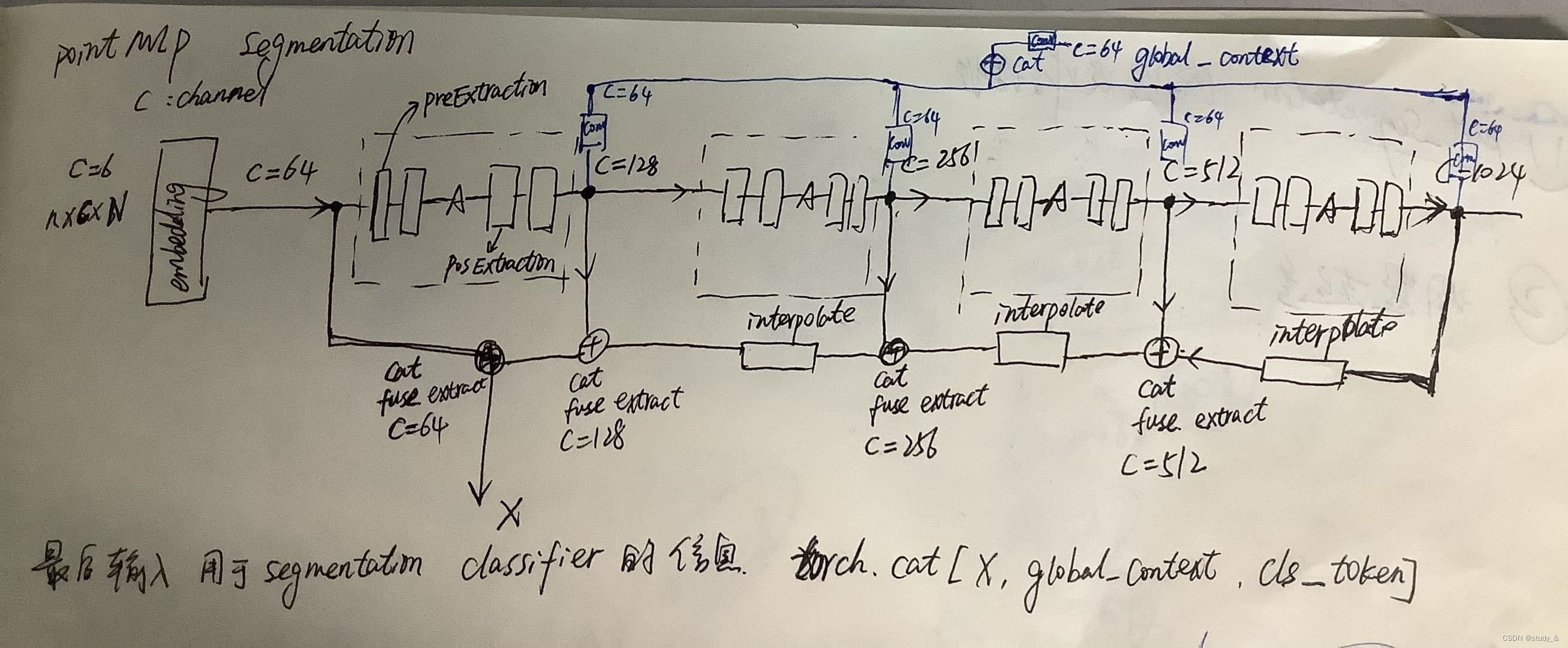

2. PointMLP segmentation: key code

Diagram of the segmentation part

    class PointNetFeaturePropagation(nn.Module):
        def __init__(self, in_channel, out_channel, blocks=1, groups=1, res_expansion=1.0, bias=True, activation='relu'):
            super(PointNetFeaturePropagation, self).__init__()
            self.fuse = ConvBNReLU1D(in_channel, out_channel, 1, bias=bias)
            self.extraction = PosExtraction(out_channel, blocks, groups=groups,
                                            res_expansion=res_expansion, bias=bias, activation=activation)

This article walks through the Set Abstraction and Feature Propagation modules of PointNet++, shows the key segmentation code in PointMLP, and introduces the use of PAConv for point cloud segmentation.
