```python
import tensorflow as tf
from tensorflow.keras.layers import Layer


class DeepCrossLater(Layer):

    def __init__(self, dim_stack, name=None):
        """
        :param dim_stack: A scalar. The dimension of the input embedding vectors.
        :param name: Optional layer name.
        """
        super(DeepCrossLater, self).__init__(name=name)
        self.cross_num = 3      # number of stacked cross layers
        self.cross_weight = []  # w_l, one (dim_stack, 1) vector per layer
        self.bias_weight = []   # b_l, one (dim_stack, 1) vector per layer
        # Demo embedding table: 10 ids, each mapped to a dim_stack-dimensional vector.
        self.onehot_embedding = self.add_weight(shape=(10, dim_stack),
                                                initializer="glorot_normal",
                                                name="onehot_embedding")
        for i in range(self.cross_num):
            self.cross_weight.append(
                self.add_weight(shape=(dim_stack, 1),
                                initializer="glorot_normal",
                                name="cross_net_{}_weight".format(i)))
            self.bias_weight.append(
                self.add_weight(shape=(dim_stack, 1),
                                initializer="glorot_normal",
                                name="cross_net_{}_bias".format(i)))

    def call(self, inputs, **kwargs):
        # inputs: (batch, 1) int ids -> x0: (batch, 1, d) row vectors
        x0 = tf.nn.embedding_lookup(self.onehot_embedding, inputs)
        x0_col = tf.transpose(x0, [0, 2, 1])  # (batch, d, 1)

        # Method 1: x_l kept as a row vector (batch, 1, d) between iterations.
        xl = x0
        for i in range(self.cross_num):
            residual = tf.transpose(xl, [0, 2, 1])    # x_l as a column
            xl = tf.matmul(x0, xl, transpose_a=True)  # x0 x_l^T: (batch, d, d)
            xl = tf.matmul(xl, self.cross_weight[i])  # (batch, d, 1)
            xl = xl + self.bias_weight[i] + residual  # + b_l + x_l (DCN residual)
            xl = tf.transpose(xl, [0, 2, 1])          # back to (batch, 1, d)
        print("method1 xl:", xl)

        # Method 3: x_l kept as a column vector (batch, d, 1) inside the loop.
        xl = x0_col
        for i in range(self.cross_num):
            residual = xl
            xl = tf.matmul(x0, xl, transpose_a=True, transpose_b=True)  # (batch, d, d)
            xl = tf.matmul(xl, self.cross_weight[i])                     # (batch, d, 1)
            xl = xl + self.bias_weight[i] + residual
        xl = tf.transpose(xl, [0, 2, 1])  # (batch, 1, d)
        print("method3 xl:", xl)

        # Method 2: x0 transposed once up front, x_l kept as a row vector.
        xl = x0
        for i in range(self.cross_num):
            residual = tf.transpose(xl, [0, 2, 1])
            xl = tf.matmul(x0_col, xl)                # (batch, d, 1) @ (batch, 1, d)
            xl = tf.matmul(xl, self.cross_weight[i])  # (batch, d, 1)
            xl = xl + residual + self.bias_weight[i]
            xl = tf.transpose(xl, [0, 2, 1])          # (batch, 1, d)
        print("method2 xl:", xl)

        return xl


if __name__ == '__main__':
    ids = tf.constant([[1],
                       [2]], dtype=tf.int32)  # two examples, one id each (ids < 10)
    print("input:", ids.shape)                # (2, 1)
    ru_ins = DeepCrossLater(5, "CrossNet")
    res = ru_ins(ids)
    print("res:", res)                        # shape (2, 1, 5)
```

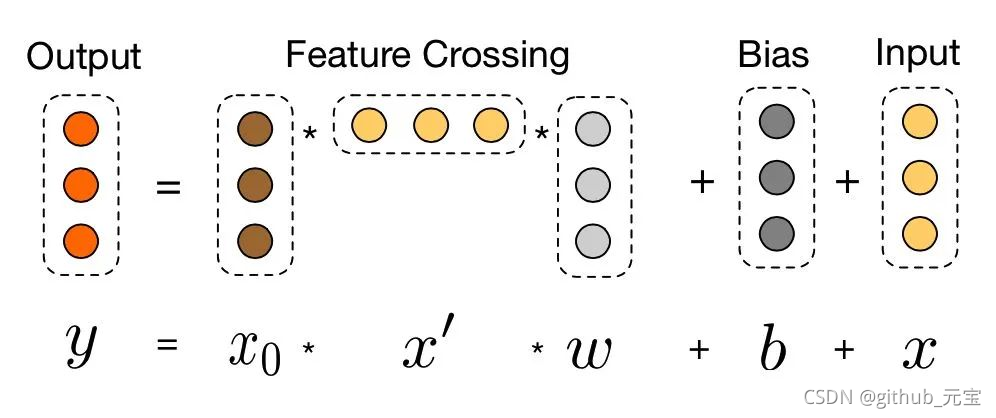

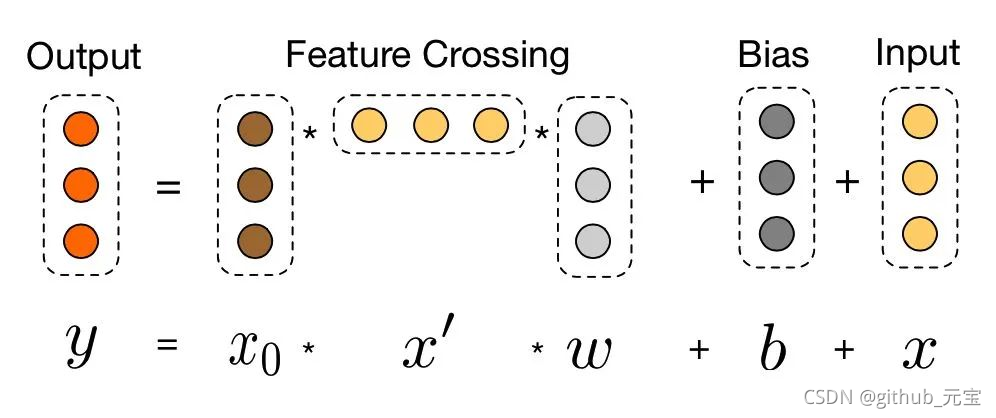
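The three loops differ only in where the transposes are applied. Because of this, the three printed tensors should agree up to floating-point rounding. The following is a minimal NumPy sketch that reproduces just the interaction step $x_0 x_l^\top w_l$ of each method and checks that the results match. It assumes NumPy is installed. The sizes, the random inputs, and the helper `swap` are illustrative and not part of the original code.

```python
import numpy as np

# Illustrative sizes matching the demo: batch of 2, embedding dim 5.
B, d = 2, 5
rng = np.random.default_rng(0)
x0 = rng.normal(size=(B, 1, d))  # embedding output, row-vector layout
xl = rng.normal(size=(B, 1, d))  # current cross-layer output, row-vector layout
w = rng.normal(size=(d, 1))      # one cross weight w_l


def swap(t):
    """NumPy counterpart of tf.transpose(t, [0, 2, 1])."""
    return np.transpose(t, (0, 2, 1))


# method1: matmul(x0, xl, transpose_a=True) with x_l as a row -> (B, d, d), then @ w
m1 = (swap(x0) @ xl) @ w

# method3: x_l held as a column, so both operands are transposed -> (B, d, d), then @ w
xl_col = swap(xl)
m3 = (swap(x0) @ swap(xl_col)) @ w

# method2: x0 transposed to a column once, x_l kept as a row -> (B, d, d), then @ w
x0_col = swap(x0)
m2 = (x0_col @ xl) @ w

print(m1.shape)                                   # (2, 5, 1)
print(np.allclose(m1, m2), np.allclose(m1, m3))   # True True
```

In all three orderings, the intermediate product is a $d \times d$ matrix per example. Only the layout of $x_l$ between iterations changes, either as a row (methods 1 and 2) or as a column (method 3).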

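This post shows how to implement a custom TensorFlow layer named DeepCrossLater. The layer computes the deep cross between input vectors in three different ways, which applies to feature interaction on multi-dimensional data. The core parts are the weight initialization and the matrix operations.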
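For reference, the cross layer in the Deep & Cross Network paper (Wang et al., 2017) is usually written with column vectors $x_0, x_l, w_l, b_l \in \mathbb{R}^d$. Since $x_l^\top w_l$ is a scalar, the $d \times d$ outer product that all three methods build can be replaced by scaling $x_0$ by that scalar. This reduces the cost of each layer from $O(d^2)$ to $O(d)$:

$$
x_{l+1} = x_0 x_l^\top w_l + b_l + x_l = \left(x_l^\top w_l\right) x_0 + b_l + x_l
$$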
