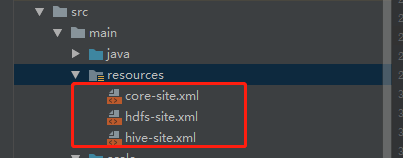

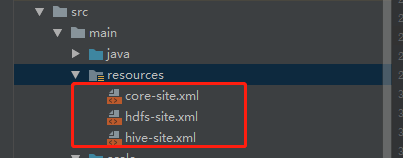

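- Download the configuration files from the server and place them in the local project's resources directory.

- Define a Kerberos authentication method and call it before any other code runs. Sample code: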

```scala
import org.apache.hadoop.security.UserGroupInformation
import org.apache.spark.SparkConf
import org.apache.spark.sql.SparkSession

object day03 {

  def main(args: Array[String]): Unit = {
    System.setProperty("HADOOP_USER_NAME", "hive")

    // Authenticate with Kerberos before the SparkSession is created
    kerberosAuth()

    val conf = new SparkConf()
    val ss: SparkSession = SparkSession.builder
      .appName("Spark to Hive")
      .master("local[*]")
      // .master("yarn-cluster")
      .config(conf)
      .config("spark.testing.memory", (512L * 1024 * 1024).toString)
      .enableHiveSupport()
      .getOrCreate()

    // Query a Hive table and print the result
    val sql = """select * from test.test0506"""
    val abt_df = ss.sql(sql)
    abt_df.show()
  }

  def kerberosAuth(): Unit = {
    try {
      // Windows paths; on Linux, switch to the commented-out lines with real paths
      System.setProperty("java.security.krb5.conf", "D:\\dongwei\\krb5\\krb5.ini")
      // System.setProperty("java.security.krb5.conf", "linux_path")
      System.setProperty("javax.security.auth.useSubjectCredsOnly", "false")
      // Print Kerberos debug output to the console
      System.setProperty("sun.security.krb5.debug", "true")
      UserGroupInformation.loginUserFromKeytab("hive/n1.bigdatatest.com@BIGDATATEST.COM", "D:\\dongwei\\krb5\\hive.service.keytab")
      // UserGroupInformation.loginUserFromKeytab("hive/n1.bigdatatest.com@BIGDATATEST.COM", "linux_path")
    } catch {
      // A login failure is only printed here; main() continues regardless
      case e: Exception =>
        e.printStackTrace()
    }
  }
}
```
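The first step depends on the downloaded configuration files actually being on the classpath when the program runs. A quick preflight check can catch a missing file before Spark starts. The following is a minimal sketch, not part of the original code: the object name `ConfigCheck` is hypothetical, and the file names `core-site.xml`, `hdfs-site.xml`, and `hive-site.xml` are assumptions about which files were downloaded from the server.

```scala
object ConfigCheck {
  // Assumed file names; adjust to the files actually copied into resources.
  val expected: Seq[String] = Seq("core-site.xml", "hdfs-site.xml", "hive-site.xml")

  /** Returns the names in `names` that cannot be found on the classpath. */
  def missingResources(names: Seq[String]): Seq[String] = {
    val loader = getClass.getClassLoader
    names.filter(name => loader.getResource(name) == null)
  }

  def main(args: Array[String]): Unit = {
    val missing = missingResources(expected)
    if (missing.nonEmpty)
      println(s"Missing from classpath: ${missing.mkString(", ")}")
    else
      println("All expected configuration files are on the classpath.")
  }
}
```

Calling `ConfigCheck.missingResources(ConfigCheck.expected)` at the top of `main` and stopping when the result is non-empty would probably give a clearer error than a failed Hive connection later on.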

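This article shows how to connect to Hive from Scala using Kerberos authentication. It sets system properties and loads the Kerberos configuration file and the keytab file to authenticate securely, then creates a SparkSession and runs a SQL query to display the data.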
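The example hard-codes Windows paths for the Kerberos configuration and the keytab, with the Linux variants left as commented-out placeholders. One possible way to avoid editing the source for each machine is to read the paths from environment variables and verify that the files exist before logging in. This is only a sketch under stated assumptions: the object name `KerberosFiles` and the variable names `KRB5_CONF` and `HIVE_KEYTAB` are hypothetical and do not come from the original code.

```scala
import java.nio.file.{Files, Path, Paths}

object KerberosFiles {
  /** Looks up `key` in an environment-style map, falling back to `default`. */
  def resolve(env: Map[String, String], key: String, default: String): Path =
    Paths.get(env.getOrElse(key, default))

  /** Returns one message per path that is not a readable regular file. */
  def problems(paths: Seq[Path]): Seq[String] =
    paths
      .filterNot(p => Files.isRegularFile(p) && Files.isReadable(p))
      .map(p => s"Not a readable file: $p")

  def main(args: Array[String]): Unit = {
    // KRB5_CONF and HIVE_KEYTAB are hypothetical variable names;
    // the defaults are the Windows paths used in the example.
    val krb5 = resolve(sys.env, "KRB5_CONF", "D:\\dongwei\\krb5\\krb5.ini")
    val keytab = resolve(sys.env, "HIVE_KEYTAB", "D:\\dongwei\\krb5\\hive.service.keytab")

    val errors = problems(Seq(krb5, keytab))
    if (errors.nonEmpty) {
      errors.foreach(println)
      sys.exit(1)
    }

    // Both files exist, so the paths can be handed to the Kerberos setup.
    System.setProperty("java.security.krb5.conf", krb5.toString)
    println(s"Using krb5 configuration $krb5 and keytab $keytab")
  }
}
```

The resolved `keytab.toString` could then be passed as the second argument to `UserGroupInformation.loginUserFromKeytab` in place of the literal path.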
