系列文章

深度学习-卷积神经网络(目标检测环境搭建)-TensorFlow及Keras环境搭建&详细安装教程

深度学习-卷积神经网络-目标检测之YOLOV3模型-代码运行图片检测实践1

深度学习-卷积神经网络-目标检测之YOLOV3模型-代码运行图片检测实践2

相关文章

深度学习-卷积神经网络-实例及代码0.8—基于最小均方误差的线性判别函数参数拟合训练

深度学习-卷积神经网络-实例及代码0.9—MNIST数据集介绍、下载及基本操作

深度学习-卷积神经网络-实例及代码1(入门)—利用Tensorflow和mnist数据集训练单层前馈神经网络/感知机实现手写数字识别

深度学习-卷积神经网络-实例及代码2(初级)—利用Tensorflow和mnist数据集训练简单的深度网络模型实现手写数字识别

深度学习-卷积神经网络-实例及代码3(图像分类LeNet5模型)—利用Tensorflow和mnist数据集训练LeNet5-CNN模型实现手写数字识别

前一篇文章介绍了在Windows下用CPU实现利用YOLOV3-Keras版本代码执行图片检测,使用的是已经训练好的模型权重

本篇文章详细说明如何使用自己的数据集进行YOLOV3模型权重的训练

使用的代码版本是在前一篇文章中完成的项目KerasYolo3Test2

在Windows环境下使用CPU进行训练

(操作系统:Windows 10专业版 64位)

(CPU:Intel(R) Core(TM) i5-4590 CPU@3.30GHz,内存8G)

第一部分:建立数据集,完成模型权重训练

1、收集图片,形成自己的图片库

图片尽量高清,低分辨率影响训练效果

2、生成标准VOC2007数据集文件夹模板

下载VOC2007数据集:下载地址https://pjreddie.com/projects/,从中找到Pascal VOC Dataset Mirror,点击进入下载VOC 2007中的Train/Validation Data (439 MB)

将下载后的文件解压,解压后的文件夹名称为VOCdevkit,删除该文件夹下的所有文件,仅保留里面的所有文件夹(可右击文件夹-属性查看文件夹中文件数是否为0)

或者不用下载VOC2007数据集,直接按照VOCdevkit文件夹目录结构建好

3、拷贝图片

将VOCdevkit文件夹模板拷贝到前篇文章项目KerasYolo3Test2下

将自己的图片库拷贝到VOCdevkit/VOC2007/JPEGImages目录下

4、标注图片

使用LabelImg工具标注图片

OpenDir--打开VOCdevkit/VOC2007/JPEGImages目录

Change Save Dir--将标注后生成的xml文件设置保存目录为VOCdevkit/VOC2007/Annotations目录

Create Rectbox--对图片目录中打开的图片标注矩形框并标注类别,标注完成后点击Save自动生成xml文件保存到前面设置的保存目录中

LabelImg工具下载地址:https://download.youkuaiyun.com/download/firemonkeycs/13038056

5、生成数据集txt文件,从图片库中按比例随机选取生成测试集、验证集、训练集

将CreateTxt.py文件放置在VOCdevkit\VOC2007目录下,CreateTxt.py文件代码如下:

import os

import random

trainval_percent = 0.1 #表示测试集和验证集所占总图片的比例。

train_percent = 0.9 #测试集所占测试集与验证集总和的比例

xmlfilepath = 'Annotations' # xml文件的路径

txtsavepath = 'ImageSets\Main' # 新生成文件的保存路径

# os.listdir() 方法用于返回指定的文件夹包含的文件或文件夹的名字的列表。这个列表以字母顺序。

# 它不包括 '.' 和'..' 即使它在文件夹中。

total_xml = os.listdir(xmlfilepath) #返回Annotations文件夹下的文件和文件夹的列表;得到一个xml文件名列表

num = len(total_xml) #xml文件的总数,也就是列表的长度

list = range(num) #相当于range(0,num,1),这里的0是首位(默认是0)、num是末位、1为跳跃间距(默认是1);但不包括num。

#得到一个[0,1,2,..,num-1]的列表。

tv = int(num * trainval_percent) #xml文件中的交叉验证集数

tr = int(tv * train_percent) #xml文件中的训练集数,注意,我们在前面定义的是训练集占验证集的比例。

#print(tv,tr) #打印出tv、tr进行验证

#random.sample()函数是从指定序列中随机获取指定长度的片段,原有序列不会改变。有两个参数,第一个参数代表指定序列,第二个参数是需获取的片段长度。

#这里相当于获得验证集和训练集数。

trainval = random.sample(list, tv) #随机在list列表中,获得长度为tv的验证集样本列表;

train = random.sample(trainval, tr) #随机在trainval列表,中获得长度为tr的验证集样本列表;

# print(trainval ,'\n',tr) #打印出trainval、train进行验证

#以可写的方式将数据写入文件中

ftrainval = open('ImageSets/Main/trainval.txt', 'w') #得到交叉验证集

ftest = open('ImageSets/Main/test.txt', 'w') #得到测试集

ftrain = open('ImageSets/Main/train.txt', 'w') #得到训练集

fval = open('ImageSets/Main/val.txt', 'w') #得到验证集

#三层循环

#第一层:在总的xml文件列表中

for i in list:

#name = total_xml[i]

name = total_xml[i][:-4]+'\n' #[:-4]用到了切片;因为文件名为 xx.xml ,[:-4]相当于从第一位截取到倒数第四位,即保留了 xx部分

# print(name)

#在交叉验证集列表中

if i in trainval:

#将符合条件的xml文件名的写入交叉验证集中

ftrainval.write(name)

#在训练集列表中

if i in train:

#将符合条件的xml文件名写入到测试集中

ftest.write(name)

else:

#不符合条件的xml文件名写入验证集中

fval.write(name)

#不在交叉验证集中,那么就在训练集中

else:

ftrain.write(name)

#关闭文件

ftrainval.close()

ftrain.close()

fval.close()

ftest.close()run运行CreateTxt.py文件生成数据集txt文件到VOCdevkit\VOC2007\ImageSets\Main目录下

6,生成YOLOV3模型所需的txt文件

修改voc_annotation.py中的classes = ["", ""]为自己要训练的类别

run运行voc_annotation.py

中间遇到一个报错

>>> runfile('E:/PythonProject2020-PCnew/MyPythonTest/KerasYolo3Test2/voc_annotation.py', wdir='E:/PythonProject2020-PCnew/MyPythonTest/KerasYolo3Test2')

Traceback (most recent call last):

File "<input>", line 1, in <module>

File "D:\Program Files\JetBrains\PyCharm 2020.2.1\plugins\python\helpers\pydev\_pydev_bundle\pydev_umd.py", line 197, in runfile

pydev_imports.execfile(filename, global_vars, local_vars) # execute the script

File "D:\Program Files\JetBrains\PyCharm 2020.2.1\plugins\python\helpers\pydev\_pydev_imps\_pydev_execfile.py", line 18, in execfile

exec(compile(contents+"\n", file, 'exec'), glob, loc)

File "E:/PythonProject2020-PCnew/MyPythonTest/KerasYolo3Test2/voc_annotation.py", line 31, in <module>

convert_annotation(year, image_id, list_file)

File "E:/PythonProject2020-PCnew/MyPythonTest/KerasYolo3Test2/voc_annotation.py", line 11, in convert_annotation

tree=ET.parse(in_file)

File "C:\Users\USER\AppData\Local\Programs\Python\Python36\lib\xml\etree\ElementTree.py", line 1196, in parse

tree.parse(source, parser)

File "C:\Users\USER\AppData\Local\Programs\Python\Python36\lib\xml\etree\ElementTree.py", line 597, in parse

self._root = parser._parse_whole(source)

UnicodeDecodeError: 'gbk' codec can't decode byte 0x86 in position 42: illegal multibyte sequence原因为步骤3和4中,我没有先把图片拷贝到项目中去再标注,而是直接标注后再拷贝进去,这样生成的xml标注文件中的<folder>路径在执行的时候就会不对,而且我的<folder>标签内容含有中文,所以报gbk code错误;

<annotation>

<folder>收集人脸数据集</folder>

<filename>001.jpg</filename>

<path>E:/LearnSoftwareDataVedio-PCnew/收集人脸数据集/001.jpg</path>

<source>

<database>Unknown</database>

</source>需要修改<folder>标签内容且不要包含中文,或者就老老实实按照步骤3和4来

7,修改参数配置文件—yolo3.cfg

在yolo3.cfg查找yolo,有三处需要修改,每处修改三个值如下:

[convolutional]

size=1

stride=1

pad=1

filters=21 #修改1:3*(5+类别数),这里训练2个类别

activation=linear

[yolo]

mask = 6,7,8

anchors = 10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326

classes=2 #修改2:类别数目

num=9

jitter=.3

ignore_thresh = .5

truth_thresh = 1

random=0 #修改3:关闭多尺度训练7,修改voc类别文件—model_data/voc_classes.txt

修改为自己标注/训练的类别

run运行train.py文件,执行训练

(1)中间遇到第一个报错

>>> runfile('E:/PythonProject2020-PCnew/MyPythonTest/KerasYolo3Test2/train.py', wdir='E:/PythonProject2020-PCnew/MyPythonTest/KerasYolo3Test2')

Using TensorFlow backend.

2020-10-19 22:15:59.619686: W tensorflow/stream_executor/platform/default/dso_loader.cc:55] Could not load dynamic library 'cudart64_100.dll'; dlerror: cudart64_100.dll not found

2020-10-19 22:15:59.654059: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

WARNING:tensorflow:From C:\Users\USER\AppData\Local\Programs\Python\Python36\lib\site-packages\tensorflow_core\python\ops\resource_variable_ops.py:1630: calling BaseResourceVariable.__init__ (from tensorflow.python.ops.resource_variable_ops) with constraint is deprecated and will be removed in a future version.

Instructions for updating:

If using Keras pass *_constraint arguments to layers.

2020-10-19 22:16:34.125153: W tensorflow/stream_executor/platform/default/dso_loader.cc:55] Could not load dynamic library 'nvcuda.dll'; dlerror: nvcuda.dll not found

2020-10-19 22:16:34.125459: E tensorflow/stream_executor/cuda/cuda_driver.cc:318] failed call to cuInit: UNKNOWN ERROR (303)

2020-10-19 22:16:34.180022: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:169] retrieving CUDA diagnostic information for host: USER-PC

2020-10-19 22:16:34.180428: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:176] hostname: USER-PC

2020-10-19 22:16:34.220132: I tensorflow/core/platform/cpu_feature_guard.cc:142] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2

Create YOLOv3 model with 9 anchors and 2 classes.

Traceback (most recent call last):

File "<input>", line 1, in <module>

File "D:\Program Files\JetBrains\PyCharm 2020.2.1\plugins\python\helpers\pydev\_pydev_bundle\pydev_umd.py", line 197, in runfile

pydev_imports.execfile(filename, global_vars, local_vars) # execute the script

File "D:\Program Files\JetBrains\PyCharm 2020.2.1\plugins\python\helpers\pydev\_pydev_imps\_pydev_execfile.py", line 18, in execfile

exec(compile(contents+"\n", file, 'exec'), glob, loc)

File "E:/PythonProject2020-PCnew/MyPythonTest/KerasYolo3Test2/train.py", line 190, in <module>

_main()

File "E:/PythonProject2020-PCnew/MyPythonTest/KerasYolo3Test2/train.py", line 33, in _main

freeze_body=2, weights_path='model_data/yolo_weights.h5') # make sure you know what you freeze

File "E:/PythonProject2020-PCnew/MyPythonTest/KerasYolo3Test2/train.py", line 120, in create_model

model_body.load_weights(weights_path, by_name=True, skip_mismatch=True)

File "C:\Users\USER\AppData\Local\Programs\Python\Python36\lib\site-packages\keras\engine\saving.py", line 492, in load_wrapper

return load_function(*args, **kwargs)

File "C:\Users\USER\AppData\Local\Programs\Python\Python36\lib\site-packages\keras\engine\network.py", line 1221, in load_weights

with h5py.File(filepath, mode='r') as f:

File "C:\Users\USER\AppData\Local\Programs\Python\Python36\lib\site-packages\h5py\_hl\files.py", line 408, in __init__

swmr=swmr)

File "C:\Users\USER\AppData\Local\Programs\Python\Python36\lib\site-packages\h5py\_hl\files.py", line 173, in make_fid

fid = h5f.open(name, flags, fapl=fapl)

File "h5py\_objects.pyx", line 54, in h5py._objects.with_phil.wrapper

File "h5py\_objects.pyx", line 55, in h5py._objects.with_phil.wrapper

File "h5py\h5f.pyx", line 88, in h5py.h5f.open

OSError: Unable to open file (unable to open file: name = 'model_data/yolo_weights.h5', errno = 2, error message = 'No such file or directory', flags = 0, o_flags = 0)原因是原始的train.py文件中create_model方法:

def create_model(input_shape, anchors, num_classes, load_pretrained=True, freeze_body=2,

weights_path='model_data/yolo_weights.h5'):

................................

if load_pretrained:

model_body.load_weights(weights_path, by_name=True, skip_mismatch=True)

........................

................................

其中load_pretrained默认为True,因此使用了weights_path参数,其默认为'model_data/yolo_weights.h5',源代码中并没有提供该预训练模型文件,因此报错

需要修改train.py解决以上问题,train.py文件修改为如下内容:

。。。。。。。。。。。。。。。。。。。。。。。。。。。。。

(2)中间遇到第二个报错

yolo Process finished with exit code -1073740791 (0xC0000409)

ImportError: DLL load failed: 页面文件太小,无法完成操作

AttributeError: module 'keras.backend' has no attribute 'control_flow_ops'这里原因是另一个项目也在运行,关掉后重新运行这个即可

Python 3.6.5 (v3.6.5:f59c0932b4, Mar 28 2018, 17:00:18) [MSC v.1900 64 bit (AMD64)] on win32

runfile('E:/PythonProject2020-PCnew/MyPythonTest/KerasYolo3Test2/train.py', wdir='E:/PythonProject2020-PCnew/MyPythonTest/KerasYolo3Test2')

Using TensorFlow backend.

2020-10-20 21:59:46.212319: W tensorflow/stream_executor/platform/default/dso_loader.cc:55] Could not load dynamic library 'cudart64_100.dll'; dlerror: cudart64_100.dll not found

2020-10-20 21:59:46.212694: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

WARNING:tensorflow:From C:\Users\USER\AppData\Local\Programs\Python\Python36\lib\site-packages\tensorflow_core\python\ops\resource_variable_ops.py:1630: calling BaseResourceVariable.__init__ (from tensorflow.python.ops.resource_variable_ops) with constraint is deprecated and will be removed in a future version.

Instructions for updating:

If using Keras pass *_constraint arguments to layers.

2020-10-20 22:00:06.105742: W tensorflow/stream_executor/platform/default/dso_loader.cc:55] Could not load dynamic library 'nvcuda.dll'; dlerror: nvcuda.dll not found

2020-10-20 22:00:06.106074: E tensorflow/stream_executor/cuda/cuda_driver.cc:318] failed call to cuInit: UNKNOWN ERROR (303)

2020-10-20 22:00:06.189661: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:169] retrieving CUDA diagnostic information for host: USER-PC

2020-10-20 22:00:06.191324: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:176] hostname: USER-PC

2020-10-20 22:00:06.223672: I tensorflow/core/platform/cpu_feature_guard.cc:142] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2

Create YOLOv3 model with 9 anchors and 2 classes.

WARNING:tensorflow:From C:\Users\USER\AppData\Local\Programs\Python\Python36\lib\site-packages\keras\backend\tensorflow_backend.py:3170: where (from tensorflow.python.ops.array_ops) is deprecated and will be removed in a future version.

Instructions for updating:

Use tf.where in 2.0, which has the same broadcast rule as np.where

Train on 27 samples, val on 3 samples, with batch size 2.

WARNING:tensorflow:From C:\Users\USER\AppData\Local\Programs\Python\Python36\lib\site-packages\keras\backend\tensorflow_backend.py:422: The name tf.global_variables is deprecated. Please use tf.compat.v1.global_variables instead.

Epoch 1/50

2020-10-20 22:00:41.177837: E tensorflow/core/grappler/optimizers/meta_optimizer.cc:533] remapper failed: Invalid argument: Subshape must have computed start >= end since stride is negative, but is 0 and 2 (computed from start 0 and end 9223372036854775807 over shape with rank 2 and stride-1)

2020-10-20 22:00:44.540757: E tensorflow/core/grappler/optimizers/meta_optimizer.cc:533] remapper failed: Invalid argument: Subshape must have computed start >= end since stride is negative, but is 0 and 2 (computed from start 0 and end 9223372036854775807 over shape with rank 2 and stride-1)

1/13 [=>............................] - ETA: 3:35 - loss: 7435.0278

2/13 [===>..........................] - ETA: 2:25 - loss: 7998.6389

3/13 [=====>........................] - ETA: 1:56 - loss: 7704.9342

4/13 [========>.....................] - ETA: 1:36 - loss: 7287.2770

5/13 [==========>...................] - ETA: 1:22 - loss: 6748.4561

6/13 [============>.................] - ETA: 1:09 - loss: 6198.6502

7/13 [===============>..............] - ETA: 57s - loss: 5707.3658

8/13 [=================>............] - ETA: 47s - loss: 5299.7036

9/13 [===================>..........] - ETA: 37s - loss: 4943.8615

10/13 [======================>.......] - ETA: 27s - loss: 4628.3490

11/13 [========================>.....] - ETA: 18s - loss: 4334.4019

12/13 [==========================>...] - ETA: 8s - loss: 4088.2245 2020-10-20 22:02:33.312656: E tensorflow/core/grappler/optimizers/meta_optimizer.cc:533] remapper failed: Invalid argument: Subshape must have computed start >= end since stride is negative, but is 0 and 2 (computed from start 0 and end 9223372036854775807 over shape with rank 2 and stride-1)

2020-10-20 22:02:33.668379: E tensorflow/core/grappler/optimizers/meta_optimizer.cc:533] remapper failed: Invalid argument: Subshape must have computed start >= end since stride is negative, but is 0 and 2 (computed from start 0 and end 9223372036854775807 over shape with rank 2 and stride-1)

13/13 [==============================] - 120s 9s/step - loss: 3873.2753 - val_loss: 83836181069955072.0000

Epoch 2/50

1/13 [=>............................] - ETA: 1:36 - loss: 1042.4215

2/13 [===>..........................] - ETA: 1:28 - loss: 1006.2632

3/13 [=====>........................] - ETA: 1:20 - loss: 965.0129

4/13 [========>.....................] - ETA: 1:12 - loss: 919.7690

5/13 [==========>...................] - ETA: 1:04 - loss: 869.3804

6/13 [============>.................] - ETA: 56s - loss: 830.6708

7/13 [===============>..............] - ETA: 48s - loss: 805.4417

8/13 [=================>............] - ETA: 40s - loss: 767.4460

9/13 [===================>..........] - ETA: 32s - loss: 758.7014

10/13 [======================>.......] - ETA: 24s - loss: 734.1800

11/13 [========================>.....] - ETA: 16s - loss: 709.9692

12/13 [==========================>...] - ETA: 8s - loss: 688.0914

13/13 [==============================] - 108s 8s/step - loss: 666.7036 - val_loss: 7863124120043520.0000

.............................................

Epoch 49/50

1/13 [=>............................] - ETA: 1:35 - loss: 48.1933

2/13 [===>..........................] - ETA: 1:27 - loss: 49.1706

3/13 [=====>........................] - ETA: 1:19 - loss: 48.3104

4/13 [========>.....................] - ETA: 1:11 - loss: 51.0536

5/13 [==========>...................] - ETA: 1:03 - loss: 50.8756

6/13 [============>.................] - ETA: 55s - loss: 50.7547

7/13 [===============>..............] - ETA: 47s - loss: 50.3091

8/13 [=================>............] - ETA: 40s - loss: 50.8045

9/13 [===================>..........] - ETA: 32s - loss: 50.6192

10/13 [======================>.......] - ETA: 24s - loss: 50.3993

11/13 [========================>.....] - ETA: 16s - loss: 49.4737

12/13 [==========================>...] - ETA: 8s - loss: 49.2359

13/13 [==============================] - 107s 8s/step - loss: 49.2499 - val_loss: 63.7801

Epoch 50/50

1/13 [=>............................] - ETA: 1:38 - loss: 45.8472

2/13 [===>..........................] - ETA: 1:29 - loss: 46.3536

3/13 [=====>........................] - ETA: 1:20 - loss: 46.0816

4/13 [========>.....................] - ETA: 1:12 - loss: 46.7334

5/13 [==========>...................] - ETA: 1:03 - loss: 46.6016

6/13 [============>.................] - ETA: 55s - loss: 47.3732

7/13 [===============>..............] - ETA: 47s - loss: 48.5612

8/13 [=================>............] - ETA: 39s - loss: 48.6074

9/13 [===================>..........] - ETA: 31s - loss: 47.3833

10/13 [======================>.......] - ETA: 23s - loss: 48.0357

11/13 [========================>.....] - ETA: 15s - loss: 47.7770

12/13 [==========================>...] - ETA: 7s - loss: 47.6821

13/13 [==============================] - 106s 8s/step - loss: 47.7529 - val_loss: 55.1019至此训练完成,不得不说CPU上跑训练弱得很,只整了50张图片,batch_size=2,Epoch=500,跑了大概30多个小时

有条件还是上GPU

第二部分:利用训练得到的权重进行图片目标检测

1,修改代码—yolo.py

"model_path": 'logs/000/trained_weights.h5',

"anchors_path": 'model_data/yolo_anchors.txt',

"classes_path": 'model_data/voc_classes.txt',

2,yolov3.cfg的训练模式改为测试模式

修改yolov3_voc.cfg文件里的注释为Testing

# Testing

batch=1

subdivisions=1

# Training

#batch=64

#subdivisions=163,run运行yolo_video.py文件

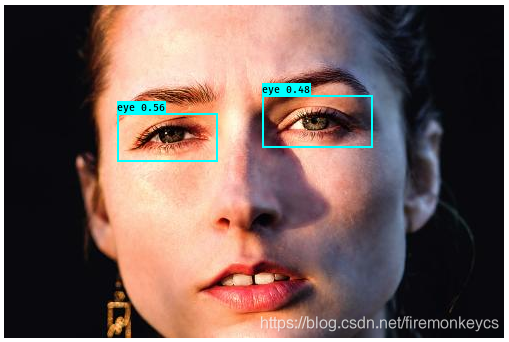

修改代码中的image文件名路径,run执行,执行结果如下:

Use tf.where in 2.0, which has the same broadcast rule as np.where

(416, 416, 3)

2020-11-03 07:21:14.748958: E tensorflow/core/grappler/optimizers/meta_optimizer.cc:533] remapper failed: Invalid argument: Subshape must have computed start >= end since stride is negative, but is 0 and 2 (computed from start 0 and end 9223372036854775807 over shape with rank 2 and stride-1)

2020-11-03 07:21:14.937836: E tensorflow/core/grappler/optimizers/meta_optimizer.cc:533] remapper failed: Invalid argument: Subshape must have computed start >= end since stride is negative, but is 0 and 2 (computed from start 0 and end 9223372036854775807 over shape with rank 2 and stride-1)

Found 2 boxes for img

eye 0.48 (258, 90) (368, 142)

eye 0.56 (113, 108) (213, 156)

1.7451701

time:1.7498764991760254

(416, 416, 3)

2020-11-03 07:25:34.138863: E tensorflow/core/grappler/optimizers/meta_optimizer.cc:533] remapper failed: Invalid argument: Subshape must have computed start >= end since stride is negative, but is 0 and 2 (computed from start 0 and end 9223372036854775807 over shape with rank 2 and stride-1)

2020-11-03 07:25:34.340585: E tensorflow/core/grappler/optimizers/meta_optimizer.cc:533] remapper failed: Invalid argument: Subshape must have computed start >= end since stride is negative, but is 0 and 2 (computed from start 0 and end 9223372036854775807 over shape with rank 2 and stride-1)

Found 1 boxes for img

eye 0.84 (531, 267) (627, 312)

1.8089032999999999

time:1.8089618682861328

这里第一张图检测到两个eye,置信度一般,但没有检测出mouth;第二张图只检测到了一个eye,但置信度还比较高;

分析可能原因是训练集图片太少了,另外眼睛有变形/旋转的训练样本更少,导致检测效果还不够好

后续改进可以考虑增加样本数量,同时增加样本中眼睛有形变情况的占比,提高检测效果,本文作为模型算法的实践体验基本达到目的!

如果没有出框,修改yolo.py中的score和iou,score足够小能出框但不准,另外主要跟样本数量、train.py中的训练次数epochs有关

"score" : 0.12,#标准0.3

"iou" : 0.3,#标准0.45

附修改后的源代码下载地址:https://download.youkuaiyun.com/download/firemonkeycs/13081632

本文详述如何在Windows环境下,使用CPU训练YOLOV3模型,并针对自己的数据集进行标注和训练。通过调整配置文件、处理标注错误、创建数据集txt文件,成功执行训练。尽管训练时间长,但最终能够实现目标检测。未来改进方向包括增加样本数量和训练次数以提升检测效果。

本文详述如何在Windows环境下,使用CPU训练YOLOV3模型,并针对自己的数据集进行标注和训练。通过调整配置文件、处理标注错误、创建数据集txt文件,成功执行训练。尽管训练时间长,但最终能够实现目标检测。未来改进方向包括增加样本数量和训练次数以提升检测效果。

1641

1641

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?