公众号:尤而小屋

作者:Peter

编辑:Peter

今天给大家推荐一个机器学习可视化神器:Yellowbrick

文章有点长,建议直接收藏!

什么是Yellowbrick

Yellowbrick是一个用于可视化机器学习模型和评估性能的Python库。它提供了一系列高级可视化工具,帮助数据科学家和机器学习从业者更好地理解、调试和优化他们的模型。

Yellowbrick的主要目标是通过可视化技术增强机器学习工作流程的可解释性和可视化分析。它建立在Scikit-Learn、Matplotlib库之上,并与其无缝集成。通过使用Yellowbrick,用户可以直观地探索模型的输入特征,理解特征之间的关系,并可视化训练过程中的评估指标。

Yellowbrick能够做什么

Yellowbrick提供了多种可视化方法,包括特征可视化、模型选择、调参、回归和分类模型评估等。其中一些可视化工具包括特征重要性条形图、学习曲线、分类报告、ROC曲线和混淆矩阵等。

使用Yellowbrick库,用户可以轻松地生成丰富的可视化图表,从而更好地理解和解释机器学习模型的行为和性能。这些可视化工具不仅对于初学者学习机器学习概念和模型表现非常有帮助,也对于有经验的数据科学家进行模型调试和改进非常有用。

(1)特征分析

(2)回归可视化

(3)聚类、模型选择、文本可视化等

更多的内容请参考官网学习地址:

https://www.scikit-yb.org/en/latest/

https://www.scikit-yb.org/en/latest/api/index.html

https://www.scikit-yb.org/en/latest/gallery.html

下面详细介绍Yellowbrick的使用。

安装

安装非常简单:

pip install yellowbrick特征分析

In [1]:

import pandas as pd

import numpy as np

from sklearn import datasets

from yellowbrick.target import FeatureCorrelation

from yellowbrick.features.rankd import Rank1D, Rank2D

from yellowbrick.features.radviz import RadViz

from yellowbrick.features.pcoords import ParallelCoordinates

from yellowbrick.features.jointplot import JointPlotVisualizer

from yellowbrick.features.pca import PCADecomposition

from yellowbrick.features.manifold import Manifold

import warnings

warnings.filterwarnings("ignore")特征相关性Feature Correlation

常见分析一般是求解特征和目标变量的Person相关性:

In [2]:

# 导入数据

data = datasets.load_diabetes()

X, y = data['data'], data['target']

features = np.array(data['feature_names'])

print(features) # 特征信息

visualizer = FeatureCorrelation(labels=features)

visualizer.fit(X, y) # 每个特征和target之间的相关性

visualizer.show()

['age' 'sex' 'bmi' 'bp' 's1' 's2' 's3' 's4' 's5' 's6']

现将数据生成DataFrame格式:

In [3]:

df = pd.DataFrame(X, columns=features)

df.head()

添加一列目标变量y:

In [4]:

df["target"] = y

df.head()Out[4]:

直接使用pandas对相关性系数进行求解:

In [5]:

corr = df.corr() # 相关性

corr

对target类的特征进行排序:

In [6]:

corr["target"].sort_values(ascending=False)Out[6]:

target 1.000000

bmi 0.586450

s5 0.565883

bp 0.441484

s4 0.430453

s6 0.382483

s1 0.212022

age 0.187889

s2 0.174054

sex 0.043062

s3 -0.394789

Name: target, dtype: float64可以将这里的结果和基于yellowbrick求解的结果做对比,发现是一致的。

特征重要性

特征工程过程包括选择生成有效模型所需的最少特征,因为模型包含的特征越多,它就越复杂(数据越稀疏),因此模型对方差引起的误差就越敏感。

通用的做法是消除或者减少特征。比如常见的决策树,还有集成的树模型:随机森林、梯度提升树、GBDT等都提供了feature_importances属性来筛选特征。

yellowbrick提供了FeatureImportances来可视化特征的重要性:

In [8]:

from sklearn.ensemble import RandomForestClassifier

from yellowbrick.datasets import load_credit

from yellowbrick.model_selection import FeatureImportances

X,y = load_credit()

rf = RandomForestClassifier(n_estimators=10)

viz = FeatureImportances(rf) # 基于指定模型的特征分类

viz.fit(X,y)

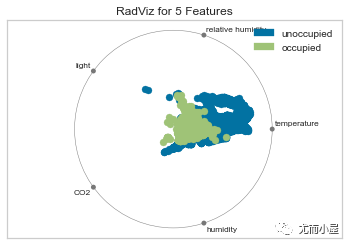

viz.show()RadVizVisualizer

In [9]:

from yellowbrick.datasets import load_occupancy # 数据

from yellowbrick.features import RadViz # 绘制雷达图In [10]:

X,y = load_occupancy()

X.head()Out[10]:

In [11]:

y.head()Out[11]:

0 1

1 1

2 1

3 1

4 1

Name: occupancy, dtype: int64基础使用

In [12]:

# 目标标签

classes = ["unoccupied", "occupied"]

visualizer = RadViz(classes=classes) # 实例化RadViz类

visualizer.fit(X, y)

visualizer.transform(X)

visualizer.show()

快速使用

使用radviz方法:

In [13]:

# 方法2:基于radviz

from yellowbrick.features.radviz import radviz

from yellowbrick.datasets import load_occupancy

X,y = load_occupancy()

classes = ["unoccupied", "occupied"]

radviz(X,y,classes=classes)平行坐标Parallel Coordinates

基础使用

In [14]:

from yellowbrick.features import ParallelCoordinates

from yellowbrick.datasets import load_occupancy

X, y = load_occupancy()

# 指定特征和目标分类变量

features = ["temperature", "relative humidity", "light", "CO2", "humidity"]

classes = ["unoccupied", "occupied"]

# 实例化可视化对象

visualizer = ParallelCoordinates(

classes=classes,

features=features,

sample=0.05,

shuffle=True

)

visualizer.fit_transform(X, y)

visualizer.show()

还可以对数据进行标准化处理:观察左侧y轴坐标值的变化

In [15]:

from yellowbrick.features import ParallelCoordinates

from yellowbrick.datasets import load_occupancy

X, y = load_occupancy()

# 指定特征和目标分类变量

features = ["temperature", "relative humidity", "light", "CO2", "humidity"]

classes = ["unoccupied", "occupied"]

# 实例化可视化对象

visualizer = ParallelCoordinates(

classes=classes,

features=features,

sample=0.05,

normalize='standard', # 标准化

shuffle=True,

fast=True # 快速出图

)

visualizer.fit_transform(X, y)

visualizer.show()

快速方法

In [16]:

from yellowbrick.features.pcoords import parallel_coordinates

from yellowbrick.datasets import load_occupancy

X, y = load_occupancy()

features = ["temperature", "relative humidity", "light", "CO2", "humidity"]

classes = ["unoccupied", "occupied"]

# 实例化

visualizer = parallel_coordinates(X, y, classes=classes, features=features)双变量关系图

基础使用

In [17]:

from yellowbrick.datasets import load_concrete

from yellowbrick.features import JointPlotVisualizer # 双变量连接关系图

X, y = load_concrete()

visualizer = JointPlotVisualizer(columns="cement",

# kind="hexbin" # 图形类型,默认是圆点

)

result=visualizer.fit_transform(X, y)

visualizer.show()两两特征间关系

In [18]:

from yellowbrick.datasets import load_concrete

from yellowbrick.features import JointPlotVisualizer # 双变量连接关系图

X, y = load_concrete()

visualizer = JointPlotVisualizer(columns=["cement","ash"],

# kind="hexbin" # 图形类型,默认是圆点

)

result=visualizer.fit_transform(X, y)

visualizer.show()快速方法

通过快速的方法join_plot来实现

In [19]:

from yellowbrick.datasets import load_concrete

from yellowbrick.features import joint_plot

X, y = load_concrete()

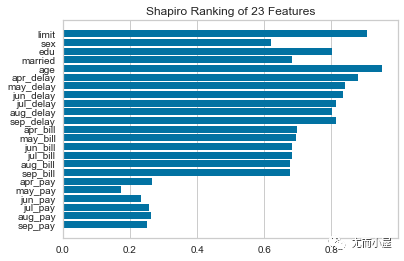

visualizer = joint_plot(X, y, columns="cement")特征排序

Rank 1D

In [20]:

from yellowbrick.datasets import load_credit

from yellowbrick.features import Rank1D

X,y = load_credit()

visualizer = Rank1D(algorithm="shapiro")

visualizer.fit(X,y)

visualizer.transform(X)

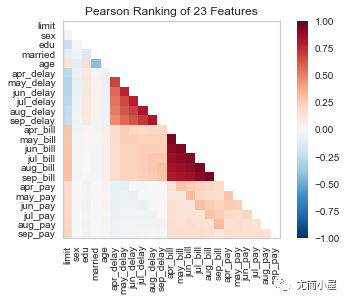

visualizer.show()Rank 2D

In [21]:

from yellowbrick.datasets import load_credit

from yellowbrick.features import Rank2D

X,y = load_credit()

# 基于皮尔逊相关系数

visualizer = Rank2D(algorithm="pearson")

visualizer.fit(X,y)

visualizer.transform(X)

visualizer.show()

基于特征的协方差排序:

In [22]:

X,y = load_credit()

# 基于协方差

visualizer = Rank2D(algorithm="covariance")

visualizer.fit(X,y)

visualizer.transform(X)

visualizer.show()Rank1d and Rank2D

In [23]:

import matplotlib.pyplot as plt

from yellowbrick.datasets import load_concrete

from yellowbrick.features import rank1d, rank2d

# 导入数据

X, _ = load_concrete()

_, axes = plt.subplots(ncols=2, figsize=(8,4))

rank1d(X, ax=axes[0], show=False)

rank2d(X, ax=axes[1], show=False)

plt.show()特征平行分布图Parallel Coordinates

In [24]:

from yellowbrick.features import ParallelCoordinates

from yellowbrick.datasets import load_occupancy

# 导入数据

X, y = load_occupancy()In [25]:

X.head()Out[25]:

In [26]:

features = X.columns

classes = ["unoccupied", "occupied"]

# 实例化对象

visualizer = ParallelCoordinates(

classes=classes, # 目标分类

features=features, # 特征属性

sample=0.05,

# normalize='standard', # 是否标准化

fast=True, # 快速生成图形

shuffle=True # 打乱数据

)

visualizer.fit_transform(X, y)

visualizer.show()PCA降维

PCA Projection

In [27]:

from yellowbrick.features import PCA

from yellowbrick.datasets import load_credit

X, y = load_credit()

classes = ['account in default', 'current with bills']

visualizer = PCA(scale=True, classes=classes)

visualizer.fit_transform(X, y)

visualizer.show()

默认情况下生成两个主成分;可以通过参数projection进行修改:

In [28]:

from yellowbrick.features import PCA

from yellowbrick.datasets import load_credit

X, y = load_credit()

classes = ['account in default', 'current with bills']

visualizer = PCA(scale=True,

projection=3, # 修改主成分个数

classes=classes)

visualizer.fit_transform(X, y)

visualizer.show()Biplot

主成分分析的投影图中增加数据点

In [29]:

from yellowbrick.features import PCA

from yellowbrick.datasets import load_credit

X, y = load_credit()

classes = ['account in default', 'current with bills']

visualizer = PCA(scale=True,

proj_features=True, # 增加参数

classes=classes)

visualizer.fit_transform(X, y)

visualizer.show()

3个主成分下的可视化:

In [30]:

from yellowbrick.features import PCA

from yellowbrick.datasets import load_credit

X, y = load_credit()

classes = ['account in default', 'current with bills']

visualizer = PCA(scale=True,

projection=3, # 3个主成分

proj_features=True, # 增加参数

classes=classes)

visualizer.fit_transform(X, y)

visualizer.show()

流行可视化Mainfold visualization

主要是针对维数据的可视化,将多维度的可视化通过Mainfold visualization嵌入到2维中;同时基于散点图来显示数据内在的结构。

离散型变量

In [31]:

from yellowbrick.features import Manifold

from yellowbrick.datasets import load_occupancy

# 导入数据

X, y = load_occupancy()

classes = ["unoccupied", "occupied"]

# 实例化

# mainfold的选择:lle ltsa hessian modified isomap mds spectral tsne

viz = Manifold(manifold="tsne", classes=classes)

viz.fit_transform(X, y)

viz.show()

上面的结果消耗很长时间。解决方法:

对数据特征进行缩放:StandScalar()

对实例进行采样,比如使用train_test_split来保持类分层

对特征进行过滤,减少数据中特征的稀疏性

一种常见的方法是用SelectKBest选择与目标数据集具有统计相关性的要素。例如,我们可以使用f_classif分数在数据集中找到3个最佳的特征:

In [32]:

from sklearn.pipeline import Pipeline

from sklearn.feature_selection import f_classif, SelectKBest

from yellowbrick.features import Manifold

from yellowbrick.datasets import load_occupancy

X, y = load_occupancy()

classes = ["unoccupied", "occupied"]

# 模型管道

model = Pipeline([

("selectk", SelectKBest(k=3, score_func=f_classif)),

("viz", Manifold(manifold="isomap", n_neighbors=10, classes=classes)),

])

model.fit_transform(X, y)

model.named_steps['viz'].show()连续型变量

In [33]:

from yellowbrick.features import Manifold

from yellowbrick.datasets import load_concrete

X, y = load_concrete()

viz = Manifold(manifold="isomap", n_neighbors=10)

viz.fit_transform(X, y)

viz.show()快速方法

使用manifold_embedding

In [34]:

from yellowbrick.features.manifold import manifold_embedding

from yellowbrick.datasets import load_concrete

X, y = load_concrete()

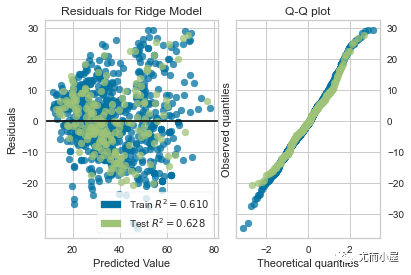

manifold_embedding(X, y, manifold="isomap", n_neighbors=10)回归分析

残差图可视化

In [1]:

from sklearn.linear_model import Ridge

from sklearn.model_selection import train_test_split

from yellowbrick.regressor import ResidualsPlot

import warnings

warnings.filterwarnings("ignore")In [2]:

from yellowbrick.datasets import load_concrete

# 导入数据

X, y = load_concrete()In [3]:

X.head(3)Out[3]:

In [4]:

y.head()Out[4]:

0 79.986111

1 61.887366

2 40.269535

3 41.052780

4 44.296075

Name: strength, dtype: float64基础使用

In [5]:

# 切分数据集

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# 初始化模型

model = Ridge()

visualizer = ResidualsPlot(model)

visualizer.fit(X_train, y_train) # 模型训练

visualizer.score(X_test, y_test) # 得分

visualizer.show()

也可以隐藏右边的直方图:

In [6]:

visualizer = ResidualsPlot(model, hist=False)

visualizer.fit(X_train, y_train)

visualizer.score(X_test, y_test)

visualizer.show()QQ图

绘制QQ图:

In [7]:

visualizer = ResidualsPlot(model,

hist=False, # 隐藏

qqplot=True) # 显示

visualizer.fit(X_train, y_train)

visualizer.score(X_test, y_test)

visualizer.show()快速方法

提供一种快速绘图的方法:residuals_plot

In [8]:

from sklearn.ensemble import RandomForestRegressor

from yellowbrick.regressor import residuals_plot

# 导入数据

X, y = load_concrete()

# 切分数据

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, shuffle=True)

# 随机森林回归模型可视化 residuals_plot

viz = residuals_plot(RandomForestRegressor(),

X_train, y_train,

X_test, y_test,

train_color="blue",

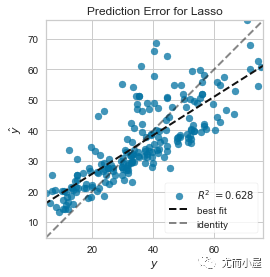

test_color="red")预测误差可视化

基础使用

In [9]:

from sklearn.linear_model import Lasso

from sklearn.model_selection import train_test_split

from yellowbrick.datasets import load_concrete

from yellowbrick.regressor import PredictionError # 预测误差

# 导入数据并切分

X, y = load_concrete()

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# 模型初始化

model = Lasso()

visualizer = PredictionError(model)

visualizer.fit(X_train, y_train)

visualizer.score(X_test, y_test)

visualizer.show()快速方法

提供快速绘图的方法:prediction_error

In [10]:

from sklearn.linear_model import Lasso

from sklearn.model_selection import train_test_split

from yellowbrick.datasets import load_concrete

# 增加语句

from yellowbrick.regressor import prediction_error

X, y = load_concrete()

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

model = Lasso()

# 增加

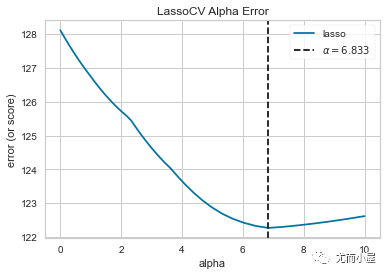

visualizer = prediction_error(model, X_train, y_train, X_test, y_test)alpha选择

在回归模型中,如何选择系数alpha:

In [11]:

import numpy as np

from sklearn.linear_model import LassoCV

from yellowbrick.regressor import AlphaSelection # 导入类

from yellowbrick.datasets import load_concrete

X, y = load_concrete()

# alpha的可选数组

alphas = np.logspace(-10, 1, 400)

model = LassoCV(alphas=alphas)

visualizer = AlphaSelection(model)

visualizer.fit(X, y)

visualizer.show()

快速生成的方法:

In [12]:

from sklearn.linear_model import LassoCV

from yellowbrick.regressor.alphas import alphas # 导入类

from yellowbrick.datasets import load_concrete

X, y = load_concrete()

alphas(LassoCV(random_state=0), X, y) # 调用柯克距离Cook`s Distance

库克距离(Cook's distance)是回归分析中用于评估数据点对回归模型拟合的影响程度的统计量。它用于检测在模型中移除某个数据点后,对模型参数估计产生的影响。库克距离测量了每个观测值对于模型预测结果的敏感程度。

具体而言,库克距离是通过比较在模型中包含某个数据点和不包含该数据点时的预测差异来计算的。它基于残差和杠杆统计量来度量观测点对回归模型拟合的贡献。

库克距离的计算公式为:

库克距离的值越大,表示相应的数据点对于模型的影响越大。通常,当库克距离超过某个阈值时,该数据点被认为是具有显著影响的"异常值"或"离群值"。

通过分析库克距离,可以识别并调查那些对回归模型具有重要影响的观测点。这有助于检测异常值、识别数据中的异常模式,并评估回归模型的稳健性和可靠性。

In [13]:

from yellowbrick.regressor import CooksDistance

from yellowbrick.datasets import load_concrete

X, y = load_concrete()

visualizer = CooksDistance()

visualizer.fit(X, y)

visualizer.show()分类问题

介绍分类问题中的常用评价指标:精准率、召回率、F1_score等

在机器学习分类问题中,有许多评价指标可以用来评估分类模型的性能。下面是一些常见的评价指标:

1、准确率(Accuracy):分类正确的样本数与总样本数之比。它是最常见的评价指标之一,适用于数据集类别分布相对平衡的情况。然而,在不平衡数据集中,准确率可能会产生误导。

2、精确率(Precision):预测为正例且正确的样本数与预测为正例的样本总数之比。它衡量了模型在预测为正例的样本中的准确性。

3、召回率(Recall):预测为正例且正确的样本数与实际为正例的样本总数之比。它衡量了模型对于正例样本的识别能力。

4、F1分数(F1 Score):精确率和召回率的调和平均值。它综合考虑了精确率和召回率,可以帮助判断模型的综合性能。

5、特异度(Specificity):预测为负例且正确的样本数与实际为负例的样本总数之比。它衡量了模型在预测为负例的样本中的准确性。

6、假阳性率(False Positive Rate,FPR):预测为负例但实际为正例的样本数与实际为负例的样本总数之比。它衡量了模型将负例样本错误预测为正例的能力。

7、ROC曲线(Receiver Operating Characteristic Curve):ROC曲线绘制了真阳性率(TPR,召回率)与假阳性率(FPR)之间的关系。它可以帮助评估模型在不同阈值下的性能表现。

8、AUC值(Area Under the ROC Curve):ROC曲线下的面积,用于衡量分类器的性能。AUC值越大,分类器性能越好。

这些评价指标可以根据具体问题的需求和数据集的特点选择使用。需要注意的是,在不同的问题背景和数据集上,不同的评价指标可能会有不同的重要性和解释。因此,了解问题的上下文和评估指标的含义是非常重要的。

分类报告Classification Report

基础使用

In [1]:

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

import warnings

warnings.filterwarnings("ignore")In [2]:

from sklearn.model_selection import TimeSeriesSplit

from sklearn.naive_bayes import GaussianNB

from yellowbrick.classifier import ClassificationReport

from yellowbrick.datasets import load_occupancy

X, y = load_occupancy()

X.head()Out[2]:

In [3]:

y.head()Out[3]:

0 1

1 1

2 1

3 1

4 1

Name: occupancy, dtype: int64In [4]:

classes = ["unoccupied", "occupied"]

tscv = TimeSeriesSplit()

for train_index, test_index in tscv.split(X):

X_train, X_test = X.iloc[train_index], X.iloc[test_index]

y_train, y_test = y.iloc[train_index], y.iloc[test_index]

# 实例化模型

model = GaussianNB()

visualizer = ClassificationReport(model, classes=classes, support=True)

visualizer.fit(X_train, y_train) # 模型训练

visualizer.score(X_test, y_test) # 测试机得分

visualizer.show() # 可视化快速方法

使用classification_report快速方法:

In [5]:

from sklearn.model_selection import TimeSeriesSplit

from sklearn.naive_bayes import GaussianNB

from yellowbrick.datasets import load_occupancy

from yellowbrick.classifier import classification_report # 分类报告

X, y = load_occupancy()

classes = ["unoccupied", "occupied"]

tscv = TimeSeriesSplit()

for train_index, test_index in tscv.split(X):

X_train, X_test = X.iloc[train_index], X.iloc[test_index]

y_train, y_test = y.iloc[train_index], y.iloc[test_index]

visualizer = classification_report(GaussianNB(), X_train, y_train, X_test, y_test, classes=classes, support=True)混淆矩阵Confusion Matrix

基础使用

In [6]:

import pandas as pd

from sklearn.datasets import load_digits

from sklearn.model_selection import train_test_split as tts

from sklearn.linear_model import LogisticRegression

from yellowbrick.classifier import ConfusionMatrix

digits = load_digits()

X,y = digits.data, digits.target目标变量y中总共有10个类别:

In [7]:

pd.value_counts(y).sort_index()Out[7]:

0 178

1 182

2 177

3 183

4 181

5 182

6 181

7 179

8 174

9 180

dtype: int64In [8]:

# 数据切分

X_train, X_test, y_train, y_test = tts(X, y, test_size =0.2, random_state=11)

# 模型实例化

model = LogisticRegression(multi_class="auto", solver="liblinear")调用ConfusionMatrix类:

In [9]:

cm = ConfusionMatrix(model, classes=[0,1,2,3,4,5,6,7,8,9], cmap="GnBu")

cm.fit(X_train,y_train)

cm.score(X_test,y_test)

cm.show()带上类别名称

In [10]:

from sklearn.datasets import load_iris

iris = load_iris()

X = iris.data

y = iris.target

classes = iris.target_names

X_train, X_test, y_train, y_test = tts(X, y, test_size=0.2)

model = LogisticRegression(multi_class="auto", solver="liblinear")

iris_cm = ConfusionMatrix(

model,

classes=classes,

cmap="Purples",

# 带上类别名称

# iris数据集中的3个类别

label_encoder={0: 'setosa', 1: 'versicolor', 2: 'virginica'}

)

iris_cm.fit(X_train, y_train)

iris_cm.score(X_test, y_test)

iris_cm.show()快速方法

基于confunsion_matrix实现:

In [11]:

import matplotlib.pyplot as plt

from yellowbrick.datasets import load_credit

from yellowbrick.classifier import confusion_matrix

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import train_test_split as tts

X, y = load_credit()

X_train, X_test, y_train, y_test = tts(X, y, test_size=0.2)

X, y = load_credit()

X_train, X_test, y_train, y_test = tts(X, y, test_size=0.2)

lr = LogisticRegression()

confusion_matrix(

lr,

X_train,

y_train,

X_test,

y_test,

classes=["Not_Defaulted", "Default"])

plt.tight_layout()ROC-AUC曲线

二分类问题

In [12]:

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import train_test_split

from yellowbrick.classifier import ROCAUC # 导入ROCAUC类

from yellowbrick.datasets import load_spam

X, y = load_spam()

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42)

model = LogisticRegression(multi_class="auto", solver="liblinear")

visualizer = ROCAUC(model, classes=["not_spam", "is_spam"])

visualizer.fit(X_train, y_train)

visualizer.score(X_test, y_test)

visualizer.show()多分类问题

In [13]:

from sklearn.linear_model import RidgeClassifier

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import OrdinalEncoder, LabelEncoder

from yellowbrick.classifier import ROCAUC

from yellowbrick.datasets import load_game

X, y = load_game()查看X和y的数据信息:

In [14]:

X.head()Out[14]:

In [15]:

y.head()Out[15]:

0 win

1 win

2 win

3 win

4 win

Name: outcome, dtype: object目标变量y中有3个分类:

In [16]:

pd.value_counts(y)Out[16]:

win 44473

loss 16635

draw 6449

Name: outcome, dtype: int64对特征X和目标变量y实施编码:

In [17]:

# 编码过程

X = OrdinalEncoder().fit_transform(X)

y = LabelEncoder().fit_transform(y)In [18]:

# 切分数据

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42)

# 模型实例化

model = RidgeClassifier()

visualizer = ROCAUC(model, classes=["win", "loss", "draw"])

# 模型训练与测试集得分

visualizer.fit(X_train, y_train)

visualizer.score(X_test, y_test)

visualizer.show()快速方法

基于roc_auc方法直接生成:

In [19]:

from yellowbrick.classifier.rocauc import roc_auc

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import train_test_split

from yellowbrick.datasets import load_credit

X, y = load_credit()

X_train, X_test, y_train, y_test = train_test_split(X,y)

# 模型实例化

model = LogisticRegression()

roc_auc(model, X_train, y_train, X_test=X_test, y_test=y_test, classes=['Not_Defaulted', 'Defaulted'])准确率和召回率Precision-Recall Curves

二分类

In [20]:

import matplotlib.pyplot as plt

from sklearn.linear_model import RidgeClassifier

from yellowbrick.classifier import PrecisionRecallCurve # 准确率和召回率曲线

from sklearn.model_selection import train_test_split as tts

# 导入数据

from yellowbrick.datasets import load_spam

X, y = load_spam()

# 切分数据

X_train, X_test, y_train, y_test = tts(X, y, test_size=0.2, shuffle=True, random_state=0)

# 给每个分类指定权重weights

weights = {0:0.2, 1:0.8}

# 绘制准确率和召回率曲线

viz = PrecisionRecallCurve(RidgeClassifier(random_state=0,

# class_weight=weights # 指定权重

))

viz.fit(X_train, y_train) # 训练

viz.score(X_test, y_test) # 得分

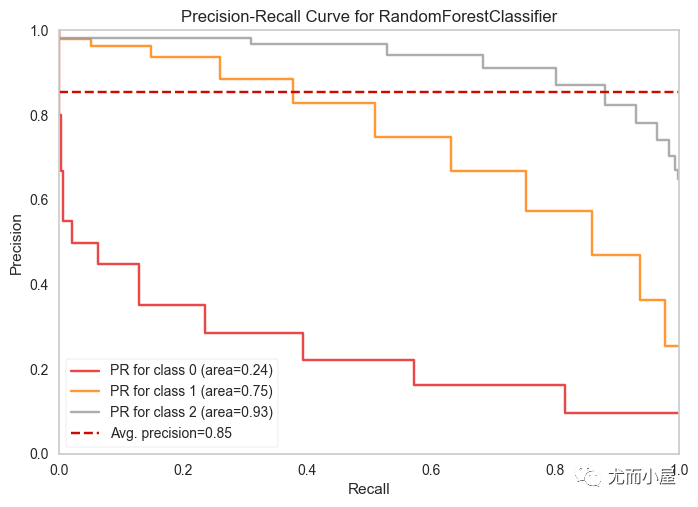

viz.show() 多分类

多分类

针对多分类问题如何实现准确率和召回率曲线的绘制:

In [21]:

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import OrdinalEncoder, LabelEncoder

from yellowbrick.classifier import PrecisionRecallCurve

from yellowbrick.datasets import load_game

X, y = load_game()In [22]:

X = OrdinalEncoder().fit_transform(X)

y = LabelEncoder().fit_transform(y)

X_train,X_test,y_train,y_test = tts(X,y,test_size=0.2,shuffle=True)通过设置参数per_class=True来实现每个分类的显示:

In [23]:

viz = PrecisionRecallCurve(RandomForestClassifier(n_estimators=10),

per_class=True,

cmap="Set1")

viz.fit(X_train, y_train)

viz.score(X_test, y_test)

viz.show() 分类预测误差ClassPredictionError

分类预测误差ClassPredictionError

基础使用

使用的是ClassPredictionError类:

In [24]:

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

from yellowbrick.classifier import ClassPredictionError

from yellowbrick.datasets import load_credit

X, y = load_credit()

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.20, random_state=42)

classes = ['account in default', 'current with bills']

# 预测误差

visualizer = ClassPredictionError(RandomForestClassifier(n_estimators=10), classes=classes)

visualizer.fit(X_train, y_train)

visualizer.score(X_test, y_test)

visualizer.show() 快速方法

快速方法

使用class_prediction_error方法:

In [25]:

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

from yellowbrick.classifier import class_prediction_error

from yellowbrick.datasets import load_credit

X, y = load_credit()

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.20, random_state=42)

classes = ['account in default', 'current with bills']

# 预测误差

# 注意传入到方法中数据的顺序;和切分的时候稍有差别

visualizer = class_prediction_error(RandomForestClassifier(n_estimators=10),

X_train, y_train, X_test, y_test,

classes=classes) 阈值鉴别Discrimination Threshold

阈值鉴别Discrimination Threshold

基础使用

主要是对评价指标中的精准率、召回率等的阈值进行判定:

In [26]:

from sklearn.linear_model import LogisticRegression

from yellowbrick.classifier import DiscriminationThreshold

from yellowbrick.datasets import load_credit

X, y = load_credit()

classes = ['account in default', 'current with bills']

# 预测误差

visualizer = DiscriminationThreshold(RandomForestClassifier(n_estimators=10), classes=classes)

visualizer.fit(X, y)

visualizer.show() 快速方法

快速方法

使用discrimination_threshold快速生成:

In [27]:

from yellowbrick.classifier.threshold import discrimination_threshold

from yellowbrick.datasets import load_occupancy

from sklearn.neighbors import KNeighborsClassifier

X, y = load_occupancy()

model = KNeighborsClassifier(3) # k近邻算法,分3个类

discrimination_threshold(model, X, y)聚类分析

基于肘图确定K值

基础使用

In [1]:

import pandas as pd

import numpy as np

from sklearn.cluster import KMeans

from sklearn.datasets import make_blobs

# 聚类肘图可视化

from yellowbrick.cluster import KElbowVisualizer

import warnings

warnings.filterwarnings("ignore")模拟生成一份数据:100个样本,12个特征,存在8个类

In [2]:

X, y = make_blobs(n_samples=1000, n_features=12, centers=8, random_state=42)

X[:3]Out[2]:

array([[ -0.98607355, 9.55319617, 3.60263268, 1.78283101,

-7.75524544, -8.26290932, -7.91215021, 9.23293956,

0.62373266, 4.72442079, -10.23895268, 8.91107166],

[ -3.48301954, -3.28381631, 6.25659168, -2.26420045,

-5.28752514, 0.99590158, -6.86779232, 4.55933482,

-7.90071996, 11.08367374, 5.27024744, -4.66551824],

[ 5.80121889, -6.40530668, -7.45313369, -7.11667171,

-4.28566324, -0.91043839, -1.34548129, -3.27314002,

1.32930171, -5.69083886, -3.64631494, -1.64218255]])查看模拟数据的相关信息:

In [3]:

X.shapeOut[3]:

(1000, 12)In [4]:

y[:10]Out[4]:

array([0, 5, 1, 5, 6, 4, 2, 3, 6, 6])In [5]:

pd.value_counts(y)Out[5]:

0 125

1 125

2 125

3 125

4 125

5 125

6 125

7 125

dtype: int64在4到11进行k值的选择:

In [6]:

model = KMeans() # 建立聚类模型

visualizer = KElbowVisualizer(

model,

k=(4,12),

timings=True, # 是否关闭右侧的时间

metric="distortion", # distortion, silhouette,calinski_harabasz

)

visualizer.fit(X)

visualizer.show() 结果中可以看到:k=7是效果是最好的

结果中可以看到:k=7是效果是最好的

默认情况下,聚类算法的评分参数是distortion。计算所有点到其质心距离的平方之和。还有其他的指标:比如轮廓系数silhouette 和 calinski_harabasz。

快速方法

使用kelbow_visualizer方法:

In [7]:

from sklearn.cluster import KMeans

from yellowbrick.cluster.elbow import kelbow_visualizer

from yellowbrick.datasets.loaders import load_nfl

X, y = load_nfl()

kelbow_visualizer(KMeans(random_state=4), X, k=(2,10),locate_elbow=True) 轮廓系数可视化

轮廓系数可视化

基础使用

In [8]:

from sklearn.cluster import KMeans

from yellowbrick.cluster import SilhouetteVisualizer # 轮廓系数可视化

from yellowbrick.datasets import load_nfl

X, y = load_nfl()

features = ['Rec', 'Yds', 'TD', 'Fmb', 'Ctch_Rate']

X = X.query('Tgt >= 20')[features]

# 模型:分为5个类

model = KMeans(5, random_state=42)

visualizer = SilhouetteVisualizer(model, colors='yellowbrick')

visualizer.fit(X)

visualizer.show() 快速方法

快速方法

使用silhouette_visualizer方法,传入聚类模型和带处理的数据

In [9]:

from sklearn.cluster import KMeans

from yellowbrick.cluster import silhouette_visualizer # 轮廓系数可视化

from yellowbrick.datasets import load_nfl

X, y = load_nfl()

features = ['Rec', 'Yds', 'TD', 'Fmb', 'Ctch_Rate']

X = X.query('Tgt >= 20')[features]

# 模型:分为5个类

model = KMeans(5, random_state=42)

silhouette_visualizer(model, X, colors='yellowbrick') 集群聚类图

集群聚类图

基础使用

In [10]:

from sklearn.cluster import KMeans

from sklearn.datasets import make_blobs

from yellowbrick.cluster import InterclusterDistance

# 模拟数据

X, y = make_blobs(n_samples=1000, n_features=12, centers=12, random_state=42)

model = KMeans(6)

visualizer = InterclusterDistance(model)

visualizer.fit(X)

visualizer.show() 快速方法

快速方法

使用intercluster_distance方法:

In [11]:

from yellowbrick.datasets import load_nfl

from sklearn.cluster import MiniBatchKMeans

from yellowbrick.cluster import intercluster_distance

X, _ = load_nfl()

# 内部传入聚类模型 + 数据

intercluster_distance(MiniBatchKMeans(5, random_state=777), X)文本可视化

基于Token的词频分布

基础使用

In [1]:

from sklearn.feature_extraction.text import CountVectorizer

from yellowbrick.text import FreqDistVisualizer

from yellowbrick.datasets import load_hobbies

import warnings

warnings.filterwarnings("ignore")先导入数据:

In [2]:

corpus = load_hobbies()

corpusOut[2]:

<yellowbrick.datasets.base.Corpus at 0x1baa80d3220>向量化过程:

In [3]:

vectorizer = CountVectorizer()In [4]:

docs = vectorizer.fit_transform(corpus.data)

features = vectorizer.get_feature_names()In [5]:

features[:10]Out[5]:

['00',

'000',

'00000151',

'00000153',

'00000154',

'000m',

'008',

'01',

'014',

'02']词频可视化:orient控制垂直或者水平柱状图

In [6]:

visualizer = FreqDistVisualizer(features=features, orient='v')

visualizer.fit(docs)

visualizer.show() 使用停用词

使用停用词

在英文文本中有很多无用词语,比如the、and等,我们去掉这些无用词语:

In [7]:

from sklearn.feature_extraction.text import CountVectorizer

from yellowbrick.text import FreqDistVisualizer

from yellowbrick.datasets import load_hobbies

# 数据

corpus = load_hobbies()

vectorizer = CountVectorizer(stop_words='english') # 传入停用词参数

docs = vectorizer.fit_transform(corpus.data)

features = vectorizer.get_feature_names()

visualizer = FreqDistVisualizer(features=features, orient='v')

visualizer.fit(docs)

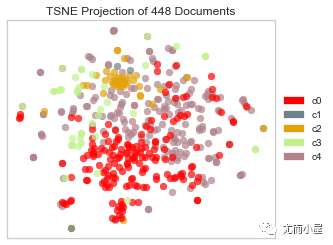

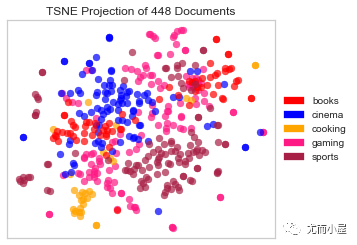

visualizer.show() 基于t-SNE的语料库可视化

基于t-SNE的语料库可视化

基础使用

t-SNE主要是对高维数据的可视化,但是它很耗时,一般也可使用SVD或者PCA

In [8]:

from sklearn.feature_extraction.text import TfidfVectorizer

from yellowbrick.text import TSNEVisualizer

from yellowbrick.datasets import load_hobbies

corpus = load_hobbies()

tfidf = TfidfVectorizer() # tfidf向量化

X = tfidf.fit_transform(corpus.data)

y = corpus.target

tsne = TSNEVisualizer()

tsne.fit(X, y)

tsne.show()基于聚类的可视化

In [9]:

from sklearn.cluster import KMeans

from sklearn.feature_extraction.text import TfidfVectorizer # 文本特征提取

from yellowbrick.text import TSNEVisualizer # TSNE可视化

from yellowbrick.datasets import load_hobbies

corpus = load_hobbies()

tfidf = TfidfVectorizer() # 向量化

X = tfidf.fit_transform(corpus.data)

clusters = KMeans(n_clusters=5)

clusters.fit(X)

tsne = TSNEVisualizer(colors=['red', '#6e828A', '#e1a20A', '#c1f28A', '#b1828A'])

tsne.fit(X, ["c{}".format(c) for c in clusters.labels_]) # clusters.labels_表示聚类的结果0 1 2 3 4

tsne.show()快速方法

In [10]:

from yellowbrick.text.tsne import tsne # 导入tsne

from sklearn.feature_extraction.text import TfidfVectorizer

from yellowbrick.datasets import load_hobbies

# 生成数据

corpus = load_hobbies()

tfidf = TfidfVectorizer()

X = tfidf.fit_transform(corpus.data)

y = corpus.target

tsne(X, y, colors=["red","blue","orange","#fe1984","#a81f45"])基于UMAP的语料可视化

先安装UMAP库:

pip install umap-learn -i https://pypi.tuna.tsinghua.edu.cn/simple基础使用

In [11]:

from sklearn.feature_extraction.text import TfidfVectorizer

from yellowbrick.datasets import load_hobbies

from yellowbrick.text import UMAPVisualizer

# 导入数据

corpus = load_hobbies()

tfidf = TfidfVectorizer()

docs = tfidf.fit_transform(corpus.data)

labels = corpus.target

# 初始文本可视化对象

umap = UMAPVisualizer(colors=['#11828A', '#Ae828A', '#d1ac8A', '#7e828A', '#51d28A'])

umap.fit(docs, labels)

umap.show()

指定metric参数,改变评价指标:

In [12]:

umap = UMAPVisualizer(metric='cosine',colors=['#11828A', '#Ae828A', '#d1ac8A', '#7e828A', '#51d28A'])

umap.fit(docs, labels)

umap.show()基于聚类的可视化

先对数据进行聚类分簇,再使用UMAP进行可视化:

In [13]:

from sklearn.cluster import KMeans

from sklearn.feature_extraction.text import TfidfVectorizer

from yellowbrick.datasets import load_hobbies

from yellowbrick.text import UMAPVisualizer

corpus = load_hobbies()

tfidf = TfidfVectorizer()

docs = tfidf.fit_transform(corpus.data)

# 先聚类

clusters = KMeans(n_clusters=5)

clusters.fit(docs)

# 指定每个簇不同的颜色

umap = UMAPVisualizer(colors=['#01828A', '#Ae828A', '#d1a28A', '#e1a28A', '#01828A'])

umap.fit(docs, ["c{}".format(c) for c in clusters.labels_]) # 使用聚类的结果

umap.show()特征分散图可视化Dispersion Plot

基础使用

一个词语的重要性可以根据它在语料库中的分散程度来决定。

In [14]:

from yellowbrick.text import DispersionPlot

from yellowbrick.datasets import load_hobbies

corpus = load_hobbies()

text = [doc.split() for doc in corpus.data]

y = corpus.target

target_words = ['points', 'money', 'score', 'win', 'reduce']

visualizer = DispersionPlot(

target_words,

colormap="Accent", # 颜色谱

title="Basic Dispersion Plot" # 标题

)

visualizer.fit(text, y)

visualizer.show()快速方法

直接使用dispersion方法:

In [15]:

from yellowbrick.text import dispersion

from yellowbrick.datasets import load_hobbies

corpus = load_hobbies()

text = [doc.split() for doc in corpus.data]

target_words = ['features', 'mobile', 'cooperative', 'competitive', 'combat', 'online']

dispersion(target_words, text, colors=['olive'])词相关性Word Correlation Plot

基础使用

In [16]:

from yellowbrick.datasets import load_hobbies

from yellowbrick.text.correlation import WordCorrelationPlot

corpus = load_hobbies()

words = ["Tatsumi Kimishima", "Nintendo", "game", "play", "man", "woman"]

viz = WordCorrelationPlot(words)

viz.fit(corpus.data) # 语料训练

viz.show()快速方法

使用word_correlation方法:

In [17]:

from yellowbrick.datasets import load_hobbies

from yellowbrick.text.correlation import word_correlation

corpus = load_hobbies()

words = ["Tatsumi Kimishima", "Nintendo", "game", "play", "man", "woman"]

# 传入待分析的词语和对应的语料

word_correlation(words,

corpus.data,

ignore_case=True, # 处理前词语先全部转成小写,即忽略大小写

cmap="", # 色谱

colorbar=True # 是否显示右侧的颜色柱

)

往期精彩回顾

适合初学者入门人工智能的路线及资料下载(图文+视频)机器学习入门系列下载机器学习及深度学习笔记等资料打印《统计学习方法》的代码复现专辑机器学习交流qq群955171419,加入微信群请扫码

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?