Flume: receiving a data stream and sinking it to AWS S3

I. Problem description

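Scenario: Flume receives data over HTTP, and the file name is written into the header of each Flume event (headers are stored as <key1,value1> pairs). Flume has to accept the data stream from the source, parse the relevant header fields, and sink the data to S3.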

Hint: the filename field parsed from the header is used as the file name, and each event message is saved as a small file of its own.
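The header lookup described in the hint can be sketched with the standard library alone. This is a minimal illustration, not the sink's actual code: the class name `ObjectKeys` and the timestamp-suffixed fallback are assumptions made here, whereas the sink shown later in this post falls back to the fixed name `defaultName`, which means events without a `filename` header would overwrite one another.

```java
import java.util.Map;

/** Hypothetical helper: derives the S3 object key from Flume event headers. */
final class ObjectKeys {
    private ObjectKeys() {}

    /**
     * Returns the "filename" header when it is present and non-blank.
     * Otherwise falls back to a name made unique by the supplied timestamp,
     * so events without a filename do not overwrite each other.
     */
    static String resolve(Map<String, String> headers, long fallbackTimestampMillis) {
        if (headers != null) {
            String name = headers.get("filename");
            if (name != null && !name.trim().isEmpty()) {
                return name.trim();
            }
        }
        // Assumption: a timestamp suffix is unique enough for this sketch.
        return "defaultName-" + fallbackTimestampMillis;
    }
}
```

Inside a sink, the call would look like `ObjectKeys.resolve(event.getHeaders(), System.currentTimeMillis())`.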

II. Approach and process

1. Based on the scenario, write the conf-file used to start the Flume agent, fang-custom-sink2s3.conf:

csuHttp.sources = r1

csuHttp.channels = c1

csuHttp.sinks = s1

# ============= Configure the source ==========

csuHttp.sources.r1.type = http

csuHttp.sources.r1.port = xxxx

csuHttp.sources.r1.bind = 172.xx.xx.xxx

csuHttp.sources.r1.handler = com.navinfo.flume.source.http.CSUBlobHandler

# ========== Configure the sink ==========

csuHttp.sinks.s1.type = flume2s3.MySinks2S3

csuHttp.sinks.s1.hdfs.rollSize = 0

csuHttp.sinks.s1.hdfs.rollCount = 1

#============= my defined var :for aws s3 ==================

csuHttp.sinks.s1.aws_access_key = my_aws_access_key

csuHttp.sinks.s1.aws_secret_key = my_aws_secret_key

csuHttp.sinks.s1.my_bucket_path = public-sss-cn-ss-sss-cc-s3

csuHttp.sinks.s1.my_endpoint = s3.cn-northwest-1.amazonaws.com.cn

csuHttp.sinks.s1.my_region = cn-northwest-1

#============= my defined var :for local s3 ==================

#csuHttp.sinks.s1.aws_access_key = xxxxxxxxxxxxxxxxxx

#csuHttp.sinks.s1.aws_secret_key = xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

#csuHttp.sinks.s1.my_bucket_path = mybucket_path/upload

##csuHttp.sinks.s1.my_region = (may be left empty)

#csuHttp.sinks.s1.my_endpoint = http://192.168.xx.xxx:xxxx

# ========== Define the channel between the HTTP source and the S3 sink ==========

csuHttp.channels.c1.type = memory

# channel store size

csuHttp.channels.c1.capacity = 1000

# transaction size

csuHttp.channels.c1.transactionCapacity = 1000

csuHttp.channels.c1.byteCapacity = 838860800000

csuHttp.channels.c1.byteCapacityBufferPercentage = 20

csuHttp.channels.c1.keep-alive = 60

# ========== Bind the sources and sinks to the channels ==========

csuHttp.sources.r1.channels = c1

csuHttp.sinks.s1.channel = c1
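Every key in the agent file follows the pattern `<agent>.<component kind>.<name>...`, so a key whose second segment is misspelled will not configure the intended component. The sketch below is a hypothetical pre-flight check (the class `AgentConfLint` is not part of Flume) that loads the file as ordinary Java properties and lists keys that do not start with the expected agent name and a known component kind. The set of kinds used here (`sources`, `channels`, `sinks`, `sinkgroups`) is an assumption and may need extending for newer Flume versions.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Properties;
import java.util.Set;
import java.util.TreeSet;

/** Hypothetical pre-flight check for a Flume agent properties file. */
final class AgentConfLint {
    // Assumption: these are the component kinds the agent file is expected to use.
    private static final Set<String> COMPONENT_KINDS =
            new HashSet<>(Arrays.asList("sources", "channels", "sinks", "sinkgroups"));

    private AgentConfLint() {}

    /** Returns, in sorted order, keys that do not match "<agentName>.<known kind>...". */
    static List<String> suspiciousKeys(String confText, String agentName) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(confText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        Set<String> bad = new TreeSet<>();
        for (String key : props.stringPropertyNames()) {
            String[] parts = key.split("\\.");
            boolean ok = parts.length >= 2
                    && parts[0].equals(agentName)
                    && COMPONENT_KINDS.contains(parts[1]);
            if (!ok) {
                bad.add(key);
            }
        }
        return new ArrayList<>(bad);
    }
}
```

For example, a key such as `csuHttp.echannels.c1.keep-alive` would be reported, because `echannels` is not a known component kind. Keys belonging to a different agent in the same file are reported as well, which is intentional in this sketch.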

2. A custom Flume sink is needed here. The sink is implemented as follows:

Java implementation:

package flume2s3;

import com.amazonaws.auth.AWSCredentials;

import com.amazonaws.auth.AWSStaticCredentialsProvider;

import com.amazonaws.auth.BasicAWSCredentials;

import com.amazonaws.client.builder.AwsClientBuilder;

import com.amazonaws.services.s3.AmazonS3;

import com.amazonaws.services.s3.AmazonS3ClientBuilder;

import com.amazonaws.services.s3.model.ObjectMetadata;

import com.amazonaws.services.s3.model.PutObjectRequest;

import org.apache.flume.*;

import org.apache.flume.conf.Configurable;

import org.apache.flume.sink.AbstractSink;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.io.ByteArrayInputStream;

import java.util.Date;

import java.util.Map;

/**

* Created by user on 2019/7/9.

*/

public class MySinks2S3 extends AbstractSink implements Configurable{

// Configuration is kept per sink instance, so two sinks of this type in one agent do not share values.

private String aws_access_key = null; // your access key

private String aws_secret_key = null; // your secret key

private String my_bucket_path = null; // your bucket name; the bucket must already exist on S3

private String my_region = null; // the AWS region

private String my_endpoint = null; // the S3 endpoint

private AmazonS3 s3Client;

@Override

public synchronized void start() { // initialize the resources used by the custom sink

super.start();

initResourceConn();

System.out.println("[fct] start(): initResourceConn finished!! ");

}

@Override

public void configure(Context context) {

aws_access_key = context.getString("aws_access_key", null);

aws_secret_key = context.getString("aws_secret_key", null);

my_bucket_path = context.getString("my_bucket_path", null);

my_region = context.getString("my_region",null);

my_endpoint = context.getString("my_endpoint",null);

// The secret key is deliberately left out of the log output.

System.out.println(" [ read flume configure] ---- aws_access_key: " + aws_access_key + "; my_bucket_path: " + my_bucket_path + "; my_endpoint: " + my_endpoint + "; my_region: " + my_region);

}

public void initResourceConn() {

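// Initialize the S3 connection object s3Client. It needs three parameters: AWS_ACCESS_KEY, AWS_SECRET_KEY, AWS_REGION

AWSCredentials awsCredentials = new BasicAWSCredentials(aws_access_key, aws_secret_key);

// This is the builder pattern: parameters are appended by chaining calls. The same style is common in Spark and Flink.

// Note: when accessing a local S3-compatible store, the signingRegion may be left empty.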

s3Client = AmazonS3ClientBuilder.standard()

.withCredentials(new AWSStaticCredentialsProvider(awsCredentials))

.withEndpointConfiguration(

new AwsClientBuilder.EndpointConfiguration(my_endpoint,my_region))

.build();

// Test whether the connection to S3 works

System.out.println("[fct] ||| [list all buckets]: " + s3Client.listBuckets()+"\n");

}

private static final Logger logger = LoggerFactory.getLogger(MySinks2S3.class);

@Override

public Status process() throws EventDeliveryException {

// TODO Auto-generated method stub

Status result = Status.READY;

Channel channel = getChannel();

Transaction transaction = channel.getTransaction();

Event event =null;

try {

transaction.begin();

event = channel.take();

if (event != null) {

byte[] eventBodyBytes = event.getBody();

Map<String, String> eventHeader = event.getHeaders();

String fileName = "defaultName";

if (eventHeader != null ) {

if (eventHeader.containsKey("filename")) {

fileName = eventHeader.get("filename");

} else {

// System.out.println("【Warn】msg not containsKey [filename] !!!");

logger.warn("[fct Warn] msg not containsKey [filename] !!!");

}

} else {

logger.warn("[fct Warn] eventHeader is null");

// System.out.println("【Warn】 eventHeader is null" );

}

ByteArrayInputStream byteArrayInputStream = new ByteArrayInputStream(eventBodyBytes);

ObjectMetadata objectMetadata = new ObjectMetadata();

objectMetadata.setContentType("application/x-protobuf");

objectMetadata.setLastModified(new Date());

objectMetadata.setContentLength(eventBodyBytes.length);

PutObjectRequest putObjectRequest = new PutObjectRequest(my_bucket_path, fileName,byteArrayInputStream,objectMetadata );

s3Client.putObject(putObjectRequest);

// s3Client.putObject(bucketName, fileName, eventBody);

// System.out.println("put byte successfully!! ---fileName: " + fileName);

logger.info("put byte successfully!! ---fileName: " + fileName);

} else {

// No event found, request back-off semantics from the sink runner

result = Status.BACKOFF;

}

transaction.commit();

// System.out.println("transaction commit...");

} catch (Exception ex) {

transaction.rollback();

System.out.println("transaction rollback...");

String errorMsg = "Failed to publish event: " + event;

logger.error(errorMsg);

System.out.println("exception msg:" + ex.getMessage());

throw new EventDeliveryException(errorMsg, ex);

} finally {

transaction.close();

// System.out.println("transaction close...");

}

return result;

}

@Override

public synchronized void stop() { // release resources on shutdown

super.stop();

s3Client.shutdown();

System.out.println("fct|| stop(): s3Client.shutdown();");

}

}
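Because `configure()` reports what it read from the agent file, it is worth keeping credentials out of the console and log files. Where some hint of the configured value is still useful for debugging, a masking helper along the following lines could be used. This is a sketch under assumptions: the class `Secrets` and the choice of how many characters stay visible are made up for illustration.

```java
/** Hypothetical helper for logging credentials without revealing them. */
final class Secrets {
    private Secrets() {}

    /**
     * Keeps at most a short prefix of the secret (never more than a quarter
     * of it, capped at 4 characters) and replaces the rest with a fixed
     * number of asterisks, so the output does not reveal the real length.
     */
    static String mask(String secret) {
        if (secret == null || secret.isEmpty()) {
            return "<unset>";
        }
        int visible = Math.min(4, secret.length() / 4);
        return secret.substring(0, visible) + "****";
    }
}
```

In the sink this would be used as `Secrets.mask(aws_secret_key)` when building the log message.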

Package this Java program as the jar FangFlume2S3-1.0-SNAPSHOT.jar, then put the jar into the lib directory on Flume's classpath.

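III. Finally, start Flume with the corresponding configuration file.

## From the Flume installation directory, start the agent with the corresponding configuration file (the -f argument must point to the conf file written in step 1).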

./bin/flume-ng agent --conf ./conf -f ./conf/fang-custom-sink2s3_aws3.conf -n csuHttp -Dflume.root.logger=INFO,console

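IV. Common errors and warnings

When uploading files to S3 from a local machine, mixing AWS SDK artifacts of different versions causes a version mismatch: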

<dependency>

<groupId>com.amazonaws</groupId>

<artifactId>aws-java-sdk-s3</artifactId>

<version>1.11.347</version>

</dependency>

<dependency>

<groupId>com.amazonaws</groupId>

<artifactId>aws-java-sdk</artifactId>

<version>1.7.4</version>

</dependency>

The following error appears:

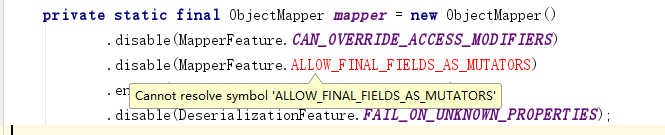

java.lang.NoSuchFieldError: ALLOW_FINAL_FIELDS_AS_MUTATORS

at com.amazonaws.partitions.PartitionsLoader.<clinit>(PartitionsLoader.java:52)

at com.amazonaws.regions.RegionMetadataFactory.create(RegionMetadataFactory.java:30)

at com.amazonaws.regions.RegionUtils.initialize(RegionUtils.java:64)

at com.amazonaws.regions.RegionUtils.getRegionMetadata(RegionUtils.java:52)

at com.amazonaws.regions.RegionUtils.getRegion(RegionUtils.java:105)

at com.amazonaws.client.builder.AwsClientBuilder.getRegionObject(AwsClientBuilder.java:249)

at com.amazonaws.client.builder.AwsClientBuilder.withRegion(AwsClientBuilder.java:238)

at mys3test.UploadTest.<clinit>(UploadTest.java:34)

Exception in thread "main"

Process finished with exit code 1

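Analysis:

The expected field does not exist. Checking the source code and searching for the error leads to the following reports.

See the article: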

https://www.e-learn.cn/content/wangluowenzhang/488554

Could not initialize class com.amazonaws.partitions.PartitionsLoader

[java.lang.NoSuchFieldError: ALLOW_FINAL_FIELDS_AS_MUTATORS when I download file from AmazonS3 [duplicate]]

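The initial diagnosis is a version mismatch, specifically a wrong version of the Jackson library pulled in by the AWS dependencies. Further checking showed that the aws-java-sdk Maven artifact was declared at too low a version (1.7.4). Either change it to the same version as the aws-java-sdk-s3 artifact (1.11.347), or remove the aws-java-sdk artifact and keep only aws-java-sdk-s3 (1.11.347).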

<dependency>

<groupId>com.amazonaws</groupId>

<artifactId>aws-java-sdk-s3</artifactId>

<version>1.11.347</version>

</dependency>

<!-- <dependency>

<groupId>com.amazonaws</groupId>

<artifactId>aws-java-sdk</artifactId>

<version>1.7.4</version>

</dependency>-->
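To confirm a mismatch of this kind before changing the pom, it can help to ask the running JVM which jar a class came from and whether the field named in the `NoSuchFieldError` exists. The sketch below uses only reflection from the standard library. The class name `ClasspathProbe` is hypothetical, and the assumption that `ALLOW_FINAL_FIELDS_AS_MUTATORS` lives on Jackson's `com.fasterxml.jackson.databind.MapperFeature` is based on the Jackson API as I understand it, not on anything stated in the stack trace.

```java
import java.security.CodeSource;

/** Hypothetical diagnostic for dependency version conflicts. */
final class ClasspathProbe {
    private ClasspathProbe() {}

    /** True when the named class is loadable and exposes a public field with this name. */
    static boolean hasPublicField(String className, String fieldName) {
        try {
            Class.forName(className).getField(fieldName);
            return true;
        } catch (ClassNotFoundException | NoSuchFieldException | LinkageError e) {
            return false;
        }
    }

    /** Location (jar or directory) the class was loaded from, or a note when it is unknown. */
    static String loadedFrom(String className) {
        try {
            CodeSource src = Class.forName(className).getProtectionDomain().getCodeSource();
            return src == null ? "<bootstrap or unknown>" : String.valueOf(src.getLocation());
        } catch (ClassNotFoundException | LinkageError e) {
            return "<not on classpath>";
        }
    }
}
```

Printing `ClasspathProbe.loadedFrom("com.fasterxml.jackson.databind.MapperFeature")` together with `ClasspathProbe.hasPublicField("com.fasterxml.jackson.databind.MapperFeature", "ALLOW_FINAL_FIELDS_AS_MUTATORS")` from the failing program should show which Jackson jar wins on the classpath and whether it is new enough for the AWS SDK in use.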

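This article covered receiving a data stream with Flume and sinking it to AWS S3: the scenario (receive HTTP data, parse header fields, sink to S3), the approach (write the conf-file, implement a custom sink, package it, and place the jar in the lib directory), and starting the agent with the configuration file. It also described a common error, a dependency version mismatch, and how to resolve it.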
