Understanding the LSTM layer

If return_sequences=True, the layer returns the output at every time step. Otherwise it returns only the output of the last time step.

https://www.cnblogs.com/wzdLY/p/10071262.html

return_sequences and return_state

https://blog.youkuaiyun.com/u011327333/article/details/78501054
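To make the two flags concrete, here is a minimal NumPy sketch of an LSTM forward pass. It is not the Keras implementation. The function name `lstm_forward`, the packed weight layout and the gate order i, f, c, o are assumptions made for illustration. It shows that with return_sequences=False the output is just the last slice of the full sequence, and that with return_state=True the layer also returns the final hidden state h and cell state c, with h equal to that last output.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_forward(x, W, U, b, return_sequences=False, return_state=False):
    """Minimal LSTM forward pass (hypothetical sketch, not Keras source).

    x: (batch, timesteps, input_dim)
    W: (input_dim, 4 * units), U: (units, 4 * units), b: (4 * units,)
    Gates are packed in the assumed order i, f, c, o.
    """
    batch, timesteps, _ = x.shape
    units = U.shape[0]
    h = np.zeros((batch, units))  # hidden state
    c = np.zeros((batch, units))  # cell state
    outputs = []
    for t in range(timesteps):
        z = x[:, t, :] @ W + h @ U + b
        i = sigmoid(z[:, :units])                  # input gate
        f = sigmoid(z[:, units:2 * units])         # forget gate
        g = np.tanh(z[:, 2 * units:3 * units])     # candidate cell values
        o = sigmoid(z[:, 3 * units:])              # output gate
        c = f * c + i * g
        h = o * np.tanh(c)
        outputs.append(h)
    seq = np.stack(outputs, axis=1)                # (batch, timesteps, units)
    out = seq if return_sequences else seq[:, -1, :]
    if return_state:
        return out, h, c                           # [output, state_h, state_c]
    return out

rng = np.random.default_rng(0)
units = 4
x = rng.normal(size=(2, 5, 3))                     # batch=2, 5 steps, 3 features
W = rng.normal(size=(3, 4 * units))
U = rng.normal(size=(units, 4 * units))
b = np.zeros(4 * units)

full = lstm_forward(x, W, U, b, return_sequences=True)
last, state_h, state_c = lstm_forward(x, W, U, b, return_state=True)
print(full.shape)  # (2, 5, 4)
print(last.shape)  # (2, 4)
print(np.allclose(last, full[:, -1, :]), np.allclose(last, state_h))  # True True
```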

Models

https://keras.io/zh/getting-started/functional-api-guide/

http://t.zoukankan.com/USTC-ZCC-p-11319702.html

Convolution (Conv1D, Conv2D)

https://keras.io/zh/layers/convolutional/

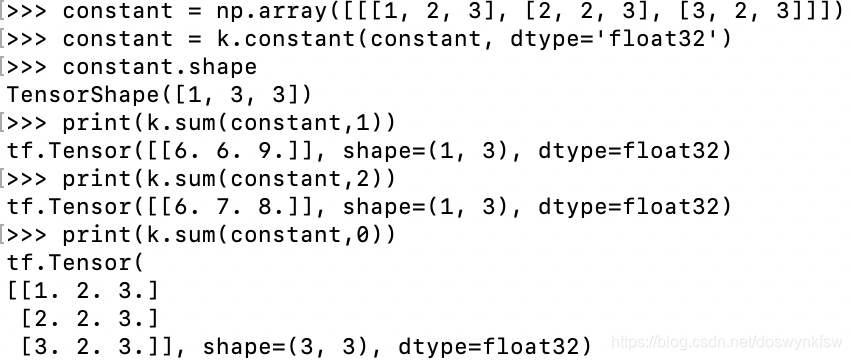
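As a rough illustration of what Conv1D computes, here is a NumPy sketch of a single-sample forward pass with padding='valid' and strides=1 (the Keras defaults). The batch axis is omitted for brevity. The kernel uses the (kernel_size, input_dim, filters) layout of a Keras Conv1D kernel. As in Keras, the operation is a cross-correlation: the kernel is not flipped. The helper name `conv1d_valid` is hypothetical.

```python
import numpy as np

def conv1d_valid(x, kernel, bias):
    """Conv1D forward pass, padding='valid', strides=1 (hypothetical sketch).

    x:      (steps, input_dim)            single sample, no batch axis
    kernel: (kernel_size, input_dim, filters)
    bias:   (filters,)
    returns (steps - kernel_size + 1, filters)
    """
    k = kernel.shape[0]
    out_len = x.shape[0] - k + 1
    out = np.empty((out_len, kernel.shape[2]))
    for t in range(out_len):
        window = x[t:t + k]  # (kernel_size, input_dim)
        # Multiply the window by the kernel and sum over (kernel_size, input_dim)
        out[t] = np.tensordot(window, kernel, axes=([0, 1], [0, 1])) + bias
    return out

x = np.arange(10, dtype=float).reshape(5, 2)  # 5 steps, 2 channels
kernel = np.ones((3, 2, 1))                   # kernel_size=3, 1 filter
y = conv1d_valid(x, kernel, np.zeros(1))
print(y.shape)    # (3, 1)
print(y.ravel())  # [15. 27. 39.]
```

With 'valid' padding, the output length shrinks from 5 to 5 - 3 + 1 = 3.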

K.sum

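import numpy as np
import keras.backend as K  # Keras 2.x backend API (K.constant, K.sum)
# A tensor of shape (2, 2, 2)
cons = np.array([[[1, 2], [1, 2]], [[2, 3], [2, 3]]])
cons = K.constant(cons, dtype='float32')
# Sum over axis 1; keepdims decides whether the reduced axis is kept
s = K.sum(cons, axis=1)                       # shape (2, 2): [[2, 4], [4, 6]]
s_keep = K.sum(cons, axis=1, keepdims=True)   # shape (2, 1, 2)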

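In K.sum, keepdims=True keeps each reduced axis as a dimension of size 1, so the result has the same number of dimensions as the input. With keepdims=False (the default), the reduced axis is dropped.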
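NumPy's np.sum follows the same axis/keepdims semantics as K.sum, so the effect can be checked without installing Keras. This sketch reuses the tensor from the note above. It also shows the usual reason to set keepdims=True: the result broadcasts back against the input correctly, for example when normalizing along the summed axis.

```python
import numpy as np

cons = np.array([[[1, 2], [1, 2]], [[2, 3], [2, 3]]], dtype=np.float32)  # (2, 2, 2)

s = np.sum(cons, axis=1)                      # reduced axis dropped
s_keep = np.sum(cons, axis=1, keepdims=True)  # reduced axis kept with size 1

print(s.shape, s_keep.shape)  # (2, 2) (2, 1, 2)
print(s.tolist())             # [[2.0, 4.0], [4.0, 6.0]]

# keepdims=True lines the sums up with the axis they came from,
# so each slice along axis 1 now sums to 1.
normalized = cons / s_keep
print(normalized.sum(axis=1).tolist())  # [[1.0, 1.0], [1.0, 1.0]]
```

Dividing by `s` instead of `s_keep` would still broadcast without error. However, it would pair the sums with the wrong axis.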

Embedding

For the Embedding layer, see this blog post.
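A Keras Embedding layer maps each integer index to one row of a trainable weight matrix of shape (input_dim, output_dim). The NumPy sketch below reproduces only that forward lookup, with no training. It uses made-up sizes and a random matrix standing in for the learned weights. It also checks that the lookup equals multiplying a one-hot encoding by the matrix.

```python
import numpy as np

rng = np.random.default_rng(0)
input_dim, output_dim = 10, 4                     # vocabulary size, vector size
table = rng.normal(size=(input_dim, output_dim))  # stands in for the trained weights

token_ids = np.array([[1, 5, 5], [0, 9, 2]])      # (batch=2, seq_len=3) integer ids
vectors = table[token_ids]                        # row lookup -> (2, 3, 4)

print(vectors.shape)                                  # (2, 3, 4)
print(np.array_equal(vectors[0, 1], vectors[0, 2]))   # True: same id, same vector

# Equivalent (but wasteful) formulation: one-hot vectors times the matrix
one_hot = np.eye(input_dim)[token_ids]            # (2, 3, 10)
print(np.allclose(one_hot @ table, vectors))      # True
```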

K.rnn

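K.rnn provides the recurrent loop: it applies a step function to each time step of the input in turn, carrying the states from one step to the next.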

https://zhuanlan.zhihu.com/p/94346685
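The core of that loop can be sketched in plain NumPy. `rnn_loop` is a hypothetical, simplified imitation rather than the Keras source. It ignores masking, go_backwards and unrolling. It does keep the K.rnn contract: step_function(x_t, states) returns (output_t, new_states), and the loop returns the triple (last_output, outputs, new_states). The step function used here is a toy running sum.

```python
import numpy as np

def rnn_loop(step_function, inputs, initial_states):
    """Simplified imitation of the loop inside K.rnn (hypothetical sketch).

    step_function(x_t, states) -> (output_t, new_states)
    inputs: (batch, timesteps, ...)
    returns (last_output, outputs, states)
    """
    states = list(initial_states)
    outputs = []
    for t in range(inputs.shape[1]):
        output_t, states = step_function(inputs[:, t], states)
        outputs.append(output_t)
    outputs = np.stack(outputs, axis=1)  # (batch, timesteps, ...)
    return outputs[:, -1], outputs, states

# Toy step function: a running sum, h_t = h_{t-1} + x_t
def cumulative_step(x_t, states):
    h = states[0] + x_t
    return h, [h]

x = np.arange(6, dtype=float).reshape(1, 3, 2)  # batch=1, 3 steps, 2 features
last, outs, states = rnn_loop(cumulative_step, x, [np.zeros((1, 2))])
print(outs[0].tolist())  # [[0.0, 1.0], [2.0, 4.0], [6.0, 9.0]]
print(last.tolist())     # [[6.0, 9.0]]
```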

What the call method does

https://blog.youkuaiyun.com/Acecai01/article/details/125277208

How build and call are invoked

https://www.5axxw.com/questions/content/u55cte

https://www.meiwen.com.cn/subject/tyjzdqtx.html (build is called automatically, once, the first time the layer is called on an input, before call runs)
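The ordering can be imitated in plain Python. `MiniLayer` is a hypothetical stand-in, not the real Keras Layer class. It only mimics the pattern in which the layer's `__call__` checks a `built` flag, runs `build(input_shape)` once to create state, and then runs `call(inputs)` for the actual computation on every invocation.

```python
class MiniLayer:
    """Hypothetical imitation of how a Keras Layer's __call__ triggers
    build() once before call(). Not the real Keras source."""

    def __init__(self):
        self.built = False
        self.log = []

    def build(self, input_shape):
        # In a real custom layer, weights would be created here
        self.log.append(f"build{input_shape}")
        self.built = True  # later calls skip build

    def call(self, inputs):
        # The forward computation
        self.log.append("call")
        return [2 * v for v in inputs]

    def __call__(self, inputs):
        if not self.built:
            self.build((len(inputs),))
        return self.call(inputs)

layer = MiniLayer()
layer([1, 2, 3])
layer([4, 5, 6])
print(layer.log)  # ['build(3,)', 'call', 'call']
```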

Debugging

http://sujitpal.blogspot.com/2017/10/debugging-keras-networks.html

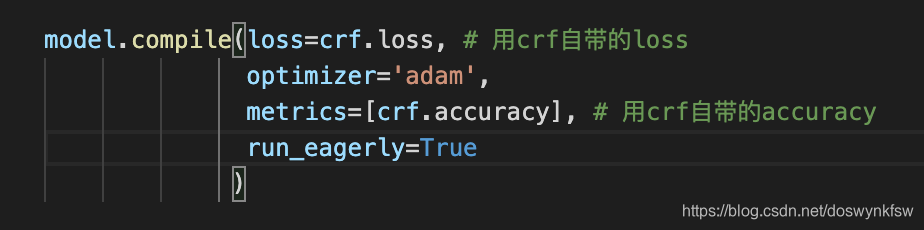

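For debugging, turn on eager execution by passing the following to model.compile():

run_eagerly=True

The model then runs call() as ordinary Python instead of a compiled graph, so breakpoints and print() statements inside it take effect.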

LSTM and Convolutional Networks Explained


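This article covers how LSTM and convolutional layers work. It explains how parameter settings control which time-step outputs are returned and how convolutional layers are applied. It also shows how to use key functions such as K.sum and discusses what the Embedding layer does.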

