先放上理想曲线:

几种方法代码:

#!usr/bin/python

#codeing: utf-8

'''

Create on 2018-08-09

Author: Gunther17

Ctrl + 1: 注释/反注释

Ctrl + 4/5: 块注释/块反注释

Ctrl + L: 跳转到行号

Tab/Shift + Tab: 代码缩进/反缩进

Ctrl +I:显示帮助

'''

import os.path

import csv

import time

import numpy as np

import pandas as pd

from sklearn.decomposition import PCA

from sklearn.neighbors import KNeighborsClassifier

from sklearn.metrics import accuracy_score

from sklearn.tree import DecisionTreeClassifier

from sklearn.ensemble import RandomForestClassifier

from sklearn.svm import SVC

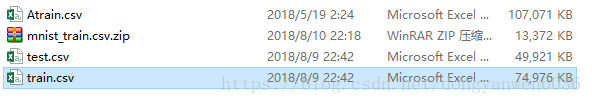

data_dir='D:\data Competition\digit-recognizer/'

#加载数据

def opencsv():

data_train=pd.read_csv(os.path.join(data_dir,'input/train.csv'))

data_test=pd.read_csv(os.path.join(data_dir,'input/test.csv'))

train_data=data_train.values[0:,1:] #读入全部训练数据, [行,列]

train_label=data_train.values[0:,0] # 读取列表的第一列

test_data=data_test.values[0:,0:] # 测试全部测试个数据

return train_data,train_label,test_data

def saveResults(result,csvName):

with open(csvName,'w') as myfile:

'''

创建记录输出结果的文件(w 和 wb 使用的时候有问题)

python3里面对 str和bytes类型做了严格的区分,不像python2里面某些函数里可以混用。

所以用python3来写wirterow时,打开文件不要用wb模式,只需要使用w模式,然后带上newline=''

'''

mywrite=csv.writer(myfile)

mywrite.writerow(["ImageId","Label"])

index=0

for r in result:

index+=1

mywrite.writerow([index,int(r)])

print('Saved successfully....')

def knnClassify(traindata,trainlabel):

print('Train knn...')

knnClf=KNeighborsClassifier() # default:k = 5,defined by yourself:KNeighborsClassifier(n_neighbors=10)

knnClf.fit(traindata,np.ravel(trainlabel))# ravel/flattened 返回1维 array,其中flatten函数返回的是拷贝。.

return knnClf

def dtClassify(traindata,trainlabel):

print('Train decision tree...')

dtClf=DecisionTreeClassifier()

dtClf.fit(traindata,np.ravel(trainlabel))

return dtClf

def rfClassify(traindata,trainlabel):

print('Train Random forest...')

rfClf=RandomForestClassifier()

rfClf.fit(traindata,np.ravel(trainlabel))

return rfClf

def svmClassify(traindata,trainlabel):

print('Train svm...')

svmClf=SVC(C=4,kernel='rbf')

svmClf.fit(traindata,np.ravel(trainlabel))

return svmClf

def dpPCA(x_train,x_test,Com):

print('dimension reduction....')

trainData=np.array(x_train)

testData=np.array(x_test)

'''

n_components>=1

n_components=NUM 设置占特征数量比

0 < n_components < 1

n_components=0.99 设置阈值总方差占比

'''

pca=PCA(n_components=Com,whiten=False)

pca.fit(trainData) #fit the model with X

pcaTrainData=pca.transform(trainData)# 在 X上进行降维

pcaTestData=pca.transform(testData)

#pca 方差大小 方差占比 特征数量

# print(pca.explained_variance_,'\n',pca.explained_variance_ratio_,'\n',pca.components_)

return pcaTrainData,pcaTestData

def dRecognition_knn():

start_time=time.time()

#load data

trainData,trainLabel,testData=opencsv()

print('load data finish...')

stop_time1=time.time()

print('load data take: %f' %(stop_time1-start_time))

#dimension reduction

trainData,testData=dpPCA(trainData,testData,0.8)

knnClf=knnClassify(trainData,trainLabel)

dtClf=dtClassify(trainData,trainLabel)

rfClf=rfClassify(trainData,trainLabel)

svmClf=svmClassify(trainData,trainLabel)

trainlabel_knn=knnClf.predict(trainData)

trainlabel_dt=dtClf.predict(trainData)

trainlabel_rf=rfClf.predict(trainData)

trainlabel_svm=svmClf.predict(trainData)

knn_acc=accuracy_score(trainLabel,trainlabel_knn)

print('knn train accscore:%f'%(knn_acc))

dt_acc=accuracy_score(trainLabel,trainlabel_dt)

print('dt train accscore:%f'%(dt_acc))

rf_acc=accuracy_score(trainLabel,trainlabel_rf)

print('rf train accscore:%f'%(rf_acc))

svm_acc=accuracy_score(trainLabel,trainlabel_svm)

print('svm train accscore:%f'%(svm_acc))

testLabel_knn=knnClf.predict(testData)

testLabel_dt=dtClf.predict(testData)

testLabel_rf=rfClf.predict(testData)

testLabel_svm=svmClf.predict(testData)

saveResults(testLabel_knn,os.path.join(data_dir,'output/Result_knn.csv'))

saveResults(testLabel_dt,os.path.join(data_dir,'output/Result_dt.csv'))

saveResults(testLabel_rf,os.path.join(data_dir,'output/Result_rf.csv'))

saveResults(testLabel_svm,os.path.join(data_dir,'output/Result_svm.csv'))

print('knn dt rf svm process finished ....')

stop_time2=time.time()

print('knn classification take:%f' %(stop_time2-start_time))

if __name__=='__main__':

dRecognition_knn()
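The `dpPCA` comment distinguishes an integer `n_components` (the number of components kept) from a float in (0, 1) (a variance threshold, which is how the script calls `dpPCA(..., 0.8)`). A minimal sketch of the difference, assuming a synthetic low-rank matrix in place of the 784 MNIST pixel columns so it runs without the Kaggle files:

```python
import numpy as np
from sklearn.decomposition import PCA

# Synthetic stand-in data (an assumption for illustration): 500 samples with
# 50 features generated from 5 latent factors plus a little noise.
rng = np.random.default_rng(0)
latent = rng.normal(size=(500, 5))
mixing = rng.normal(size=(5, 50))
X = latent @ mixing + 0.1 * rng.normal(size=(500, 50))

# Float in (0, 1): keep the smallest number of components whose
# cumulative explained variance exceeds this fraction.
pca_frac = PCA(n_components=0.8).fit(X)
print(pca_frac.n_components_, pca_frac.explained_variance_ratio_.sum())

# Integer >= 1: keep exactly that many components.
pca_int = PCA(n_components=3).fit(X)
print(pca_int.n_components_)
```

With the float form, `n_components_` is decided at fit time, so the number of features passed on to the classifiers depends on the data rather than on a fixed setting.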

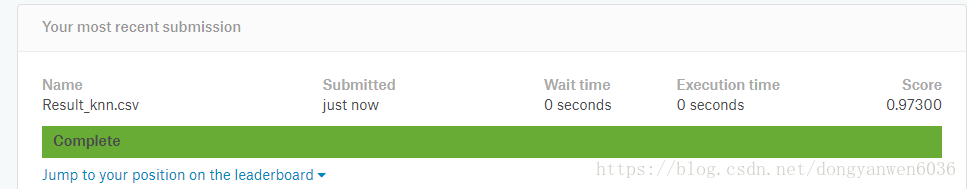
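`saveResults` explains why the output file should be opened in `'w'` mode with `newline=''`. A self-contained sketch of the same writer, using a hypothetical `save_results` helper and a temporary file instead of the `output/` folder; `enumerate(..., start=1)` produces the 1-based `ImageId` column:

```python
import csv
import os
import tempfile


def save_results(predictions, csv_name):
    """Hypothetical helper: header row, then ImageId (1-based) and Label."""
    # newline='' stops the csv module's '\r\n' row terminator from being
    # translated again on Windows, which would otherwise produce blank rows.
    with open(csv_name, 'w', newline='') as f:
        writer = csv.writer(f)
        writer.writerow(["ImageId", "Label"])
        for image_id, label in enumerate(predictions, start=1):
            writer.writerow([image_id, int(label)])


path = os.path.join(tempfile.mkdtemp(), "Result_demo.csv")
save_results([7, 2, 1.0], path)
with open(path, newline='') as f:
    rows = list(csv.reader(f))
print(rows)  # [['ImageId', 'Label'], ['1', '7'], ['2', '2'], ['3', '1']]
```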

Finally, the kNN plots:

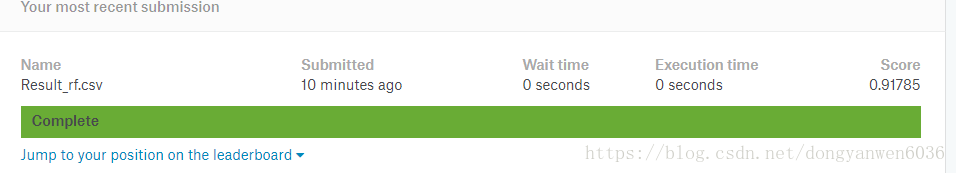

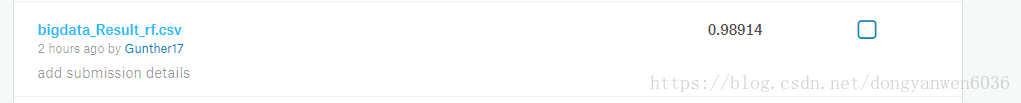
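`dRecognition_knn` prints accuracy measured on the same rows the models were trained on, which overstates how well they generalize: for kNN each training point is one of its own neighbours, and an unpruned decision tree can memorize the training set. A sketch of a held-out check, assuming scikit-learn's bundled 8x8 `load_digits` data as a stand-in for `train.csv` (a different, smaller dataset, so the numbers will not match the Kaggle ones):

```python
from sklearn.datasets import load_digits
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

# Stand-in data: scikit-learn's bundled 8x8 digits, not the Kaggle CSVs.
X, y = load_digits(return_X_y=True)
X_tr, X_val, y_tr, y_val = train_test_split(X, y, test_size=0.1, random_state=2)

scores = {}
for name, clf in [("knn", KNeighborsClassifier()),              # k = 5, as in knnClassify
                  ("dt", DecisionTreeClassifier(random_state=0))]:
    clf.fit(X_tr, y_tr)
    train_acc = accuracy_score(y_tr, clf.predict(X_tr))  # what the script reports
    val_acc = accuracy_score(y_val, clf.predict(X_val))  # rows the model never saw
    scores[name] = (train_acc, val_acc)
    print('%s train acc: %.4f  validation acc: %.4f' % (name, train_acc, val_acc))
```

The validation column is the closer proxy for a leaderboard score; the training column mostly reflects how much each model can memorize.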

Random forest plot:

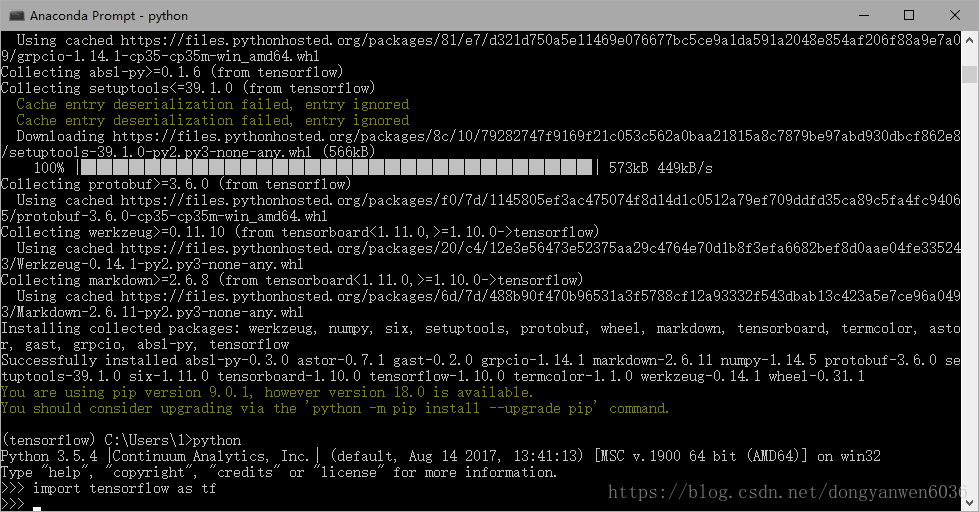
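Every classifier wrapper in the first script passes the labels through `np.ravel`, and the comment in `knnClassify` contrasts it with `flatten`. A small illustration on a hypothetical `(n, 1)` label column; in the script itself `data_train.values[0:,0]` is already 1-D, so `np.ravel` only acts as a safeguard there:

```python
import numpy as np

labels = np.arange(6).reshape(6, 1)  # hypothetical (n, 1) column of labels

r = np.ravel(labels)   # 1-D; a view of the same memory when possible
f = labels.flatten()   # 1-D; always a fresh copy

print(r.shape, f.shape)             # (6,) (6,)
print(np.shares_memory(r, labels))  # True
print(np.shares_memory(f, labels))  # False

r[0] = 99              # writing through the view also changes the original
print(labels[0, 0])    # 99
```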

Method 2: CNN (to be updated):

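Environment: Windows 10, 64-bit. Python raised:

ImportError: No module named tensorflow.python

So the real task is installing TensorFlow with Anaconda on Windows 10.

Solution: TensorFlow on Windows requires 64-bit Python 3 (this setup used Python 3.5 at the time); it does not run on Python 2.7.

Install TensorFlow with Anaconda using the conda command; this installs the CPU version of TensorFlow.

I uninstalled the previous Python 2.7.

Test succeeded!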
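Since the error came down to which interpreter was running, a quick way to see which Python version, bitness, and environment a script actually uses, and whether `tensorflow` can be found from it. `tensorflow_env_report` is a hypothetical helper using only the standard library; `importlib.util.find_spec` locates a top-level package without importing it:

```python
import importlib.util
import struct
import sys


def tensorflow_env_report():
    """Hypothetical helper: interpreter facts behind 'No module named tensorflow...'."""
    return {
        "python": "%d.%d.%d" % sys.version_info[:3],
        "bits": struct.calcsize("P") * 8,     # pointer size: 64 on a 64-bit interpreter
        "python3": sys.version_info[0] == 3,
        "tensorflow_found": importlib.util.find_spec("tensorflow") is not None,
        "executable": sys.executable,         # which Python / conda env is running
    }


for key, value in tensorflow_env_report().items():
    print(key, value)
```

If `executable` points at the old Python 2.7 install or `bits` is 32, the import fails no matter what conda installed into another environment.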

CNN code:

#!/usr/bin/python

# -*- coding: utf-8 -*-

"""

Created on Sat Aug 11 11:03:20 2018

@author: Gunther17

"""

import numpy as np

import pandas as pd

from sklearn.model_selection import train_test_split

import os

from keras.callbacks import ReduceLROnPlateau

from keras.layers import Conv2D, Dense, Dropout, Flatten, MaxPool2D

from keras.models import Sequential

from keras.optimizers import RMSprop

from keras.preprocessing.image import ImageDataGenerator

from keras.utils.np_utils import to_categorical # convert to one-hot-encoding

np.random.seed(2)

# 数据路径

data_dir = 'D:\data Competition\digit-recognizer/'

# Load the data

train = pd.read_csv(os.path.join(data_dir, 'input/train.csv'))

test = pd.read_csv(os.path.join(data_dir, 'input/test.csv'))

X_train = train.values[:, 1:]

Y_train = train.values[:, 0]

test = test.values

# Normalization

X_train = X_train / 255.0

test = test / 255.0

# Reshape image in 3 dimensions (height = 28px, width = 28px , canal = 1)

X_train = X_train.reshape(-1, 28, 28, 1)

test = test.reshape(-1, 28, 28, 1)

# Encode labels to one hot vectors (ex : 2 -> [0,0,1,0,0,0,0,0,0,0])

Y_train = to_categorical(Y_train, num_classes=10)

# Set the random seed

random_seed = 2

# Split the train and the validation set for the fitting

X_train, X_val, Y_train, Y_val = train_test_split(

X_train, Y_train, test_size=0.1, random_state=random_seed)

# Set the CNN model

# my CNN architechture is In -> [[Conv2D->relu]*2 -> MaxPool2D -> Dropout]*2 -> Flatten -> Dense -> Dropout -> Out

model = Sequential()

model.add(

Conv2D(

filters=32,

kernel_size=(5, 5),

padding='Same',

activation='relu',

input_shape=(28, 28, 1)))

model.add(

Conv2D(

filters=32, kernel_size=(5, 5), padding='Same', activation='relu'))

model.add(MaxPool2D(pool_size=(2, 2)))

model.add(Dropout(0.25))

model.add(

Conv2D(

filters=64, kernel_size=(3, 3), padding='Same', activation='relu'))

model.add(

Conv2D(

filters=64, kernel_size=(3, 3), padding='Same', activation='relu'))

model.add(MaxPool2D(pool_size=(2, 2), strides=(2, 2)))

model.add(Dropout(0.25))

model.add(Flatten())

model.add(Dense(256, activation="relu"))

model.add(Dropout(0.5))

model.add(Dense(10, activation="softmax"))

# Define the optimizer

optimizer = RMSprop(lr=0.001, rho=0.9, epsilon=1e-08, decay=0.0)

# Compile the model

model.compile(

optimizer=optimizer, loss="categorical_crossentropy", metrics=["accuracy"])

epochs = 30

batch_size = 86

# Set a learning rate annealer

learning_rate_reduction = ReduceLROnPlateau(

monitor='val_acc', patience=3, verbose=1, factor=0.5, min_lr=0.00001)

datagen = ImageDataGenerator(

featurewise_center=False, # set input mean to 0 over the dataset

samplewise_center=False, # set each sample mean to 0

featurewise_std_normalization=False, # divide inputs by std of the dataset

samplewise_std_normalization=False, # divide each input by its std

zca_whitening=False, # apply ZCA whitening

rotation_range=10, # randomly rotate images in the range (degrees, 0 to 180)

zoom_range=0.1, # Randomly zoom image

width_shift_range=0.1, # randomly shift images horizontally (fraction of total width)

height_shift_range=0.1, # randomly shift images vertically (fraction of total height)

horizontal_flip=False, # randomly flip images

vertical_flip=False) # randomly flip images

datagen.fit(X_train)

history = model.fit_generator(

datagen.flow(

X_train, Y_train, batch_size=batch_size),

epochs=epochs,

validation_data=(X_val, Y_val),

verbose=2,

steps_per_epoch=X_train.shape[0] // batch_size,

callbacks=[learning_rate_reduction])

# predict results

results = model.predict(test)

# select the indix with the maximum probability

results = np.argmax(results, axis=1)

results = pd.Series(results, name="Label")

submission = pd.concat(

[pd.Series(

range(1, 28001), name="ImageId"), results], axis=1)

submission.to_csv(os.path.join(data_dir, 'output/Result_keras_CNN.csv'),index=False)

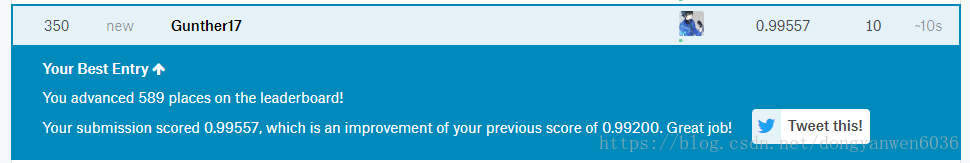

print('finished')- 贴上结果:
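As a sanity check on the architecture comment `In -> [[Conv2D->relu]*2 -> MaxPool2D -> Dropout]*2 -> Flatten -> Dense -> Dropout -> Out`, the feature-map sizes and trainable-parameter counts can be worked out by hand. A sketch with hypothetical helper names, assuming `'same'` padding with stride 1 for every convolution and 2x2 pooling as in the code; Dropout, pooling, and Flatten have no weights, so the total should agree with `model.summary()` for this model:

```python
def conv_params(k, c_in, filters):
    """Hypothetical helper: k x k kernel weights per input channel, plus one bias per filter."""
    return (k * k * c_in + 1) * filters


def dense_params(n_in, n_out):
    """Hypothetical helper: full weight matrix plus one bias per output unit."""
    return (n_in + 1) * n_out


layers = []
h = w = 28
c = 1
for k, filters in [(5, 32), (5, 32)]:
    layers.append(("Conv2D %dx%d" % (k, k), conv_params(k, c, filters)))
    c = filters                 # 'same' padding keeps h and w unchanged
h, w = h // 2, w // 2           # MaxPool2D 2x2: 28 -> 14
for k, filters in [(3, 64), (3, 64)]:
    layers.append(("Conv2D %dx%d" % (k, k), conv_params(k, c, filters)))
    c = filters
h, w = h // 2, w // 2           # MaxPool2D 2x2: 14 -> 7
flat = h * w * c                # Flatten: 7 * 7 * 64 = 3136
layers.append(("Dense 256", dense_params(flat, 256)))
layers.append(("Dense 10", dense_params(256, 10)))

for name, n in layers:
    print("%-12s %8d" % (name, n))
total = sum(n for _, n in layers)
print("total", total)  # 887530
```

Most of the weights sit in the first Dense layer (803,072 of 887,530).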

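- The trick is meaningless: it simply trains on MNIST, a dataset whose labels are already known (the competition's images come from it). From here on, treat it as just for fun...

  Train on Atrain.csv, then run the test.

  The data is large, so it takes a long time to run.

- If the CNN is trained on all the data, the trick scores 0.99942 (see 2).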
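The CNN script relies on two inverse steps: `to_categorical` turns integer labels into one-hot rows for training, and `np.argmax(..., axis=1)` turns predicted probability rows back into labels for the submission. A NumPy/pandas-only sketch of the round trip, assuming `np.eye(10)[labels]` as a stand-in for `to_categorical` (same layout for integer labels 0 to 9) and made-up probabilities in place of `model.predict`:

```python
import numpy as np
import pandas as pd

labels = np.array([2, 0, 9, 4])
num_classes = 10

# One-hot encoding: row i of the identity matrix for label i.
one_hot = np.eye(num_classes, dtype="float32")[labels]
print(one_hot[0])  # [0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

# Made-up "softmax outputs": 0.91 at the true class, 0.01 elsewhere (rows sum to 1).
probs = 0.9 * one_hot + 0.01
pred = np.argmax(probs, axis=1)  # index of the largest probability per row

# Submission frame with a 1-based ImageId sized from the predictions, not hard-coded.
submission = pd.DataFrame({"ImageId": np.arange(1, len(pred) + 1), "Label": pred})
print(submission)
```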

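This post describes fixing a TensorFlow import error on Windows 10 with Anaconda, covering the move from Python 2.7 to Python 3.5 and installing the CPU build of TensorFlow with conda. It also works through the digit-recognizer problem with kNN, decision tree, random forest, and SVM classifiers, and shows a first CNN model with its training results; the large dataset makes training slow.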

